Federated: topic collection

Federated learning paper reading: Federated Learning with Non-IID Data (2018)

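Introduction: This paper, posted on arXiv in 2018, addresses non-IID data in FL. The authors find that on highly non-IID datasets, FedAvg's accuracy drops by up to 55%. They explain this drop through weight divergence, which they quantify with the earth mover's distance (EMD) between each client's class distribution and the overall population distribution. As for what EMD is,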
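
To make the EMD measure concrete: for class-label distributions, the quantity the paper uses is, as we understand its definition, the sum over classes of the absolute gap between a client's class probability and the population's. The sketch below assumes each distribution is given as per-class sample counts over the same set of classes; `label_emd` is a hypothetical helper name.

```python
from typing import List, Sequence


def normalize(counts: Sequence[float]) -> List[float]:
    """Turn per-class sample counts into a probability distribution."""
    total = float(sum(counts))
    if total <= 0:
        raise ValueError("counts must sum to a positive number")
    return [c / total for c in counts]


def label_emd(client_counts: Sequence[float], population_counts: Sequence[float]) -> float:
    """Sum over classes of |p_client(y=i) - p_population(y=i)|."""
    if len(client_counts) != len(population_counts):
        raise ValueError("both distributions must cover the same classes")
    p_client = normalize(client_counts)
    p_pop = normalize(population_counts)
    return sum(abs(a - b) for a, b in zip(p_client, p_pop))


if __name__ == "__main__":
    balanced = [100] * 10                # population: 10 equally frequent classes
    iid_client = [10] * 10               # same class mix as the population
    one_class_client = [100] + [0] * 9   # extreme non-IID: a single class
    print(label_emd(iid_client, balanced))
    print(label_emd(one_class_client, balanced))
```

An IID client scores 0, while a client holding a single class out of ten scores about 1.8, close to the maximum of 2. That is the highly skewed regime where the paper reports the largest FedAvg accuracy loss.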

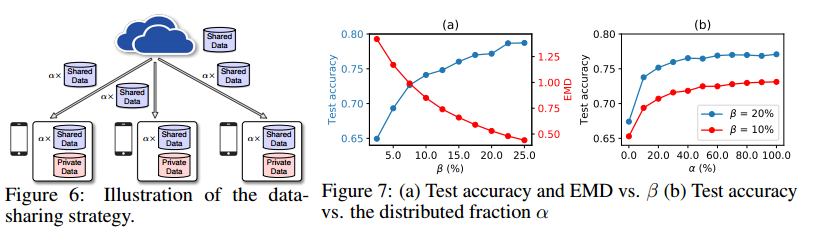

Personalized Subgraph Federated Learning, FED-PUB, ICML 2023

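Personalized subgraph federated learning. Paper: Personalized Subgraph Federated Learning; code. Abstract: Subgraphs of a larger global graph may be distributed across multiple devices and, due to privacy restrictions, be accessible only locally, even though links may exist between the subgraphs. Recently proposed subgraph federated learning (FL) methods deal with the missing links across local subgraphs while distributively training graph neural networks (GNNs) on them. However, they overlook the … between subgraphs made up of different communities of the global graph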
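
The missing-link problem in the abstract is easy to see on a toy graph: once nodes are assigned to clients, every edge whose endpoints land on different clients is invisible to both of them. The sketch below uses a hypothetical `split_edges` helper and a made-up six-node graph. It only illustrates that partitioning step and is not FED-PUB's method.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterable, List, Tuple

Edge = Tuple[Hashable, Hashable]


def split_edges(edges: Iterable[Edge], owner: Dict[Hashable, str]):
    """Partition a global edge list among clients.

    owner maps each node to the client that holds it. Returns
    (local_edges, missing): local_edges[c] lists edges whose endpoints
    both live on client c; missing lists cross-client edges that no
    single client can see.
    """
    local_edges: Dict[str, List[Edge]] = defaultdict(list)
    missing: List[Edge] = []
    for u, v in edges:
        if owner[u] == owner[v]:
            local_edges[owner[u]].append((u, v))
        else:
            missing.append((u, v))
    return dict(local_edges), missing


if __name__ == "__main__":
    edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5), (1, 4)]
    owner = {0: "A", 1: "A", 2: "A", 3: "B", 4: "B", 5: "B"}
    local, missing = split_edges(edges, owner)
    print(local)    # {'A': [(0, 1), (1, 2)], 'B': [(3, 4), (4, 5)]}
    print(missing)  # [(2, 3), (1, 4)]
```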

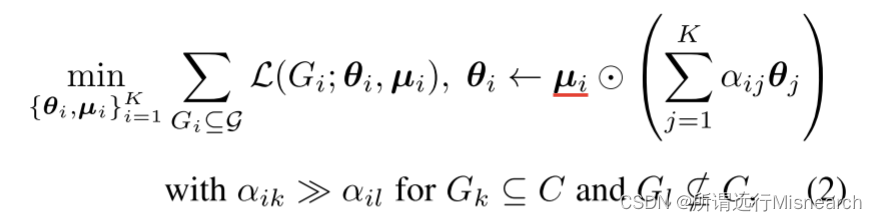

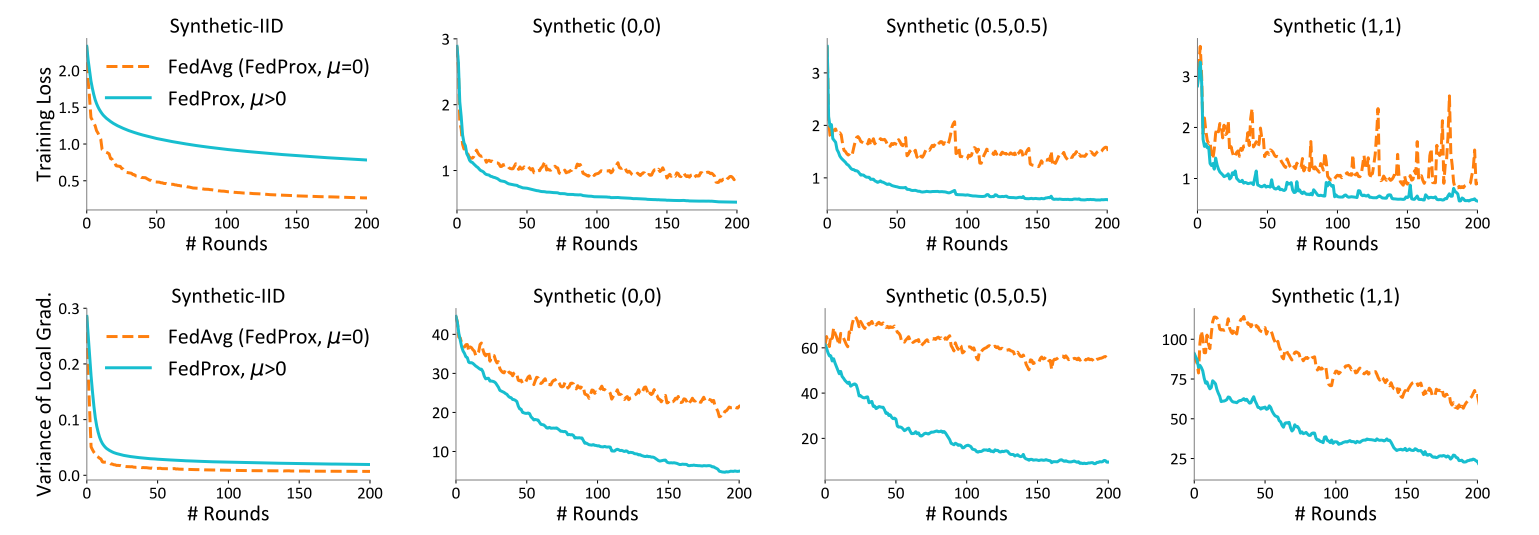

FedProx explained: an improved FedAvg (Federated Optimization in Heterogeneous Networks)

FedProx (2020), an improvement on FedAvg. Paper: Federated Optimization in Heterogeneous Networks. Citations: 4445. Source code: official implementation (TensorFlow): https://github.com/litian96/FedProx; several PyTorch implementations: https://github.com/ki-ljl/FedProx-PyTorch, …
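
FedProx's core change to FedAvg is that each client minimizes its local loss plus a proximal term, h_k(w) = F_k(w) + (μ/2)·||w − w^t||², which keeps local updates close to the current global model w^t. The sketch below runs plain gradient descent on that objective for a one-dimensional toy loss; the helper name, learning rate, and step count are illustrative assumptions, not values from the paper or its code.

```python
from typing import Callable, List

Vector = List[float]


def fedprox_local_update(
    w_global: Vector,
    grad_fn: Callable[[Vector], Vector],
    mu: float,
    lr: float,
    steps: int,
) -> Vector:
    """Gradient descent on F_k(w) + (mu / 2) * ||w - w_global||^2.

    grad_fn returns the gradient of the client's local loss F_k at w.
    The proximal term adds mu * (w - w_global) to that gradient, pulling
    the local model back toward the current global model.
    """
    w = list(w_global)
    for _ in range(steps):
        g = grad_fn(w)
        w = [wi - lr * (gi + mu * (wi - wg)) for wi, gi, wg in zip(w, g, w_global)]
    return w


def toy_grad(w: Vector) -> Vector:
    """Gradient of the toy local loss F_k(w) = 0.5 * (w - 3)^2."""
    return [w[0] - 3.0]


if __name__ == "__main__":
    for mu in (0.0, 1.0, 10.0):
        w = fedprox_local_update([0.0], toy_grad, mu=mu, lr=0.05, steps=2000)
        # The minimizer of the proximal objective is 3 / (1 + mu).
        print(mu, round(w[0], 4))
```

With μ = 0 this is ordinary local training (FedAvg's client step) and the client drifts all the way to its own optimum at 3. Larger μ keeps the result closer to the global starting point 0.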

Federated Learning: related material

Federated Learning: The Future of Distributed Machine Learning https://medium.com/syncedreview/federated-learning-the-future-of-distributed-machine-learning-eec95242d897 This article explains what Federated Learning is

MySQL remote connections (the FEDERATED storage engine)

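The FEDERATED storage engine gives access to tables in a remote database. In day-to-day development you can use it to reach things like parameter tables in a remote database, which is very convenient. To use it, you create a FEDERATED table locally that links to the remote table; once it is configured, the local database reads and writes the remote table directly, so the two are always in sync. The local table does not actually store any data: all the data lives on the remote server. Enabling the FEDERATED storage engine: first check whether the FEDERATED storage engine is enabled: SHO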
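
The local FEDERATED table is ordinary CREATE TABLE DDL with ENGINE=FEDERATED plus a CONNECTION string of the form mysql://user[:password]@host[:port]/db/table. Its columns must match the remote table's. The sketch below just assembles that DDL as a string. The helper names, host, credentials, and column list are all hypothetical, and no SQL escaping is done, so it is only for trusted, hand-written values.

```python
from typing import Optional


def federated_connection(user: str, host: str, db: str, table: str,
                         password: Optional[str] = None,
                         port: Optional[int] = None) -> str:
    """Build a CONNECTION string: mysql://user[:password]@host[:port]/db/table."""
    auth = user if password is None else f"{user}:{password}"
    location = host if port is None else f"{host}:{port}"
    return f"mysql://{auth}@{location}/{db}/{table}"


def create_federated_table(local_name: str, columns_sql: str, connection: str) -> str:
    """Return CREATE TABLE DDL for a local FEDERATED table pointing at a remote table.

    columns_sql must match the remote table's column definitions. Nothing is
    quoted or escaped here, so pass only trusted values.
    """
    return (
        f"CREATE TABLE {local_name} (\n"
        f"  {columns_sql}\n"
        ")\n"
        "ENGINE=FEDERATED\n"
        f"CONNECTION='{connection}';"
    )


if __name__ == "__main__":
    conn = federated_connection("fed_user", "10.0.0.5", "config_db", "param_table",
                                password="secret", port=3306)
    print(create_federated_table(
        "param_table_remote",
        "id INT NOT NULL, name VARCHAR(64), value VARCHAR(255), PRIMARY KEY (id)",
        conn,
    ))
```

Putting the password inside CONNECTION leaves it visible in the table definition. MySQL also lets CONNECTION name a server defined with CREATE SERVER, which keeps credentials out of each table's DDL.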

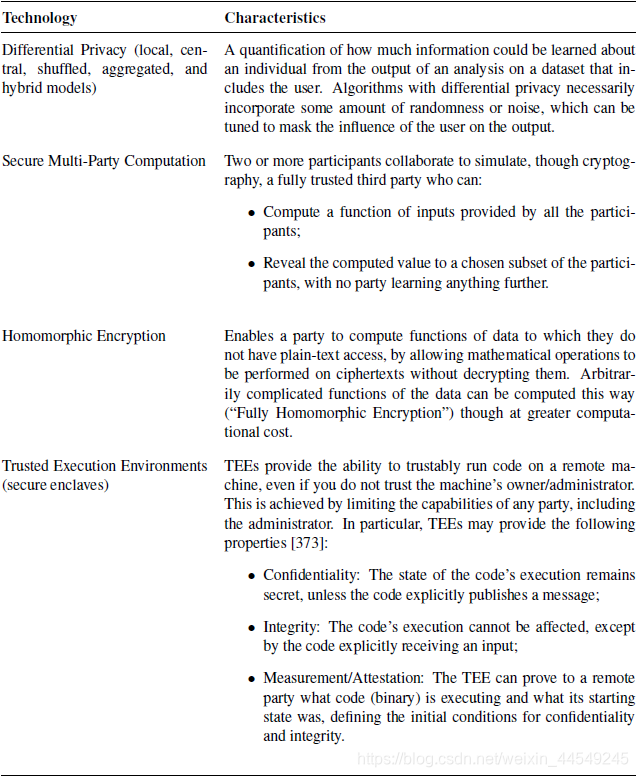

POSEIDON: Privacy-Preserving Federated Neural Network Learning

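A note up front: the techniques in this paper lean heavily on cryptography, so some of the core parts were hard going for me. Offered for reference only. Abstract: In this paper, we address the problem of privacy-preserving training and evaluation of neural networks in an N-party, federated learning setting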

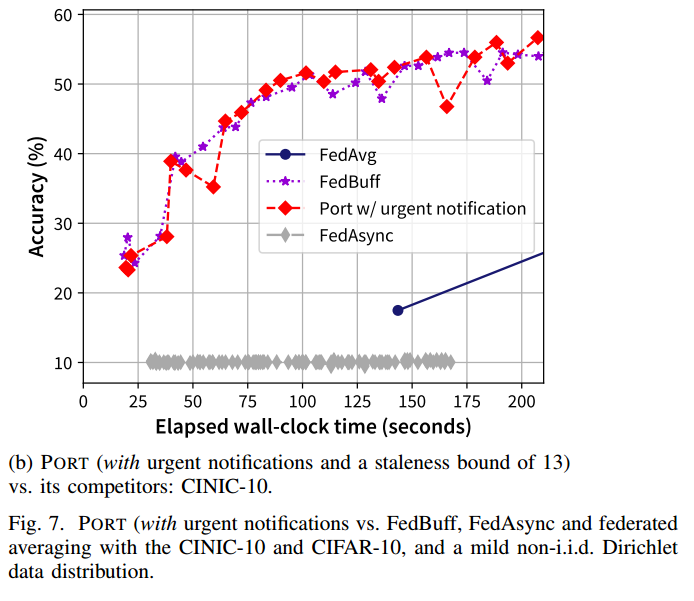

【Paper notes | Asynchronous FL】PORT: How Asynchronous Can Federated Learning Be?

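1. Paper information: How Asynchronous Can Federated Learning Be? 2022 IEEE/ACM 30th International Symposium on Quality of Service (IWQoS). IEEE, 2022. Not a CCF-recognized venue. 2. Introduction. 2.1. Background: the heuristics designed in the existing asynchronous FL literature each reflect only part of the design space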

【Paper notes | Asynchronous FL】Asynchronous Federated Optimization

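Paper information: Asynchronous Federated Optimization, OPT2020: 12th Annual Workshop on Optimization for Machine Learning. Not CCF-A. Introduction. Background: federated learning has three key properties. Infrequent task activation (the conditions are hard to meet): for weak edge devices, learning tasks run only when the device is idle, charging, and connected to an unmetered network. Communication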

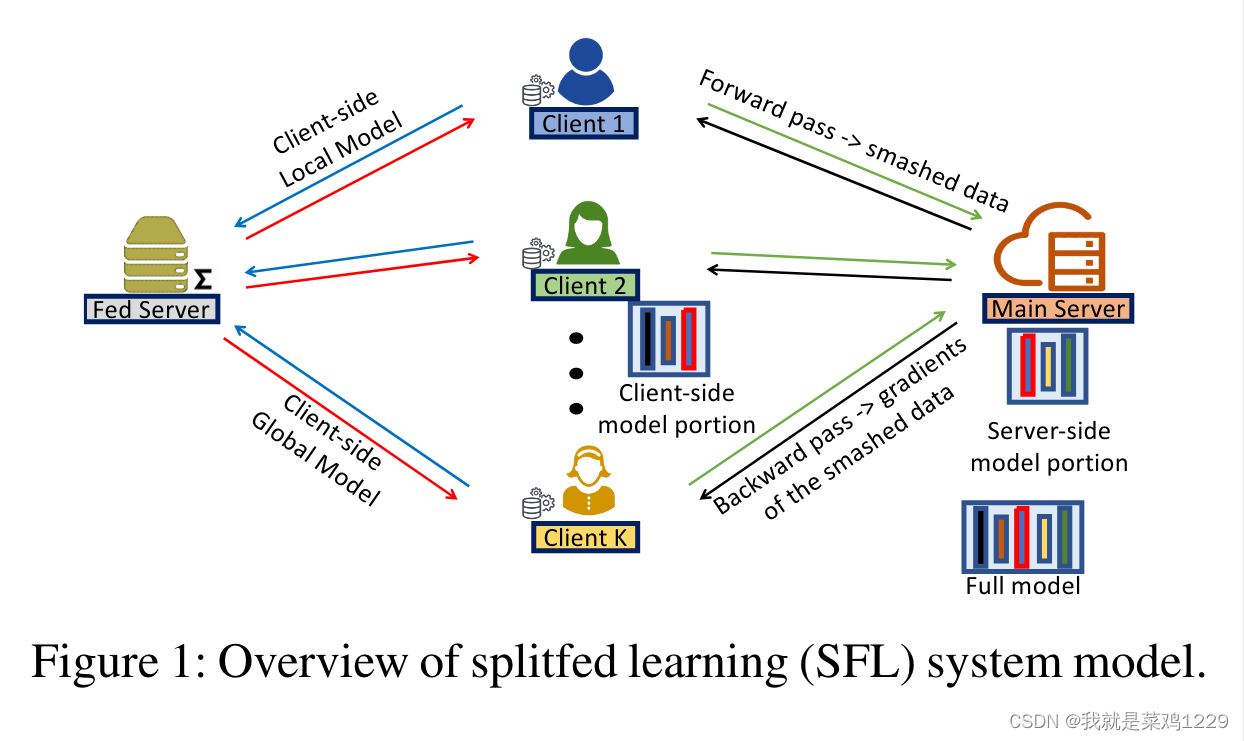

【Paper reading: SplitFed: When Federated Learning Meets Split Learning】

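Rank: CCF-A. 1. Abstract: Federated learning (FL) and split learning (SL) are two popular distributed machine learning approaches. Both follow a model-to-data scenario: clients train and test machine learning models without sharing raw data. Because the machine learning model architecture is split between the clients and the server, SL provides better model privacy than FL. Moreover, splitting the model makes SL a better option for resource-constrained environments. However, SL is slower than FL because of its relay-based training across multiple clients. 2. Contributions: This paper proposes a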
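
The model split the abstract describes can be shown in a few lines. The client runs the layers up to a cut layer and sends only the resulting activations (often called "smashed data") to the server, which finishes the forward pass. The result matches running the whole model in one place. This is a minimal sketch assuming a two-layer network cut after its first layer. The weights and helper names are hypothetical, and backpropagation, the relay across clients, and SplitFed's parallel client training are all left out.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Dense layer without bias: one dot product per output unit."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def relu(v: Vector) -> Vector:
    return [max(0.0, vi) for vi in v]


def client_forward(w1: Matrix, x: Vector) -> Vector:
    """Client side: layers up to the cut layer. Only this output leaves the device."""
    return relu(matvec(w1, x))


def server_forward(w2: Matrix, smashed: Vector) -> Vector:
    """Server side: the remaining layers, applied to the activations it received."""
    return matvec(w2, smashed)


def full_forward(w1: Matrix, w2: Matrix, x: Vector) -> Vector:
    """Reference: the same network evaluated in one place."""
    return matvec(w2, relu(matvec(w1, x)))


if __name__ == "__main__":
    w1 = [[1.0, -1.0], [0.5, 0.5]]   # client-side weights (hypothetical)
    w2 = [[2.0, 1.0]]                # server-side weights (hypothetical)
    x = [2.0, 1.0]                   # raw input, never sent to the server
    smashed = client_forward(w1, x)
    print(smashed, server_forward(w2, smashed), full_forward(w1, w2, x))
```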

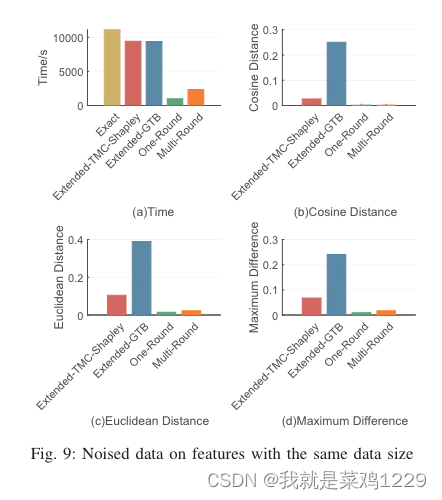

【Paper reading: Profit Allocation for Federated Learning】

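1. Abstract: Because of stricter data management regulations such as the General Data Protection Regulation (GDPR), the traditional way of producing machine learning services is shifting toward the federated learning paradigm. Federated learning lets multiple data providers collaboratively train a shared model while keeping their data local. The key to putting federated learning into practice is allocating the profit generated by the joint model fairly among the data providers. Fair allocation requires a metric that measures each data provider's contribution to the joint model. The Shapley value is a classic concept from cooperative game theory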
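
For intuition about the Shapley value mentioned above: a provider's share is its marginal contribution to the model's utility, averaged over every coalition of the other providers with the standard Shapley weights. The sketch below is the textbook exact computation, which is exponential in the number of providers. It is not the paper's own (presumably approximate) contribution index. The utility table of per-coalition model accuracies is made up.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet, Sequence

Utility = Callable[[FrozenSet[str]], float]


def shapley_values(players: Sequence[str], utility: Utility) -> Dict[str, float]:
    """Exact Shapley values by enumerating every coalition of the other players."""
    n = len(players)
    values: Dict[str, float] = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for size in range(n):
            # Weight |S|! (n - |S| - 1)! / n! for each coalition S not containing p.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                s = frozenset(coalition)
                total += weight * (utility(s | {p}) - utility(s))
        values[p] = total
    return values


if __name__ == "__main__":
    # Hypothetical accuracy of the joint model trained on each coalition's data.
    accuracy = {
        frozenset(): 0.0,
        frozenset({"A"}): 0.6,
        frozenset({"B"}): 0.6,
        frozenset({"A", "B"}): 0.8,
    }
    print(shapley_values(["A", "B"], lambda s: accuracy[s]))
```

The shares always add up to the utility of the full coalition (0.8 here). This efficiency property is what makes the Shapley value attractive for splitting the joint model's profit.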

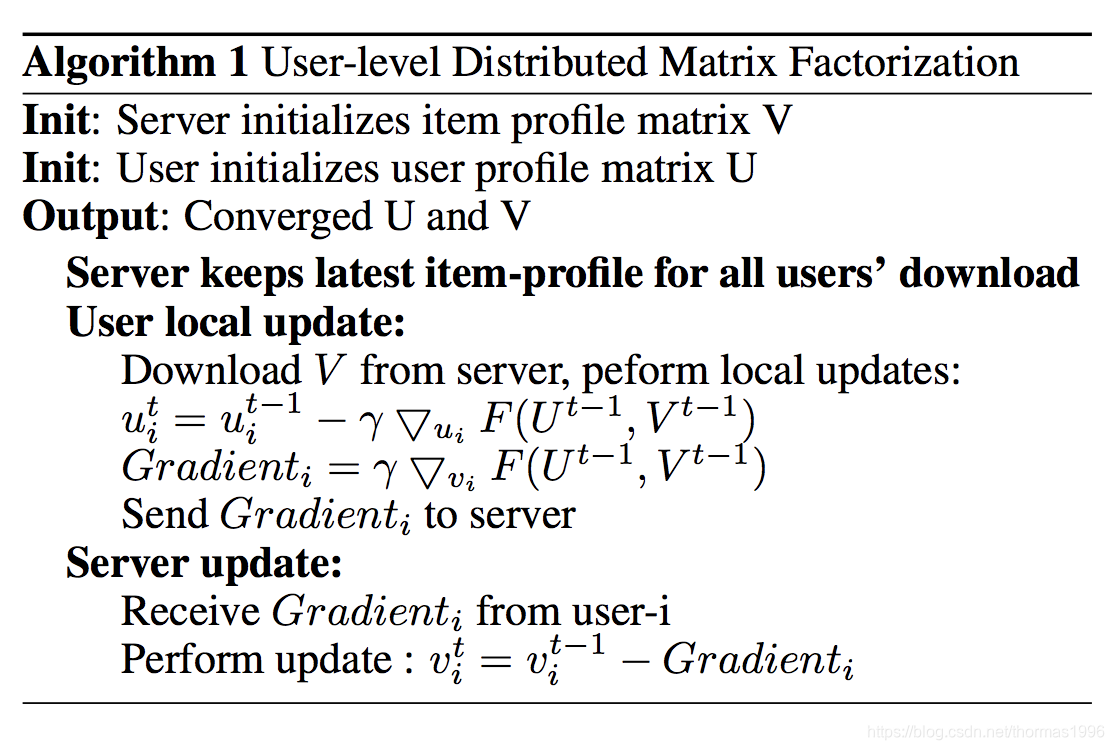

Federated learning paper reading: Secure Federated Matrix Factorization

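This paper was just posted on arXiv in June; it is work by Prof. Yang's students. Link, code. Abstract: The paper proposes a federated matrix factorization algorithm. The authors find that even uploading gradients leaks information, so they use homomorphic encryption to further protect the privacy of user data. Framework: The basic framework is the same as in the federated collaborative filtering paper: a standard horizontal federated setup in which user vectors stay local for training, only encrypted gradient updates are uploaded, and the server aggregates them, then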
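
The aggregation step relies on additive homomorphism. The server combines ciphertexts so that the result decrypts to the sum of the plaintexts, without ever seeing an individual user's gradient. The sketch below assumes a textbook Paillier scheme. We believe the paper uses Paillier, but treat that as an assumption. The tiny hard-coded primes, the fixed-point scale, and the sample gradients are our own choices. It is a toy for intuition and provides no real security.

```python
import math
import random

# Toy Paillier keys from tiny primes: insecure, for illustration only.
P, Q = 1009, 1013
N = P * Q
N2 = N * N
G = N + 1
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)   # lcm(p - 1, q - 1)
MU = pow((pow(G, LAM, N2) - 1) // N, -1, N)          # inverse of L(g^lambda mod n^2)
SCALE = 1000  # fixed-point scale for encoding real-valued gradients


def encrypt(m: int, rng: random.Random) -> int:
    """c = g^m * r^n mod n^2 with a random r coprime to n."""
    while True:
        r = rng.randrange(1, N)
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m % N, N2) * pow(r, N, N2)) % N2


def decrypt(c: int) -> int:
    m = ((pow(c, LAM, N2) - 1) // N * MU) % N
    return m - N if m > N // 2 else m   # map back to a signed integer


def add_ciphertexts(c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts."""
    return (c1 * c2) % N2


def encrypt_gradient(grad, rng):
    """User side: fixed-point encode, then encrypt each gradient entry."""
    return [encrypt(round(g * SCALE), rng) for g in grad]


def aggregate(encrypted_grads):
    """Server side: element-wise homomorphic sum, never decrypting."""
    total = encrypted_grads[0]
    for eg in encrypted_grads[1:]:
        total = [add_ciphertexts(a, b) for a, b in zip(total, eg)]
    return total


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical item-vector gradients from three users.
    grads = [[0.25, -0.10], [-0.05, 0.30], [0.10, 0.05]]
    summed = aggregate([encrypt_gradient(g, rng) for g in grads])
    # Only the key holder decrypts the aggregate.
    print([decrypt(c) / SCALE for c in summed])
```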

Federated learning paper reading: Asynchronous Federated Optimization

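This is a paper from UIUC that was just posted on arXiv: Asynchronous Federated Optimization. I'm not very familiar with edge computing or asynchronous algorithms. My intuitive understanding is this: as a user, the gradient parameters I upload are stale. The central server has already moved past the round my update was computed for and has started computing the next global gradient, or even the one after that. My parameters therefore end up mixed into other users' later updates.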
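
One way to handle such stale uploads, and as we understand it the approach FedAsync takes, is to mix each arriving client model into the global model with a weight that shrinks with staleness: x_t = (1 − α_t)·x_{t−1} + α_t·x_new, where α_t = α·s(t − τ), τ is the global version the client started from, and s is, for example, the polynomial function (t − τ + 1)^(−a). The default α and a below are illustrative assumptions, not tuned values from the paper.

```python
from typing import List

Vector = List[float]


def polynomial_staleness(staleness: int, a: float = 0.5) -> float:
    """s(t - tau) = (t - tau + 1) ** (-a): fresh updates weigh 1, stale ones less."""
    if staleness < 0:
        raise ValueError("staleness cannot be negative")
    return (staleness + 1) ** (-a)


def async_mix(global_model: Vector, client_model: Vector, t: int, tau: int,
              alpha: float = 0.6, a: float = 0.5) -> Vector:
    """Server update when a model trained from global version tau arrives at time t.

    x_t = (1 - alpha_t) * x_{t-1} + alpha_t * x_new, alpha_t = alpha * s(t - tau).
    """
    alpha_t = alpha * polynomial_staleness(t - tau, a)
    return [(1 - alpha_t) * g + alpha_t * c for g, c in zip(global_model, client_model)]


if __name__ == "__main__":
    fresh = async_mix([0.0], [1.0], t=5, tau=5)   # trained on the latest model
    stale = async_mix([0.0], [1.0], t=8, tau=5)   # three versions behind
    print(fresh, stale)
```

The stale update still counts, but at half the weight of a fresh one here. That matches the intuition above that a late user's parameters get blended into a model that has already moved on.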

FedDefender: Client-Side Attack-Tolerant Federated Learning

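Unlike most existing methods, FedDefender is a defense mechanism at the client level. It stacks quite a few techniques. Most methods defend at step ④, for example by designing robust aggregation strategies, whereas this paper updates the model by changing the local training strategy. The paper rests on two ideas: local models should not easily overfit to noise, and knowledge distillation transfers correct global information. Step 1. Local meta-update: we train benign local models robustly via meta-learning. The goal is to find model parameters that, even after being perturbed by noisy information,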
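
The second idea relies on knowledge distillation. The sketch below shows the generic Hinton-style distillation loss: the KL divergence between temperature-softened teacher and student outputs, scaled by T². It assumes the global model's logits act as the teacher and the local model's as the student. The temperature value and helper names are hypothetical, and FedDefender's exact loss may differ.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    scaled = [z / temperature for z in logits]
    m = max(scaled)                        # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = sum(pt * (math.log(pt) - math.log(ps)) for pt, ps in zip(p_t, p_s) if pt > 0)
    return (temperature ** 2) * kl


if __name__ == "__main__":
    global_logits = [1.0, 2.0, 3.0]   # teacher: the global model (hypothetical)
    print(distillation_loss([1.0, 2.0, 3.0], global_logits))   # local agrees
    print(distillation_loss([3.0, 2.0, 1.0], global_logits))   # local disagrees
```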

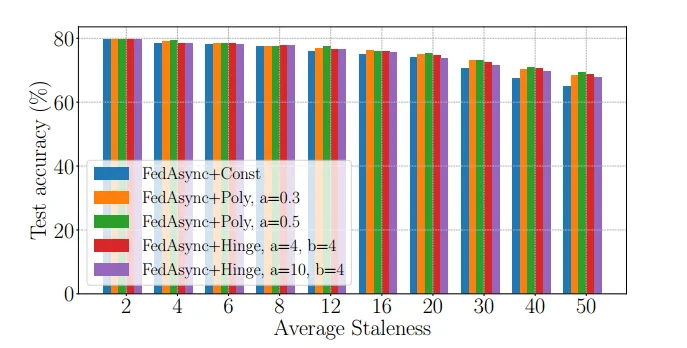

FedAsync: Asynchronous Federated Optimization

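Contents: Introduction, Methodology, Convergence analysis, Experiments. Introduction: Federated learning has three key properties. Infrequent task activation: for weak edge devices, learning tasks run only when the device is idle, charging, and connected to an unmetered network. Infrequent communication: the connection between edge devices and the remote server may often be unavailable, slow, or expensive (in communication cost or battery usage). Non-IID training data: for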

Eliminating Domain Bias for Federated Learning in Representation Space【well written; a good writing reference】

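Paper and author information: NeurIPS 2023, Jianqing Zhang, Shanghai Jiao Tong University. My earlier-accepted NeurIPS'23 paper was posted on arXiv just today. This time I view each round of federated learning as a bi-directional knowledge transfer process and propose a method that promotes bi-directional knowledge transfer between the server and the clients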

Federated Learning study notes

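Contents: Preface; 1. FedAvg and distributed SGD in federated learning; 2. SGD in federated learning; 3. Gradient compression and error compensation; 4. The two new algorithms proposed in the paper; Summary. Preface: This content reflects only my own views and is recorded for later review; apologies for any errors. 1. FedAvg and distributed SGD. Reference: Federated Learning of Deep Networks using Model Averaging. In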
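
The model-averaging step at the heart of FedAvg is a weighted mean: the server sets the new global model to Σ_k (n_k / n)·w_k, where n_k is client k's number of local training samples and n is their total. A minimal sketch with plain Python lists standing in for model parameters; the helper name and example numbers are hypothetical.

```python
from typing import List, Sequence

Vector = List[float]


def fedavg(client_weights: Sequence[Vector], num_samples: Sequence[int]) -> Vector:
    """Average client models, weighting each by its number of local training samples."""
    if not client_weights or len(client_weights) != len(num_samples):
        raise ValueError("need one sample count per client model")
    total = float(sum(num_samples))
    dim = len(client_weights[0])
    avg = [0.0] * dim
    for w, n in zip(client_weights, num_samples):
        for i in range(dim):
            avg[i] += (n / total) * w[i]
    return avg


if __name__ == "__main__":
    # Two clients after local training; the first holds three times as much data.
    print(fedavg([[1.0, 0.0], [0.0, 1.0]], [300, 100]))
```

Distributed SGD averages gradients after every step. FedAvg instead lets each client take several local steps before averaging, which is why it needs far fewer communication rounds.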

READ-2360 Securing Federated Learning against Overwhelming Collusive Attackers

Paper: Securing Federated Learning against Overwhelming Collusive Attackers. Authors: Priyesh Ranjan; Ashish Gupta; Federico Coro; Sajal K. Das. Source: IEEE GLOBECOM 2022. Field: Machine Learning - Federated Learning - Security

SplitFed: When Federated Learning Meets Split Learning

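Paper link: [2004.12088v1] SplitFed: When Federated Learning Meets Split Learning (arxiv.org). AAAI 2022. Abstract: federated learning + split learning, combined to eliminate the inherent drawbacks of each, together with a refined architectural configuration that incorporates differential privacy and PixelDP to strengthen data privacy and model robustness. The paper proposes fusing federated learning and split learning, with differential-privacy-based measures and Pix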

Federated Unlearning for On-Device Recommendation

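WSDM 2023 (CCF-B). Federated Unlearning for On-Device Recommendation. Overview of this work: The paper presents FRU (Federated Recommendation Unlearning), a federated learning framework for efficiently erasing user data and reconstructing the model in on-device recommender systems. By storing users' historical updates and calibrating them, FRU achieves efficient erasure of user data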
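
To make "storing users' historical updates" concrete, here is the naive baseline it enables. Keep every round's per-client update, and when a user asks to be forgotten, replay the history while skipping that user's contributions. The helper name, the plain-average aggregation, and the history format are assumptions, and FRU's calibration step is deliberately omitted. Replayed updates were computed on models that the forgotten user had already influenced, and correcting for that is presumably what the calibration is for.

```python
from typing import Dict, List

Vector = List[float]


def rebuild_without(initial: Vector, history: List[Dict[str, Vector]], forget: str) -> Vector:
    """Replay stored per-round client updates, skipping one user's contributions.

    history[r] maps client id -> that client's update (a model delta) in round r.
    Each round applies the plain average of the remaining clients' updates.
    No calibration is applied, so this is only a naive baseline.
    """
    model = list(initial)
    for round_updates in history:
        kept = [u for cid, u in round_updates.items() if cid != forget]
        if not kept:
            continue
        for i in range(len(model)):
            model[i] += sum(u[i] for u in kept) / len(kept)
    return model


if __name__ == "__main__":
    history = [{"a": [1.0], "b": [3.0]}, {"a": [1.0], "b": [1.0]}]
    print(rebuild_without([0.0], history, forget="nobody"))  # original trajectory
    print(rebuild_without([0.0], history, forget="b"))       # user "b" erased
```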

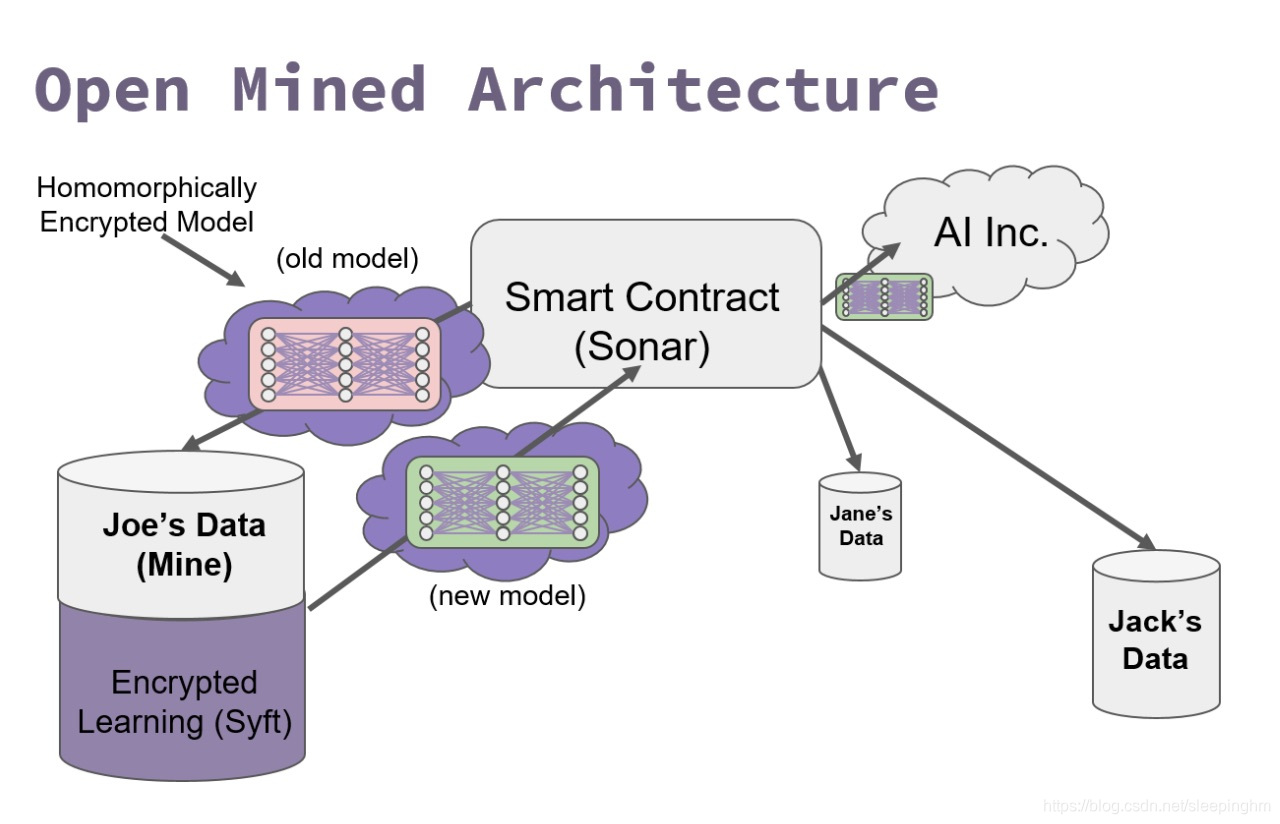

Federated Machine Learning: Concept and Applications

https://www.cnblogs.com/lucifer1997/p/11223964.html, https://blog.csdn.net/qq_36375505/article/details/88554005, https://blog.csdn.net/weixin_44774630/article/details/97529260?utm_source=app. Key techniques: differential privacy D

Differentially Private Federated Learning: A Client Level Perspective

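Introduction: Our goal is not merely to protect individual data. Rather, we want to ensure that a learned model does not reveal whether a client participated in decentralized training. This means a client's entire dataset is protected against differential attacks from other clients. Our main contributions: First, we show that a client's participation can be hidden while maintaining high model performance in federated learning. We demonstrate that our proposed algorithm achieves client-level differential privacy with only a minor loss in model performance. An independent study [6] published at the same time proposed a similar client-level DP procedure. However,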
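
Client-level DP protects a client's whole contribution, so the noise is calibrated to a whole client update rather than to single examples. A common recipe, and the shape of the procedure described here as we understand it, is to clip each client's full update to an L2 bound S, sum the clipped updates, add Gaussian noise with standard deviation σ·S, and divide by the number of clients. The sketch below shows only that aggregation step. How S is chosen, client subsampling, and privacy accounting are omitted, and the names and parameters are hypothetical.

```python
import math
import random
from typing import List, Sequence

Vector = List[float]


def clip(update: Vector, bound: float) -> Vector:
    """Scale an update down so its L2 norm is at most `bound`."""
    norm = math.sqrt(sum(x * x for x in update))
    factor = max(1.0, norm / bound)
    return [x / factor for x in update]


def dp_aggregate(updates: Sequence[Vector], bound: float, sigma: float,
                 rng: random.Random) -> Vector:
    """Clip each client's whole update, sum, add N(0, (sigma * bound)^2) noise, average."""
    clipped = [clip(u, bound) for u in updates]
    dim = len(clipped[0])
    summed = [sum(u[i] for u in clipped) for i in range(dim)]
    noisy = [s + rng.gauss(0.0, sigma * bound) for s in summed]
    return [v / len(updates) for v in noisy]


if __name__ == "__main__":
    rng = random.Random(0)
    updates = [[3.0, 4.0], [0.1, 0.0], [-0.2, 0.3]]   # hypothetical client updates
    print(dp_aggregate(updates, bound=1.0, sigma=1.0, rng=rng))
```

Clipping bounds how much any one client can move the sum, which is what lets Gaussian noise of a fixed scale hide whether that client took part at all.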

01 An introduction to federated learning: Why and How Federated Learning

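What is federated learning: Federated learning makes it possible to build machine learning systems without direct access to the training data. The data stays where it originated, which helps ensure privacy and reduces communication costs. Federated learning: the model is sent down to the user side for training, with no need to upload user data for centralized training. Traditional machine learning: user data is uploaded and centralized, and then the model is trained. Why we need federated learning: Privacy (I don't want to share my own photos): with federated learning you don't have to share your data. Regulatory requirements (HIPAA, G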

【An interpretation of Adaptive Federated Dropout】

An interpretation of Adaptive Federated Dropout. Contents: Preface; 1. What is AFD? 2. Main techniques used by AFD: 1. Activations Score Map; (1) Multi-Model Adaptive Federated Dropout; (2) Single-Model Adaptive Federated
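
The excerpt names an activation score map but does not explain how it is used. As a rough sketch only, assume each hidden unit of a layer carries a score, and the server builds a client's smaller sub-model by keeping the highest-scoring fraction of units and dropping the rest of that layer's rows. The keep ratio, the ranking rule, and the helper names are our assumptions, not AFD's published procedure.

```python
import math
from typing import List


def select_units(scores: List[float], keep_ratio: float) -> List[int]:
    """Indices of the highest-scoring units, keeping ceil(keep_ratio * n) of them."""
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    k = max(1, math.ceil(keep_ratio * len(scores)))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:k])   # keep the original unit order


def sub_model(weights: List[List[float]], kept: List[int]) -> List[List[float]]:
    """Keep only the rows (output units) of a layer's weight matrix that survive dropout."""
    return [weights[i] for i in kept]


if __name__ == "__main__":
    scores = [0.1, 0.9, 0.5, 0.7]                    # hypothetical per-unit scores
    layer = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0], [4.0, 4.0]]
    kept = select_units(scores, keep_ratio=0.5)
    print(kept, sub_model(layer, kept))
```

Sending a client only the kept rows shrinks both the download and the client's compute, which is the general motivation for federated dropout.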

Using MySQL FEDERATED to get functionality similar to Oracle's dblink

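1. Check whether FEDERATED is enabled with the command show engines;. I checked, and mine is already enabled. If it isn't, add federated under the [mysqld] section of the MySQL configuration file my.ini (on Windows), then restart the MySQL service. 2. Suppose database A is the main business database and database B serves other business needs; the goal is to map B's tables into A so that A can use them. Create the mapping to B inside A. B grants A read-only privileges (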
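
Step 1 comes down to reading the Support column that show engines; returns for the FEDERATED row. A small sketch, assuming you have already fetched the result rows as (Engine, Support) pairs with whatever MySQL client you use; `federated_enabled` and the sample rows are hypothetical. MySQL reports Support as YES, NO, DEFAULT, or DISABLED, and only YES or DEFAULT mean the engine is usable.

```python
from typing import Iterable, Tuple

# Each row mirrors the first two columns of SHOW ENGINES: (Engine, Support).
EngineRow = Tuple[str, str]


def federated_enabled(rows: Iterable[EngineRow]) -> bool:
    """True if SHOW ENGINES reports FEDERATED with Support YES or DEFAULT."""
    for engine, support in rows:
        if engine.upper() == "FEDERATED":
            return support.upper() in ("YES", "DEFAULT")
    return False   # engine not listed at all


if __name__ == "__main__":
    before = [("InnoDB", "DEFAULT"), ("FEDERATED", "NO"), ("MyISAM", "YES")]
    after = [("InnoDB", "DEFAULT"), ("FEDERATED", "YES"), ("MyISAM", "YES")]
    print(federated_enabled(before), federated_enabled(after))
```

If it prints False, that is the case where the federated option under [mysqld] plus a restart is needed.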

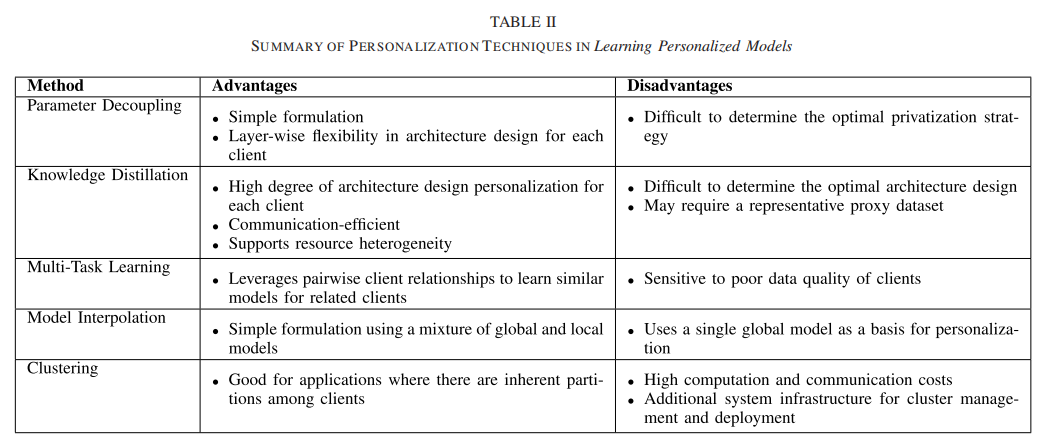

【Paper | Federated learning】Towards Personalized Federated Learning

Title: Towards Personalized Federated Learning. Published in: IEEE Transactions on Neural Networks and Learning Systems (Mar 28, 2022). Affiliations: NTU, Alibaba Group

【Paper notes】Advances and Open Problems in Federated Learning

Others' summaries: [link], [link]. I didn't understand the part on optimization algorithms. 4.1 Actors, Threat Models, and Privacy in Depth: various threat models for different adversarial actors (malicious / honest-but-curious): 4.2 Too