T5 Topics

NLP Text Summarization: Using Pretrained Models for Text Summarization [transformers: pipeline, T5, BART, Pegasus]

1. pipeline: `pipeline` can be used to implement text summarization quickly: `from transformers import pipeline`; `summarizer = pipeline(task="summarization", model='t5-small')`; `text = """summarize: (CNN)For the second time during his papacy, Pope Fr`…

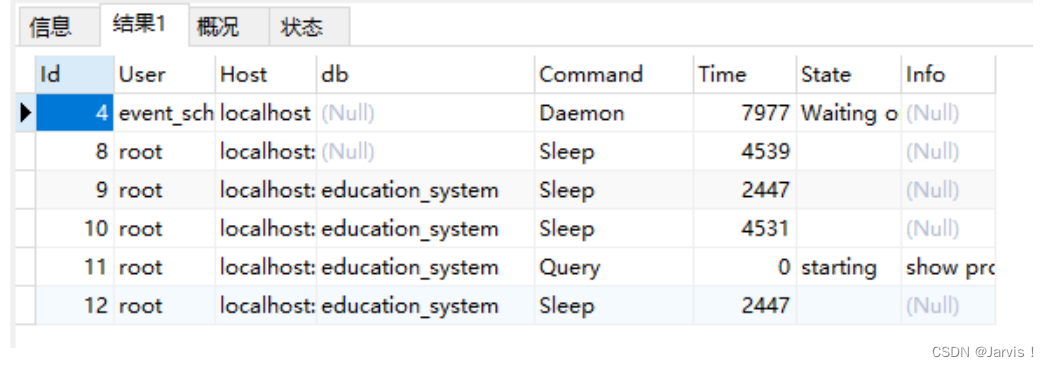

MindSpore (昇思) AI Framework Practice 2: Inference with a T5-Based SQL Generation Model

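MindSpore: Implementing a T5-Based SQL Generation Project. Project overview: this project aims to build a natural-language-to-SQL generator based on the T5-small model. The generator converts a query that a user poses in natural language into the corresponding SQL query, so that even users unfamiliar with SQL can easily retrieve the information they need from a database. The project uses the T5-small model from HuggingFace, pretrained on a large English corpus, and its model…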
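
Generated SQL is often checked by execution: run the predicted query and a reference query against the same database and compare the result sets, since two differently written queries can be equally correct. The sketch below uses Python's built-in `sqlite3` as a stand-in database; the `employees` table, the queries and the helper name `execution_match` are hypothetical illustrations, not part of the MindSpore project.

```python
import sqlite3
from collections import Counter


def execution_match(conn, predicted_sql, gold_sql):
    """Return True if both queries run and return the same multiset of rows.

    Row order is ignored, because queries without ORDER BY have no guaranteed order.
    A predicted query that fails to execute counts as a mismatch.
    """
    try:
        predicted_rows = conn.execute(predicted_sql).fetchall()
    except sqlite3.Error:
        return False
    gold_rows = conn.execute(gold_sql).fetchall()
    return Counter(predicted_rows) == Counter(gold_rows)


# Hypothetical toy schema standing in for the user's database
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, dept TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [("Alice", "Sales", 5000), ("Bob", "IT", 7000), ("Carol", "IT", 6500)],
)

gold = "SELECT name FROM employees WHERE dept = 'IT'"
# A model output for "Who works in IT?" that is written differently but is equivalent
predicted = "SELECT name FROM employees WHERE dept = 'IT' ORDER BY salary DESC"
print(execution_match(conn, predicted, gold))                     # True
print(execution_match(conn, "SELECT nme FROM employees", gold))   # False (invalid column)
```

Comparing multisets (via `Counter`) rather than lists keeps the check independent of row order while still catching duplicated or missing rows.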

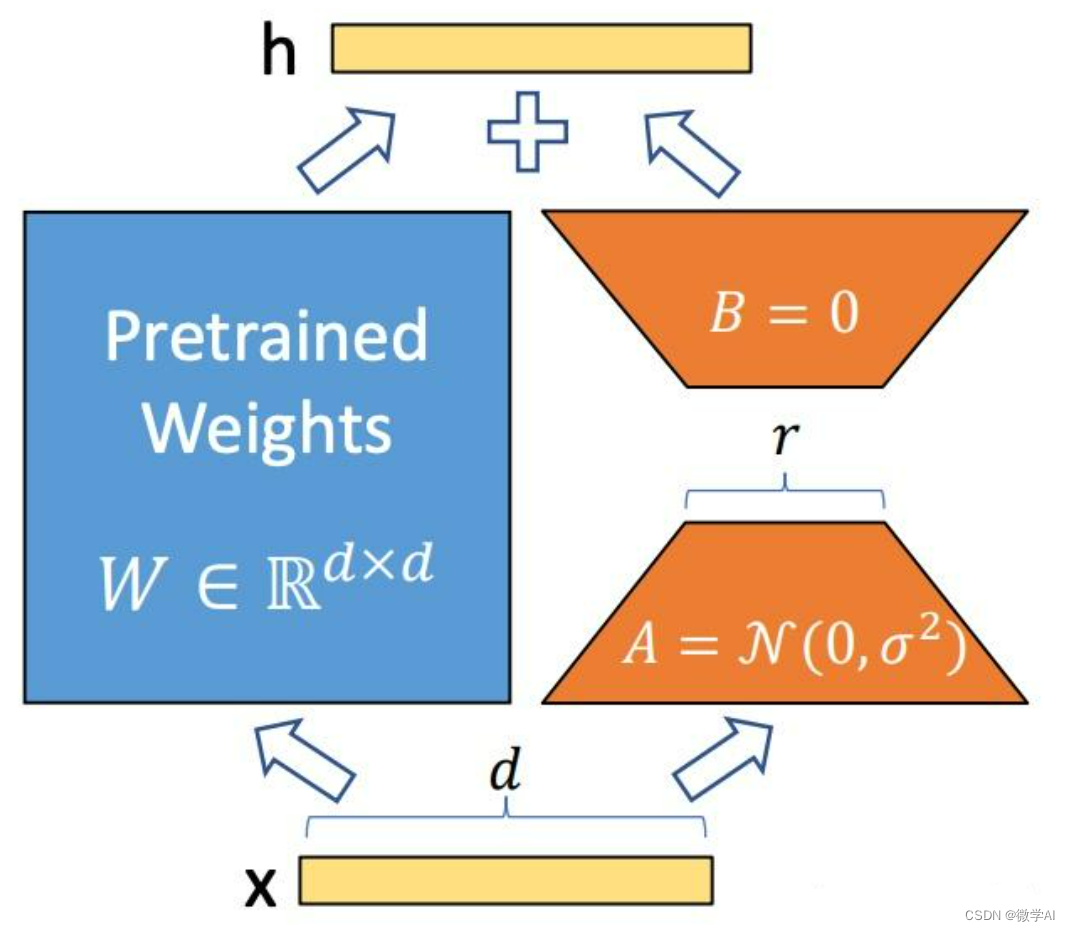

Transformer Fine-Tuning in Practice: Fine-Tuning a T5 Model with Low-Rank Adaptation (LoRA Fine Tune)

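scient: scient is a Python package implementing algorithms related to scientific computing, with modules for natural language, images, neural networks, optimization algorithms, machine learning, graph computing, and more. The source code and binary installers for the latest released version are available from the Python Package Index.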
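
LoRA keeps a pretrained weight matrix $W$ ($d_{out} \times d_{in}$) frozen and trains only a low-rank update $\Delta W = \frac{\alpha}{r} B A$, with $A$ of shape $r \times d_{in}$, $B$ of shape $d_{out} \times r$ and $r \ll \min(d_{in}, d_{out})$. The pure-Python sketch below is not the scient package's or PEFT's implementation; the matrix sizes, `alpha` and values are arbitrary assumptions. It shows that running the adapter path and merging the adapter into the weight give the same output.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def matvec(m, v):
    """Matrix (list of rows) times column vector."""
    return [sum(row[k] * v[k] for k in range(len(v))) for row in m]


def lora_forward(W, A, B, x, alpha):
    """h = W x + (alpha / r) * B (A x); W stays frozen, only A and B are trained."""
    r = len(A)  # rank = number of rows of A
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / r) * u for b, u in zip(base, update)]


def merge_lora(W, A, B, alpha):
    """Fold the adapter into the weight for inference: W' = W + (alpha / r) * B A."""
    r = len(A)
    delta = matmul(B, A)
    return [[w + (alpha / r) * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]


# d_out = 3, d_in = 4, rank r = 1 (values chosen arbitrarily for illustration)
W = [[1.0, 0.0, 0.0, 0.0],
     [0.0, 1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0, 0.0]]
A = [[0.5, -0.5, 1.0, 0.0]]   # r x d_in
B = [[1.0], [0.0], [2.0]]     # d_out x r
x = [1.0, 2.0, 3.0, 4.0]

print(lora_forward(W, A, B, x, alpha=2.0))        # [6.0, 2.0, 13.0]
print(matvec(merge_lora(W, A, B, alpha=2.0), x))  # [6.0, 2.0, 13.0]
```

In the LoRA paper $B$ is initialized to zero, so at the start of fine-tuning the adapted layer reproduces the pretrained layer exactly; merging afterwards removes the extra inference cost.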

Principles and Differences of Mainstream NLP Large Models such as GPT-3/ChatGPT/T5/PaLM/LLaMA/GLM: A Detailed Explanation

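Large language models in natural language processing (NLP), such as GPT-3, ChatGPT, T5, PaLM, LLaMA, and GLM, differ significantly in structure and function. Below is an in-depth analysis of their principles and differences: GPT-3 (Generative Pre-trained Transformer 3). Although GPT-4o is hugely popular right now and GPT-5 is about to be released, their foundation is GPT-3, so studying GPT-3 is a basis for understanding large models. Principles. Architecture: based on…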

Text Summarization with the FLAN-T5 Model

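"Text Summarization with FLAN-T5", ROCm Blogs (amd.com). In this blog, we show how to use HuggingFace to fine-tune the language model FLAN-T5 on an AMD GPU + ROCm system to perform text summarization. Introduction: FLAN-T5 is an open-source large language model released by Google, with enhancements over the earlier T5 model. It has already been pretrained on instruction datasets and is an encod…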
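
Summarization fine-tuning runs are commonly scored with ROUGE. Below is a simplified sketch of ROUGE-N (clipped n-gram overlap, reported as precision, recall and F1). It assumes lowercase whitespace tokenization and no stemming, so its numbers will not exactly match packages such as `rouge-score` or Hugging Face `evaluate`, and it is not necessarily the evaluation code used in the ROCm blog.

```python
from collections import Counter


def ngrams(tokens, n):
    """Count the n-grams of a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate, reference, n=1):
    """ROUGE-N precision, recall and F1 with clipped n-gram counts."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    overlap = sum((cand & ref).values())  # '&' keeps the minimum count of each n-gram
    if overlap == 0:
        return {"precision": 0.0, "recall": 0.0, "f1": 0.0}
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


reference = "the cat sat on the mat"
candidate = "the cat lay on the mat"
print(rouge_n(candidate, reference, n=1))  # 5 of 6 unigrams overlap
print(rouge_n(candidate, reference, n=2))  # 3 of 5 bigrams overlap
```

Clipping (the `&` on two `Counter`s) stops a summary from scoring extra credit by repeating a word more often than the reference does.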

MySQL Data Manipulation and Queries, T5: MySQL Functions

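1. Math functions and control-flow functions. 1) Math functions: (1) abs(x) computes the absolute value of x: `select abs(-5.5), abs(10)` (2) pow(x,y) computes x raised to the power y: `select pow(2,8), pow(8,2)` (3) round(x) and round(x,y)…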
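
For exact-value (DECIMAL) arguments, the MySQL manual specifies that `ROUND(x, d)` rounds half away from zero and that a negative `d` zeroes digits to the left of the decimal point, for example `ROUND(-1.58)` = -2, `ROUND(1.298, 1)` = 1.3 and `ROUND(23.298, -1)` = 20. Python's built-in `round` rounds half to even instead, so a faithful emulation needs the `decimal` module. The helper name `mysql_round` is hypothetical, and approximate-value FLOAT/DOUBLE arguments (whose rounding MySQL leaves to the C library) are not modelled.

```python
from decimal import Decimal, ROUND_HALF_UP


def mysql_round(x, d=0):
    """Emulate MySQL ROUND(x, d) for exact-value arguments.

    In Python's decimal module, ROUND_HALF_UP means "ties away from zero",
    which matches MySQL's rule for DECIMAL values.
    """
    value = Decimal(str(x))
    quantum = Decimal(1).scaleb(-d)  # d=2 -> 0.01, d=-1 -> 1E+1
    result = value.quantize(quantum, rounding=ROUND_HALF_UP)
    if d < 0:
        result = result.quantize(Decimal(1))  # display 2E+1 as 20
    return result


print(abs(-5.5), abs(10))     # 5.5 10
print(pow(2, 8), pow(8, 2))   # 256 64
print(mysql_round("2.5"), round(2.5))  # 3 2  (half away from zero vs. half to even)
print(mysql_round("-1.58"), mysql_round("1.298", 1), mysql_round("23.298", -1))  # -2 1.3 20
```

Passing the value as a string avoids inheriting binary floating-point error before the decimal rounding happens.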

⌈ Chuanzhi Code (传知代码) ⌋ Flan-T5 User Guide

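💛 Recap 💛 This article shares cutting-edge knowledge and techniques from the Chuanzhi Code platform. Next, we enter a whole new space with a fresh perspective on technology. All resources mentioned in this article are available on the Chuanzhi Code platform. The following content will surely transform your understanding of the AI-empowered era!!! It is packed with practical material, so keep up! 📌 Navigation 📌 💡 Chapter highlights 🍞 1. Overview 🍞 2. Introduction to Flan-T5 🍞 3. Deployment process 🫓 Summary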

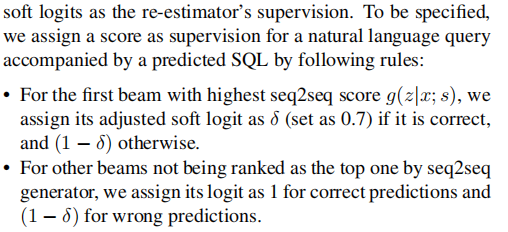

【Text2SQL Paper】T5-SR: Using T5 to Generate an Intermediate Representation from Which SQL Is Obtained

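Paper: T5-SR: A Unified Seq-to-Seq Decoding Strategy for Semantic Parsing ⭐⭐⭐ Peking University & University of Science and Technology of China, arXiv:2306.08368. Contents: 1. Quick read of the paper 2. Intermediate representation: SSQL 3. Score Re-estimator 4. Summary. 1. Quick read of the paper: this paper designs SSQL, an intermediate representation between NL and SQL, and then uses…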

【Activation Functions in T5】GLU Variants Improve Transformer

【Activation Functions in mT5】GLU Variants Improve Transformer. Contents: Paper Information; Reading Assessment; Abstract; Introduction; Gated Linear Units (GLU) and Variants; Experiments on Text-to-Text Transfer Transformer (T5); Conclusion. Paper Information…
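
The paper replaces the Transformer feed-forward layer $\mathrm{FFN}(x) = \max(0, xW_1)W_2$ with a gated version $(\sigma(xW) \otimes xV)\,W_2$, where $\sigma$ is ReLU for ReGLU, GELU for GEGLU and Swish for SwiGLU (bias terms omitted, as in the paper); T5 v1.1 and mT5 use the GEGLU variant. The pure-Python sketch below uses toy matrix sizes and values chosen only for illustration; real layers use large learned matrices.

```python
import math


def gelu(z):
    """Exact GELU: z * Phi(z), where Phi is the standard normal CDF."""
    return 0.5 * z * (1.0 + math.erf(z / math.sqrt(2.0)))


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    # numerically stable logistic function (avoids overflow for very negative z)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def swish(z):
    """Swish with beta = 1 (also called SiLU): z * sigmoid(z)."""
    return z * sigmoid(z)


def vec_mat(x, m):
    """Row vector x (length d_in) times matrix m (d_in rows, d_out columns)."""
    return [sum(x[i] * m[i][j] for i in range(len(x))) for j in range(len(m[0]))]


def glu_ffn(x, w, v, w2, activation):
    """(activation(x W) * (x V)) W2 with an element-wise product and no biases."""
    gate = [activation(z) for z in vec_mat(x, w)]
    linear = vec_mat(x, v)
    hidden = [g * h for g, h in zip(gate, linear)]
    return vec_mat(hidden, w2)


# Toy sizes: d_model = 2, d_ff = 3
W = [[1.0, 0.0, -1.0], [0.0, 1.0, 1.0]]
V = [[1.0, 1.0, 0.0], [0.0, 1.0, 1.0]]
W2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
x = [1.0, -2.0]

for name, act in [("ReGLU", relu), ("GEGLU", gelu), ("SwiGLU", swish)]:
    print(name, glu_ffn(x, W, V, W2, act))
```

Compared with the plain FFN, each variant needs a third matrix $V$; the paper reduces $d_{ff}$ to keep the parameter count comparable.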

What Exactly Are the T5 Scientists Gathered at Tencent CSIG?

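What is a "T5" at Tencent? If you put this question to Tencent's 20,000 technical staff, most would probably answer with a single word: godlike. Keep in mind that in Tencent's job-level system, even reaching T3 is quite difficult for most people, and that level already serves as an important benchmark on the talent market. Tencent's criteria for T5 scientists are extremely strict: they must not only be recognized senior experts in their own fields but also have enough strategic vision to take part in the company's major areas and projects. This has made T5 scientists extremely rare in Tencent's 20-year history; previously, like the Dragon Balls, they were scattered…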

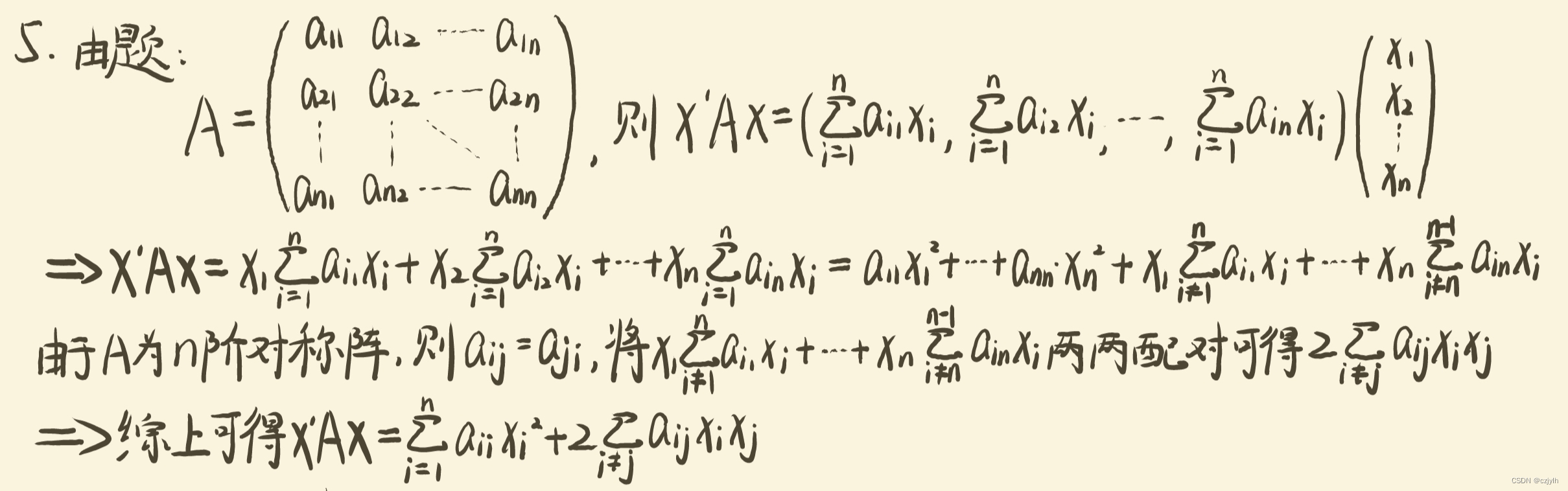

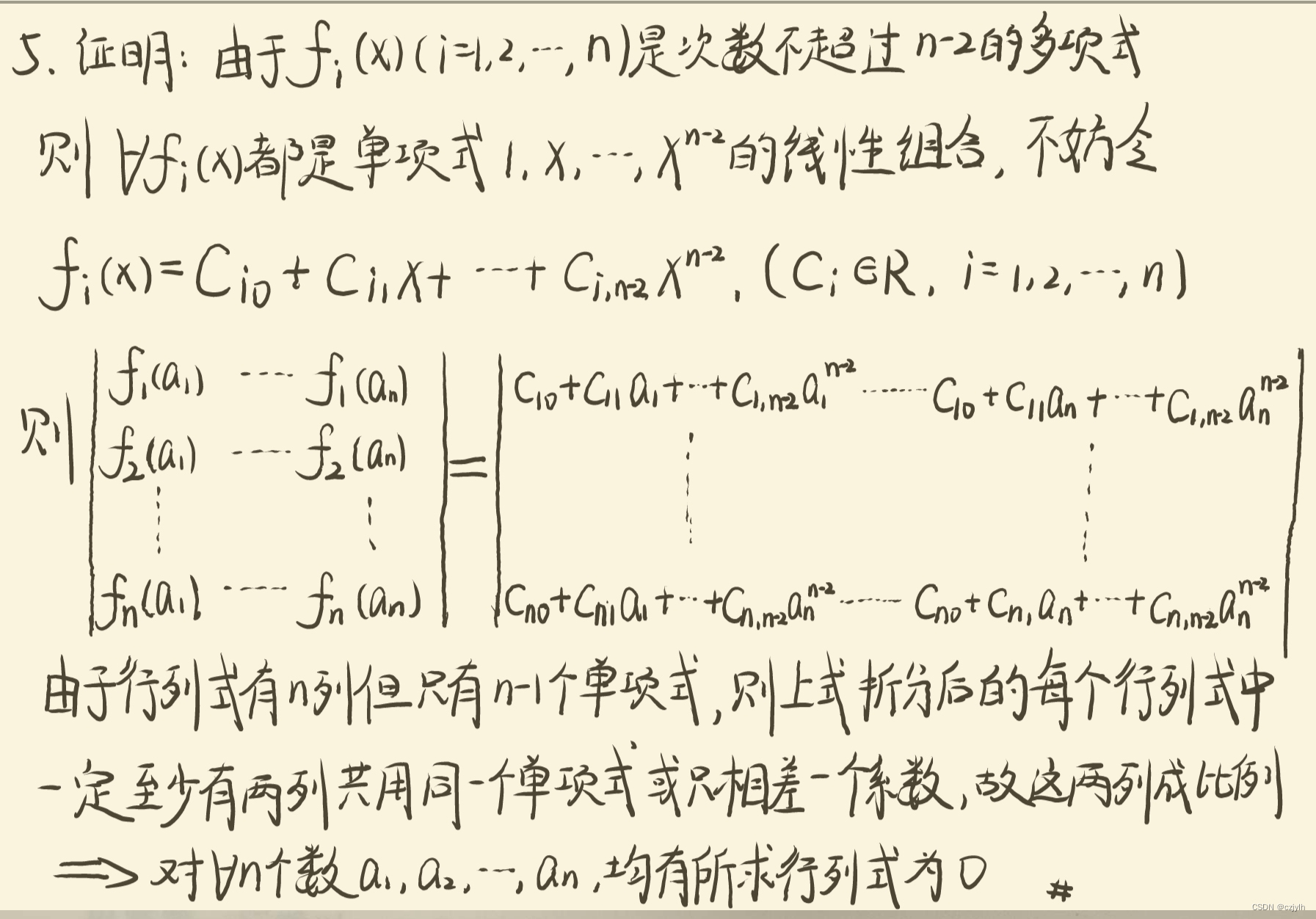

Advanced Algebra (Green Cover, 4th Edition), Exercises 1.5, Problem T5

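Problem: Let $f_1(x), f_2(x), \dots, f_n(x)$ be polynomials of degree at most $n-2$. Prove that for any numbers $a_1, a_2, \dots, a_n$,

$$
\begin{vmatrix}
f_1(a_1) & f_2(a_1) & \cdots & f_n(a_1) \\
f_1(a_2) & f_2(a_2) & \cdots & f_n(a_2) \\
\vdots & \vdots & & \vdots \\
f_1(a_n) & f_2(a_n) & \cdots & f_n(a_n)
\end{vmatrix} = 0.
$$

Solution. Idea: by hypothesis, every $f_j(x)$ is a linear combination of the monomials $1, x, \dots, x^{n-2}$. Write $f_j(x) = c_{j0} + c_{j1}x + \cdots + c_{j,n-2}x^{n-2}$ and use this to expand each $f_j$ in the determinant column by column. The determinant has $n$ columns but there are only $n-1$ monomials, so in every determinant obtained from this split, at least two columns use the same monomial and differ only by a coefficient. Those two columns are proportional, so each such determinant is identically 0, which proves the claim. Reference solution details: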
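
A quick numerical sanity check of the statement (not a proof): with exact rational arithmetic, the determinant whose row $i$, column $j$ entry is $f_j(a_i)$ vanishes for randomly chosen polynomials of degree at most $n-2$, while taking $f_j(x) = x^{j-1}$ (degree up to $n-1$, outside the hypothesis) gives a nonzero Vandermonde determinant for distinct $a_i$. The random coefficients, the seed and $n = 5$ are arbitrary choices.

```python
import random
from fractions import Fraction


def det(matrix):
    """Exact determinant by Gaussian elimination over the rationals."""
    m = [[Fraction(v) for v in row] for row in matrix]
    n = len(m)
    result = Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)          # no pivot in this column: singular matrix
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            result = -result            # a row swap flips the sign
        result *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n):
                m[r][c] -= factor * m[col][c]
    return result


def poly_eval(coeffs, x):
    """Evaluate c0 + c1*x + ... + ck*x^k with Horner's rule."""
    acc = 0
    for c in reversed(coeffs):
        acc = acc * x + c
    return acc


def eval_matrix(polys, points):
    """Entry in row i, column j is f_j(a_i)."""
    return [[poly_eval(f, a) for f in polys] for a in points]


n = 5
rng = random.Random(0)
points = [rng.randint(-9, 9) for _ in range(n)]
# n polynomials of degree <= n - 2, i.e. n - 1 coefficients each
polys = [[rng.randint(-9, 9) for _ in range(n - 1)] for _ in range(n)]
print(det(eval_matrix(polys, points)))  # 0

# Outside the hypothesis: f_j(x) = x^(j-1) has degree up to n - 1
vandermonde = [[0] * j + [1] for j in range(n)]
print(det(eval_matrix(vandermonde, [1, 2, 3, 4, 5])))  # 288 = product of (a_j - a_i) over i < j
```

The Vandermonde case shows the degree bound is what makes the result work: with degree $n-1$ allowed, there are $n$ monomials and the column-splitting argument no longer forces two proportional columns.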

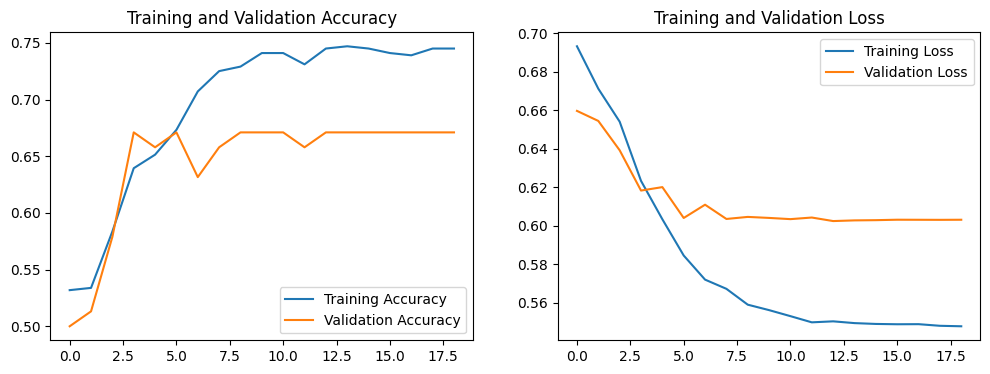

Week T5: Sneaker Brand Recognition

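🍨 This post is a study-log blog from the 🔗365-Day Deep Learning Training Camp 🍖 Original author: K同学啊 | Tutoring and custom projects available. Notes from training: this time I added early stopping and an exponentially decaying learning rate, which are indeed very useful techniques. When I first set the parameters myself, though, I kept feeling that the learning rate in the lesson plan was too large (and indeed the answer published later was to change the learning rate). At first I did not change the learning rate; instead I set decay_rate very small (which later turned out not to be a good idea…). Then I kept tweaking the parameters; sure enough, with the Adam optimizer I still could not…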
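
The two techniques mentioned, an exponentially decaying learning rate and early stopping, can be stated in a few lines of plain Python. The decay follows the formula documented for Keras `tf.keras.optimizers.schedules.ExponentialDecay`, `lr = initial_lr * decay_rate ** (step / decay_steps)`, where `step / decay_steps` becomes an integer division when `staircase=True`. The early-stopping rule is a simplified patience counter in the spirit of Keras `EarlyStopping`, not its exact implementation, and the loss values are made up.

```python
def exponential_decay(initial_lr, step, decay_steps, decay_rate, staircase=False):
    """lr = initial_lr * decay_rate ** (step / decay_steps)."""
    exponent = step // decay_steps if staircase else step / decay_steps
    return initial_lr * decay_rate ** exponent


class EarlyStopping:
    """Stop when the loss has not improved by more than min_delta for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.wait = 0

    def should_stop(self, loss):
        if loss < self.best - self.min_delta:
            self.best = loss  # improvement: remember it and reset the counter
            self.wait = 0
        else:
            self.wait += 1
        return self.wait >= self.patience


# Hypothetical validation losses; 100 optimizer steps per epoch
val_losses = [1.00, 0.80, 0.85, 0.90, 0.95, 0.70]
stopper = EarlyStopping(patience=3)
for epoch, loss in enumerate(val_losses):
    lr = exponential_decay(1e-4, step=epoch * 100, decay_steps=100,
                           decay_rate=0.9, staircase=True)
    print(f"epoch {epoch}: lr={lr:.2e}, val_loss={loss:.2f}")
    if stopper.should_stop(loss):
        print(f"early stop at epoch {epoch}, best val_loss={stopper.best:.2f}")
        break
```

A very small `decay_rate` shrinks the learning rate so quickly that training can stall early, which is consistent with the author's observation above.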

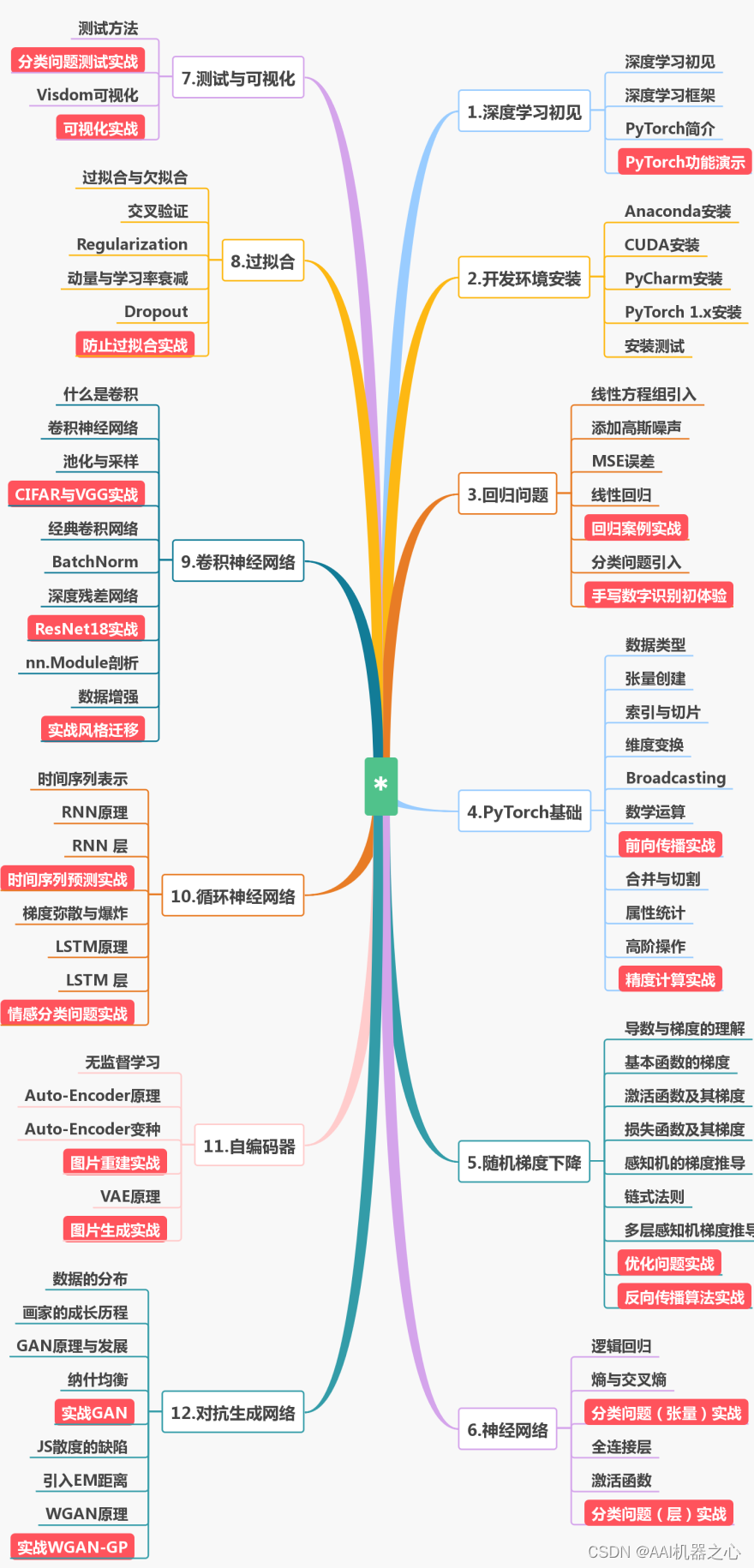

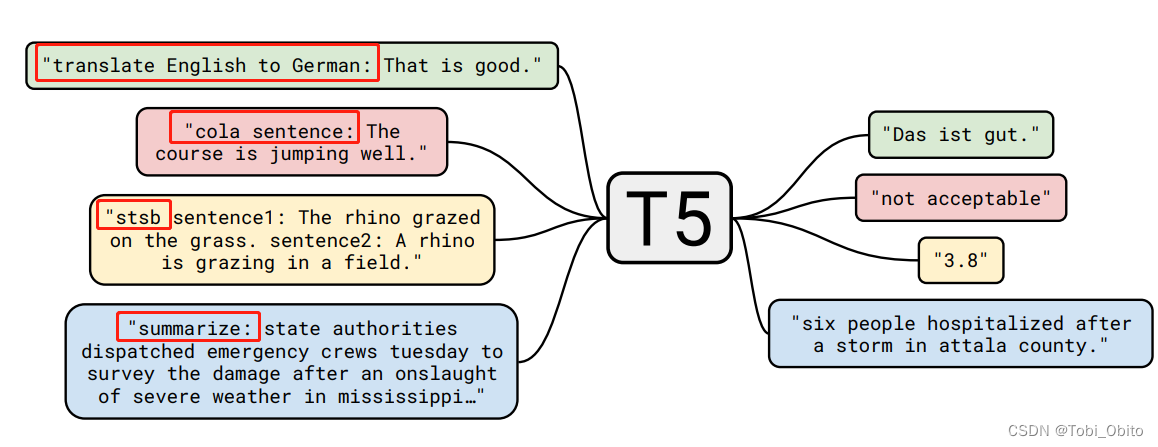

Frozen Prompt, Fine-Tuned LM: T5, PET

T5 paper (2019.10): Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. Task: everything. Prompt: hand-written prefix prompt. Model: encoder-decoder. Takeaway: by adding a prefix prompt, all NLP tasks can be…
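
The takeaway is that every task becomes "text in, text out", with the task named by a prefix. The example inputs and targets below are the ones shown in the T5 paper's overview figure; the helper `t5_input` and its field handling are a hypothetical sketch, not code from the T5 codebase.

```python
def t5_input(task, text=None, **fields):
    """Format one example as a T5-style text-to-text input.

    - Single-text tasks: '<task>: <text>'  (e.g. summarize, translate ...)
    - Multi-field tasks: '<task> <name1>: <value1> <name2>: <value2> ...'
    """
    if text is not None:
        return f"{task}: {text}"
    return " ".join([task] + [f"{name}: {value}" for name, value in fields.items()])


examples = [
    (t5_input("translate English to German", "That is good."), "Das ist gut."),
    (t5_input("cola", sentence="The course is jumping well."), "not acceptable"),
    (t5_input("stsb",
              sentence1="The rhino grazed on the grass.",
              sentence2="A rhino is grazing in a field."), "3.8"),
]
for source, target in examples:
    print(f"{source!r} -> {target!r}")
```

Even the STS-B regression score is emitted as the string "3.8", so a single encoder-decoder with one cross-entropy loss covers classification, regression and generation.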

NLP Hands-On Project 25: Application Scenarios of the T5 and BERT Models, a Comparative Study, and Q&A

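Hello everyone, I'm 微学AI (Weixue AI). Today I will introduce NLP Hands-On Project 25: application scenarios of the T5 and BERT models, a comparative study, and Q&A. T5 and BERT are two commonly used NLP models. T5 is a sequence-to-sequence model that can handle a wide range of NLP tasks, whereas BERT is mainly used for pretraining language representations. T5 uses a BERT-like denoising pretraining objective (it masks out spans of text) but with a more general text-in, text-out format. T5 adapts well to different tasks and can be fine-tuned to perform many different NLP tasks. BER…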
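
T5's BERT-style pretraining objective is span corruption: where BERT replaces individual tokens with `[MASK]` and predicts them in place, T5 replaces whole spans with sentinel tokens and the decoder outputs each sentinel followed by the tokens it replaced. The sketch below is deterministic, with whitespace tokens and hand-picked spans; real pretraining samples spans randomly (about 15% of tokens, mean span length 3) over SentencePiece tokens. The sentinel names follow the Hugging Face T5 tokenizer, and the sentence is the example from the T5 paper.

```python
def span_corrupt(tokens, spans):
    """Apply T5-style span corruption.

    tokens: list of tokens; spans: sorted, non-overlapping (start, end) pairs, end exclusive.
    Returns (input_tokens, target_tokens).
    """
    inputs, targets = [], []
    cursor = 0
    for k, (start, end) in enumerate(spans):
        sentinel = f"<extra_id_{k}>"
        inputs.extend(tokens[cursor:start])   # keep the uncorrupted prefix
        inputs.append(sentinel)               # one sentinel replaces the whole span
        targets.append(sentinel)
        targets.extend(tokens[start:end])     # the target spells out the dropped span
        cursor = end
    inputs.extend(tokens[cursor:])
    targets.append(f"<extra_id_{len(spans)}>")  # final sentinel marks the end of targets
    return inputs, targets


tokens = "Thank you for inviting me to your party last week .".split()
inp, tgt = span_corrupt(tokens, [(2, 4), (8, 9)])
print(" ".join(inp))  # Thank you <extra_id_0> me to your party <extra_id_1> week .
print(" ".join(tgt))  # <extra_id_0> for inviting <extra_id_1> last <extra_id_2>
```

Because the targets contain only the dropped spans, they are much shorter than the input, which makes this objective cheaper to train than reconstructing the full sentence.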

A Detailed Guide to Text Summarization with T5: Fine-Tuning and Building a Gradio App (Full Source Code Included)

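The need for efficient text summarization has never been greater. Whether you are a student working through lengthy research papers or a professional skimming news articles, the ability to extract key insights quickly is invaluable. T5, a pretrained language model known for its strong performance on many NLP tasks, excels at text summarization. Summarizing text with T5 through the Hugging Face API is seamless. However, fine-tuning T5 for summarization unlocks many new capabilities, and that is exactly what we explore in this article. Text Summarization with T5: I…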
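
Besides n-gram overlap, summarization work often reports ROUGE-L, which scores the longest common subsequence (LCS) shared by a generated summary and its reference. A simplified sentence-level sketch follows, assuming whitespace tokenization, no stemming and an F-measure that weights precision and recall equally; library implementations such as `rouge-score` differ in tokenization and also offer the summary-level ROUGE-Lsum variant.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence, O(len(a) * len(b)) dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            # extend the diagonal on a match, otherwise carry the best neighbour
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate, reference):
    """Sentence-level ROUGE-L F1 from LCS-based precision and recall."""
    cand, ref = candidate.lower().split(), reference.lower().split()
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(cand), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


reference = "police killed the gunman"
print(rouge_l("police kill the gunman", reference))  # 0.75 (LCS: police the gunman)
print(rouge_l("the gunman kill police", reference))  # 0.5  (LCS: the gunman)
```

Unlike ROUGE-2, LCS rewards in-order matches that need not be contiguous, so a summary that keeps the reference's word order scores higher than one that shuffles the same words.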

NLP Pretrained Models: Transfer Learning (Using Already-Trained Models) [Pretrained Models: BERT, GPT, Transformer-XL, XLNet, RoBERTa, XLM, T5], Fine-Tuning, Fine-Tuning Scripts, [GLUE Datasets]

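Deep Learning, Natural Language Processing: Transfer Learning (Using Already-Trained Models) [GLUE datasets, pretrained models (BERT, GPT, Transformer-XL, XLNet, T5), fine-tuning, fine-tuning scripts]. Contents: 1. Overview of transfer learning 2. Standard datasets in NLP: 1) How to download the GLUE datasets 2) Format and task type of each GLUE sub-dataset: 2.1 CoLA [judging whether a sentence is grammatical] 2.2 SST-2 [sentiment classification] 2.3 MRPC [judging…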
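
In the GLUE benchmark, CoLA is scored with the Matthews correlation coefficient (MCC) rather than accuracy because its binary labels are imbalanced; SST-2 uses accuracy, and MRPC reports accuracy and F1. MCC is $\frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP+FP)(TP+FN)(TN+FP)(TN+FN)}}$. The dependency-free sketch below follows the same convention as `sklearn.metrics.matthews_corrcoef` of returning 0 when the denominator is 0; the labels are made-up examples.

```python
import math


def matthews_corrcoef(y_true, y_pred):
    """MCC for binary labels in {0, 1}; returns 0.0 when the denominator is zero."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0


# 1 = acceptable (grammatical), 0 = unacceptable
y_true = [1, 1, 0, 0]
y_pred = [1, 0, 0, 0]
print(round(matthews_corrcoef(y_true, y_pred), 4))  # 0.5774
```

A classifier that always predicts the majority label can reach high accuracy on an imbalanced set but gets an MCC of 0, which is why GLUE uses MCC for CoLA.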

Hugging Face T5 Model Code Notes

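0 Preface: this blog mainly records how to fine-tune the T5 model on your own seq2seq task. 1 Documentation: this introduction is based on the official Hugging Face documentation for T5. 1.1 Overview: the T5 model was proposed by Colin Raffel, Noam Shazeer, Adam Roberts, Katherine Lee, Sharan Narang, Michael Matena, Yanqi Zhou…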
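
When fine-tuning T5 on a seq2seq task with Hugging Face, you normally pass only `labels`: the model builds `decoder_input_ids` by shifting the labels one position to the right, starting with `decoder_start_token_id` (the pad token, id 0, for T5) and turning any `-100` back into the pad id. Padding positions in `labels` are set to `-100` so the cross-entropy loss ignores them. The list-based sketch below mirrors both steps; the library's `_shift_right` works on tensors, and the token ids are made up except that T5 uses pad = 0 and `</s>` = 1.

```python
PAD_ID = 0            # T5's pad token id, also its decoder_start_token_id
IGNORE_INDEX = -100   # label positions with this value are ignored by the loss


def mask_padding(label_ids, pad_id=PAD_ID):
    """Replace padding in the labels with -100 so it does not contribute to the loss."""
    return [IGNORE_INDEX if t == pad_id else t for t in label_ids]


def shift_right(labels, start_id=PAD_ID, pad_id=PAD_ID):
    """Prepend the start token, drop the last label, and map -100 back to the pad id
    so every decoder input is a valid token (teacher forcing: token t-1 predicts t)."""
    shifted = [start_id] + labels[:-1]
    return [pad_id if t == IGNORE_INDEX else t for t in shifted]


# Hypothetical tokenized target ending in </s>, padded to length 6
target_ids = [363, 19, 8, 1, 0, 0]  # 1 = </s>, 0 = <pad>
labels = mask_padding(target_ids)
print(labels)               # [363, 19, 8, 1, -100, -100]
print(shift_right(labels))  # [0, 363, 19, 8, 1, 0]
```

Masking to `-100` must happen on the labels only; the decoder inputs still need the real pad id, which is why the shift converts it back.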

VT-VRPA2-1-1X/V0/T5 Amplifier Card for Controlling 4WRE6 Proportional Directional Valves

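Control amplifier for proportional directional valves with spool-position feedback and without an integrated amplifier. It replaces the Rexroth product of the same model number and is fully interchangeable. Suitable for controlling Rexroth 2X-series proportional directional valves of nominal size 4WRE6 and 4WRE10 with electrical position feedback. The command signal can be selected as 0 to +10 V or 4 to 20 mA. Mounts directly on a 35 mm rail for easy wiring. Controls 4WRE6...-2X series proportional directional valves; VT-VRPA2-1-1X/V0/T1, VT-VRPA2-1-1X/V0/T5; mounting…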
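
With either command-signal option, the setpoint is typically a linear mapping of the signal range onto 0 to 100 % of the command; using 4 mA as a "live zero" lets a reading well below 4 mA flag a broken wire. A small sketch of that scaling follows. The linear mapping, the 3.6 mA fault threshold, the clamping and the function names are illustrative assumptions, not taken from this amplifier's datasheet.

```python
def current_to_percent(ma, low=4.0, high=20.0, fault_below=3.6):
    """Map a 4-20 mA command linearly to 0-100 %; raise on a likely wire break."""
    if ma < fault_below:
        raise ValueError(f"{ma} mA is below {fault_below} mA: possible open circuit")
    percent = (ma - low) / (high - low) * 100.0
    return min(max(percent, 0.0), 100.0)  # clamp small under/over-range readings


def voltage_to_percent(volts, full_scale=10.0):
    """Map a 0 to +10 V command linearly to 0-100 %."""
    return min(max(volts / full_scale * 100.0, 0.0), 100.0)


print(current_to_percent(12.0))  # 50.0
print(voltage_to_percent(2.5))   # 25.0
```

A 0 V voltage command is indistinguishable from a disconnected input, which is the usual argument for choosing the current-loop option on long cable runs.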

Practical Applications of Large Models 9: Fine-Tuning FLAN-T5 on a Single GPU with LoRA, Explained and Implemented

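Hello everyone, I'm 微学AI. Today I will introduce Practical Applications of Large Models 9: fine-tuning FLAN-T5 on a single GPU with LoRA, explained and implemented. In this article we show how to apply Low-Rank Adaptation (LoRA) of large language models to fine-tune the FLAN-T5 XXL model (11 billion parameters) on a single GPU. We will use third-party libraries such as Transformers, Accelerate, and PEFT. 1. Set up the development environment: here I use a pre-configured CUDA driver…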
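
Why LoRA fits on one GPU is easiest to see from the parameter count: each adapted $d_{out} \times d_{in}$ matrix gains only $r(d_{in} + d_{out})$ trainable parameters. The sketch below assumes rank $r = 16$, adapters on the attention query and value projections only, and FLAN-T5 XXL dimensions of $d_{model} = 4096$ (64 heads of 64 dimensions) with 24 encoder and 24 decoder layers. These settings are assumptions for illustration, not necessarily the article's exact configuration.

```python
def lora_params_per_matrix(d_in, d_out, r):
    """A is r x d_in and B is d_out x r, so LoRA adds r * (d_in + d_out) parameters."""
    return r * (d_in + d_out)


def t5_lora_trainable(d_model, inner_dim, enc_layers, dec_layers, r, matrices_per_attention=2):
    """Count LoRA parameters when adapting q and v in every attention block.

    Encoder layers have one self-attention block; decoder layers have a
    self-attention block and a cross-attention block.
    """
    attention_blocks = enc_layers + 2 * dec_layers
    per_matrix = lora_params_per_matrix(d_model, inner_dim, r)
    return attention_blocks * matrices_per_attention * per_matrix


trainable = t5_lora_trainable(d_model=4096, inner_dim=64 * 64,
                              enc_layers=24, dec_layers=24, r=16)
print(f"{trainable:,} trainable LoRA parameters")          # 18,874,368 trainable LoRA parameters
print(f"{trainable / 11e9:.2%} of ~11 billion parameters")  # 0.17% of ~11 billion parameters
```

Only these adapter weights need gradients and optimizer state; the frozen base model can additionally be loaded in reduced precision to fit into a single GPU's memory.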

Interviewing for T5 at JD.com and Getting Crushed: Who Knows What I Went Through? [Recommended Read]

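Time flies: companies have all started hiring, and schools are getting ready for the new intake. The "golden March, silver April" hiring season is nearly over. Some friends have sent good news about landing offers at top-tier companies, while others who did not make it told me they got crushed. Today I am sharing the experience of a friend who interviewed at JD.com, in the hope that it encourages everyone who is interviewing now. Questions asked in the JD.com interview: Testing theory basics. What is software testing? Answer: software testing means operating a program under specified conditions in order to find errors and evaluate software quality. What is the purpose of software testing…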

New Youjia (友价) Source Code T5 Mall (Full Site, June 16 Upgrade)

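Description: Youjia Source Code T5 Mall (full site, June 16 upgrade), personally tested by the webmaster: complete and error-free, buy with confidence! The full site includes demo data (June 16 upgrade), 17 desktop templates (including the new niu desktop template), and 2 mobile templates. Youjia mall system source code patch 20220211. Mobile: 1. Fixed a bug where service orders could not be submitted for acceptance. 2. Added a message-notification feature at the bottom of the page. Desktop: 3. Updated the Alipay desktop website payment interface (Alipay is about to retire the old interface). 4. The next update will disable the old Alipay interface (please apply for the new Alipay interface as soon as possible).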