norm专题

Pytorch中不同的Norm归一化详细讲解

在做项目或者看论文时,总是能看到Norm这个关键的Layer,但是不同的Norm Layer具有不同的作用,准备好接招了吗?(本文结论全部根据pytorch官方文档得出,请放心食用) 一. LayerNorm LayerNorm的公示如下: y = x − E [ x ] Var [ x ] + ϵ ∗ γ + β y=\frac{x-\mathrm{E}[x]}{\sqrt{\op

CV-CNN-2015:GoogleNet-V2【首次提出Batch Norm方法:每次先对input数据进行归一化,再送入下层神经网络输入层(解决了协方差偏移问题)】【小的卷积核代替掉大的卷积核】

GoogLeNet凭借其优秀的表现,得到了很多研究人员的学习和使用,因此GoogLeNet团队又对其进行了进一步地发掘改进,产生了升级版本的GoogLeNet。 GoogLeNet设计的初衷就是要又准又快,而如果只是单纯的堆叠网络虽然可以提高准确率,但是会导致计算效率有明显的下降,所以如何在不增加过多计算量的同时提高网络的表达能力就成为了一个问题。 Inception V2版本的解决方案就是修

python 库 Numpy 中如何求取向量范数 np.linalg.norm(求范数)(向量的第二范数为传统意义上的向量长度),(如何求取向量的单位向量)

转载自: https://www.cnblogs.com/devilmaycry812839668/p/9352814.html 求取向量二范数,并求取单位向量(行向量计算) import numpy as npx=np.array([[0, 3, 4], [2, 6, 4]])y=np.linalg.norm(x, axis=1, keepdims=True)z=x/y x 为需

概率统计Python计算:连续型随机变量分布(norm)

scipy.stats的norm对象表示正态分布,下表说明norm的几个常用函数。 函数名参数功能rvs(loc, scale, size)loc,scale:分布参数 μ \mu μ和 σ \sigma σ,缺省值分别为0和1,size:产生的随机数个数,缺省值为1产生size个随机数pdf(x, loc, scale)x:自变量取值,loc,scale:与上同概率密度函数 f ( x )

l0-Norm, l1-Norm, l2-Norm, … , l-infinity Norm

I’m working on things related to norm a lot lately and it is time to talk about it. In this post we are going to discuss about a whole family of norm. What is a norm? Mathematically a norm is a tota

Why L1 norm for sparse models?

Explanation 1 Consider the vector x⃗ =(1,ε)∈R2 where ε>0 is small. The l1 and l2 norms of x⃗ , respectively, are given by ||x⃗ ||1=1+ε, ||x⃗ ||22=1+ε2 Now say that, as

clip_grad_norm_ 梯度裁剪

torch.nn.utils.clip_grad_norm_ 函数是用来对模型的梯度进行裁剪的。在深度学习中,经常会使用梯度下降算法来更新模型的参数,以最小化损失函数。然而,在训练过程中,梯度可能会变得非常大,这可能导致训练不稳定甚至梯度爆炸的情况。 裁剪梯度的作用是限制梯度的大小,防止它们变得过大。裁剪梯度的常见方式是通过计算梯度的范数(即梯度向量的长度),如果梯度的范数超过了设定的阈值,则对

【python】torch.expand()广播机制|torch.norm()

1. torch.expand()广播机制 在处理3D点云时, 有时需要对两帧点云进行逐点的三维坐标相加减、做点积等运算, 但是读入的PCD文件中,点云数量并不一定是相等的 那么首要的一个问题就是, 如何将两帧点云处理成大小相同的矩阵然后进行计算? torch 中一个常用的方法是expand函数, 例如下面这段函数: def expand_matrix(ori_A, ori_B):poin

(转载)matlab中各种范数求解norm

原文http://blog.csdn.net/yihaizhiyan/article/details/6904599 %X为向量,求欧几里德范数,即 。 n = norm(X,inf) %求 无穷-范数,即 。 n = norm(X,1) %求1-范数,即 。 n = norm(X,-inf) %求向量X的元素的绝对值的最小值,即 。 n = norm(X, p) %求p-范数,即 ,所以no

【机器学习300问】88、什么是Batch Norm算法?

一、什么是Batch Norm? (1)Batch Norm的本质 神经网络中的Batch Normalization(批量归一化,简称BatchNorm或BN)是一种改进神经网络训练过程的规范化方法,BatchNorm的主要目的是加速神经网络的训练并提高模型的性能。 在深度学习训练过程中,尤其是深层神经网络中,每层输入的数据分布可能会随着训练的进行而发生变化

NoRM:MongoDB的另一个.NET提供器

原文链接 [收藏] « » NoRM是个包装了MongoDB的.NET程序库,简化了.NET操纵该文档数据库的过程。其主要特性有:强类型的接口、支持LINQ、支持.NET与Mono。 该提供器的主要特性有: 为MongoDB提供了一个强类型的接口支持大多数常用的MongoDB命令支持LINQ-to-MongoDB兼容于.NET与MonoBSON到.NET CL

2024-04-19 问AI: 介绍一下 Frobenius norm 在深度学习中的应用

文心一言 Frobenius范数在深度学习中具有广泛的应用。深度学习是一种模仿人类大脑神经网络结构进行信息处理和学习的方法,其核心是神经网络,由多层神经元组成。在神经网络的训练过程中,Frobenius范数可以帮助我们衡量模型参数的大小和重要性。 具体来说,通过计算权重矩阵的Frobenius范数,我们可以评估模型的复杂度和规模。这有助于我们了解模型的特性,进而进行参数的正则化或剪枝。正则化是

Weighted Nuclear Norm Minimization with Application to Image Denoising——学习笔记

加权核范数(WNNM)最小化及其在图像去噪中的应用——学习笔记 前景提要不同权重 w w w条件下的求解方法权重按非升序排列 w 1 ≥ ⋅ ⋅ ≥ w n ≥ 0 w_1≥··≥w_n≥0 w1≥⋅⋅≥wn≥0权重按任意序排列权重按非降序排列 0 ≤ w 1 ≤ ⋅ ⋅ ≤ w n 0≤w_1≤··≤w_n 0≤w1≤⋅⋅≤wn WNNM在图像去噪中的应用 前景提要

matlab中norm是什么函数

norm函数 格式 n = norm(A); n = norm(A,p); 功能 1、如果A是矩阵 n=norm(A) 返回A的最大奇异值,即max(svd(A)) n=norm(A,p) 根据p的不同,返回不同的值 P返回值1返回A中最大一列和,即max(sum(abs(A)))2返回A的最大奇异值,和n=norm(A)用法一样inf返回A中最大一行和,即max(sum(abs(A’))

.norm() 范数

(A- B).norm().item() 是默认计算A与B的第二范数,如果你想计算差向量的第一范数(也称为L1范数),可以在norm()方法中传递p=1参数,这样就会计算出L1范数。例如: (A- B).norm(p=1).item() 其中,使用.item()方法将结果转换为Python的标量(scalar)类型

转:L2 norm L1 norm什么意思

L2 norm L1 norm什么意思 L2 norm就是欧几里德距离 L1 norm就是绝对值相加,又称曼哈顿距离 搞统计的人总是喜欢搞什么“变量选择”,变量选择实际上的 限制条件是L0 Norm,但这玩艺不好整,于是就转而求L1 Norm(使用均方误差,就是Lasso ,当然在Lasso出来之前搞信号处理的就有过类似的工作),Bishop在书里对着RVM好一通 吹牛,其实RVM只是隐含着去

深度学习方法(十六):Batch Normalization及其变种——Layer Norm, Group Norm,Weight Norm等

很久没写博文了,今天晚上得点空, 抽时间把一块很基础的Layer设计——归一化层写一下,主要是方便自己日后查阅。写的可能会有点慢,有空就写一点。 本文的内容包括: Batch NormalizationLayer NormalizationInstance NormalizationGroup NormalizationWeight NormalizationBatch Renormaliza

到底什么是L2 Norm

最近复现论文有这么一个结构: 池化之后有一个l2-norm。norm是normalization的缩写。Ok,看看这是啥: 标准化?正规化?归一化?… 正确答案 L2归一化:将一组数变成0-1之间。pytorch调用的函数是F.normalize。文档是这样写的: 对于L2来说,p=2,分母就是 ( x 1 2 + x 2 2 + . . . + x n 2 ) \sqrt{( x_1^

ANN Converse to SNN|(2) Max-Norm

Fast-Classifying, High-Accuracy Spiking Deep Networks Through Weight and Threshold Balancing 作者:Peter U. Diehl,Daniel Neil, Jonathan Binas,Matthew Cook,Shih-Chii Liu and Michael Pfeiffer 会议:IJCNN201

random.multivariate_normal和norm.rvs

np.random.multivariate_normal方法用于根据实际情况生成一个多元正态分布矩阵,其在Python3中的定义如下: def multivariate_normal(mean, cov, size=None, check_valid=None, tol=None) 其中mean和cov为必要的传参而size,check_valid以及tol为可选参数。 mean

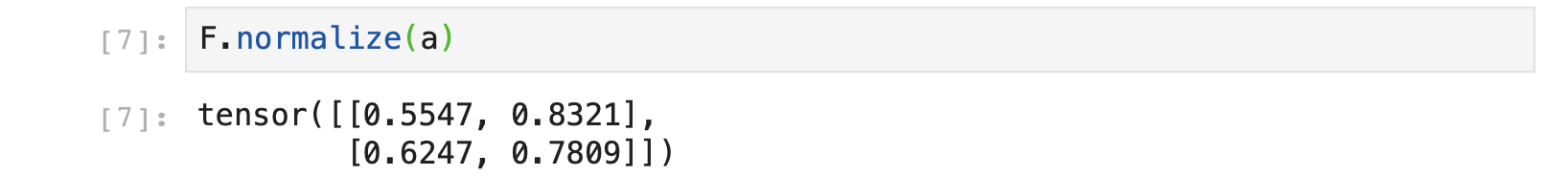

Transformer模型中前置Norm与后置Norm的区别

主要介绍原始Transformer和Vision Transformer中的Norm层不同位置的区别。 文章目录 前言 不同位置的作用 总结 前言 在讨论Transformer模型和Vision Transformer (ViT)模型中归一化层位置的不同,我们首先需要理解归一化层(Normalization)在这些模型中的作用。归一化层主要用于调整输入数据的尺度,以减少梯度消

NORM_X 和 SCALE_X 指令 模拟量反馈和给定

NORM_X 标准化指令:数据的归一化,是将数据按比例缩放,介于0-1之间的实数; SCALE_X 缩放指令:将归一化的数据按照比例放大,是NORM_X的逆操作;

43、实战 - 手写一个 batch norm 算法

这是我们手写的 CNN 网络中第三个经典算法。 在 resnet50 这个神经网络中,总共有 conv , bn, relu, pooling, fc(全连接), softmax 这几个经典算法。 而conv,pooling 在之前的章节已经手写过了,relu 属于一行代码就可以写完的算法,很简单可以暂时忽略,fc(全连接)可以直接用 conv 替换,或者简化一下 conv 的逻辑就可以,so

Pytorch:torch.nn.utils.clip_grad_norm_梯度截断_解读

torch.nn.utils.clip_grad_norm_函数主要作用: 神经网络深度逐渐增加,网络参数量增多的时候,容易引起梯度消失和梯度爆炸。对于梯度爆炸问题,解决方法之一便是进行梯度剪裁torch.nn.utils.clip_grad_norm_(),即设置一个梯度大小的上限。 注:旧版为torch.nn.utils.clip_grad_norm() 函数参数: 官网链接:ht

Transformer中的layer norm(包含代码解释)

在transformer中存在add&norm操作,add操作很简单,就是把注意力矩阵和原来的矩阵相加,也就是残差链接,可以有效减少梯度消失。 下图为layer norm的解释图,可以看出layer norm是针对一个token来做的归一化操作。 具体的实现,我们来看下面这段代码,我们的目标就是使用torch中的LN去计算一个EM,然后我们再自己手动计算一个EM,看看LN到底是不是针对toke

超参数调试与BN(Batch Norm)

1. 深度学习中的超参数 深度学习最难之一的问题,也是被许多人不喜的愿意就是超参数,深度学习中有许多超参数,例如常见的学习率、隐藏层的数量、优化算法中的超参数等等。这些超参数往往没有一个固定的比较好的值,在不同的领域、不同的场景、甚至是硬件条件不变,最适合的超参数也在变化,因此,想要掌握深度学习,超参数是不得不面对的一件事。 那么超参数那么多,到底怎样选取合适的值呢? 我们首先根据大多数人的