
Binocular Depth Estimation and Stereo Matching: Paper Review and Dataset Summary

- Paper

- 0. End-to-End Learning of Geometry and Context for Deep Stereo Regression

- 1. StereoNet: Guided Hierarchical Refinement for Real-Time Edge-Aware Depth Prediction

- 2. Single View Stereo Matching

- 3. Zoom and Learn: Generalizing Deep Stereo Matching to Novel Domains

- 4. Self-Supervised Learning for Stereo Matching with Self-Improving Ability

- 5. Unsupervised Learning of Stereo Matching

- 6. Pyramid Stereo Matching Network

- 7. Learning for Disparity Estimation through Feature Constancy

- 8. SegStereo: Exploiting Semantic Information for Disparity

- 9. DispSegNet: Leveraging Semantics for End-to-End Learning of Disparity Estimation from Stereo Imagery

- Dataset

- 1. KITTI

- 2. Flyingthings3D

- 3. InStereo2K

- 4. https://www.eth3d.net/overview

- 5. http://vision.middlebury.edu/stereo/

Searching for papers goes even better with Sci-Hub 💪

Sci-Hub (kept up to date): https://tool.yovisun.com/scihub/

AbleSci community literature-request service: https://www.ablesci.com/

paper list / summary of computer vision datasets

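In computer vision, two cameras capture images of the same target scene from two viewpoints. The disparity between corresponding points in the two images is then computed to recover the 3D depth of the scene.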

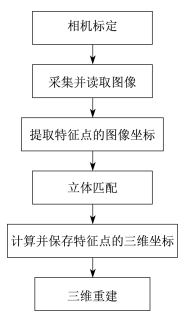

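A typical binocular stereo vision pipeline has four steps: image undistortion, stereo pair rectification, image registration (matching), and disparity map computation with triangulation-based reprojection. The main difficulties are illumination variation, disparity estimation, and calibration.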

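A camera converts a 3D object in space into a 2D image through the light reflected from the object's surface. Binocular stereo vision is the inverse of this imaging process: two cameras imitate the human eyes, the positional difference between corresponding points in the two images is computed, and the disparity principle is used to obtain the object's 3D geometry and reconstruct the scene.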
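
The disparity principle can be written as a short similar-triangles derivation. This is a sketch for an ideal rectified pair; the symbols are assumptions introduced here, not notation from the original: the left camera sits at the origin, the right camera is displaced by the baseline $B$ along the x axis, both share focal length $f$ (in pixels), and a point $(X, Y, Z)$ projects to columns $x_l$ and $x_r$ measured relative to each principal point.

```latex
x_l = \frac{f X}{Z}, \qquad x_r = \frac{f (X - B)}{Z}
\quad\Longrightarrow\quad
d = x_l - x_r = \frac{f B}{Z}
\quad\Longrightarrow\quad
Z = \frac{f B}{d}
```

Depth is inversely proportional to disparity, so a fixed matching error of a fraction of a pixel costs much more depth accuracy for distant points than for near ones.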

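Camera calibration is the process of solving for the transformation (the parameter matrices) that relates the camera's optical center, the 2D image plane, and real 3D space. Its accuracy therefore has a large effect on the accuracy of 3D reconstruction. Common calibration methods include: traditional photogrammetric calibration, which is accurate but computationally complex; the direct linear transformation (DLT) method, which ignores distortion and is simpler to compute but less accurate; Tsai's two-step method; self-calibration based on the Kruppa equations; and Zhang Zhengyou's calibration method.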
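
To make the "parameter matrices" concrete, here is a minimal sketch of the pinhole projection that calibration solves for. It assumes an intrinsic matrix `K`, a rotation `R`, and a translation `t` are already known and ignores lens distortion; the function name and the numbers in the usage note are illustrative, not taken from any of the listed methods.

```python
def project_point(point_world, K, R, t):
    """Project a 3D world point to pixel coordinates with x ~ K (R X + t).

    K is a 3x3 intrinsic matrix, R a 3x3 rotation, t a 3-vector
    (all plain nested lists). Lens distortion is ignored.
    """
    # Extrinsics: world coordinates -> camera coordinates.
    cam = [
        sum(R[i][j] * point_world[j] for j in range(3)) + t[i]
        for i in range(3)
    ]
    x, y, z = cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    # Intrinsics: perspective division, then focal length, skew, principal point.
    u = (K[0][0] * x + K[0][1] * y) / z + K[0][2]
    v = K[1][1] * y / z + K[1][2]
    return u, v
```

For example, with `K = [[700, 0, 320], [0, 700, 240], [0, 0, 1]]`, an identity rotation, and zero translation, the point `(0.5, -0.2, 2.0)` lands at approximately pixel `(495, 170)`.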

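The feature points used in binocular stereo vision are often artificially placed markers with a specific shape. Circular markers are widely used because they keep an elliptical shape under projective transformation, which makes them easy to extract and identify. Every image captured by a sensor contains noise, so images are preprocessed before feature extraction, usually with a filter that suppresses the noise. Because 3D reconstruction has to recover the depth of the measured object, extraction algorithms based on gray-level variation are generally used: a point is compared with its surroundings and extracted when its gray value differs from that of its neighbors.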
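
The two ideas in this paragraph, filtering first and then picking points whose gray value stands out from the neighborhood, can be illustrated with a deliberately simplified sketch. It is not any specific published detector: the 3x3 mean filter, the 8-neighbor comparison, and the function names are assumptions chosen for brevity.

```python
def box_filter3(img):
    """3x3 mean filter on a 2D list of gray values; border pixels are copied."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            window = [img[y + dy][x + dx]
                      for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            out[y][x] = sum(window) / 9.0
    return out


def contrast_points(img, threshold):
    """Return (x, y) of interior pixels whose gray value differs from the
    mean of their 8 neighbors by more than `threshold`."""
    h, w = len(img), len(img[0])
    points = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            neighbors = [img[y + dy][x + dx]
                         for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                         if (dy, dx) != (0, 0)]
            if abs(img[y][x] - sum(neighbors) / 8.0) > threshold:
                points.append((x, y))
    return points
```

In practice the threshold would be tuned against the noise level left after filtering, and a real pipeline would more likely rely on an established corner or blob detector.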

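The most critical technique in binocular stereo vision is image matching. Because the left and right cameras occupy different positions in space, their images show horizontal and depth-dependent disparity on the imaging plane, so the two images differ. Accurately matching the same points in the left and right images resolves this difference.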
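
As a baseline for what "matching" means before any deep network is involved, the following sketch does classical block matching on a single rectified scanline using the sum of absolute differences (SAD). It assumes the usual convention that left pixel `x` corresponds to right pixel `x - d`; the function name and window size are illustrative.

```python
def sad_disparity_row(left_row, right_row, max_disp, half_window=1):
    """Estimate a per-pixel disparity for one rectified scanline.

    For each left pixel x, try every disparity d in [0, max_disp] and keep
    the one whose window in the right row (centered at x - d) has the
    smallest sum of absolute differences. Pixels too close to the border
    keep disparity 0.
    """
    width = len(left_row)
    disparities = [0] * width
    for x in range(half_window, width - half_window):
        best_cost, best_d = float("inf"), 0
        for d in range(max_disp + 1):
            if x - d - half_window < 0:
                break  # the right-image window would leave the image
            cost = sum(
                abs(left_row[x + k] - right_row[x - d + k])
                for k in range(-half_window, half_window + 1)
            )
            if cost < best_cost:
                best_cost, best_d = cost, d
        disparities[x] = best_d
    return disparities
```

Learned methods such as those listed below replace the raw-intensity SAD cost with costs computed on learned features, and replace the per-pixel argmin with cost aggregation and regression.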

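3D reconstruction can be seen as the inverse of camera imaging, similar to how people observe objects in 3D space with two eyes. It builds on the preceding steps (image acquisition, camera calibration, feature extraction, and stereo matching) to obtain the cameras' intrinsic and extrinsic parameters and the correspondences between image feature points. The disparity principle and triangulation are then used to compute 3D coordinates, recover the depth of the measured object, and complete the reconstruction.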
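
The final triangulation step can be sketched for the simplest case, a rectified pair with known intrinsics. The parameter names (`focal_px`, `baseline`, `cx`, `cy`) are assumptions for this example; coordinates are returned in the left camera frame, in the same unit as the baseline.

```python
def reproject_to_3d(u, v, disparity, focal_px, baseline, cx, cy):
    """Back-project a left-image pixel (u, v) with a known disparity to 3D.

    Assumes an ideal rectified stereo pair: identical focal length in
    pixels for both cameras, principal point (cx, cy), and a purely
    horizontal baseline.
    """
    if disparity <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    z = focal_px * baseline / disparity   # depth from disparity
    x = (u - cx) * z / focal_px           # undo the perspective projection
    y = (v - cy) * z / focal_px
    return x, y, z
```

With a 700-pixel focal length and a 0.1 m baseline, a disparity of 35 pixels corresponds to a depth of about 2 m.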

Paper

0. End-to-End Learning of Geometry and Context for Deep Stereo Regression

1. StereoNet: Guided Hierarchical Refinement for Real-Time Edge-Aware Depth Prediction

https://arxiv.org/pdf/1807.08865.pdf

Reimplementation code: meteorshowers/StereoNet

2. Single View Stereo Matching

https://arxiv.org/pdf/1803.02612.pdf

https://github.com/lawy623/SVS

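The Single View Stereo Matching (SVS) model decomposes monocular depth estimation into two sub-processes: view synthesis and stereo matching.

View synthesis is handled by a view synthesis network: given the left image, it synthesizes the corresponding right image. Stereo matching is handled by a stereo matching network: given the left image and the synthesized right image, it predicts a disparity value for every pixel of the left image.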

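View synthesis network: the left image is first shifted by several different pixel distances to produce multiple images, a neural network then predicts combination coefficients, and the shifted left images are combined with these coefficients to form the predicted right image. Concretely, the network is based on the Deep3D (ECCV 2016) model. Given a left image, the backbone extracts features at several scales, upsampling layers bring those features to a common size, and they are summed into a fused output feature from which a probabilistic disparity map is predicted. Finally, a selection module combines the probabilistic disparity map with the input left image to produce the predicted right image.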
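
The selection step described above (shift the left image by several offsets, then blend the shifted copies with per-pixel probabilities) can be sketched on a single scanline. This is an illustration of the idea rather than the Deep3D or SVS implementation: the sign convention `right[x] = left[x + d]`, the border clamping, and the function names are assumptions.

```python
import math


def softmax(logits):
    """Turn raw per-shift scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def synthesize_right_row(left_row, probs, shifts):
    """Blend shifted copies of a left scanline into a right scanline.

    probs[x][k] is the probability that output pixel x should be taken
    from the left row shifted by shifts[k]; sampling positions are
    clamped at the image border.
    """
    width = len(left_row)
    right = []
    for x in range(width):
        value = 0.0
        for p, d in zip(probs[x], shifts):
            src = min(max(x + d, 0), width - 1)
            value += p * left_row[src]
        right.append(value)
    return right
```

Because the output is a probability-weighted sum rather than a hard selection, the operation is differentiable, which is what allows the coefficients to be learned end to end.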

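Stereo matching network: stereo matching pairs each left-image pixel with its corresponding right-image pixel and computes the depth of the left pixel from the offset between the two. Earlier monocular depth estimation methods could not explicitly introduce a geometric constraint of this kind. Deep learning has greatly improved the performance of stereo matching algorithms in recent years. The stereo matching network in this paper is based on the DispNet (CVPR 2016) model, which reaches good accuracy on the KITTI stereo dataset. The left image and the synthesized right image pass through several convolutional layers, and the resulting features go through a 1D correlation operation. Correlation has been shown to play a key role in deep stereo matching, and it is what lets this method introduce the geometric constraint explicitly. The correlation output is concatenated with the feature map extracted from the left image and fed to an encoder-decoder network, which predicts the final disparity map.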
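
A 1D correlation compares the left feature at each position with right features displaced along the same row. The sketch below works on one scanline of feature vectors and uses one-directional displacements with channel-averaged dot products; it is a simplified illustration, not the exact DispNet layer, and the function name is made up for this example.

```python
def correlation_1d(left_feat, right_feat, max_disp):
    """1D correlation along a scanline.

    left_feat and right_feat are lists (over x) of equal-length feature
    vectors. Returns volume[d][x], the channel-averaged dot product of
    left_feat[x] and right_feat[x - d], or 0.0 where x - d falls outside
    the image.
    """
    width = len(left_feat)
    channels = len(left_feat[0])
    volume = []
    for d in range(max_disp + 1):
        row = []
        for x in range(width):
            if x - d < 0:
                row.append(0.0)
            else:
                dot = sum(l * r for l, r in zip(left_feat[x], right_feat[x - d]))
                row.append(dot / channels)
        volume.append(row)
    return volume
```

Each displacement contributes one channel of the output, so the following layers see, for every pixel, a matching score for each candidate disparity.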

3. Zoom and Learn: Generalizing Deep Stereo Matching to Novel Domains

https://arxiv.org/pdf/1803.06641

4. Self-Supervised Learning for Stereo Matching with Self-Improving Ability

5. Unsupervised Learning of Stereo Matching

6. Pyramid Stereo Matching Network

7. Learning for Disparity Estimation through Feature Constancy

8. SegStereo: Exploiting Semantic Information for Disparity

9. DispSegNet: Leveraging Semantics for End-to-End Learning of Disparity Estimation from Stereo Imagery

- Hierarchical Discrete Distribution Decomposition for Match Density Estimation

- https://paperswithcode.com/paper/srh-net-stacked-recurrent-hourglass-network test.py

- https://paperswithcode.com/paper/generalized-closed-form-formulae-for-feature C++

- https://paperswithcode.com/paper/plantstereo-a-stereo-matching-benchmark-for 985??

- https://paperswithcode.com/paper/rational-polynomial-camera-model-warping-for Wuhan University, remote sensing

- https://paperswithcode.com/paper/resdepth-a-deep-prior-for-3d-reconstruction remote-sensing 3D reconstruction

- https://paperswithcode.com/task/stereo-matching-1/latest

- https://paperswithcode.com/paper/global-local-path-networks-for-monocular ing

- https://github.com/ArminMasoumian/GCNDepth/blob/main/mono/apis/trainer.py

- https://github.com/hyBlue/FSRE-Depth

- https://github.com/YvanYin/DiverseDepth soon

- https://github.com/iro-cp/FCRN-DepthPrediction

Stereo:

- CFNet: Cascade and Fused Cost Volume for Robust Stereo Matching https://github.com/gallenszl/CFNet Pretrained

- ESNet: An Efficient Stereo Matching Network https://github.com/macrohuang1993/ESNet

- Hierarchical Neural Architecture Search for Deep Stereo Matching [NeurIPS 20] https://github.com/XuelianCheng/LEAStereo

- YOLOStereo3D: A Step Back to 2D for Efficient Stereo 3D Detection https://github.com/Owen-Liuyuxuan/visualDet3D

- Domain-invariant Stereo Matching Networks https://github.com/feihuzhang/DSMNet

- Cascade Cost Volume for High-Resolution Multi-View Stereo and Stereo Matching https://github.com/alibaba/cascade-stereo/tree/master/CasStereoNet

- FADNet: A Fast and Accurate Network for Disparity Estimation https://github.com/HKBU-HPML/FADNet released the pre-trained Scene Flow model.

- AANet: Adaptive Aggregation Network for Efficient Stereo Matching, CVPR 2020 https://github.com/haofeixu/aanet

- RAFT-Stereo: Multilevel Recurrent Field Transforms for Stereo Matching https://github.com/princeton-vl/raft-stereo pretrained

- MobileStereoNet: Towards Lightweight Deep Networks for Stereo Matching https://github.com/cogsys-tuebingen/mobilestereonet pretrained

- Correlate-and-Excite: Real-Time Stereo Matching via Guided Cost Volume Excitation https://github.com/antabangun/coex

- Pyramid Stereo Matching Network https://github.com/JiaRenChang/PSMNet pretrained

- Anytime Stereo Image Depth Estimation on Mobile Devices https://github.com/mileyan/AnyNet pretrained

- StereoNet: Guided Hierarchical Refinement for Real-Time Edge-Aware Depth Prediction https://github.com/andrewlstewart/StereoNet_PyTorch

Monocular:

- M4Depth: A motion-based approach for monocular depth estimation on video sequences https://github.com/michael-fonder/M4Depth pretrained

- Towards Interpretable Deep Networks for Monocular Depth Estimation https://github.com/youzunzhi/interpretablemde pretrained

- StructDepth: Leveraging the structural regularities for self-supervised indoor depth estimation https://github.com/sjtu-visys/structdepth pretrained

- Photon-Starved Scene Inference using Single Photon Cameras https://github.com/bhavyagoyal/spclowlight/tree/master/monodepth

- Single Image Depth Prediction with Wavelet Decomposition https://github.com/nianticlabs/wavelet-monodepth/tree/main/KITTI pretrained

- Boosting Monocular Depth Estimation Models to High-Resolution via Content-Adaptive Multi-Resolution Merging https://github.com/compphoto/BoostingMonocularDepth

- GCNDepth: Self-supervised Monocular Depth Estimation based on Graph Convolutional Network https://github.com/arminmasoumian/gcndepth

- From Big to Small: Multi-Scale Local Planar Guidance for Monocular Depth Estimation https://github.com/cleinc/bts

- High Quality Monocular Depth Estimation via Transfer Learning https://github.com/ialhashim/DenseDepth

- DORN: Deep Ordinal Regression Network for Monocular Depth Estimation https://github.com/hufu6371/DORN caffe pretrained

- Channel-Wise Attention-Based Network for Self-Supervised Monocular Depth Estimation https://github.com/kamiLight/CADepth-master pretrained

- Self-Supervised Monocular Depth Estimation with Internal Feature Fusion (arXiv) https://github.com/brandleyzhou/DIFFNet pretrained

Dataset

1. KITTI

A. Geiger, P. Lenz, and R. Urtasun. Are we ready for autonomous driving? The KITTI vision benchmark suite. In CVPR, 2012.

https://gas.graviti.cn/dataset/hello-dataset/KITTIStereo2015/download

http://www.cvlibs.net/datasets/kitti/

2. Flyingthings3D

https://lmb.informatik.uni-freiburg.de/resources/datasets/SceneFlowDatasets.en.html

ReDWeb V1 dataset https://sites.google.com/site/redwebcvpr18/

3. InStereo2K

https://gitcode.net/mirrors/YuhuaXu/StereoDataset?utm_source=csdn_github_accelerator

4. https://www.eth3d.net/overview

ETH3D

5. http://vision.middlebury.edu/stereo/

Middlebury: available at three resolution sizes
