This article introduces the Iris and moons datasets and applies linear discriminant analysis (LDA), k-means, and SVM to each of them, visualizing the two-class results.

Contents

- I. Linear LDA
  - 1. Iris LDA
  - 2. Moons LDA
- II. K-means
  - 1. Iris k-means
  - 2. Moons k-means
- III. SVM
  - 1. Iris SVM
  - 2. Moons SVM
- IV. Pros and cons of SVM
  - Pros
  - Cons
- V. References

I. Linear LDA

1. Iris LDA

import numpy as np
import matplotlib.pyplot as plt
from sklearn import datasets


def func(x, w):
    # Project the samples x onto the direction w
    return np.dot(x, w)


def LDA(X, y):
    # Split X into two classes according to whether y is 0 or 1
    X1 = np.array([X[i] for i in range(len(X)) if y[i] == 0])
    X2 = np.array([X[i] for i in range(len(X)) if y[i] == 1])
    len1 = len(X1)
    len2 = len(X2)
    # Class centers
    mju1 = np.mean(X1, axis=0)
    mju2 = np.mean(X2, axis=0)
    # Within-class scatter matrices and their sum Sw
    cov1 = np.dot((X1 - mju1).T, (X1 - mju1))
    cov2 = np.dot((X2 - mju2).T, (X2 - mju2))
    Sw = cov1 + cov2
    # Projection direction w = Sw^-1 (mju1 - mju2), as a column vector
    a = mju1 - mju2
    a = (np.array([a])).T
    w = np.dot(np.linalg.inv(Sw), a)
    # Project both classes onto w
    X1_new = func(X1, w)
    X2_new = func(X2, w)
    y1_new = [1 for i in range(len1)]
    y2_new = [2 for i in range(len2)]
    return X1_new, X2_new, y1_new, y2_new


iris = datasets.load_iris()
X = iris["data"][:, (2, 3)]  # petal length, petal width
y = iris["target"]

# Keep only the first two classes (setosa and versicolor)
setosa_or_versicolor = (y == 0) | (y == 1)
X = X[setosa_or_versicolor]
y = y[setosa_or_versicolor]

X1_new, X2_new, y1_new, y2_new = LDA(X, y)

# Scatter plot of the two original features, colored by class
plt.scatter(X[:, 0], X[:, 1], marker='o', c=y)
plt.xlabel('Petal length')
plt.ylabel('Petal width')
plt.title("Iris_LDA")
plt.show()
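The plot above shows the raw features only; the projected values returned by LDA are not drawn. As a rough sketch of how the projection can be used to separate the classes, the snippet below recomputes the same direction w = Sw^-1 (mu0 - mu1) and applies a midpoint threshold. The helper name `fisher_direction` and the midpoint rule are assumptions made for illustration, not part of the original code.

```python
import numpy as np
from sklearn import datasets

iris = datasets.load_iris()
X = iris["data"][:, (2, 3)]            # petal length, petal width
y = iris["target"]
mask = (y == 0) | (y == 1)             # setosa vs. versicolor
X, y = X[mask], y[mask]


def fisher_direction(X, y):
    """Return w = Sw^-1 (mu0 - mu1) for a two-class problem (hypothetical helper)."""
    X0, X1 = X[y == 0], X[y == 1]
    mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
    Sw = (X0 - mu0).T @ (X0 - mu0) + (X1 - mu1).T @ (X1 - mu1)
    return np.linalg.solve(Sw, mu0 - mu1)


w = fisher_direction(X, y)
z = X @ w                              # 1-D projection of every sample

# Assumed decision rule: threshold halfway between the projected class means.
# Because w points from mu1 towards mu0, class 0 projects to the larger values.
threshold = 0.5 * (z[y == 0].mean() + z[y == 1].mean())
y_pred = np.where(z > threshold, 0, 1)
accuracy = (y_pred == y).mean()
print("w =", w, "threshold =", threshold, "accuracy =", accuracy)
```

Using `np.linalg.solve` instead of an explicit inverse is a numerical-stability choice; it yields the same direction as `np.linalg.inv(Sw) @ (mu0 - mu1)`.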

2. Moons LDA

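import numpy as np
import matplotlib.pyplot as plt
from sklearn import datasets


def func(x, w):
    # Project the samples x onto the direction w
    return np.dot(x, w)


def LDA(X, y):
    # Split X into two classes according to whether y is 0 or 1
    X1 = np.array([X[i] for i in range(len(X)) if y[i] == 0])
    X2 = np.array([X[i] for i in range(len(X)) if y[i] == 1])
    len1 = len(X1)
    len2 = len(X2)
    # Class centers
    mju1 = np.mean(X1, axis=0)
    mju2 = np.mean(X2, axis=0)
    # Within-class scatter matrices and their sum Sw
    cov1 = np.dot((X1 - mju1).T, (X1 - mju1))
    cov2 = np.dot((X2 - mju2).T, (X2 - mju2))
    Sw = cov1 + cov2
    # Projection direction w = Sw^-1 (mju1 - mju2), as a column vector
    a = mju1 - mju2
    a = (np.array([a])).T
    w = np.dot(np.linalg.inv(Sw), a)
    # Project both classes onto w
    X1_new = func(X1, w)
    X2_new = func(X2, w)
    y1_new = [1 for i in range(len1)]
    y2_new = [2 for i in range(len2)]
    return X1_new, X2_new, y1_new, y2_new


X, y = datasets.make_moons(n_samples=100, noise=0.15, random_state=42)
X1_new, X2_new, y1_new, y2_new = LDA(X, y)

# Scatter plot of the two original features, colored by class
plt.scatter(X[:, 0], X[:, 1], marker='o', c=y)
plt.title("moon_LDA")
plt.show()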
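To get a number for how well a linear discriminant handles the interleaved moons, here is a minimal sketch that swaps the hand-written function for scikit-learn's `LinearDiscriminantAnalysis`. This is a substitution made for brevity, and the accuracy is measured on the training data itself. Since the two half-moons interlock, a straight boundary typically misclassifies some points.

```python
from sklearn.datasets import make_moons
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

# Same data as in the section above
X, y = make_moons(n_samples=100, noise=0.15, random_state=42)

# Library LDA used here instead of the hand-written LDA() function
lda = LinearDiscriminantAnalysis()
lda.fit(X, y)

moon_lda_accuracy = lda.score(X, y)    # training accuracy, not a held-out estimate
print("LDA training accuracy on moons:", moon_lda_accuracy)
```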

II. K-means

1. Iris k-means

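from sklearn import datasets
import matplotlib.pyplot as plt
from sklearn.cluster import KMeans

# Load the dataset; the result is a dict-like object (similar to a Map in Java)
iris_df = datasets.load_iris()

# Use sepal length (column 0) and petal length (column 2) as the x and y axes;
# any other pair of columns works as well
x_axis = iris_df.data[:, 0]
y_axis = iris_df.data[:, 2]

# n_init and random_state are set explicitly so the clustering is reproducible
model = KMeans(n_clusters=2, n_init=10, random_state=42)

# Train the model on all four features
model.fit(iris_df.data)

# Predict the cluster of the sample at row index 100
predicted_label = model.predict([[6.3, 3.3, 6, 2.5]])

# Predict the cluster of all 150 samples
all_predictions = model.predict(iris_df.data)

# Scatter plot of the 150 samples, colored by cluster
plt.scatter(x_axis, y_axis, c=all_predictions)
plt.title("Iris_KMeans")
plt.show()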
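Because k-means never sees the species labels, it helps to check how the two clusters line up with the three species. The sketch below builds a small contingency table with NumPy; the variable names and the fixed `random_state` are assumptions added for illustration.

```python
import numpy as np
from sklearn import datasets
from sklearn.cluster import KMeans

iris = datasets.load_iris()

# Fixed seed so the cluster assignment is reproducible
model = KMeans(n_clusters=2, n_init=10, random_state=42)
labels = model.fit_predict(iris.data)

# Rows: species (setosa, versicolor, virginica); columns: cluster 0 and cluster 1
table = np.array([
    [np.sum((iris.target == species) & (labels == cluster)) for cluster in range(2)]
    for species in range(3)
])

for name, row in zip(iris.target_names, table):
    print(name, row)
```

Cluster ids are arbitrary, so which column a species lands in can differ between runs with other seeds; only the grouping matters.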

2. Moons k-means

# Cluster the moons dataset with k-means
import matplotlib.pyplot as plt
from sklearn.cluster import KMeans
from sklearn.datasets import make_moons

X, y = make_moons(n_samples=100, shuffle=True, noise=0.15, random_state=42)

clf = KMeans(n_clusters=2)

# k-means is unsupervised, so the labels y are not needed for fitting
clf.fit(X)
predicted = clf.predict(X)

# Scatter plot of the samples, colored by cluster
plt.scatter(X[:, 0], X[:, 1], c=predicted, marker='s', s=100, cmap=plt.cm.Paired)
plt.title("Moon_KMeans")
plt.show()
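With two centroids, k-means splits the plane along a straight line, so it cannot follow the curved shape of the moons. A quick way to see this numerically is to compare the clusters with the true labels. The snippet is a sketch under the assumption that agreement is measured after picking the better of the two possible label assignments, since cluster ids are arbitrary.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_moons

X, y = make_moons(n_samples=100, shuffle=True, noise=0.15, random_state=42)

# Fixed seed so the result is reproducible
predicted = KMeans(n_clusters=2, n_init=10, random_state=42).fit_predict(X)

# Cluster ids 0/1 are arbitrary, so also consider the flipped assignment
raw = np.mean(predicted == y)
agreement = max(raw, 1 - raw)
print("Agreement between k-means clusters and true moons:", agreement)
```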

III. SVM

1. Iris SVM

import numpy as np
import matplotlib.pyplot as plt
from sklearn.svm import SVC
from sklearn import datasets

iris = datasets.load_iris()
X = iris["data"][:, (2, 3)]  # petal length, petal width
y = iris["target"]

# Keep only the first two classes (setosa and versicolor)
setosa_or_versicolor = (y == 0) | (y == 1)
X = X[setosa_or_versicolor]
y = y[setosa_or_versicolor]

# SVM classifier model

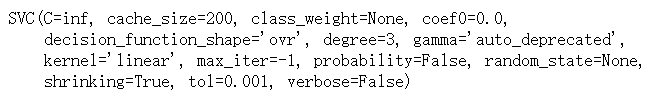

# A very large C approximates a hard margin; some recent scikit-learn
# versions reject C=float("inf")
svm_clf = SVC(kernel="linear", C=1e10)
svm_clf.fit(X, y)


def plot_svc_decision_boundary(svm_clf, xmin, xmax):
    # Get w and b of the decision boundary
    w = svm_clf.coef_[0]
    b = svm_clf.intercept_[0]
    # At the decision boundary, w0*x0 + w1*x1 + b = 0
    # => x1 = -w0/w1 * x0 - b/w1
    x0 = np.linspace(xmin, xmax, 200)
    # Thick center line
    decision_boundary = -w[0]/w[1] * x0 - b/w[1]
    # Vertical offset of the margin lines, where w.x + b = +1 or -1
    margin = 1/w[1]
    gutter_up = decision_boundary + margin
    gutter_down = decision_boundary - margin
    # Highlight the support vectors
    svs = svm_clf.support_vectors_
    plt.scatter(svs[:, 0], svs[:, 1], s=180, facecolors='#FFAAAA')
    plt.plot(x0, decision_boundary, "k-", linewidth=2)
    plt.plot(x0, gutter_up, "k--", linewidth=2)
    plt.plot(x0, gutter_down, "k--", linewidth=2)


plt.figure(figsize=(12, 2.7))
plot_svc_decision_boundary(svm_clf, 0, 5.5)
plt.plot(X[:, 0][y==1], X[:, 1][y==1], "bs")
plt.plot(X[:, 0][y==0], X[:, 1][y==0], "yo")
plt.xlabel("Petal length", fontsize=14)
plt.ylabel("Petal width", fontsize=14)
plt.axis([0, 5.5, 0, 2])
plt.title("Iris_svm")
plt.show()
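The dashed lines in the plot mark where w·x + b equals +1 or -1, so the margin width is 2/||w||. The following sketch reads these quantities off a fitted model. It assumes a large finite C (1e10) as a stand-in for a hard margin, which is a choice made here rather than something the plotting code requires.

```python
import numpy as np
from sklearn import datasets
from sklearn.svm import SVC

iris = datasets.load_iris()
X = iris["data"][:, (2, 3)]            # petal length, petal width
y = iris["target"]
mask = (y == 0) | (y == 1)
X, y = X[mask], y[mask]

# Assumption: a very large C approximates a hard-margin SVM
svm_clf = SVC(kernel="linear", C=1e10)
svm_clf.fit(X, y)

w = svm_clf.coef_[0]
b = svm_clf.intercept_[0]

# Distance between the two dashed margin lines
margin_width = 2 / np.linalg.norm(w)

# y_i * (w.x_i + b) for every sample; close to 1 for support vectors, larger otherwise
signs = np.where(y == 1, 1, -1)
functional_margins = signs * (X @ w + b)

print("support vectors:\n", svm_clf.support_vectors_)
print("margin width:", margin_width)
print("smallest functional margin:", functional_margins.min())
```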

2. Moons SVM

import numpy as np
import matplotlib.pyplot as plt
from sklearn.datasets import make_moons
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PolynomialFeatures
from sklearn.preprocessing import StandardScaler
from sklearn.svm import LinearSVC

X, y = make_moons(n_samples=100, noise=0.15, random_state=42)

polynomial_svm_clf = Pipeline([
    # Map the source data to degree-3 polynomial features
    ("poly_features", PolynomialFeatures(degree=3)),
    # Standardize the features
    ("scaler", StandardScaler()),
    # Linear SVM classifier
    ("svm_clf", LinearSVC(C=10, loss="hinge", random_state=42))
])

polynomial_svm_clf.fit(X, y)


def plot_dataset(X, y, axes):
    # Draw the two classes with different markers
    plt.plot(X[:, 0][y==0], X[:, 1][y==0], "bs")
    plt.plot(X[:, 0][y==1], X[:, 1][y==1], "g^")
    plt.axis(axes)
    plt.grid(True, which='both')


def plot_predictions(clf, axes):
    # Build a grid of points covering the plotting area
    x0s = np.linspace(axes[0], axes[1], 100)
    x1s = np.linspace(axes[2], axes[3], 100)
    x0, x1 = np.meshgrid(x0s, x1s)
    X = np.c_[x0.ravel(), x1.ravel()]
    # Predicted class and decision-function value at every grid point
    y_pred = clf.predict(X).reshape(x0.shape)
    y_decision = clf.decision_function(X).reshape(x0.shape)
    plt.contourf(x0, x1, y_pred, cmap=plt.cm.brg, alpha=0.2)
    plt.contourf(x0, x1, y_decision, cmap=plt.cm.brg, alpha=0.1)


plot_predictions(polynomial_svm_clf, [-1.5, 2.5, -1, 1.5])
plot_dataset(X, y, [-1.5, 2.5, -1, 1.5])
plt.title("moon_svm")
plt.show()
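To see what the polynomial features contribute, one can fit the same pipeline with degree 1 (purely linear) and degree 3 and compare training accuracy. This is a sketch: the helper `make_clf` and the raised `max_iter` (to give the solver room to converge) are assumptions, and training accuracy is only a rough indicator, not a held-out score.

```python
from sklearn.datasets import make_moons
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler
from sklearn.svm import LinearSVC

X, y = make_moons(n_samples=100, noise=0.15, random_state=42)


def make_clf(degree):
    """Hypothetical helper: the pipeline from above with a configurable degree."""
    return Pipeline([
        ("poly_features", PolynomialFeatures(degree=degree)),
        ("scaler", StandardScaler()),
        ("svm_clf", LinearSVC(C=10, loss="hinge", max_iter=100000, random_state=42)),
    ])


# Training accuracy for a linear (degree 1) and a cubic (degree 3) feature map
scores = {degree: make_clf(degree).fit(X, y).score(X, y) for degree in (1, 3)}
for degree, score in scores.items():
    print("degree", degree, "training accuracy", score)
```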

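IV. Pros and cons of SVM

Pros

1. A kernel function maps the data into a higher-dimensional feature space.

2. With a kernel, SVM can handle classification problems that are not linearly separable.

3. The underlying idea is simple: maximize the margin between the samples and the decision boundary.

4. Classification results are usually good in practice.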
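As a small illustration of the first two points, the sketch below fits `SVC` on the moons data with a linear kernel and with an RBF kernel. The values `gamma=2` and `C=10` are arbitrary choices for this example, not tuned settings, and the scores are training accuracies.

```python
from sklearn.datasets import make_moons
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = make_moons(n_samples=100, noise=0.15, random_state=42)

kernel_scores = {}
for kernel in ("linear", "rbf"):
    # gamma is ignored by the linear kernel; gamma=2 and C=10 are illustrative values
    clf = make_pipeline(StandardScaler(), SVC(kernel=kernel, gamma=2, C=10))
    kernel_scores[kernel] = clf.fit(X, y).score(X, y)

print(kernel_scores)
```

The kernel lets the classifier draw a curved boundary in the original two features without building the high-dimensional features explicitly.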

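Cons

1. Training is hard to scale to large datasets, because the cost of kernel SVM training grows faster than linearly with the number of samples.

2. The basic formulation is binary and does not support multiclass problems directly, although indirect strategies such as one-vs-rest or one-vs-one can be used.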
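The indirect multiclass strategies can be sketched on the full three-class Iris data. The example uses scikit-learn's `OneVsRestClassifier` wrapper for one-vs-rest and the one-vs-one scheme that `SVC` applies internally; the estimator settings are assumptions chosen for illustration.

```python
from sklearn import datasets
from sklearn.multiclass import OneVsRestClassifier
from sklearn.svm import SVC, LinearSVC

iris = datasets.load_iris()
X, y = iris.data, iris.target          # all three species

# One-vs-rest: one binary classifier per class
ovr = OneVsRestClassifier(LinearSVC(max_iter=10000, random_state=42)).fit(X, y)
n_ovr = len(ovr.estimators_)

# One-vs-one: one binary classifier per pair of classes (3 pairs for 3 classes)
ovo = SVC(kernel="linear", decision_function_shape="ovo").fit(X, y)
ovo_shape = ovo.decision_function(X[:1]).shape
ovo_accuracy = ovo.score(X, y)         # training accuracy

print("one-vs-rest classifiers:", n_ovr)
print("one-vs-one decision values per sample:", ovo_shape[1])
print("one-vs-one training accuracy:", ovo_accuracy)
```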

V. References

https://blog.csdn.net/qq_45213986/article/details/106186415?fps=1&locationNum=2?ops_request_misc=&request_id=&biz_id=102&utm_term=python%E5%AE%9E%E7%8E%B0%E9%B8%A2%E5%B0%BE%E8%8A%B1LDA&utm_medium=distribute.pc_search_result.none-task-blog-2allsobaiduweb~default-1-106186415

https://blog.csdn.net/zrh_CSDN/article/details/80934248
