
GAMES101-Assignment3

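Reference articles:

1. "GAMES101" assignment framework issues explained in detail

2. Games101: Assignment 3 (pipeline analysis, depth interpolation, libpng warning, bilinear interpolation, etc.)

3. [GAMES101] Assignment 3 (advanced part), normal mapping principles, and rendering pipeline framework analysis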

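File list:

CMakeLists.txt (project configuration; cmake uses it to configure, build, and test the project)

OBJ_Loader.h (loads 3D models)

global.hpp (defines the global constant MY_PI)

rasterizer.hpp (rasterizer header)

Texture.hpp (declares the texture width and height and the function getColor, which returns the pixel color at a given texture coordinate)

Triangle.hpp (triangle header; defines the triangle's attributes)

Shader.hpp (declares color, normal, texture, and texture coordinates to support texture mapping; defines fragment_shader_payload, which holds the parameters a fragment shader may need)

rasterizer.cpp (implements the rasterizer and the drawing routines)

Texture.cpp (currently an empty file)

Triangle.cpp (implements the triangle class)

main.cpp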

1. Requirements

2. Implementation and results

2.1 rasterize_triangle in rasterizer.cpp

barycentric

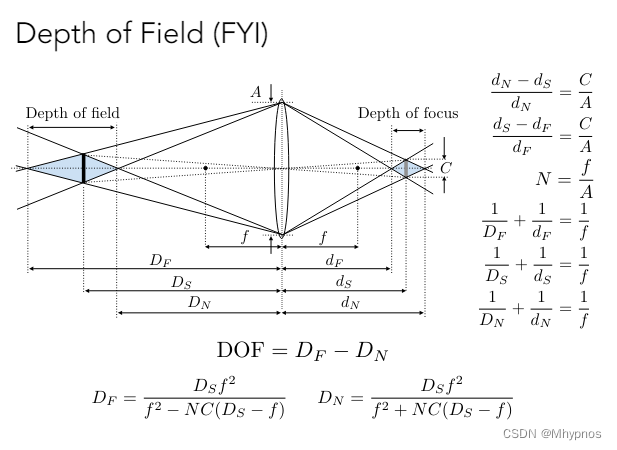

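For each triangle, take the color, normal, texture coordinates, and shading-point position at its three vertices, interpolate each attribute across the triangle with barycentric coordinates, and pass the interpolated attributes to fragment_shader_payload.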
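
The interpolation step can be sketched without the assignment framework. The block below is a minimal illustration, assuming a hypothetical `Vec2` type and free functions `barycentric` and `interpolate` that are not part of the GAMES101 code (the framework provides its own `computeBarycentric2D` and `interpolate` on Eigen types).

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Hypothetical minimal 2D point type for this sketch (the assignment uses Eigen vectors).
struct Vec2 { float x, y; };

// Barycentric weights (alpha, beta, gamma) of point p with respect to triangle (a, b, c).
std::array<float, 3> barycentric(Vec2 p, Vec2 a, Vec2 b, Vec2 c)
{
    // Twice the signed area of the whole triangle.
    float area = (b.x - a.x) * (c.y - a.y) - (c.x - a.x) * (b.y - a.y);
    assert(std::fabs(area) > 1e-12f && "degenerate triangle");
    // Each weight is the signed area of the sub-triangle opposite a vertex, divided by the full area.
    float alpha = ((b.x - p.x) * (c.y - p.y) - (c.x - p.x) * (b.y - p.y)) / area;
    float beta  = ((c.x - p.x) * (a.y - p.y) - (a.x - p.x) * (c.y - p.y)) / area;
    float gamma = 1.0f - alpha - beta;
    return {alpha, beta, gamma};
}

// Weighted sum of one scalar attribute stored at the three vertices.
float interpolate(const std::array<float, 3>& w, float f0, float f1, float f2)
{
    return w[0] * f0 + w[1] * f1 + w[2] * f2;
}
```

For the triangle (0,0), (4,0), (0,4) and the point (1,1), the weights come out as (0.5, 0.25, 0.25); colors, normals, and texture coordinates are interpolated the same way, one component at a time.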

// Screen space rasterization
void rst::rasterizer::rasterize_triangle(const Triangle& t, const std::array<Eigen::Vector3f, 3>& view_pos)
{
    // TODO: From your HW3, get the triangle rasterization code.
    // TODO: Inside your rasterization loop:
    //    * v[i].w() is the vertex view space depth value z.
    //    * Z is interpolated view space depth for the current pixel
    //    * zp is depth between zNear and zFar, used for z-buffer
    // float Z = 1.0 / (alpha / v[0].w() + beta / v[1].w() + gamma / v[2].w());
    // float zp = alpha * v[0].z() / v[0].w() + beta * v[1].z() / v[1].w() + gamma * v[2].z() / v[2].w();
    // zp *= Z;
    // TODO: Interpolate the attributes:
    // auto interpolated_color
    // auto interpolated_normal
    // auto interpolated_texcoords
    // auto interpolated_shadingcoords
    // Use: fragment_shader_payload payload( interpolated_color, interpolated_normal.normalized(), interpolated_texcoords, texture ? &*texture : nullptr);
    // Use: payload.view_pos = interpolated_shadingcoords;
    // Use: Instead of passing the triangle's color directly to the frame buffer, pass the color to the shaders first to get the final color;
    // Use: auto pixel_color = fragment_shader(payload);

    auto v = t.toVector4();

    // Bounding box of the triangle in screen space.
    // INT_MAX and INT_MIN stand in for +infinity and -infinity as initial bounds.
    int BoxMin_X = INT_MAX, BoxMin_Y = INT_MAX;
    int BoxMax_X = INT_MIN, BoxMax_Y = INT_MIN;
    for (int i = 0; i < 3; i++)
    {
        BoxMin_X = std::min(BoxMin_X, (int)v[i][0]);
        BoxMin_Y = std::min(BoxMin_Y, (int)v[i][1]);
        BoxMax_X = std::max(BoxMax_X, (int)v[i][0]);
        BoxMax_Y = std::max(BoxMax_Y, (int)v[i][1]);
    }

    // Visit every pixel inside the bounding box.
    for (int i = BoxMin_X; i <= BoxMax_X; i++)
    {
        for (int j = BoxMin_Y; j <= BoxMax_Y; j++)
        {
            // Check whether pixel (i, j) lies inside the triangle; t.v holds its three vertices.
            if (insideTriangle(i, j, t.v))
            {
                // Barycentric weights at the pixel center.
                auto [alpha, beta, gamma] = computeBarycentric2D(i + 0.5, j + 0.5, t.v);

                // Perspective-correct depth interpolation.
                float w_reciprocal = 1.0 / (alpha / v[0].w() + beta / v[1].w() + gamma / v[2].w());
                float z_interpolated = alpha * v[0].z() / v[0].w() + beta * v[1].z() / v[1].w() + gamma * v[2].z() / v[2].w();
                z_interpolated *= w_reciprocal;

                // Compare the interpolated depth with the depth buffer entry of pixel (i, j).
                if (-z_interpolated < depth_buf[get_index(i, j)])
                {
                    // Interpolate color, normal, texture coordinates, and shading-point position.
                    auto interpolated_color = interpolate(alpha, beta, gamma, t.color[0], t.color[1], t.color[2], 1);
                    auto interpolated_normal = interpolate(alpha, beta, gamma, t.normal[0], t.normal[1], t.normal[2], 1).normalized();
                    auto interpolated_texcoords = interpolate(alpha, beta, gamma, t.tex_coords[0], t.tex_coords[1], t.tex_coords[2], 1);
                    auto interpolated_shadingcoords = interpolate(alpha, beta, gamma, view_pos[0], view_pos[1], view_pos[2], 1);

                    // Pass the interpolated attributes to the fragment shader payload.
                    fragment_shader_payload payload(interpolated_color, interpolated_normal, interpolated_texcoords, texture ? &*texture : nullptr);
                    // The shading point is given in view space.
                    payload.view_pos = interpolated_shadingcoords;

                    // Update the depth buffer.
                    depth_buf[get_index(i, j)] = -z_interpolated;
                    // Shade the fragment and write the resulting color to the frame buffer.
                    frame_buf[get_index(i, j)] = fragment_shader(payload);
                    set_pixel({i, j}, frame_buf[get_index(i, j)]);
                }
            }
        }
    }
}
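
The depth formula quoted in the framework comments (Z and zp) is perspective-correct interpolation: attributes are divided by the view-space depth w before being blended, and the result is rescaled by the interpolated depth. A minimal sketch of that formula on plain floats follows; the function names `interpolate_view_depth` and `interpolate_perspective` are hypothetical and do not exist in the framework.

```cpp
#include <cassert>
#include <cmath>

// View-space depth Z at a pixel, from barycentric weights and the per-vertex depths w0, w1, w2.
float interpolate_view_depth(float alpha, float beta, float gamma,
                             float w0, float w1, float w2)
{
    assert(w0 != 0.0f && w1 != 0.0f && w2 != 0.0f);
    return 1.0f / (alpha / w0 + beta / w1 + gamma / w2);
}

// Perspective-correct interpolation of a scalar attribute f given at the three vertices.
float interpolate_perspective(float alpha, float beta, float gamma,
                              float w0, float w1, float w2,
                              float f0, float f1, float f2)
{
    float Z = interpolate_view_depth(alpha, beta, gamma, w0, w1, w2);
    return Z * (alpha * f0 / w0 + beta * f1 / w1 + gamma * f2 / w2);
}
```

When all three vertices share the same depth, the result reduces to ordinary linear interpolation, which is a quick sanity check for an implementation.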

2.2 get_projection_matrix in main.cpp

MVP transformation

Eigen::Matrix4f get_projection_matrix(float eye_fov, float aspect_ratio, float zNear, float zFar)
{
    // TODO: Use the same projection matrix from the previous assignments
    Eigen::Matrix4f projection = Eigen::Matrix4f::Identity();

    // TODO: Implement this function
    // Create the projection matrix for the given parameters.
    // Then return it.

    // n = zNear, f = zFar
    // Compute r, l, t, b from eye_fov and aspect_ratio:
    //   tan(eye_fov / 2) = t / |n|,  aspect_ratio = r / t
    //   t = |n| * tan(eye_fov / 2),  r = t * aspect_ratio,  l = -r,  b = -t
    eye_fov = (eye_fov / 180.0) * MY_PI; // degrees to radians, needed before calling tan()
    float t = std::abs(zNear) * std::tan(eye_fov / 2), b = -t;
    float r = aspect_ratio * t, l = -r;

    // Perspective projection: M_projection = M_ortho * M_persp_to_ortho
    Eigen::Matrix4f M_persp_to_ortho, M_ortho, M_scale, M_translate;
    M_persp_to_ortho << zNear, 0, 0, 0,
                        0, zNear, 0, 0,
                        0, 0, zNear + zFar, -zNear * zFar,
                        0, 0, 1, 0;

    // M_ortho = scale * translate
    M_scale << 2 / (r - l), 0, 0, 0,
               0, 2 / (t - b), 0, 0,
               0, 0, 2 / (zNear - zFar), 0,
               0, 0, 0, 1;
    M_translate << 1, 0, 0, -(r + l) / 2,
                   0, 1, 0, -(t + b) / 2,
                   0, 0, 1, -(zNear + zFar) / 2,
                   0, 0, 0, 1;
    M_ortho = M_scale * M_translate;

    projection = M_ortho * M_persp_to_ortho * projection;
    return projection;
}
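
The relation tan(eye_fov / 2) = t / |n| used in the comments can be checked in isolation. The sketch below computes the frustum extents from a field of view given in degrees; `FrustumExtent` and `frustum_extent` are hypothetical names introduced only for this illustration.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical holder for the near-plane extents (top, bottom, right, left).
struct FrustumExtent { float t, b, r, l; };

// Near-plane extents for a vertical field of view in degrees, an aspect ratio r / t,
// and a near-plane distance z_near.
FrustumExtent frustum_extent(float fov_deg, float aspect, float z_near)
{
    assert(fov_deg > 0.0f && fov_deg < 180.0f);
    const float pi = 3.14159265358979f;
    float half_fov_rad = fov_deg * 0.5f * pi / 180.0f; // convert to radians before tan()
    float t = std::fabs(z_near) * std::tan(half_fov_rad);
    float r = aspect * t;
    return {t, -t, r, -r};
}
```

With a 90 degree field of view, an aspect ratio of 2, and a near plane at distance 1, this gives t close to 1 and r close to 2, as the formula predicts.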

Activate the corresponding shader in main.cpp.

Build, then run:

./Rasterizer output.png normal

2.3 phong_fragment_shader in main.cpp

Blinn-Phong reflection model: implement the reflection model correctly in phong_fragment_shader.

Eigen::Vector3f phong_fragment_shader(const fragment_shader_payload& payload)
{
    Eigen::Vector3f ka = Eigen::Vector3f(0.005, 0.005, 0.005);
    Eigen::Vector3f kd = payload.color;
    Eigen::Vector3f ks = Eigen::Vector3f(0.7937, 0.7937, 0.7937);

    auto l1 = light{{20, 20, 20}, {500, 500, 500}};
    auto l2 = light{{-20, 20, 0}, {500, 500, 500}};

    std::vector<light> lights = {l1, l2};
    Eigen::Vector3f amb_light_intensity{10, 10, 10};
    Eigen::Vector3f eye_pos{0, 0, 10};

    float p = 150; // exponent p controls the size of the specular highlight

    Eigen::Vector3f color = payload.color;
    Eigen::Vector3f point = payload.view_pos;
    Eigen::Vector3f normal = payload.normal;

    Eigen::Vector3f result_color = {0, 0, 0};
    Eigen::Vector3f l, v, dif, h, spe, amb;
    for (auto& light : lights)
    {
        // TODO: For each light source in the code, calculate what the *ambient*, *diffuse*, and *specular*
        // components are. Then, accumulate that result on the *result_color* object.

        // Light direction l and view direction v.
        l = (light.position - point).normalized();
        v = (eye_pos - point).normalized();
        // Squared distance r^2 from the light to the shading point.
        float r2 = (light.position - point).dot(light.position - point);

        // Diffuse: L_d = kd * (I / r^2) * max(0, n . l)
        dif = kd.cwiseProduct(light.intensity / r2) * std::max(0.0f, normal.dot(l));
        // Half vector: h = (v + l) / ||v + l||
        h = (v + l).normalized();
        // Specular: L_s = ks * (I / r^2) * max(0, n . h)^p
        spe = ks.cwiseProduct(light.intensity / r2) * std::pow(std::max(0.0f, normal.dot(h)), p);
        // Ambient: L_a = ka * I_a
        amb = ka.cwiseProduct(amb_light_intensity);

        result_color += (dif + spe + amb);
    }

    return result_color * 255.f;
}
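
To see the diffuse and specular terms at work on concrete numbers, here is a minimal single-channel sketch of the same formulas without Eigen. The `Vec3` type and the function `blinn_phong_channel` are hypothetical helpers for this illustration only; the ambient term is left out because it does not depend on the light direction.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical minimal 3D vector type for this sketch.
struct Vec3 { float x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Returns the unit vector, or the input unchanged when its length is zero.
Vec3 normalized(Vec3 a)
{
    float len = std::sqrt(dot(a, a));
    if (len == 0.0f) return a;
    return {a.x / len, a.y / len, a.z / len};
}

// Diffuse + specular contribution of one light for one color channel.
float blinn_phong_channel(Vec3 n, Vec3 point, Vec3 light_pos, Vec3 eye_pos,
                          float kd, float ks, float intensity, float p)
{
    assert(p > 0.0f);
    Vec3 to_light = light_pos - point;
    float r2 = dot(to_light, to_light);   // squared distance to the light
    Vec3 l = normalized(to_light);        // light direction
    Vec3 v = normalized(eye_pos - point); // view direction
    Vec3 h = normalized(l + v);           // half vector
    float diffuse  = kd * (intensity / r2) * std::max(0.0f, dot(n, l));
    float specular = ks * (intensity / r2) * std::pow(std::max(0.0f, dot(n, h)), p);
    return diffuse + specular;
}
```

With the normal, light, and eye all on the +z axis, both max(0, n . l) and max(0, n . h) equal 1, so the result is simply (kd + ks) * I / r^2. A light behind the surface contributes nothing.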

Activate the corresponding shader in main.cpp.

Build, then run:

./Rasterizer output.png phong

2.4 texture_fragment_shader in main.cpp

Texture mapping: copy the code of phong_fragment_shader into texture_fragment_shader, then implement texture mapping on top of it.

In phong_fragment_shader, the color (kd) is payload.color.

In texture_fragment_shader, the color (kd) is texture_color / 255.

Eigen::Vector3f texture_fragment_shader(const fragment_shader_payload& payload)
{
    Eigen::Vector3f return_color = {0, 0, 0};
    if (payload.texture)
    {
        // TODO: Get the texture value at the texture coordinates of the current fragment
        return_color = payload.texture->getColor(payload.tex_coords.x(), payload.tex_coords.y());
    }
    Eigen::Vector3f texture_color;
    texture_color << return_color.x(), return_color.y(), return_color.z();

    // Ambient coefficient ka
    Eigen::Vector3f ka = Eigen::Vector3f(0.005, 0.005, 0.005);
    // Diffuse coefficient kd, taken from the texture
    Eigen::Vector3f kd = texture_color / 255.f;
    // Specular coefficient ks
    Eigen::Vector3f ks = Eigen::Vector3f(0.7937, 0.7937, 0.7937);

    // Light positions and intensities
    auto l1 = light{{20, 20, 20}, {500, 500, 500}};
    auto l2 = light{{-20, 20, 0}, {500, 500, 500}};

    std::vector<light> lights = {l1, l2};
    Eigen::Vector3f amb_light_intensity{10, 10, 10};
    Eigen::Vector3f eye_pos{0, 0, 10};

    float p = 150;

    // Texture color
    Eigen::Vector3f color = texture_color;
    // Position of the shading point
    Eigen::Vector3f point = payload.view_pos;
    // Normal at the shading point
    Eigen::Vector3f normal = payload.normal;

    Eigen::Vector3f result_color = {0, 0, 0};
    Eigen::Vector3f l, v, dif, h, spe, amb;
    for (auto& light : lights)
    {
        // TODO: For each light source in the code, calculate what the *ambient*, *diffuse*, and *specular*
        // components are. Then, accumulate that result on the *result_color* object.

        // Light direction l and view direction v.
        l = (light.position - point).normalized();
        v = (eye_pos - point).normalized();
        // Squared distance r^2 from the light to the shading point.
        float r2 = (light.position - point).dot(light.position - point);

        // Diffuse: L_d = kd * (I / r^2) * max(0, n . l)
        dif = kd.cwiseProduct(light.intensity / r2) * std::max(0.0f, normal.dot(l));
        // Half vector: h = (v + l) / ||v + l||
        h = (v + l).normalized();
        // Specular: L_s = ks * (I / r^2) * max(0, n . h)^p
        spe = ks.cwiseProduct(light.intensity / r2) * std::pow(std::max(0.0f, normal.dot(h)), p);
        // Ambient: L_a = ka * I_a
        amb = ka.cwiseProduct(amb_light_intensity);

        result_color += (dif + spe + amb);
    }

    return result_color * 255.f;
}
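
The texture lookup itself maps a coordinate (u, v) in [0, 1] to a texel. The sketch below shows one way such a lookup could work, assuming a hypothetical single-channel `GrayTexture` with row 0 at the top of the image; it is not the framework's `Texture::getColor`, and the clamping of out-of-range coordinates is a choice made for this example.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical single-channel texture; texels are stored row by row, row 0 at the top.
struct GrayTexture {
    int width;
    int height;
    std::vector<float> texels;

    // Nearest-texel lookup for (u, v); coordinates outside [0, 1] are clamped.
    float get(float u, float v) const
    {
        assert(width > 0 && height > 0);
        assert(static_cast<int>(texels.size()) == width * height);
        u = std::min(std::max(u, 0.0f), 1.0f);
        v = std::min(std::max(v, 0.0f), 1.0f);
        // v = 0 is the bottom of the image, so the row index is flipped.
        int col = std::min(static_cast<int>(u * width), width - 1);
        int row = std::min(static_cast<int>((1.0f - v) * height), height - 1);
        return texels[row * width + col];
    }
};
```

Clamping keeps the indices inside the image even when interpolation produces a coordinate slightly outside [0, 1].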

Activate the corresponding shader in main.cpp.

Build, then run:

./Rasterizer output.png texture

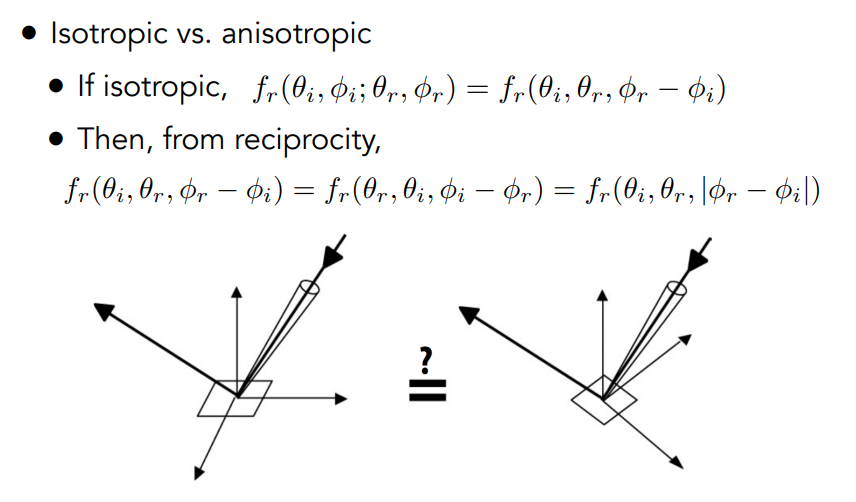

2.5 bump mapping in main.cpp

Eigen::Vector3f bump_fragment_shader(const fragment_shader_payload& payload)
{
    Eigen::Vector3f ka = Eigen::Vector3f(0.005, 0.005, 0.005);
    Eigen::Vector3f kd = payload.color;
    Eigen::Vector3f ks = Eigen::Vector3f(0.7937, 0.7937, 0.7937);

    auto l1 = light{{20, 20, 20}, {500, 500, 500}};
    auto l2 = light{{-20, 20, 0}, {500, 500, 500}};

    std::vector<light> lights = {l1, l2};
    Eigen::Vector3f amb_light_intensity{10, 10, 10};
    Eigen::Vector3f eye_pos{0, 0, 10};

    float p = 150;

    Eigen::Vector3f color = payload.color;
    Eigen::Vector3f point = payload.view_pos;
    Eigen::Vector3f normal = payload.normal;

    float kh = 0.2, kn = 0.1;

    // TODO: Implement bump mapping here
    // Let n = normal = (x, y, z)
    // Vector t = (x*y/sqrt(x*x+z*z),sqrt(x*x+z*z),z*y/sqrt(x*x+z*z))
    // Vector b = n cross product t
    // Matrix TBN = [t b n]
    // dU = kh * kn * (h(u+1/w,v)-h(u,v))
    // dV = kh * kn * (h(u,v+1/h)-h(u,v))
    // Vector ln = (-dU, -dV, 1)
    // Normal n = normalize(TBN * ln)

    // Perturb the normal in 3D:
    //   in local coordinates the original surface normal is n(p) = (0, 0, 1)
    //   the derivatives at p are
    //     dp/du = c1 * [h(u+1) - h(u)]
    //     dp/dv = c2 * [h(v+1) - h(v)]
    //   the perturbed normal is then n(p) = (-dp/du, -dp/dv, 1).normalized()
    float x = normal.x(), y = normal.y(), z = normal.z();
    Eigen::Vector3f t{x * y / std::sqrt(x * x + z * z), std::sqrt(x * x + z * z), z * y / std::sqrt(x * x + z * z)};
    Eigen::Vector3f b = normal.cross(t);
    Eigen::Matrix3f TBN;
    TBN << t.x(), b.x(), normal.x(),
           t.y(), b.y(), normal.y(),
           t.z(), b.z(), normal.z();

    float u = payload.tex_coords.x(), v = payload.tex_coords.y();
    float w = payload.texture->width, h = payload.texture->height;
    // The height h(u, v) is taken as the norm of the texture color.
    float dU = kh * kn * (payload.texture->getColor(u + 1.0f / w, v).norm() - payload.texture->getColor(u, v).norm());
    float dV = kh * kn * (payload.texture->getColor(u, v + 1.0f / h).norm() - payload.texture->getColor(u, v).norm());
    Eigen::Vector3f perturbed_n{-dU, -dV, 1.0f};
    normal = (TBN * perturbed_n).normalized();

    // Visualize the perturbed normal as a color.
    Eigen::Vector3f result_color = {0, 0, 0};
    result_color = normal;

    return result_color * 255.f;
}
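
The TBN step can be tried on plain numbers. The sketch below follows the formulas quoted in the assignment comments (t, b = n x t, TBN = [t b n], ln = (-dU, -dV, 1)); the `Vec3` type and the function `perturb_normal` are hypothetical names for this illustration, and the height differences dU and dV are passed in directly instead of being read from a texture.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical minimal 3D vector type for this sketch.
struct Vec3 { float x, y, z; };

Vec3 cross(Vec3 a, Vec3 b)
{
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Returns normalize(TBN * (-dU, -dV, 1)), where the columns of TBN are t, b, n.
Vec3 perturb_normal(Vec3 n, float dU, float dV)
{
    float s = std::sqrt(n.x * n.x + n.z * n.z);
    assert(s > 0.0f && "formula is undefined when n is parallel to the y axis");
    Vec3 t{n.x * n.y / s, s, n.z * n.y / s};
    Vec3 b = cross(n, t);
    // Matrix-vector product written out column by column.
    Vec3 r{-dU * t.x - dV * b.x + n.x,
           -dU * t.y - dV * b.y + n.y,
           -dU * t.z - dV * b.z + n.z};
    float len = std::sqrt(r.x * r.x + r.y * r.y + r.z * r.z);
    assert(len > 0.0f);
    return {r.x / len, r.y / len, r.z / len};
}
```

With dU = dV = 0 the function returns the original normal, which matches the intuition that a flat height map leaves the surface unchanged.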

Build, then run:

./Rasterizer output.png bump

2.6 displacement mapping in main.cpp

Eigen::Vector3f displacement_fragment_shader(const fragment_shader_payload& payload)
{
    Eigen::Vector3f ka = Eigen::Vector3f(0.005, 0.005, 0.005);
    Eigen::Vector3f kd = payload.color;
    Eigen::Vector3f ks = Eigen::Vector3f(0.7937, 0.7937, 0.7937);

    auto l1 = light{{20, 20, 20}, {500, 500, 500}};
    auto l2 = light{{-20, 20, 0}, {500, 500, 500}};

    std::vector<light> lights = {l1, l2};
    Eigen::Vector3f amb_light_intensity{10, 10, 10};
    Eigen::Vector3f eye_pos{0, 0, 10};

    float p = 150;

    Eigen::Vector3f color = payload.color;
    Eigen::Vector3f point = payload.view_pos;
    Eigen::Vector3f normal = payload.normal;

    float kh = 0.2, kn = 0.1;

    // TODO: Implement displacement mapping here
    // Let n = normal = (x, y, z)
    // Vector t = (x*y/sqrt(x*x+z*z),sqrt(x*x+z*z),z*y/sqrt(x*x+z*z))
    // Vector b = n cross product t
    // Matrix TBN = [t b n]
    // dU = kh * kn * (h(u+1/w,v)-h(u,v))
    // dV = kh * kn * (h(u,v+1/h)-h(u,v))
    // Vector ln = (-dU, -dV, 1)
    // Position p = p + kn * n * h(u,v)
    // Normal n = normalize(TBN * ln)

    // Perturb the normal in 3D:
    //   in local coordinates the original surface normal is n(p) = (0, 0, 1)
    //   the derivatives at p are
    //     dp/du = c1 * [h(u+1) - h(u)]
    //     dp/dv = c2 * [h(v+1) - h(v)]
    //   the perturbed normal is then n(p) = (-dp/du, -dp/dv, 1).normalized()
    float x = normal.x(), y = normal.y(), z = normal.z();
    Eigen::Vector3f t{x * y / std::sqrt(x * x + z * z), std::sqrt(x * x + z * z), z * y / std::sqrt(x * x + z * z)};
    Eigen::Vector3f b = normal.cross(t);
    Eigen::Matrix3f TBN;
    TBN << t.x(), b.x(), normal.x(),
           t.y(), b.y(), normal.y(),
           t.z(), b.z(), normal.z();

    float u = payload.tex_coords.x(), v = payload.tex_coords.y();
    float w = payload.texture->width, h = payload.texture->height;
    // The height h(u, v) is taken as the norm of the texture color.
    float dU = kh * kn * (payload.texture->getColor(u + 1.0f / w, v).norm() - payload.texture->getColor(u, v).norm());
    float dV = kh * kn * (payload.texture->getColor(u, v + 1.0f / h).norm() - payload.texture->getColor(u, v).norm());
    Eigen::Vector3f perturbed_n{-dU, -dV, 1.0f};

    // Move the shading point along the original normal, then replace the normal.
    point += kn * normal * payload.texture->getColor(u, v).norm();
    normal = (TBN * perturbed_n).normalized();

    Eigen::Vector3f result_color = {0, 0, 0};
    Eigen::Vector3f light_vec, view_vec, dif, half_vec, spe, amb;
    for (auto& light : lights)
    {
        // TODO: For each light source in the code, calculate what the *ambient*, *diffuse*, and *specular*
        // components are. Then, accumulate that result on the *result_color* object.

        // Light direction l and view direction v.
        light_vec = (light.position - point).normalized();
        view_vec = (eye_pos - point).normalized();
        // Squared distance r^2 from the light to the shading point.
        float r2 = (light.position - point).dot(light.position - point);

        // Diffuse: L_d = kd * (I / r^2) * max(0, n . l)
        dif = kd.cwiseProduct(light.intensity / r2) * std::max(0.0f, normal.dot(light_vec));
        // Half vector: h = (v + l) / ||v + l||
        half_vec = (view_vec + light_vec).normalized();
        // Specular: L_s = ks * (I / r^2) * max(0, n . h)^p
        spe = ks.cwiseProduct(light.intensity / r2) * std::pow(std::max(0.0f, normal.dot(half_vec)), p);
        // Ambient: L_a = ka * I_a
        amb = ka.cwiseProduct(amb_light_intensity);

        result_color += (dif + spe + amb);
    }

    return result_color * 255.f;
}
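
For reference, the steps listed in the TODO comments of the displacement shader can be written as equations. This is only a restatement of those comments, with $h(u,v)$ taken as the norm of the texture color (as in the code above) and $w_{tex}$, $h_{tex}$ denoting the texture width and height.

```latex
\begin{aligned}
dU &= k_h\,k_n\,\bigl(h(u + 1/w_{tex},\, v) - h(u, v)\bigr) \\
dV &= k_h\,k_n\,\bigl(h(u,\, v + 1/h_{tex}) - h(u, v)\bigr) \\
\mathbf{p}' &= \mathbf{p} + k_n\,\mathbf{n}\,h(u, v) \\
\mathbf{n}' &= \operatorname{normalize}\!\bigl(\mathrm{TBN}\,(-dU,\, -dV,\, 1)^{\mathsf T}\bigr)
\end{aligned}
```

The only difference from bump mapping is the third line: the shading point itself is moved along the original normal before lighting is evaluated.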

Build, then run:

./Rasterizer output.png displacement
