本文主要是介绍贝叶斯优化调参实战(随机森林,lgbm波士顿房价),希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

本文名字叫做贝叶斯优化实战~~就说明我不会在这里讲它的理论知识。因为我还没看懂。。。

不过用起来是真的舒服,真是好用的不行呢~

开始本文之前,我先说一下我目前用到的调参的手段。

1.网格搜索与随机搜索:

图来自:https://www.cnblogs.com/marsggbo/p/9866764.html

我们都知道神经网络训练是由许多超参数决定的,例如网络深度,学习率,卷积核大小等等。所以为了找到一个最好的超参数组合,最直观的的想法就是Grid Search,其实也就是穷举搜索,示意图如下。

但是我们都知道机器学习训练模型是一个非常耗时的过程,而且现如今随着网络越来越复杂,超参数也越来越多,以如今计算力而言要想将每种可能的超参数组合都实验一遍(即Grid Search)明显不现实,所以一般就是事先限定若干种可能,但是这样搜索仍然不高效。

所以为了提高搜索效率,人们提出随机搜索,示意图如下。虽然随机搜索得到的结果互相之间差异较大,但是实验证明随机搜索的确比网格搜索效果要好。

2.贝叶斯优化

先说一下它的好处吧:我记得我之前调SVR网格搜索时候,就是一个噩梦,3000个数据也得搞个小一会~我知道我的小mbp是不适合运行这些,但是你这也太慢了吧。而且事实证明不仅慢,效果其实也有待商榷。算了,不讨论有多差了,来看看贝叶斯优化吧。真实太棒了。

下面我会以一个随机森林二分类与一个lightgbm回归两个例子展示贝叶斯优化,我相信以后遇到别的模型调参,有这两个模板在,问题都不大~我还是建议这些代码都要背下来,这样以后顺手就来多帅哦。

关于安装:

pip install bayesian-optimization

我的版本:

bayesian-optimization-1.0.0

bayesian-optimization 的github:https://github.com/fmfn/BayesianOptimization/blob/master/examples/basic-tour.ipynb

3.RF分类调参

参考:https://www.cnblogs.com/yangruiGB2312/p/9374377.html

from sklearn.datasets import make_classification

from sklearn.ensemble import RandomForestClassifier

from sklearn.cross_validation import cross_val_score

from bayes_opt import BayesianOptimization# 产生随机分类数据集,10个特征, 2个类别

x, y = make_classification(n_samples=1000,n_features=10,n_classes=2)

我们先看看不调参的结果:

import numpy as np

rf = RandomForestClassifier()

print(np.mean(cross_val_score(rf, x, y, cv=20, scoring='roc_auc')))

0.9839600000000001

bayes调参初探

我们先定义一个目标函数,里面放入我们希望优化的函数。比如此时,函数输入为随机森林的所有参数,输出为模型交叉验证5次的AUC均值,作为我们的目标函数。因为bayes_opt库只支持最大值,所以最后的输出如果是越小越好,那么需要在前面加上负号,以转为最大值。由于bayes优化只能优化连续超参数,因此要加上int()转为离散超参数。

def rf_cv(n_estimators, min_samples_split, max_features, max_depth):val = cross_val_score(RandomForestClassifier(n_estimators=int(n_estimators),min_samples_split=int(min_samples_split),max_features=min(max_features, 0.999), # floatmax_depth=int(max_depth),random_state=2),x, y, 'roc_auc', cv=5).mean()return val

然后我们就可以实例化一个bayes优化对象了:

rf_bo = BayesianOptimization(rf_cv,{'n_estimators': (10, 250),'min_samples_split': (2, 25),'max_features': (0.1, 0.999),'max_depth': (5, 15)})

里面的第一个参数是我们的优化目标函数,第二个参数是我们所需要输入的超参数名称,以及其范围。超参数名称必须和目标函数的输入名称一一对应。

完成上面两步之后,我们就可以运行bayes优化了!

rf_bo.maximize()

等到程序结束,我们可以查看当前最优的参数和结果:

rf_bo.max

{'target': 0.9896799999999999,'params': {'max_depth': 12.022326832956438,'max_features': 0.42437136034968226,'min_samples_split': 17.51437357464919,'n_estimators': 116.69549115408005}}

总结:

由于我没有指定迭代次数。他就会首先随机找5个点,之后在迭代25次,共30次。

4.lgbm波士顿房价回归调参

贝叶斯优化github:

https://github.com/fmfn/BayesianOptimization/blob/master/examples/basic-tour.ipynb

因为这部分代码比较冗长,我写的时候也很费劲觉得,但是思想是很明确的。所以我就不加说明了,如有疑问请留言我进行文字补充。

以波士顿房价为例

#导入包

from sklearn.datasets import load_boston

from bayes_opt import BayesianOptimization

import lightgbm as lgb

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

import warnings

warnings.filterwarnings('ignore')

import pandas as pd

import numpy as np

from sklearn import metrics

import matplotlib.pyplot as plt

import seaborn as sns

plt.style.use('ggplot')

from sklearn.ensemble import RandomForestRegressor

from sklearn.model_selection import cross_val_score

from sklearn.model_selection import KFold

data = load_boston()

data.keys()

dict_keys(['data', 'target', 'feature_names', 'DESCR'])

X = data.data

Y = data.target

X_train,X_test,Y_train,Y_test = train_test_split(X,Y,test_size=0.2,random_state=2019)

# 数据标准归一化

ss = StandardScaler()

X_train = ss.fit_transform(X_train)

X_test = ss.transform(X_test)

如果不调参:

regressor = lgb.LGBMRegressor(n_estimators=80)

regressor.fit(X_train,Y_train)

LGBMRegressor(boosting_type='gbdt', class_weight=None, colsample_bytree=1.0,importance_type='split', learning_rate=0.1, max_depth=-1,min_child_samples=20, min_child_weight=0.001, min_split_gain=0.0,n_estimators=80, n_jobs=-1, num_leaves=31, objective=None,random_state=None, reg_alpha=0.0, reg_lambda=0.0, silent=True,subsample=1.0, subsample_for_bin=200000, subsample_freq=0)

# plt.scatter(regressor.predict(X_test),Y_test)

sns.regplot(regressor.predict(X_test),Y_test)

#lgbm没有调参的r2

metrics.r2_score(regressor.predict(X_test),Y_test)

0.7846789447853599

#看看rf不调参的表现,只是看看,与本文无关

rf = RandomForestRegressor(n_estimators=80)

rf.fit(X_train,Y_train)

metrics.r2_score(rf.predict(X_test),Y_test)

0.7647630340879641

开始调参!!!

def LGB_CV(max_depth,num_leaves,min_data_in_leaf,feature_fraction,bagging_fraction,lambda_l1):folds = KFold(n_splits=5, shuffle=True, random_state=15)oof = np.zeros(X_train.shape[0])for fold_, (trn_idx, val_idx) in enumerate(folds.split(X_train, Y_train)):print("fold n°{}".format(fold_))trn_data = lgb.Dataset(X_train[trn_idx],label=Y_train[trn_idx],)val_data = lgb.Dataset(X_train[val_idx],label=Y_train[val_idx],)param = {'num_leaves': int(num_leaves),'min_data_in_leaf': int(min_data_in_leaf), 'objective':'regression','max_depth': int(max_depth),'learning_rate': 0.01,"boosting": "gbdt","feature_fraction": feature_fraction,"bagging_freq": 1,"bagging_fraction": bagging_fraction ,"bagging_seed": 11,"metric": 'rmse',"lambda_l1": lambda_l1,"verbosity": -1}clf = lgb.train(param,trn_data,5000,valid_sets = [trn_data, val_data],verbose_eval=500,early_stopping_rounds = 200)oof[val_idx] = clf.predict(X_train[val_idx],num_iteration=clf.best_iteration)del clf, trn_idx, val_idxreturn metrics.r2_score(oof, Y_train)

LGB_BO = BayesianOptimization(LGB_CV, {'max_depth': (4, 20),'num_leaves': (5, 130),'min_data_in_leaf': (5, 30),'feature_fraction': (0.7, 1.0),'bagging_fraction': (0.7, 1.0),'lambda_l1': (0, 6)})

LGB_BO.maximize(init_points=2,n_iter=3)

用调好的参数预测test

folds = KFold(n_splits=5, shuffle=True, random_state=15)

oof = np.zeros(X_train.shape[0])

predictions = np.zeros(X_test.shape[0])for fold_, (trn_idx, val_idx) in enumerate(folds.split(X_train, Y_train)):print("fold n°{}".format(fold_))trn_data = lgb.Dataset(X_train[trn_idx],label=Y_train[trn_idx],)val_data = lgb.Dataset(X_train[val_idx],label=Y_train[val_idx],)param = {'num_leaves': 6,'min_data_in_leaf': 5, 'objective':'regression','max_depth': 4,'learning_rate': 0.01,"boosting": "gbdt","feature_fraction": 0.9669589486825311,"bagging_freq": 1,"bagging_fraction": 0.8552076268718115 ,"bagging_seed": 11,"metric": 'rmse',"lambda_l1": 4.243403644533201,"verbosity": -1}clf = lgb.train(param,trn_data,5000,valid_sets = [trn_data, val_data],verbose_eval=500,early_stopping_rounds = 200)oof[val_idx] = clf.predict(X_train[val_idx],num_iteration=clf.best_iteration)

# del clf, trn_idx, val_idxpredictions += clf.predict(X_test, num_iteration=clf.best_iteration) / folds.n_splits

# del clf, trn_idx, val_idx

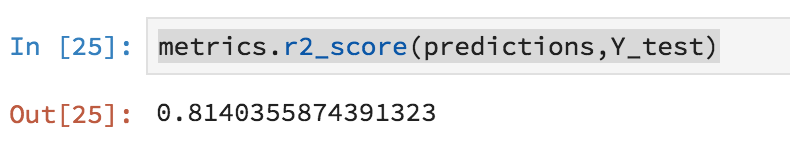

metrics.r2_score(predictions,Y_test)

可以看到,提升还是有的~~

over~有疑问欢迎交流

参考:

lgbm英文文档:

https://lightgbm.readthedocs.io/en/latest/Experiments.html

https://github.com/Microsoft/LightGBM/blob/master/examples/python-guide/simple_example.py

https://www.kaggle.com/fabiendaniel/hyperparameter-tuning/notebook

https://www.kaggle.com/rsmits/bayesian-optimization-for-lightgbm-parameters/notebook

https://www.cnblogs.com/yangruiGB2312/p/9374377.html

贝叶斯优化github:

https://github.com/fmfn/BayesianOptimization/blob/master/examples/basic-tour.ipynb

这篇关于贝叶斯优化调参实战(随机森林,lgbm波士顿房价)的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!