本文主要是介绍【从kitti开始自动驾驶】--7.2 实现3d侦测框(移植至ROS,显示于RVIZ),希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

“天意终究难参”

- 1 kitti_all中的改动

- 1.1 准备工作

- 1.2 发布者建立

- 1.3 矫正的instance读取

- 1.4 所需数据提取转为数组

- 1.5 为每个object都画框

- 1.6 执行发布函数

- 2 publish_utils中的改动

- 2.1 变量定义

- 2.2 为不同object加入不同颜色

- 2.3 maker_array操作

- 2.4 大遍历每一个object

- 2.5 小遍历每两个点

- 2.6 发布

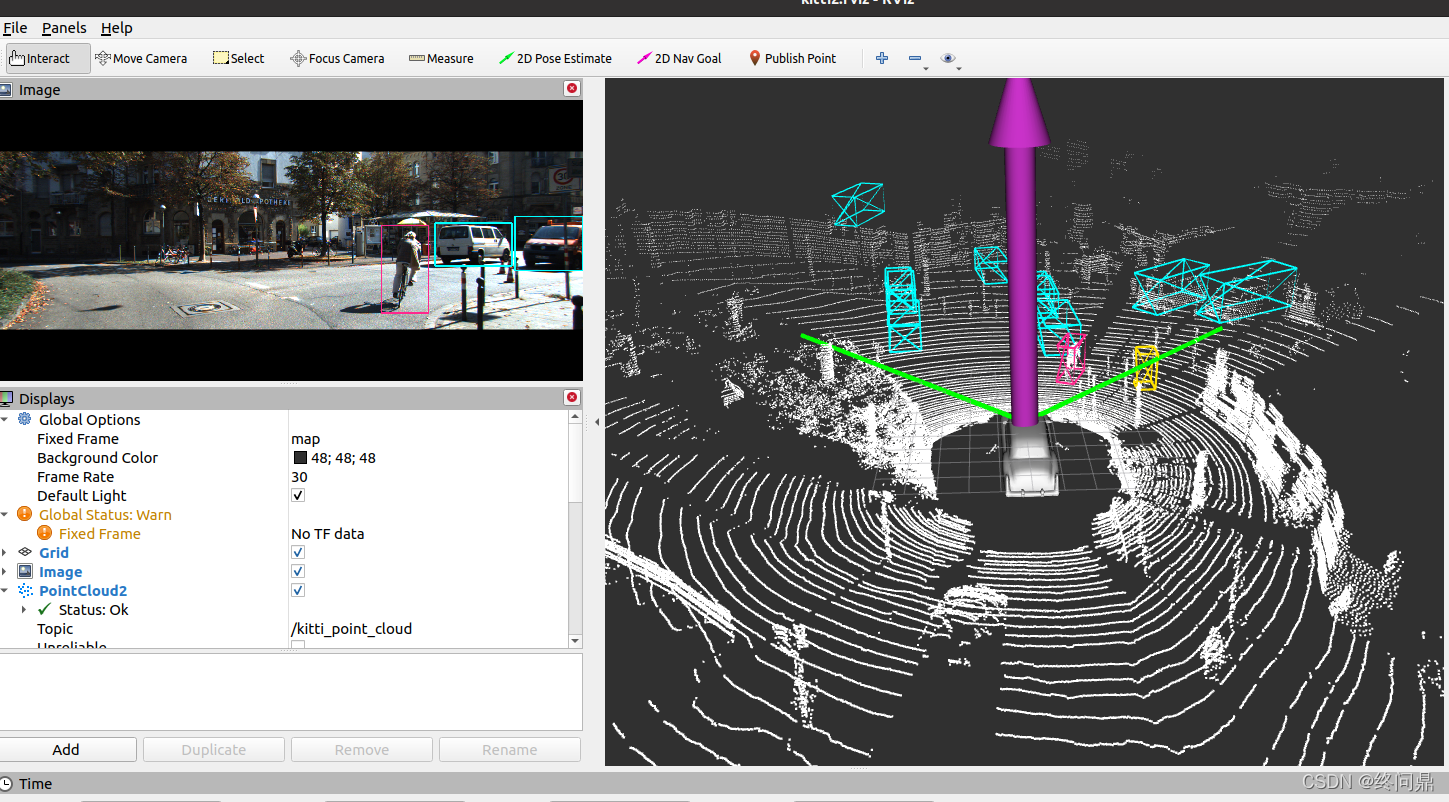

- 3.效果查看

- 4. 源码

- 4.1 publish_utils.py代码

- 4.2 kitti_all.py

本节将采用在jupyter notebook上的方式执行对单张图片上实现对所有object的3D打标,以及移植到ROS上,通过RVIZ可视化出来

tracking资料存放在/kitti_folder/tracking中

jupyter工程存放在/test3_autodrive_ws/src/jupyter_prj下的2D_tracking_label.ipynb

数据文件存放在/home/qinsir/kitti_folder/tracking/data_tracking_label_2/training/label_02

下,可以看到是txt文件

矫正程序在test3_autodrive_ws/src/demo1_kitti_pub_photo/scripts/calibration.py中

上一节已经实现在jupyter-notebook平台上的稳定运行,本节将其移植至ROS中即可

1 kitti_all中的改动

1.1 准备工作

- 导入之前写好的cali的包

- 引进根据坐标定位矩形框八个顶点的坐标的函数

from calibration import *

def compute_3d_box_cam2(h, w, l, x, y, z, yaw)

1.2 发布者建立

box3d_pub = rospy.Publisher("kitti_3d", MarkerArray, queue_size=10)

1.3 矫正的instance读取

calib = Calibration('/home/qinsir/kitti_folder/2011_09_26', from_video=True)

1.4 所需数据提取转为数组

boxes_3d = np.array(df_tracking[df_tracking.frame==frame][['height', 'width', 'length', 'pos_x', 'pos_y', 'pos_z', 'rot_y']])1.5 为每个object都画框

- 每个object迭代一次

- 第一个数组用来存放每一次经过转换的8个点的坐标

- 一个物体是8X3, 每个物体都存在corners_3d_velos中

corners_3d_velos = []for box_3d in boxes_3d:corners_3d_cam2 = compute_3d_box_cam2(*box_3d) #用*表展开为7个参数#经过矫正到velodyne的函数,入口参数为从cam到velodyne#需要8X3,转置一下corners_3d_velo = calib.project_ref_to_velo(corners_3d_cam2.T)corners_3d_velos += [corners_3d_velo] #逐个存入列表1.6 执行发布函数

- 没啥说的,因为要在publish_utils.py中定义

- 最后一个参数是为了为不同obj指定不同颜色

publish_3dbox(box3d_pub, corners_3d_velos, types) #发布三维

2 publish_utils中的改动

2.1 变量定义

- 定义生命周期,和图片的生存周期,一秒十帧一样

- 定义每两个之间需要划线的点,用X代表前方

LIFETIME = 0.1 #我没用上LINES = [[0,1], [1,2], [2,3], [3, 0]] #底面

LINES += [[4,5], [5,6], [6,7], [7,4]] #顶面

LINES += [[4,0], [5,1], [6,2], [7,3]] #中间的四条线

LINES += [[4,1], [5,0]] #前边用"X"标志

2.2 为不同object加入不同颜色

- 因为这里的颜色范围是0-1,所以进行归一化

b, g, r = DETECTION_COLOR_DICT[types[i]]marker.color.r = r / 255.0marker.color.g = g / 255.0marker.color.b = b / 255.0 #这条线的颜色

2.3 maker_array操作

- 因为一次发布多条线,所以采用marker_array同时发送的方法

- 常规的,建立marker_array,marker,给marker填充数据再增加到marker_array中

- 操作较为常规,就不在此展现代码了

2.4 大遍历每一个object

- i是序号

- corners_3d_velo是该物体对应的8个点

for i, corners_3d_velo in enumerate(corners_3d_velos):

2.5 小遍历每两个点

- 在我们定义的需要连接的点对中,进行划线

- 需要连线的一对点/一条线收录进同一个marker中,在append到maker_array中

for l in LINES:p1 = corners_3d_velo[l[0]]marker.points.append(Point(p1[0], p1[1], p1[2]))p2 = corners_3d_velo[l[1]]marker.points.append(Point(p2[0], p2[1], p2[2]))

2.6 发布

box3d_pub.publish(marker_array)

3.效果查看

4. 源码

4.1 publish_utils.py代码

#!/usr/bin/env python3

#coding:utf-8import rospy

from std_msgs.msg import Header

from sensor_msgs.msg import Image, PointCloud2, Imu, NavSatFix

from visualization_msgs.msg import Marker, MarkerArray

import sensor_msgs.point_cloud2 as pcl2

from geometry_msgs.msg import Point

from cv_bridge import CvBridge

import numpy as np

import tf

import cv2FRAME_ID = "map"

DETECTION_COLOR_DICT = {'Car':(255, 255, 0), 'Pedestrian':(0, 226, 255), 'Cyclist':(141, 40, 255)}

#为三种车型,分别添加颜色值对应的框

LIFETIME = 0.1

#生存周期LINES = [[0,1], [1,2], [2,3], [3, 0]] #底面

LINES += [[4,5], [5,6], [6,7], [7,4]] #顶面

LINES += [[4,0], [5,1], [6,2], [7,3]] #中间的四条线

LINES += [[4,1], [5,0]] #前边用"X"标志

'''

def publish_camera(cam_pub, bridge, image):cam_pub.publish(bridge.cv2_to_imgmsg(image, 'bgr8'))

'''

def publish_camera(cam_pub, bridge, image, boxes, types):#画出每一个坐标for typ, box in zip(types, boxes): #for一个矩阵,它是一行一行读取# 左上角,右下角,像素都是整数top_left = int(box[0]), int(box[1])right_down = int(box[2]), int(box[3])# 画矩形cv2.rectangle(image, top_left, right_down, DETECTION_COLOR_DICT[typ], 2)cam_pub.publish(bridge.cv2_to_imgmsg(image, 'bgr8'))def publish_point_cloud(pcl_pub, point_cloud):header = Header()header.frame_id = FRAME_IDheader.stamp = rospy.Time.now()pcl_pub.publish(pcl2.create_cloud_xyz32(header, point_cloud[:, :3]))def publish_ego_car(ego_car_pub):'publish left and right 45 degree FOV and ego car model mesh'#header部分marker = Marker()marker.header.frame_id = FRAME_IDmarker.header.stamp = rospy.Time.now()# marker的id marker.id = 0marker.action = Marker.ADD # 加入一个markermarker.lifetime = rospy.Duration() # 生存时间,()中无参数永远出现marker.type = Marker.LINE_STRIP #marker 的type,有很多种,这里选择线条marker.color.r = 0.0marker.color.g = 1.0marker.color.b = 0.0 #这条线的颜色marker.color.a = 1.0 #透明度 1不透明marker.scale.x = 0.2 #大小,粗细#设定marker中的资料marker.points = []# 两条线,三个点即可#原点是0,0,0, 看左上角和右上角的数据要看kitti的设定,看坐标marker.points.append(Point(10, -10, 0))marker.points.append(Point(0, 0, 0))marker.points.append(Point(10, 10, 0))ego_car_pub.publish(marker) #设定完毕,发布def publish_car_model(model):#header部分mesh_marker = Marker()mesh_marker.header.frame_id = FRAME_IDmesh_marker.header.stamp = rospy.Time.now()# marker的id mesh_marker.id = -1mesh_marker.lifetime = rospy.Duration() # 生存时间,()中无参数永远出现mesh_marker.type = Marker.MESH_RESOURCE #marker 的type,有很多种,这里选择meshmesh_marker.mesh_resource = "package://demo1_kitti_pub_photo/mesh/car_model/car.DAE"#平移量设置mesh_marker.pose.position.x = 0.0mesh_marker.pose.position.y = 0.0#以为0,0,0 是velodyne的坐标(车顶),这里坐标是车底,所以是负数mesh_marker.pose.position.z = -1.73#旋转量设定q = tf.transformations.quaternion_from_euler(np.pi/2, 0, np.pi/2)# 这里的参数和下载模型的预设角度有关,旋转关系,根据显示效果而调整,转成四元数q#x轴旋转mesh_marker.pose.orientation.x = q[0]mesh_marker.pose.orientation.y = q[1]mesh_marker.pose.orientation.z = q[2]mesh_marker.pose.orientation.w = q[3]#颜色设定(白色)mesh_marker.color.r = 1.0mesh_marker.color.g = 1.0mesh_marker.color.b = 1.0mesh_marker.color.a = 1.0#设置体积: x,y,z方向的膨胀程度mesh_marker.scale.x = 0.4mesh_marker.scale.y = 0.4mesh_marker.scale.z = 0.4model.publish(mesh_marker) #设定完毕,发布def publish_two_marker(kitti_two_marker):#建立markerarraymarkerarray = MarkerArray()# 绿线设定marker = Marker()marker.header.frame_id = FRAME_IDmarker.header.stamp = rospy.Time.now()# marker的id marker.id = 0marker.action = Marker.ADD # 加入一个markermarker.lifetime = rospy.Duration() # 生存时间,()中无参数永远出现marker.type = Marker.LINE_STRIP #marker 的type,有很多种,这里选择线条marker.color.r = 0.0marker.color.g = 1.0marker.color.b = 0.0 #这条线的颜色marker.color.a = 1.0 #透明度 1不透明marker.scale.x = 0.2 #大小,粗细#设定marker中的资料marker.points = []# 两条线,三个点即可#原点是0,0,0, 看左上角和右上角的数据要看kitti的设定,看坐标marker.points.append(Point(10, -10, 0))marker.points.append(Point(0, 0, 0))marker.points.append(Point(10, 10, 0))#加入第一个markerarray.markers.append(marker)mesh_marker = Marker()mesh_marker.header.frame_id = FRAME_IDmesh_marker.header.stamp = rospy.Time.now()# marker的id mesh_marker.id = -1mesh_marker.lifetime = rospy.Duration() # 生存时间,()中无参数永远出现mesh_marker.type = Marker.MESH_RESOURCE #marker 的type,有很多种,这里选择meshmesh_marker.mesh_resource = "package://demo1_kitti_pub_photo/mesh/car_model/car.DAE"#平移量设置mesh_marker.pose.position.x = 0.0mesh_marker.pose.position.y = 0.0#以为0,0,0 是velodyne的坐标(车顶),这里坐标是车底,所以是负数mesh_marker.pose.position.z = -1.73#旋转量设定q = tf.transformations.quaternion_from_euler(np.pi/2, 0, np.pi/2)# 这里的参数和下载模型的预设角度有关,旋转关系,根据显示效果而调整,转成四元数q#x轴旋转mesh_marker.pose.orientation.x = q[0]mesh_marker.pose.orientation.y = q[1]mesh_marker.pose.orientation.z = q[2]mesh_marker.pose.orientation.w = q[3]#颜色设定(白色)mesh_marker.color.r = 1.0mesh_marker.color.g = 1.0mesh_marker.color.b = 1.0mesh_marker.color.a = 1.0#设置体积: x,y,z方向的膨胀程度mesh_marker.scale.x = 0.4mesh_marker.scale.y = 0.4mesh_marker.scale.z = 0.4# 加入第二个:车子模型markerarray.markers.append(mesh_marker)#发布kitti_two_marker.publish(markerarray)def publish_imu(imu_pub, imu_data):# 消息建立imu = Imu()#头填充imu.header.frame_id = FRAME_IDimu.header.stamp = rospy.Time.now()#旋转角度q = tf.transformations.quaternion_from_euler(float(imu_data.roll), float(imu_data.pitch), float(imu_data.yaw))# 将roll, pitch, yaw转成可被电脑识别的四元数q,并设定出去imu.orientation.x = q[0]imu.orientation.y = q[1]imu.orientation.z = q[2]imu.orientation.w = q[3]#线性加速度imu.linear_acceleration.x = imu_data.afimu.linear_acceleration.y = imu_data.alimu.linear_acceleration.z = imu_data.au #角速度imu.angular_velocity.x = imu_data.wfimu.angular_velocity.y = imu_data.wlimu.angular_velocity.z = imu_data.wu#发布imu_pub.publish(imu)def publish_gps(gps_pub, imu_data):gps = NavSatFix()#头填充gps.header.frame_id = FRAME_IDgps.header.stamp = rospy.Time.now()#维度, 经度 和海拔gps.latitude = imu_data.latgps.longitude = imu_data.longps.altitude = imu_data.algps_pub.publish(gps)def publish_3dbox(box3d_pub, corners_3d_velos, types):marker_array = MarkerArray()#对每一组数据/交通工具/矩形框进行迭代for i, corners_3d_velo in enumerate(corners_3d_velos):#header部分marker = Marker()marker.header.frame_id = FRAME_IDmarker.header.stamp = rospy.Time.now()# marker的id marker.id = imarker.action = Marker.ADD # 加入一个markermarker.lifetime = rospy.Duration(0) # 生存时间,()中无参数永远出现marker.type = Marker.LINE_STRIP #marker 的type,有很多种,这里选择线条b, g, r = DETECTION_COLOR_DICT[types[i]]marker.color.r = r / 255.0marker.color.g = g / 255.0marker.color.b = b / 255.0 #这条线的颜色marker.color.a = 1.0 #透明度 1不透明marker.scale.x = 0.1 #大小,粗细#设定marker中的资料marker.points = []#对每两个点之间进行迭代,存在则连for l in LINES :p1 = corners_3d_velo[l[0]]marker.points.append(Point(p1[0], p1[1], p1[2]))p2 = corners_3d_velo[l[1]]marker.points.append(Point(p2[0], p2[1], p2[2]))marker_array.markers.append(marker)box3d_pub.publish(marker_array)4.2 kitti_all.py

#!/usr/bin/env python3

#coding:utf-8

from data_utils import *

from publish_utils import *

from calibration import *DATA_PATH = '/home/qinsir/kitti_folder/2011_09_26/2011_09_26_drive_0005_sync/'# 根据长宽高XYZ和旋转角坐标定位

def compute_3d_box_cam2(h, w, l, x, y, z, yaw):'''return : 3xn in cam2 coordinate'''R = np.array([[np.cos(yaw), 0, np.sin(yaw)], [0, 1, 0], [-np.sin(yaw), 0, np.cos(yaw)]])x_corners = [l/2, l/2, -l/2, -l/2, l/2, l/2, -l/2, -l/2]y_corners = [0, 0, 0, 0, -h, -h, -h, -h]z_corners = [w/2, -w/2, -w/2, w/2, w/2, -w/2, -w/2, w/2]corners_3d_cam2 = np.dot(R, np.vstack([x_corners, y_corners, z_corners]))corners_3d_cam2 += np.vstack([x, y, z])return corners_3d_cam2if __name__ == '__main__':frame = 0rospy.init_node('kitti_node', anonymous=True) #默认节点可以重名cam_pub = rospy.Publisher('kitti_cam', Image, queue_size=10)pcl_pub = rospy.Publisher("kitti_point_cloud", PointCloud2, queue_size=10)#ego_pub = rospy.Publisher('kitti_ego_car', Marker, queue_size=10)#model_pub = rospy.Publisher("kitti_car_model", Marker, queue_size=10)two_marker_pub = rospy.Publisher("kitti_two_mark", MarkerArray, queue_size=10)imu_pub = rospy.Publisher("kitti_imu", Imu, queue_size=10) #IMU发布者gps_pub = rospy.Publisher("kitti_gps", NavSatFix, queue_size=10)box3d_pub = rospy.Publisher("kitti_3d", MarkerArray, queue_size=10)bridge = CvBridge() #opencv支持的图片和ROS可以读取的图片之间转换的桥梁rate = rospy.Rate(10)#以第0组(厢型车等归类适用的tracking数据)为数据读取df_tracking = read_tracking('/home/qinsir/kitti_folder/tracking/data_tracking_label_2/training/label_02/0000.txt')calib = Calibration('/home/qinsir/kitti_folder/2011_09_26', from_video=True)while not rospy.is_shutdown():boxes_2d = np.array(df_tracking[df_tracking.frame==frame][["bbox_left", "bbox_top", "bbox_right", "bbox_bottom"]]) #格式转换,第0帧所有的打标框types = np.array(df_tracking[df_tracking.frame==frame]["type"])boxes_3d = np.array(df_tracking[df_tracking.frame==frame][['height', 'width', 'length', 'pos_x', 'pos_y', 'pos_z', 'rot_y']])#保存8个雷达坐标系下点的坐标的listcorners_3d_velos = []#对于图片中的每一个记录物体都进行计算for box_3d in boxes_3d:#相机里的坐标corners_3d_cam2 = compute_3d_box_cam2(*box_3d) #用*表展开为7个参数#经过矫正到velodyne的函数,入口参数为从cam到velodyne#需要8X3,转置一下corners_3d_velo = calib.project_ref_to_velo(corners_3d_cam2.T)corners_3d_velos += [corners_3d_velo] #逐个存入列表#使用OS,路径串接,%010d,这个字串有10个数字(比如0000000001).pngimg = read_camera(os.path.join(DATA_PATH, 'image_02/data/%010d.png'%frame)) point_cloud = read_point_cloud(os.path.join(DATA_PATH, "velodyne_points/data/%010d.bin"%frame))imu_data = read_imu(os.path.join(DATA_PATH, "oxts/data/%010d.txt"%frame))publish_camera(cam_pub, bridge, img, boxes_2d, types)#publish_camera(cam_pub, bridge, img)publish_point_cloud(pcl_pub, point_cloud)publish_3dbox(box3d_pub, corners_3d_velos, types) #发布三维#publish_ego_car(ego_pub)#publish_car_model(model_pub)publish_two_marker(two_marker_pub)publish_imu(imu_pub, imu_data)publish_gps(gps_pub, imu_data)rospy.loginfo('new file publish')rate.sleep()frame += 1frame %= 154

这篇关于【从kitti开始自动驾驶】--7.2 实现3d侦测框(移植至ROS,显示于RVIZ)的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!