本文主要是介绍ROS openni_tracker:骨架追踪,希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

ROS 中的openni_tracker 骨架跟踪学习资料整理。

代码注释比较详细:

// openni_tracker.cpp#include <ros/ros.h>

#include <ros/package.h>

#include <tf/transform_broadcaster.h>

#include <kdl/frames.hpp>#include <XnOpenNI.h>

#include <XnCodecIDs.h>

#include <XnCppWrapper.h>using std::string;

xn::Context g_Context;

xn::DepthGenerator g_DepthGenerator;

xn::UserGenerator g_UserGenerator;XnBool g_bNeedPose = FALSE;//初始化,FALSE = 0,是否指定特定的姿势

XnChar g_strPose[20] = "";//姿势的名字//检测到人

void XN_CALLBACK_TYPE User_NewUser(xn::UserGenerator& generator, XnUserID nId, void* pCookie) {ROS_INFO("New User %d,g_bNeedPose = %d", nId,g_bNeedPose);//此时g_bNeedPose = TRUE;g_strPose = psi;if (g_bNeedPose)//检测特定的用户姿势,此时UserPose_PoseDetected 回调函数执行g_UserGenerator.GetPoseDetectionCap().StartPoseDetection(g_strPose, nId);elseg_UserGenerator.GetSkeletonCap().RequestCalibration(nId, TRUE);

}

//检测不到人

void XN_CALLBACK_TYPE User_LostUser(xn::UserGenerator& generator, XnUserID nId, void* pCookie) {ROS_INFO("Lost user %d", nId);

}//开始校准

void XN_CALLBACK_TYPE UserCalibration_CalibrationStart(xn::SkeletonCapability& capability, XnUserID nId, void* pCookie) {ROS_INFO("Calibration started for user %d", nId);

}

//校准结束

void XN_CALLBACK_TYPE UserCalibration_CalibrationEnd(xn::SkeletonCapability& capability, XnUserID nId, XnBool bSuccess, void* pCookie) {if (bSuccess) {//校准成功,开始追踪骨架ROS_INFO("Calibration complete, start tracking user %d", nId);g_UserGenerator.GetSkeletonCap().StartTracking(nId);}else {//失败,重新开始检测姿势ROS_INFO("Calibration failed for user %d", nId);if (g_bNeedPose)g_UserGenerator.GetPoseDetectionCap().StartPoseDetection(g_strPose, nId);elseg_UserGenerator.GetSkeletonCap().RequestCalibration(nId, TRUE);//TRUE 忽略以前的校准以强制进一步校准。}

}//检测姿势

void XN_CALLBACK_TYPE UserPose_PoseDetected(xn::PoseDetectionCapability& capability, XnChar const* strPose, XnUserID nId, void* pCookie) {ROS_INFO("Pose %s detected for user %d", strPose, nId);g_UserGenerator.GetPoseDetectionCap().StopPoseDetection(nId);//开始校准g_UserGenerator.GetSkeletonCap().RequestCalibration(nId, TRUE);

}//TF 转换

void publishTransform(XnUserID const& user, XnSkeletonJoint const& joint, string const& frame_id, string const& child_frame_id) {static tf::TransformBroadcaster br;XnSkeletonJointPosition joint_position;//特定关节的位置。结构体,包含世界坐标和置信度//获取最近生成的用户数据中的骨架关节之一的位置。g_UserGenerator.GetSkeletonCap().GetSkeletonJointPosition(user, joint, joint_position);double x = -joint_position.position.X / 1000.0;double y = joint_position.position.Y / 1000.0;double z = joint_position.position.Z / 1000.0;XnSkeletonJointOrientation joint_orientation; //特定关节的方向。结构体中包含 方向和置信度//获取特定关节的方向g_UserGenerator.GetSkeletonCap().GetSkeletonJointOrientation(user, joint, joint_orientation);XnFloat* m = joint_orientation.orientation.elements;KDL::Rotation rotation(m[0], m[1], m[2],m[3], m[4], m[5],m[6], m[7], m[8]);double qx, qy, qz, qw;//获取此矩阵的四元数rotation.GetQuaternion(qx, qy, qz, qw);char child_frame_no[128];snprintf(child_frame_no, sizeof(child_frame_no), "%s_%d", child_frame_id.c_str(), user);tf::Transform transform;//设置平移元素transform.setOrigin(tf::Vector3(x, y, z));//通过四元数设置旋转元素transform.setRotation(tf::Quaternion(qx, -qy, -qz, qw));// #4994 基准点(摄像头位置)tf::Transform change_frame;change_frame.setOrigin(tf::Vector3(0, 0, 0));tf::Quaternion frame_rotation;frame_rotation.setEulerZYX(1.5708, 0, 1.5708);change_frame.setRotation(frame_rotation);transform = change_frame * transform;br.sendTransform(tf::StampedTransform(transform, ros::Time::now(), frame_id, child_frame_no));

}void publishTransforms(const std::string& frame_id) {XnUserID users[15];XnUInt16 users_count = 15;g_UserGenerator.GetUsers(users, users_count);for (int i = 0; i < users_count; ++i) {XnUserID user = users[i];//if (!g_UserGenerator.GetSkeletonCap().IsTracking(user))continue;publishTransform(user, XN_SKEL_HEAD, frame_id, "head");publishTransform(user, XN_SKEL_NECK, frame_id, "neck");publishTransform(user, XN_SKEL_TORSO, frame_id, "torso");publishTransform(user, XN_SKEL_LEFT_SHOULDER, frame_id, "left_shoulder");publishTransform(user, XN_SKEL_LEFT_ELBOW, frame_id, "left_elbow");publishTransform(user, XN_SKEL_LEFT_HAND, frame_id, "left_hand");publishTransform(user, XN_SKEL_RIGHT_SHOULDER, frame_id, "right_shoulder");publishTransform(user, XN_SKEL_RIGHT_ELBOW, frame_id, "right_elbow");publishTransform(user, XN_SKEL_RIGHT_HAND, frame_id, "right_hand");publishTransform(user, XN_SKEL_LEFT_HIP, frame_id, "left_hip");publishTransform(user, XN_SKEL_LEFT_KNEE, frame_id, "left_knee");publishTransform(user, XN_SKEL_LEFT_FOOT, frame_id, "left_foot");publishTransform(user, XN_SKEL_RIGHT_HIP, frame_id, "right_hip");publishTransform(user, XN_SKEL_RIGHT_KNEE, frame_id, "right_knee");publishTransform(user, XN_SKEL_RIGHT_FOOT, frame_id, "right_foot");}

}#define CHECK_RC(nRetVal, what) \if (nRetVal != XN_STATUS_OK) \{ \ROS_ERROR("%s failed: %s", what, xnGetStatusString(nRetVal));\return nRetVal; \}else{ \ROS_INFO("%s OK: %s", what, xnGetStatusString(nRetVal)) ; \} \int main(int argc, char **argv) {ros::init(argc, argv, "openni_tracker");ros::NodeHandle nh;//配置文件的路径string configFilename = ros::package::getPath("openni_tracker") + "/openni_tracker.xml";ROS_INFO("configName==%s",configFilename.c_str());XnStatus nRetVal = g_Context.InitFromXmlFile(configFilename.c_str());CHECK_RC(nRetVal, "InitFromXml");/*搜索指定类型的现有已创建节点并返回其引用。* 参数1:指定搜索的类型* 参数2:现有已创建节点的引用*/nRetVal = g_Context.FindExistingNode(XN_NODE_TYPE_DEPTH, g_DepthGenerator);CHECK_RC(nRetVal, "Find depth generator");nRetVal = g_Context.FindExistingNode(XN_NODE_TYPE_USER, g_UserGenerator);if (nRetVal != XN_STATUS_OK) {nRetVal = g_UserGenerator.Create(g_Context);if (nRetVal != XN_STATUS_OK) {ROS_ERROR("NITE is likely missing: Please install NITE >= 1.5.2.21. Check the readme for download information. Error Info: User generator failed: %s", xnGetStatusString(nRetVal));return nRetVal;}}if (!g_UserGenerator.IsCapabilitySupported(XN_CAPABILITY_SKELETON)) {ROS_INFO("Supplied user generator doesn't support skeleton");return 1;}XnCallbackHandle hUserCallbacks;/*“新用户”和“失去用户”事件的注册。参数:User_NewUser,检测到新用户回调函数参数:User_LostUser,检测到失去用户回调*/g_UserGenerator.RegisterUserCallbacks(User_NewUser, User_LostUser, NULL, hUserCallbacks);XnCallbackHandle hCalibrationCallbacks;/*注册校准开始和结束事件* 参数:UserCalibration_CalibrationStart ,校准开始回调函数* 参数:UserCalibration_CalibrationEnd ,校准结束回调函数*/g_UserGenerator.GetSkeletonCap().RegisterCalibrationCallbacks(UserCalibration_CalibrationStart, UserCalibration_CalibrationEnd, NULL, hCalibrationCallbacks);//是否需要对特定姿势进行校准,适用于所有用户if (g_UserGenerator.GetSkeletonCap().NeedPoseForCalibration()) {ROS_INFO("g_bNeedPose = TRUE");//此处将g_bNeedPose 赋值 1;g_bNeedPose = TRUE;//是否支持特殊姿势校准if (!g_UserGenerator.IsCapabilitySupported(XN_CAPABILITY_POSE_DETECTION)) {ROS_INFO("Pose required, but not supported");return 1;}XnCallbackHandle hPoseCallbacks;/*注册检测姿势事件* 参数:UserPose_PoseDetected 开始检测姿势回调函数* 第二个参数:检测姿势结束回调函数* */g_UserGenerator.GetPoseDetectionCap().RegisterToPoseCallbacks(UserPose_PoseDetected, NULL, NULL, hPoseCallbacks);ROS_INFO("NAME1==%s",g_strPose);/*** 此方法仅在NeedPoseForCalibration()方法返回TRUE时使用,* 并且返回姿势名称。*/g_UserGenerator.GetSkeletonCap().GetCalibrationPose(g_strPose);ROS_INFO("NAME2==%s",g_strPose);}/** 设置骨架轮廓。 骨架配置文件指定哪些关节处于活动状态,哪些关节处于非活动状态。* UserGenerator节点仅为活动关节生成输出数据。* 此配置文件适用于@ref UserGenerator节点生成的所有骨架。* 参数:指定要设置的配置文件* 配置文件作用是使程序能够选择生成所有关节,还是只是上或下躯干,或只是头和手。* 使用SetJointActive()方法选择配置文件,使其具有比此方法更好的识别率,例如,能够选择特定的手或脚。* 这个函数只提供程序需要的数据,因此执行效率和相应时间由很大的提升* */g_UserGenerator.GetSkeletonCap().SetSkeletonProfile(XN_SKEL_PROFILE_ALL);//确保所有创建的@ref dict_gen节点“生成器节点”正在生成数据nRetVal = g_Context.StartGeneratingAll();CHECK_RC(nRetVal, "StartGenerating");ros::Rate r(30);ros::NodeHandle pnh("~");string frame_id("xtion_depth_frame");pnh.getParam("camera_frame_id", frame_id);while (ros::ok()) {/*将上下文中的所有生成器节点更新为其最新的可用数据,* 等待所有节点有新的数据可用。我们要确保所有创建的生成器节点正在生成数据,不然会一直在等待*/g_Context.WaitAndUpdateAll();publishTransforms(frame_id);r.sleep();}g_Context.Shutdown();return 0;

}

追踪流程大体如下:

1.注册检测到人和人消失的回调函数

2.注册注册检测姿势,校准开始结束回调函数

3.判断是否需要检测特定姿势,是否支持该功能

4.获取姿势的名字(”Psi”)

5.设置骨架轮廓配置。

6.启动所有生成器节点

7.等待所有节点有新的数据可用。期间执行相应的回调函数(前面注册过)。

7.1 检测到user,开始姿势检测

7.2 姿势检测到之后开始校准

7.3 校准成功,开始追踪,失败则重新检测姿势。

8.tf转换,在rviz中显示。

运行openni_tracker

编译完成后,

启动openni camera:$roslaunch handsfree_bringup xtion_fake_laser_openni2.launch(我用的是handfree)

在另一个终端中运行: rosrun openni_tracker openni_tracker

启动rviz:rosrun rviz rviz -d rospack find rbx1_vision/skeleton_frames.rviz(这里也可以用自己的或者新建一个)。

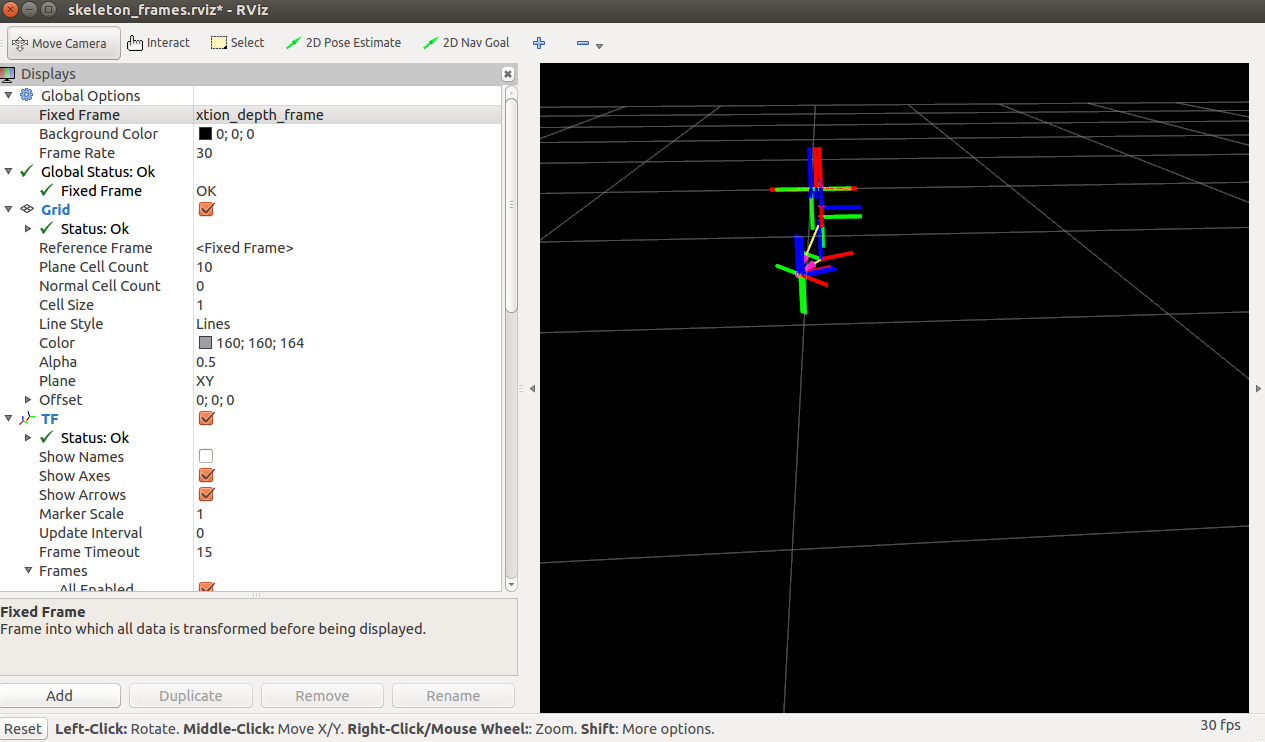

rviz显示如图:

将Fixed Frame 改为程序中的frameid,我代码中写的是xtion_depth_frame

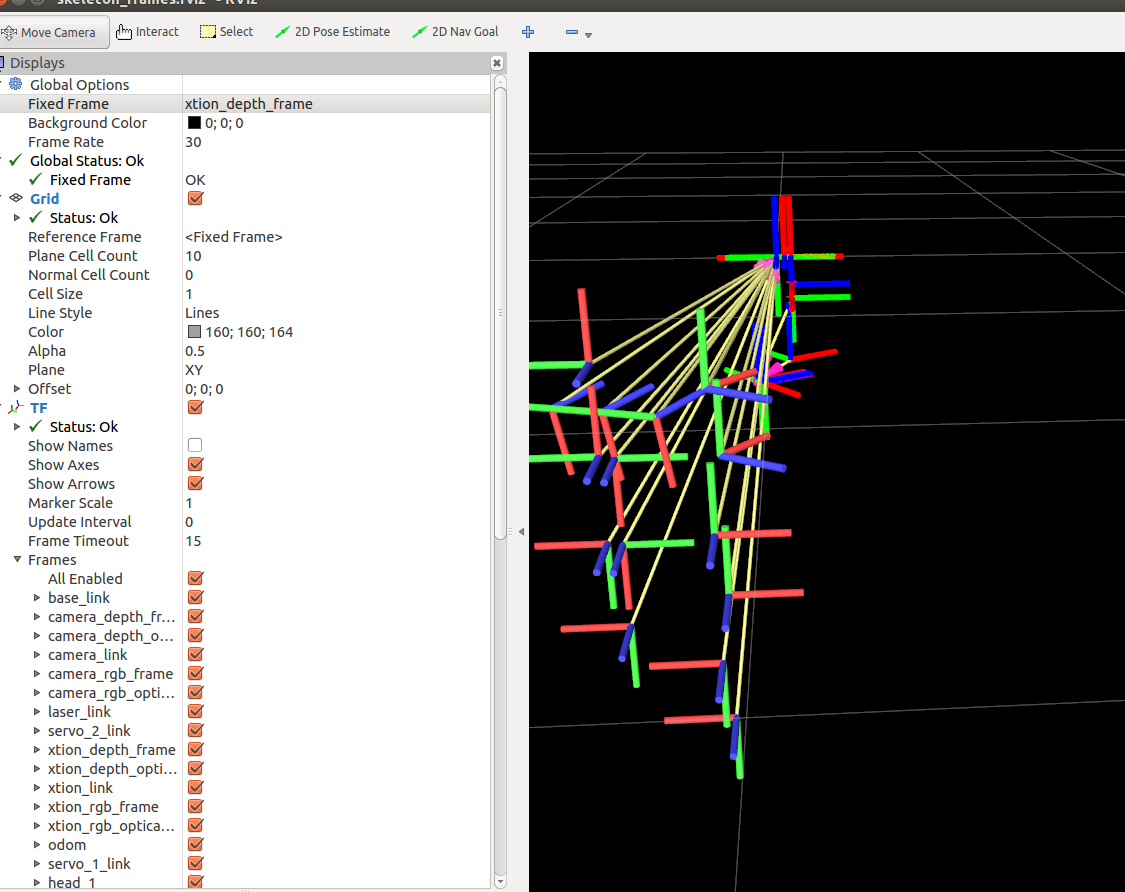

站在摄像头前方,摆出投降的姿势,1-5s之后,会出现你的关节点图。

最终效果图:

这篇关于ROS openni_tracker:骨架追踪的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!