This article covers running ORB-SLAM2 under ROS on Ubuntu 18.04 with a RealSense D435i camera.

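Contents

Environment setup

OpenCV, ROS

Eigen3

Pangolin

PCL

Preparation before running the code

A simple run with a dataset

Running with a rosbag under ROS

Switching to a colored point cloud

Saving the map

Adding the RealSense D435i camera

Installation

Calibration

Related libraries

Running with the RealSense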

Thanks to the article "ORB SLAM 2 based on the RealSense D435i depth camera" on Jianshu (简书).

Before this I had been trying with a Kinect, but the point cloud never showed up.

Environment setup

OpenCV, ROS

See the post "Ubuntu installation and reinstallation" (Ubuntu安装、重装) on echo_gou's CSDN blog.

Eigen3

wget https://gitlab.com/libeigen/eigen/-/archive/3.2.10/eigen-3.2.10.tar.gz
tar -xvzf eigen-3.2.10.tar.gz
cd eigen-3.2.10
mkdir build
cd build
cmake ..
sudo make install

Pangolin

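Note: only version 0.5 of Pangolin works here; with other versions, compiling the ORB-SLAM2 code fails with errors.

The impressive real-time mapping views in ORB SLAM 2 are drawn with Pangolin.

Pangolin is a lightweight development library that handles OpenGL display, interaction, and so on.

Pangolin's core dependencies are OpenGL and GLEW.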

Install pip: sudo apt install python-pip

# OpenGL
sudo apt install libgl1-mesa-dev
# Glew
sudo apt install libglew-dev
# CMake
sudo apt install cmake
# python
sudo apt install libpython2.7-dev
sudo python -m pip install numpy pyopengl Pillow pybind11
# Wayland
sudo apt install pkg-config
sudo apt install libegl1-mesa-dev libwayland-dev libxkbcommon-dev wayland-protocols
# FFMPEG (For video decoding and image rescaling)
sudo apt install ffmpeg libavcodec-dev libavutil-dev libavformat-dev libswscale-dev libavdevice-dev
# DC1394 (For firewire input)
sudo apt install libdc1394-22-dev libraw1394-dev
# libjpeg, libpng, libtiff, libopenexr (For reading still-image sequences)
sudo apt install libjpeg-dev libpng-dev libtiff5-dev libopenexr-dev
# build Pangolin
#git clone https://github.com/stevenlovegrove/Pangolin.git
# The code must be fetched explicitly at version 0.5:
git clone --recursive https://github.com/stevenlovegrove/Pangolin.git -b v0.5
cd Pangolin
mkdir build
cd build
cmake ..
cmake --build .   # note the final dot

Test:

cd ~/Pangolin/build/examples/HelloPangolin
./HelloPangolin

PCL

sudo apt install libpcl-dev

Preparation before running the code

Workspace:

mkdir -p ~/catkin_ws/src
cd ~/catkin_ws/src
catkin_init_workspace
cd ~/catkin_ws
catkin_make

Code:

git clone https://github.com/gaoxiang12/ORBSLAM2_with_pointcloud_map.git
git clone https://github.com/raulmur/ORB_SLAM2.git ORB_SLAM2

The first repository contains a compressed archive and a folder; we only need its subfolder ORB_SLAM2_modified.

Copy the Vocabulary folder from the original ORB SLAM2 repo, together with the ORBvoc.txt.tar.gz file inside it, into the ORB_SLAM2_modified directory. Then delete these four build folders:

~/ORB_SLAM2_modified/build
~/ORB_SLAM2_modified/Thirdparty/DBoW2/build
~/ORB_SLAM2_modified/Thirdparty/g2o/build
~/ORB_SLAM2_modified/Examples/ROS/ORB_SLAM2/build

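Append the corresponding path to the end of /opt/ros/melodic/setup.bash, i.e.:

export ROS_PACKAGE_PATH=${ROS_PACKAGE_PATH}:xxx/xxx/catkin_ws/src/ORB_SLAM2_modified/Examples/ROS

Remember to source /opt/ros/melodic/setup.bash before every run, or put the export above in .bashrc instead. With several ORB-SLAM codebases on one machine this easily goes wrong, so always use the command below to check that the path was exported successfully.

Check your ROS package path:

echo $ROS_PACKAGE_PATH

The output must contain the ROS path you just added, i.e. xxx/xxx/catkin_ws/src/ORB_SLAM2_modified/Examples/ROS; only then can the node be run under ROS.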
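Eyeballing the echo output is error-prone when several ORB-SLAM copies have similar paths (for example ORB_SLAM2 vs ORB_SLAM2-2). Below is a minimal sketch of an exact check: ROS_PACKAGE_PATH is a ':'-separated list, so we compare whole entries rather than substrings. The target path in the `__main__` part is a hypothetical example; replace it with your own workspace.

```python
import os


def package_path_registered(ros_package_path: str, wanted: str) -> bool:
    """Return True if `wanted` is one of the ':'-separated entries.

    Trailing slashes are ignored, so '.../Examples/ROS/' matches '.../Examples/ROS'.
    Whole entries are compared, so '.../ROS2' does NOT count as '.../ROS'.
    """
    wanted_norm = wanted.rstrip("/")
    entries = [e.rstrip("/") for e in ros_package_path.split(":") if e]
    return wanted_norm in entries


if __name__ == "__main__":
    # Hypothetical location: adjust to where ORB_SLAM2_modified actually lives.
    target = os.path.expanduser("~/catkin_ws/src/ORB_SLAM2_modified/Examples/ROS")
    current = os.environ.get("ROS_PACKAGE_PATH", "")
    print("registered" if package_path_registered(current, target) else "missing")
```

Run it in the same shell where you sourced setup.bash, since the check reads the environment of the current process.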

Modify ~/ORB_SLAM2_modified/Examples/ROS/ORB_SLAM2/CMakeLists.txt to add PCL (the lines marked 1 to 5 are the additions):

...
find_package(Eigen3 3.1.0 REQUIRED)
find_package(Pangolin REQUIRED)
find_package( PCL 1.7 REQUIRED )   ####### 1

include_directories(
${PROJECT_SOURCE_DIR}
${PROJECT_SOURCE_DIR}/../../../
${PROJECT_SOURCE_DIR}/../../../include
${Pangolin_INCLUDE_DIRS}
${PCL_INCLUDE_DIRS}   ####### 2
)

add_definitions( ${PCL_DEFINITIONS} )   ####### 3
link_directories( ${PCL_LIBRARY_DIRS} )   ####### 4

set(LIBS
${OpenCV_LIBS}
${EIGEN3_LIBS}
${Pangolin_LIBRARIES}
${PROJECT_SOURCE_DIR}/../../../Thirdparty/DBoW2/lib/libDBoW2.so
${PROJECT_SOURCE_DIR}/../../../Thirdparty/g2o/lib/libg2o.so
${PROJECT_SOURCE_DIR}/../../../lib/libORB_SLAM2.so
${PCL_LIBRARIES}   ####### 5
)
...

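Because I have other ORB-SLAM code besides this one, the package folders in the ROS workspace need distinct names. I renamed the folder under ORB_SLAM2_modified/Examples/ROS/ to ORB_SLAM2-2 and changed the corresponding path in build_ros.sh.

Then, in ORB_SLAM2_modified, run:

./build.sh
./build_ros.sh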

A simple run with a dataset

Download the dataset:

rgbd_dataset_freiburg1_xyz

associate.py can be downloaded from:

cvpr-ros-pkg - Revision 232: /trunk/rgbd_benchmark/rgbd_benchmark_tools/src/rgbd_benchmark_tools

Copy associate.py into the ORB_SLAM2 main folder and, in that directory, run the following to associate the RGB frames with the depth frames:

python associate.py data/rgbd_dataset_freiburg1_xyz/rgb.txt data/rgbd_dataset_freiburg1_xyz/depth.txt > data/rgbd_dataset_freiburg1_xyz/associations.txt

Run:

./bin/rgbd_tum Vocabulary/ORBvoc.txt Examples/RGB-D/TUM1.yaml data/rgbd_dataset_freiburg1_xyz data/rgbd_dataset_freiburg1_xyz/associations.txt
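The RGB and depth streams of the TUM dataset are timestamped independently, so associations.txt pairs each color frame with a depth frame taken at nearly the same moment. The sketch below is a simplified re-implementation of that idea, not the original associate.py: it assumes the default 0.02 s tolerance and omits the time-offset option of the real script. All candidate pairs inside the tolerance are sorted by time difference, and each frame is used at most once.

```python
from typing import Dict, List, Tuple


def read_file_list(text: str) -> Dict[float, str]:
    """Parse TUM-style 'timestamp filename' lines, skipping comments and blanks."""
    entries: Dict[float, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        stamp, filename = line.split()[:2]
        entries[float(stamp)] = filename
    return entries


def associate(rgb: Dict[float, str], depth: Dict[float, str],
              max_difference: float = 0.02) -> List[Tuple[float, float]]:
    """Greedily pair RGB and depth stamps, closest pairs first."""
    # Every candidate pair within the tolerance, sorted by time difference.
    candidates = sorted(
        (abs(a - b), a, b)
        for a in rgb
        for b in depth
        if abs(a - b) < max_difference
    )
    used_rgb, used_depth = set(), set()
    matches = []
    for _, a, b in candidates:
        if a in used_rgb or b in used_depth:
            continue  # each frame may appear in at most one pair
        used_rgb.add(a)
        used_depth.add(b)
        matches.append((a, b))
    return sorted(matches)


def format_associations(rgb: Dict[float, str], depth: Dict[float, str],
                        matches: List[Tuple[float, float]]) -> str:
    """One 'rgb_stamp rgb_file depth_stamp depth_file' line per pair."""
    return "\n".join(f"{a:f} {rgb[a]} {b:f} {depth[b]}" for a, b in matches)
```

Processing closest pairs first means a depth frame goes to the RGB frame nearest in time, even if another RGB frame is also within the tolerance.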

Running with a rosbag under ROS

Copy TUM1.yaml, name the copy TUM1_ROS.yaml, and change its parameter to DepthMapFactor: 1.0

Then run (each command in its own terminal):

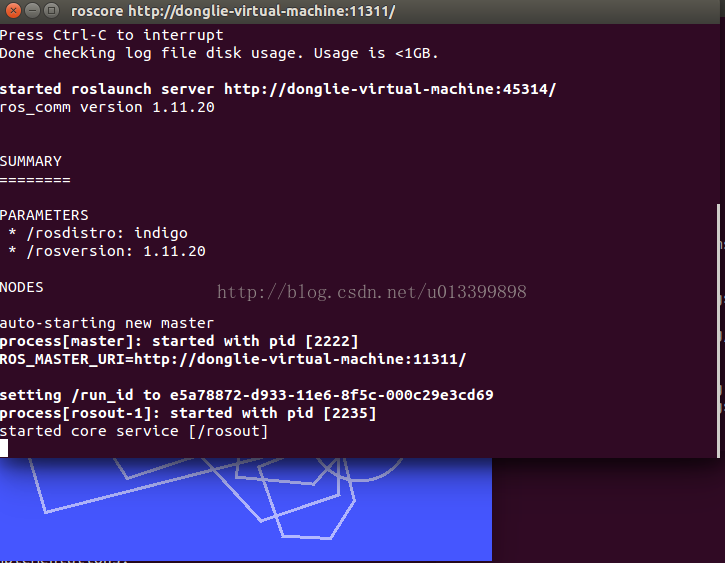

roscore

rosrun ORB_SLAM2-2 RGBD Vocabulary/ORBvoc.bin Examples/RGB-D/TUM1_ROS.yaml

rosbag play data/rgbd_dataset_freiburg1_xyz.bag /camera/rgb/image_color:=/camera/rgb/image_raw /camera/depth/image:=/camera/depth_registered/image_raw
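Why DepthMapFactor differs between the configs: as I understand it, the depth PNGs in the TUM dataset folder store 16-bit values scaled by 5000 (hence 5000.0 in TUM1.yaml), the TUM rosbag publishes depth as 32-bit floats already in meters (hence 1.0 here), and the RealSense aligned depth topic is 16-bit in millimeters (hence 1000.0 in the RealSense yaml later on). ORB-SLAM2 divides raw depth by this factor. The sketch below shows that conversion; the sample pixel values are made up for illustration.

```python
def depth_to_meters(raw_value: float, depth_map_factor: float) -> float:
    """Convert a raw depth pixel to meters by dividing by DepthMapFactor.

    A raw value of 0 means 'no measurement' and stays 0.
    """
    if depth_map_factor <= 0:
        raise ValueError("DepthMapFactor must be positive")
    return raw_value / depth_map_factor


# Hypothetical pixels that all encode the same physical depth of 1 m.
samples = {
    "TUM PNG (uint16, factor 5000)": (5000, 5000.0),
    "TUM rosbag (float32 meters, factor 1.0)": (1.0, 1.0),
    "RealSense aligned depth (uint16 mm, factor 1000)": (1000, 1000.0),
}

if __name__ == "__main__":
    for name, (raw, factor) in samples.items():
        print(f"{name}: {depth_to_meters(raw, factor):.3f} m")
```

With the wrong factor the whole map is scaled: reading the float-meter bag with 5000.0 would shrink every depth by a factor of 5000.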

Switching to a colored point cloud:

Modify Tracking.h:

Frame mCurrentFrame;
cv::Mat mImRGB;   // declared (around line 105)
cv::Mat mImGray;

and two places in Tracking.cc:

cv::Mat Tracking::GrabImageRGBD(const cv::Mat &imRGB, const cv::Mat &imD, const double &timestamp)
{
    mImRGB = imRGB;   // Modified place 1 (around line 210)
    mImGray = imRGB;
    // ...

mpPointCloudMapping->insertKeyFrame( pKF, this->mImRGB, this->mImDepth );   // Modified place 2 (around line 1142): change mImGray to mImRGB

After running build.sh and build_ros.sh, run the previous command again:

./bin/rgbd_tum Vocabulary/ORBvoc.txt Examples/RGB-D/TUM1.yaml data/rgbd_dataset_freiburg1_xyz data/rgbd_dataset_freiburg1_xyz/associations.txt
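Passing mImRGB instead of mImGray matters because the point-cloud mapping turns each keyframe's pixels into 3-D points and copies the pixel color into each point; a single-channel gray image has no color to copy. The following is a simplified sketch of that pinhole back-projection, not the project's actual code: depth is assumed to be in meters, the color image is assumed to be in OpenCV's BGR order, and the 0.01 to 10 m validity range and pixel `step` are illustrative defaults.

```python
from typing import List, Sequence, Tuple

ColoredPoint = Tuple[float, float, float, int, int, int]  # x, y, z, r, g, b


def back_project(depth: Sequence[Sequence[float]],
                 color: Sequence[Sequence[Tuple[int, int, int]]],
                 fx: float, fy: float, cx: float, cy: float,
                 step: int = 1, min_d: float = 0.01,
                 max_d: float = 10.0) -> List[ColoredPoint]:
    """Turn a depth image (meters) plus a BGR color image into colored 3-D points."""
    points: List[ColoredPoint] = []
    for v in range(0, len(depth), step):          # v: image row
        for u in range(0, len(depth[v]), step):   # u: image column
            d = depth[v][u]
            if d < min_d or d > max_d:
                continue  # invalid or out-of-range depth
            # Pinhole model: x = (u - cx) * z / fx, y = (v - cy) * z / fy
            x = (u - cx) * d / fx
            y = (v - cy) * d / fy
            b, g, r = color[v][u]
            points.append((x, y, d, r, g, b))
    return points
```

Raising `step` skips pixels, which trades point density for speed.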

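Saving the map

Modify ~/ORB_SLAM2_modified/src/pointcloudmapping.cc by adding the header include and this single save call:

#include <pcl/io/pcd_io.h>

pcl::io::savePCDFileBinary("vslam.pcd", *globalMap);   // only this line needs to be added

Run ./build.sh, then run any of the SLAM commands above again, and a point-cloud file named vslam.pcd is produced in ~/ORB_SLAM2_modified (the file name is a relative path, so it is written to the directory the program is started from).

Then install the viewer: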

sudo apt-get install pcl-tools

pcl_viewer vslam.pcd
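To see what a PCD file looks like, or to produce a tiny test file for pcl_viewer, here is a sketch that writes the ASCII variant of the PCD v0.7 format with only x y z fields. savePCDFileBinary uses the same header layout but stores the points in binary (DATA binary), and since the map is colored the real vslam.pcd should also carry a color field.

```python
from typing import Iterable, Tuple


def to_ascii_pcd(points: Iterable[Tuple[float, float, float]]) -> str:
    """Serialize XYZ points as an ASCII PCD v0.7 file (unorganized cloud)."""
    pts = list(points)
    header = [
        "# .PCD v0.7 - Point Cloud Data file format",
        "VERSION 0.7",
        "FIELDS x y z",
        "SIZE 4 4 4",          # 4-byte fields
        "TYPE F F F",          # floating point
        "COUNT 1 1 1",
        f"WIDTH {len(pts)}",   # unorganized: width = number of points
        "HEIGHT 1",
        "VIEWPOINT 0 0 0 1 0 0 0",
        f"POINTS {len(pts)}",
        "DATA ascii",
    ]
    body = [f"{x} {y} {z}" for x, y, z in pts]
    return "\n".join(header + body) + "\n"
```

Writing the returned string to a file such as test.pcd (a hypothetical name) and running pcl_viewer test.pcd should show the points.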

Adding the RealSense D435i camera

Installation

sudo apt-key adv --keyserver keys.gnupg.net --recv-key F6E65AC044F831AC80A06380C8B3A55A6F3EFCDE || sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-key F6E65AC044F831AC80A06380C8B3A55A6F3EFCDE

sudo add-apt-repository "deb http://realsense-hw-public.s3.amazonaws.com/Debian/apt-repo bionic main" -u

sudo apt-get install librealsense2-dkms

sudo apt-get install librealsense2-utils

sudo apt-get install librealsense2-dev

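sudo apt-get install librealsense2-dbg

If the two key and repository commands above fail, run these two instead (note: the second one may need a VPN or proxy to reach the server):

sudo apt-key adv --keyserver keys.gnupg.net --recv-key C8B3A55A6F3EFCDE || sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-key C8B3A55A6F3EFCDE

sudo add-apt-repository "deb https://librealsense.intel.com/Debian/apt-repo $(lsb_release -cs) main" -u

The SDK packages install into system paths such as /usr/lib and /usr/bin; the ROS package installed further below is what ends up under /opt/ros/melodic/share/.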

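Test:

realsense-viewer

Run:

modinfo uvcvideo | grep "version:"

The output should include the string "realsense", which shows that the patched uvcvideo module is installed.

Run:

dkms status

The librealsense2-dkms module should be listed as installed.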

Calibration

....

Related libraries:

You need the ROS RealSense package ros-$ROS_VER-realsense2-camera. Note that this ROS package does not depend on the RealSense SDK 2.0; the two are completely independent. So if you only want to use the RealSense in ROS, you do not need to install the SDK above first.

sudo apt-get install ros-melodic-realsense2-camera
sudo apt-get install ros-melodic-realsense2-description

Running with the RealSense

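Edit the rs_camera.launch file in /opt/ros/melodic/share/realsense2_camera/launch/ (this needs sudo) and set the two arguments enable_sync and align_depth to true.

For a detailed explanation of the parameters, see: https://github.com/IntelRealSense/realsense-ros

The former (enable_sync) time-synchronizes the data from the different sensors (depth, RGB, IMU), i.e. gives them the same timestamp.

The latter (align_depth) adds several rostopics; the one we care about is /camera/aligned_depth_to_color/image_raw.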

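The RealSense.yaml file also needs to be configured. I used parameters found online; calibrating your own camera gives more accurate values. Also, most yaml files found online have no point-cloud parameters, so if you want to use the point cloud you have to add the corresponding point-cloud settings yourself.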
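Among the point-cloud settings in the yaml below, PointCloudMapping.Resolution appears (as far as I can tell from the point-cloud mapping code) to be the leaf size of a PCL voxel-grid filter: every point inside one cube of that size is replaced by a single point, so 0.01 keeps roughly one point per 1 cm cube. meank and thresh look like the neighbor count and standard-deviation multiplier of a statistical outlier filter, but whether they are read depends on the version of the code you use. The sketch below reproduces only the voxel-grid idea with per-voxel centroids; it is an illustration, not PCL's implementation.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def voxel_downsample(points: List[Point], leaf: float) -> List[Point]:
    """Replace all points that fall into the same cubic voxel by their centroid."""
    if leaf <= 0:
        raise ValueError("leaf size must be positive")
    buckets: Dict[Tuple[int, ...], List[Point]] = defaultdict(list)
    for p in points:
        # Integer voxel index along each axis.
        key = tuple(math.floor(c / leaf) for c in p)
        buckets[key].append(p)
    result: List[Point] = []
    for key in sorted(buckets):
        pts = buckets[key]
        n = len(pts)
        result.append(tuple(sum(p[i] for p in pts) / n for i in range(3)))
    return result
```

A smaller leaf keeps more detail but makes the global map larger and slower to display.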

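The following commands print the RealSense intrinsics, which you can use to fill in the yaml file (run each in its own terminal):

roscore
roslaunch realsense2_camera rs_rgbd.launch
rostopic echo /camera/color/camera_info

If rgbd_launch cannot be found: sudo apt-get install ros-melodic-rgbd-launch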
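camera_info prints K as a row-major 3x3 matrix [fx, 0, cx, 0, fy, cy, 0, 0, 1] and D as the distortion coefficients, assumed here to follow the plumb_bob order [k1, k2, p1, p2, k3]. ORB-SLAM2 reads the fifth coefficient as Camera.k3. The sketch maps these fields onto the yaml keys and also computes Camera.bf = baseline × fx, as the yaml comment describes. With fx ≈ 909.21 and the 50 mm baseline this gives bf ≈ 45.46, whereas the yaml below uses 30, which would match an fx of about 600 (a lower-resolution stream). To my reading of ORB-SLAM2's Tracking.cc, the close/far depth threshold is bf × ThDepth / fx, so bf = 30 with ThDepth = 40 gives about 1.32 m instead of 2 m. Treat these numbers as a sanity check, not as calibrated values.

```python
from typing import Dict, Sequence


def orbslam_camera_params(K: Sequence[float], D: Sequence[float],
                          width: int, height: int,
                          baseline_m: float) -> Dict[str, float]:
    """Map sensor_msgs/CameraInfo fields to ORB-SLAM2 yaml keys."""
    if len(K) != 9:
        raise ValueError("K must have 9 entries (row-major 3x3)")
    fx, fy, cx, cy = K[0], K[4], K[2], K[5]
    d = list(D) + [0.0] * (5 - len(D))  # pad an empty or short D with zeros
    return {
        "Camera.fx": fx,
        "Camera.fy": fy,
        "Camera.cx": cx,
        "Camera.cy": cy,
        "Camera.k1": d[0],
        "Camera.k2": d[1],
        "Camera.p1": d[2],
        "Camera.p2": d[3],
        "Camera.k3": d[4],
        "Camera.width": width,
        "Camera.height": height,
        "Camera.bf": baseline_m * fx,  # baseline (m) times fx
    }


def close_far_threshold_m(bf: float, fx: float, th_depth: float) -> float:
    """Depth (m) that separates 'close' from 'far' points: bf * ThDepth / fx."""
    return bf * th_depth / fx
```

Printing each key and value of the returned dict as `key: value` gives lines you can paste into the Camera section of the yaml.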

%YAML:1.0

#--------------------------------------------------------------------------------------------
# Camera Parameters. Adjust them!
#--------------------------------------------------------------------------------------------

# Camera calibration and distortion parameters (OpenCV)
Camera.fx: 909.2099609375
Camera.fy: 908.0496215820
Camera.cx: 630.6794433593
Camera.cy: 371.4115905761

Camera.k1: 0.0
Camera.k2: 0.0
Camera.p1: 0.0
Camera.p2: 0.0
Camera.k3: 0.0

Camera.width: 1280
Camera.height: 720

# Camera frames per second
Camera.fps: 30.0

# IR projector baseline times fx (approx.)
# bf = baseline (in meters) * fx; the D435i baseline is 50 mm
Camera.bf: 30.0

# Color order of the images (0: BGR, 1: RGB. It is ignored if images are grayscale)
Camera.RGB: 1

# Close/Far threshold. Baseline times.
ThDepth: 40.0

# Depthmap values factor
DepthMapFactor: 1000.0

#--------------------------------------------------------------------------------------------
# ORB Parameters
#--------------------------------------------------------------------------------------------

# ORB Extractor: Number of features per image
ORBextractor.nFeatures: 1000

# ORB Extractor: Scale factor between levels in the scale pyramid
ORBextractor.scaleFactor: 1.2

# ORB Extractor: Number of levels in the scale pyramid
ORBextractor.nLevels: 8

# ORB Extractor: Fast threshold
# Image is divided in a grid. At each cell FAST are extracted imposing a minimum response.
# Firstly we impose iniThFAST. If no corners are detected we impose a lower value minThFAST
# You can lower these values if your images have low contrast
ORBextractor.iniThFAST: 20
ORBextractor.minThFAST: 7

#--------------------------------------------------------------------------------------------
# Viewer Parameters
#--------------------------------------------------------------------------------------------
Viewer.KeyFrameSize: 0.05
Viewer.KeyFrameLineWidth: 1
Viewer.GraphLineWidth: 0.9
Viewer.PointSize: 2
Viewer.CameraSize: 0.08
Viewer.CameraLineWidth: 3
Viewer.ViewpointX: 0
Viewer.ViewpointY: -0.7
Viewer.ViewpointZ: -1.8
Viewer.ViewpointF: 500

# Point-cloud parameters
PointCloudMapping.Resolution: 0.01
meank: 50
thresh: 2.0

Start the camera:

roslaunch realsense2_camera rs_rgbd.launch

Run:

rosrun ORB_SLAM2-2 RGBD Vocabulary/ORBvoc.bin Examples/RGB-D/RealSense.yaml /camera/rgb/image_raw:=/camera/color/image_raw /camera/depth_registered/image_raw:=/camera/aligned_depth_to_color/image_raw
