FouriDown: Factoring Down-Sampling into Shuffling and Superposing

Abstract

https://openreview.net/pdf?id=nCwStXFDQu

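Spatial down-sampling techniques, such as strided convolution, Gaussian down-sampling, and nearest-neighbor down-sampling, are essential in deep neural networks. In this paper, we revisit the working mechanism of the spatial down-sampling family and analyze the biased effects caused by the static weighting strategy used in previous approaches. To overcome this limitation, we propose a novel down-sampling paradigm in the Fourier domain, abbreviated as FouriDown, which unifies existing down-sampling techniques. Inspired by the signal sampling theorem, we parameterize the non-parametric static weighting down-sampling operator as a learnable and context-adaptive operator within a unified Fourier function. Specifically, we organize the corresponding frequency positions of the 2D plane in a physically closed manner within a single channel dimension. We then perform point-wise channel shuffling based on an indicator that determines whether a channel's signal frequency bin is susceptible to aliasing, which keeps the learning of the weighting parameters consistent. FouriDown, as a generic operator, comprises four key components: the 2D discrete Fourier transform, context shuffling rules, Fourier weighting-adaptive superposing rules, and the 2D inverse Fourier transform. These components can be easily integrated into existing image restoration networks. To demonstrate the efficacy of FouriDown, we conduct extensive experiments on image de-blurring and low-light image enhancement. The results consistently show that FouriDown can provide significant performance improvements. The code is publicly available at https://github.com/zqcrafts/FouriDown to facilitate further exploration and application of FouriDown.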

1 Introduction

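Down-sampling techniques [1,2,3] play a vital role in deep neural networks thanks to their advantages in enlarging the receptive field, extracting hierarchical features, improving computational efficiency, and handling scale and shift variations. However, according to the signal sampling theorem, existing down-sampling techniques such as strided convolution, Gaussian down-sampling, and nearest-neighbor down-sampling [1,4,5,6] inevitably reduce the sampling rate of the discrete signal. As a result, high-frequency content is unintentionally folded into the low-frequency region, producing frequency aliasing.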
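The fold-back described above can be seen on a toy 1-D signal. The following is a minimal sketch, not taken from the paper: the signal length `N` and the frequency `k_high` are arbitrary illustrative choices, and NumPy is assumed to be available.

```python
import numpy as np

N = 64                       # number of samples in the original signal
n = np.arange(N)
k_high = 24                  # cycles over the signal; above the post-decimation limit N/4 = 16
x = np.cos(2 * np.pi * k_high * n / N)

x_down = x[::2]              # stride-2 (nearest-neighbor) down-sampling, no pre-filter
spectrum = np.abs(np.fft.rfft(x_down))
k_alias = int(np.argmax(spectrum))   # dominant frequency bin after down-sampling

print(k_alias)               # the 24-cycle tone now appears as a lower-frequency tone
```

After down-sampling, only 32 samples remain, so at most 16 cycles can be represented. The 24-cycle component therefore shows up at 32 - 24 = 8 cycles and is indistinguishable from a genuine 8-cycle signal.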

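To address aliasing, a variety of strategies have been developed [7,8,9,10,11,12,13]. These strategies pre-process the signal with a low-pass filter, aiming to remove high-frequency information through different low-pass designs. Two common types are the ideal low-pass filter, which truncates high frequencies in the Fourier domain, and the Gaussian low-pass filter, which gradually attenuates frequency components near the boundary. However, the ideal low-pass filter may introduce ringing artifacts due to spectral leakage, while the Gaussian low-pass filter may discard a large amount of edge information that is crucial for visual recognition tasks.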
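The trade-off between the two filter types can be illustrated on a 1-D step edge. This is only a sketch under assumed settings: the cut-off of 8 bins and the Gaussian width `sigma = 4.0` are hypothetical values chosen for the demonstration, not parameters from the paper.

```python
import numpy as np

N = 64
step = np.zeros(N)
step[N // 2:] = 1.0                        # a sharp edge; all values lie in [0, 1]

freqs = np.fft.fftfreq(N, d=1.0 / N)       # signed integer frequency index of each DFT bin
X = np.fft.fft(step)

cutoff = 8                                 # hypothetical cut-off, in DFT bins
ideal = np.fft.ifft(X * (np.abs(freqs) <= cutoff)).real

sigma = 4.0                                # hypothetical Gaussian width, in DFT bins
gauss = np.fft.ifft(X * np.exp(-freqs**2 / (2 * sigma**2))).real

print(ideal.max(), gauss.max())            # the ideal filter overshoots above 1; the Gaussian does not
```

The ideal filter overshoots the original value range near the edge (ringing), whereas the Gaussian filter stays within the range but spreads the edge over more samples.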

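Within the down-sampling family, popular methods rely on a static weighting strategy, which can lead to unintended bias (see Section 4.4). As shown in Figure 1, strided convolution and strided pooling variants apply the static template $w_{i}=[0.25,0.25,0.25,0.25]$ at the corresponding corner positions, whereas the ideal low-pass filter uses the weighting template $w_{i}=[0.25,0,0,0]$ (the proof is given in Appendix B). All of these weighting templates are applied at every coordinate position and are independent of the feature context. It is widely accepted that static sampling without context dependence is sub-optimal for vision tasks. Therefore, as illustrated in Figure 1, we aim to unify the different down-sampling methods and to obtain a better one by modeling the down-sampling rule in the Fourier domain as a learnable, context-adaptive parameterized function.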

In this study, we delve into the working mechanism of the spatial down-sampling family and analyze the biased effects resulting from the static weighting strategy used in existing down-sampling approaches. To solve the bias problem, we propose a novel down-sampling paradigm called FouriDown, which operates in the Fourier domain and adapts the feature sampling to the image context. Inspired by the signal sampling theorem, we parameterize the non-parametric static weighting down-sampling operator as a learnable and context-adaptive operator in a unified Fourier function. Drawing on this insight, we organize the corresponding frequency positions of the 2D plane so that they are physically closed in a single channel dimension. We then perform point-wise channel shuffling based on an indicator that determines whether a channel's signal frequency bin is prone to aliasing, thereby keeping the learning of the weighting parameters consistent. FouriDown, as a generic operator, comprises four key components: the 2D discrete Fourier transform, context shuffling rules, Fourier weighting-adaptive superposing rules, and the 2D inverse Fourier transform. These components can be readily integrated into existing image restoration networks in a plug-and-play manner. To verify its efficacy, we conduct extensive experiments across multiple computer vision tasks, including image de-blurring and low-light image enhancement. The results show that FouriDown consistently outperforms the baselines.

In conclusion, this work proposes a novel and unified framework for down-sampling research, with the following contributions.

- We provide the first exploration of the aliasing problem in deep neural networks, analyzing it from a spectrum perspective.

- To achieve dynamic frequency aliasing, we introduce a unified approach to down-sampling strategies within the Fourier function. Additionally, we propose a learnable and context-adaptive down-sampling operator based on the Nyquist signal sampling theorem.

- Our proposed down-sampling approach serves as a plug-and-play operator, consistently enhancing the performance of image restoration tasks, such as low-light enhancement and image deblurring.

2 Related Work

2.1 Traditional Down-Sampling

Downsampling is an important and common operator in computer vision [14,15,16,17,18,19,20,21,22,23,24,25], valued for enlarging the receptive field and reducing computational cost. Many models therefore incorporate downsampling so that the primary reconstruction components operate at a lower resolution. Moreover, with the emergence of increasingly compute-intensive large models, downsampling becomes especially crucial, particularly for high-resolution input images.

Previous downsampling methods often rely on local spatial neighborhood computations (e.g., bilinear, bicubic, and max-pooling), which perform decently across various tasks. However, these computations are fixed, which makes it hard to maintain consistent performance across different tasks. To address this, some methods adopt task-specific designs. For instance, several works [7,10,11,12] apply a Gaussian blur kernel before the downsampling convolution to combat aliasing and improve shift-invariance in classification tasks. Grabinski et al. [26,27] equip downsampling with an ideal low-pass filter or a Hamming filter to enhance model robustness and avoid overfitting.

2.2 Dynamic Down-Sampling

With the development of data-driven deep learning, some works [28,29,30,31,32,33] go beyond traditional down-sampling and introduce dynamic downsampling that adapts to different tasks, thereby generalizing better. For instance, Pixel-Shuffle [28] enables dynamic spatial neighborhood computation through the interaction between feature channels and spatial positions, restoring finer details more effectively. Kim et al. [29] propose task-aware image downsampling that supports upsampling for more efficient restoration.

In addition to dynamic neighborhood computation, dynamic strides have also gained widespread attention in recent years. For instance, Riad et al. [30] posit that the commonly adopted integer stride of 2 might not be optimal for downsampling. Consequently, they introduce learnable strides to explore a better trade-off between computational cost and performance. However, the stride is still spatially uniform, which might not be the best fit for images with uneven texture density. To address this issue, dynamic non-uniform sampling has attracted significant attention [31,32,33]. For example, Thavamani et al. [31] proposed a saliency-guided non-uniform sampling method aimed at reducing computation while retaining task-relevant image information.

In conclusion, most recent research focuses on dynamic neighborhood computation or dynamic strides for down-sampling, a paradigm that can be written as $\operatorname{Down}(s)$, where $s$ denotes the stride. In this work, we observe that methods based on this paradigm employ static frequency aliasing, which may hinder further progress towards effective downsampling. Learning dynamic frequency aliasing on top of the existing paradigm is challenging, however. To address this issue, we revisit downsampling from a spectral perspective and propose a novel paradigm, denoted $\operatorname{FouriDown}(s, w)$. This paradigm retains the stride parameter and introduces a new parameter $w$, which represents the weight of frequency aliasing during downsampling and is related to the stride. Based on this framework, we present an elegant and effective approach to downsampling with dynamic frequency aliasing, which yields notable performance improvements across multiple tasks and network architectures.

3 Method

Definitions. $f(x, y) \in \mathbb{R}^{H \times W \times C}$ is the 2-D discrete spatial signal, and the sampling rates along the $x$ and $y$ axes are $\Omega_{x}$ and $\Omega_{y}$, respectively. $F(u, v) \in \mathbb{R}^{H \times W \times C}$ is the Fourier transform of $f(x, y)$, where the maximum frequencies along the $u$ and $v$ axes are denoted $u_{\max}$ and $v_{\max}$, respectively. Moreover, $f^{\prime}(x, y) \in \mathbb{R}^{\frac{H}{2} \times \frac{W}{2} \times C}$ is the 2-strided down-sampled version of $f(x, y)$, and $F^{\prime}(u, v)$ is its Fourier transform.

Theorem-1. Shuffling and Superposing. Spatial down-sampling typically shrinks the maximum frequency that the signal can tolerate. Specifically, high frequencies fold back into new low frequencies and superpose onto the original low frequencies. Taking a 1-D signal as an illustration, the superposition of high and low frequencies in down-sampling can be formulated as

$$F^{\prime}(u)=\mathbb{S}\left(F(u), F\left(u+\frac{\Omega_{x}}{2}\right)\right) \quad \text{when} \quad u \in\left(0, \frac{\Omega_{x}}{2}\right)$$

where $\mathbb{S}$ is a superposing operator. Considering only the positive direction, $F\left(u+\frac{\Omega_{x}}{2}\right)$ is the high-frequency component and $F(u)$ is the low-frequency component.
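As a numerical sanity check of the 1-D relation, the sketch below instantiates the superposing operator as a plain average with factor 1/2, which is what ordinary stride-2 sampling yields under NumPy's DFT convention. Mapping the shift $\frac{\Omega_{x}}{2}$ to `N // 2` DFT bins is an assumption of this sketch, and the random test signal is arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 16
f = rng.standard_normal(N)

F = np.fft.fft(f)                       # spectrum of the original signal
F_down = np.fft.fft(f[::2])             # spectrum of the stride-2 down-sampled signal

# Superpose each bin of the first half with the bin N/2 positions further along.
folded = 0.5 * (F[: N // 2] + F[N // 2:])

print(np.allclose(F_down, folded))      # the two spectra agree
```

In other words, for plain striding every output bin is the equal-weight average of exactly two input bins. Those two-way weights are what a learnable superposing operator would replace.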

Theorem-2. Static Averaging Superposing. For an image, the spatial down-sampling operator with stride 2 is equivalent to dividing the Fourier spectrum into $2 \times 2$ equal parts and superposing them with an averaging factor of $\frac{1}{4}$. Let $F_{(i, j)}(u, v)$ be the sub-matrix of $F(u, v)$ obtained by equally dividing $F(u, v)$ into $2 \times 2$ partitions, with $i, j \in \{0,1\}$. Given that $\operatorname{Down}_{2}$ is the 2-strided down-sampling operator and IDFT is the inverse discrete Fourier transform, we have

$$\operatorname{Down}_{2}(f(x, y))=\operatorname{IDFT}\left(\frac{1}{4} \sum_{i=0}^{1} \sum_{j=0}^{1} F_{(i, j)}(u, v)\right)$$

The proof of the above theorem can be found in the Appendix, and examples for 1-D and 2-D signals are shown in Figure 2(a) and (b).
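Theorem-2 can be checked numerically on a random single-channel image. This is a sketch rather than the paper's proof. It assumes that $\operatorname{Down}_{2}$ keeps every second sample starting at index 0, and it partitions the spectrum in NumPy's default (unshifted) layout.

```python
import numpy as np

rng = np.random.default_rng(0)
H, W = 8, 12                              # any even sizes work for this check
f = rng.standard_normal((H, W))

F = np.fft.fft2(f)
h, w = H // 2, W // 2

# Divide the spectrum into 2 x 2 equal blocks F_(i,j) and average them with factor 1/4.
blocks = [F[i * h:(i + 1) * h, j * w:(j + 1) * w] for i in range(2) for j in range(2)]
superposed = 0.25 * sum(blocks)

down_via_fourier = np.fft.ifft2(superposed).real
down_spatial = f[::2, ::2]                # ordinary stride-2 down-sampling

print(np.allclose(down_via_fourier, down_spatial))
```

The agreement holds for any input, which illustrates why the theorem calls the averaging static: the four blocks always receive the same weight regardless of image content.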

3.1 Architecture Design

In this work, we argue that a static superposing strategy, such as the stride-based down-sampling in Theorem-2, might lead to biased effects. Motivated by the adaptive learning ability of CNNs, we aim to parameterize the non-parametric static superposing step as a learnable and context-adaptive operator in the Fourier domain.

Definition-2 (Shuffle-Invariance). Given an operator $z(\cdot)$ and different components $o_{1}, o_{2}, o_{3}, o_{4}$, the operator is shuffle-invariant if $z\left(o_{1}, o_{2}, o_{3}, o_{4}\right)=z\left(\operatorname{shuffle}\left(o_{1}, o_{2}, o_{3}, o_{4}\right)\right)$, where $\operatorname{shuffle}(\cdot)$ arbitrarily reorders the input components.

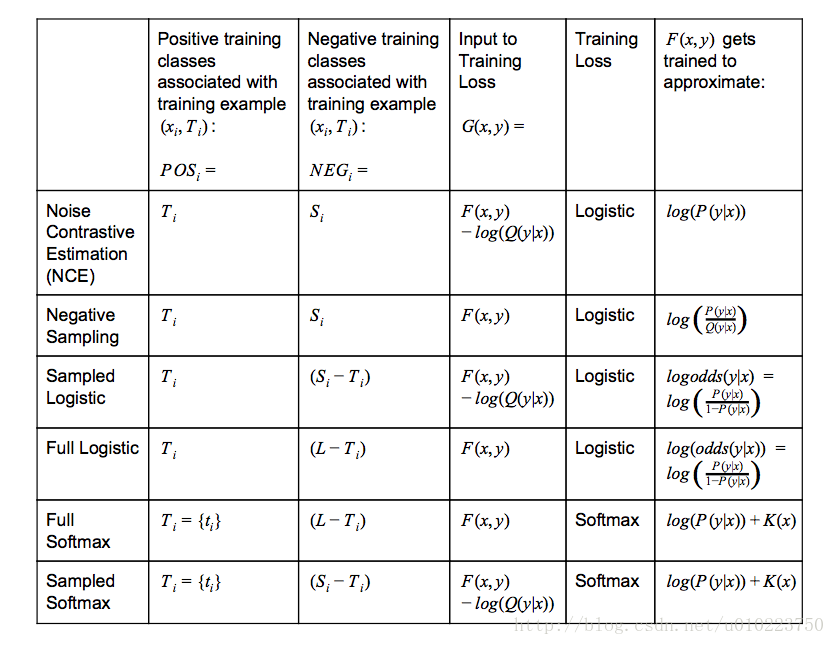

Note that the average operator in Theorem-2 is shuffle-invariant; for example, $\operatorname{Aver}(a, b, c, d)=\operatorname{Aver}(b, a, c, d)$. In contrast, the convolution operator is sensitive to the input order and does not have this property.
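The difference can be made concrete with four scalar components. In the sketch below, the weight vector standing in for a 1x1 convolution across four channels is a hypothetical example, not a learned value.

```python
import numpy as np

o = np.array([1.0, 2.0, 3.0, 4.0])           # components o_1 .. o_4
perm = np.array([1, 0, 2, 3])                # shuffle: swap the first two components

def average(v):
    """Shuffle-invariant: every component has the same weight."""
    return float(v.mean())

weights = np.array([0.7, 0.1, 0.1, 0.1])     # hypothetical 1x1-convolution weights

def conv1x1(v):
    """Order-sensitive: each input position has its own weight."""
    return float(weights @ v)

print(average(o), average(o[perm]))          # identical values
print(conv1x1(o), conv1x1(o[perm]))          # different values
```

Because a learned weighting depends on position, the inputs must arrive in a consistent order for the weights to carry a stable meaning. This is the motivation for aligning the frequency components before the superposition.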

To alleviate this problem, we design a spectral-shuffle strategy that first performs shuffling according to Theorem-1 and then aligns the different frequency bands, as shown in Figure 3. Specifically, we first split the original spectrum $F(u, v)$ equally into 16 patches. Then, according to Theorem-1, we classify these patches into 4 groups, where each group contains the pixel-wise matched frequency bins that are superposed together. However, the energy distribution differs from group to group. Considering the shuffle-variance of convolution operators, we reorder the sequence within each group so that the groups are aligned with one another. The alignment is motivated by wavelet theory: the frequencies within a group are ordered as low frequency, high frequency in the horizontal direction, high frequency in the vertical direction, and high frequency in the diagonal direction. The aligned groups are then arranged in order along the channel dimension for pixel-wise matching. Finally, we perform adaptively weighted superposition over the channels with learned weights to obtain the down-sampled result. The main implementation is depicted in Algorithm 1, and the code is available at the repository linked in the abstract.
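The pipeline above (DFT, shuffle, weighted superposition, inverse DFT) can be sketched for a single-channel image as follows. This is an illustrative approximation, not the authors' Algorithm 1. The function name `fouridown_sketch` is hypothetical, and the fixed scalar `weights` stand in for the learned, context-adaptive weights. Instead of the 16-patch layout, the sketch gathers the 2 x 2 aliases that fold onto each output bin directly in NumPy's unshifted spectrum layout.

```python
import numpy as np

def fouridown_sketch(f, weights):
    """Stride-2 down-sampling of a 2-D array as a weighted superposition of aliases.

    weights is ordered [low, horizontal-high, vertical-high, diagonal-high];
    this ordering and the fixed scalar weights are assumptions of the sketch.
    """
    H, W = f.shape
    assert H % 4 == 0 and W % 4 == 0, "sketch assumes sides divisible by 4"
    h, w = H // 2, W // 2

    # 1) 2-D DFT (NumPy layout: zero frequency at index 0, no fftshift).
    F = np.fft.fft2(f)

    # 2) Collect the 2 x 2 aliases that fold onto each output bin: shape (2, 2, h, w).
    aliases = np.stack([
        np.stack([F[i * h:(i + 1) * h, j * w:(j + 1) * w] for j in range(2)])
        for i in range(2)
    ])

    # 3) Shuffle: per bin, put the alias with the lower |frequency| first along each axis.
    rows_swapped = (np.arange(h) >= h // 2)[:, None]
    cols_swapped = (np.arange(w) >= w // 2)[None, :]
    aliases = np.where(rows_swapped, aliases[::-1], aliases)
    aliases = np.where(cols_swapped, aliases[:, ::-1], aliases)

    # 4) Weighted superposition over the four aligned components, then inverse DFT.
    F_down = np.tensordot(np.asarray(weights), aliases.reshape(4, h, w), axes=1)
    return np.fft.ifft2(F_down).real

img = np.random.default_rng(0).standard_normal((8, 8))
strided = fouridown_sketch(img, [0.25, 0.25, 0.25, 0.25])   # matches img[::2, ::2]
lowpass = fouridown_sketch(img, [0.25, 0.0, 0.0, 0.0])      # keeps only the low-frequency alias
```

With uniform weights the sketch reproduces plain striding, and with `[0.25, 0, 0, 0]` it behaves like an ideal low-pass filter followed by striding, which matches the two static templates discussed in the introduction. In the actual method the weights are learned and depend on the feature context rather than being fixed scalars.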

4 Experiments and Discussion

To validate the efficacy of our proposed FouriDown, we execute comprehensive experiments across several computer vision tasks and conduct exhaustive ablation studies to evaluate its resilience.

4.1 Experimental Settings

Image enhancement. For image enhancement, we assess our FouriDown model using the LOL [34] and Huawei [35] benchmarks. The LOL dataset contains 500 image pairs (485 for training, 15 for testing), and the Huawei dataset contains 2480 paired images (2200 for training, 280 for testing). We compare our results with two established baselines, SID [36] and DRBN [37].

Image deblurring. For image deblurring, we utilize DeepDeblur [38] and MPRNet [39] on the DVD dataset [40], which includes 2103 training and 1111 test pairs. We further validate our model’s generalizability using the HIDE dataset [41].

Image denoising. For image denoising, we train on the SIDD dataset [42]. Performance is then assessed on the remaining validation samples from the SIDD dataset and on the DND benchmark dataset [43]. For comparative analysis, we choose MIRNet [44] and Restormer [45] as baselines.

Image dehazing. For image dehazing, we use the RESIDE dataset for evaluation. We validate our proposed operator with two different network designs, MSBDN [46] and GridNet [47].

4.2 Implementation Details

For the competitive baselines above, we perform the comparison over the following configurations by replacing the down-sampling operator (such as strided convolution or strided pooling) with the proposed FouriDown operator. Additionally, we compare with previous anti-aliasing down-sampling methods, including the Gaussian filter [7] and the “ideal” Low-Pass Filter (LPF) [13], which apply static modulation to the spectrum.

- Original: The original down-sampling in the baseline;

- Gaussian: Following [7], equipping the Gaussian filter in all channels before the original down-sampling for anti-aliasing;

- LPF: Following [13], equipping the “ideal” Low-Pass Filter in all channels before the original down-sampling operator for anti-aliasing;

- Ours: Replacing the original down-sampling operator with our proposed FouriDown.

4.3 Comparison and Analysis

Quantitative Comparison. To demonstrate the effectiveness of our proposed FouriDown, we conduct extensive experiments, as shown in Tables 1-4. The best results are in bold. Results above and below the baseline are highlighted in red and blue, respectively. These tables show that, although previous anti-aliasing methods may be useful for some image restoration tasks, their static weights limit their universality across tasks. For instance, while the LPF approach performs well in dehazing, it is not effective in deblurring and low-light enhancement. In contrast, our method proves effective across the majority of image restoration tasks. Specifically, we achieve an improvement of 1.82 dB in low-light enhancement on the LOL dataset and 0.42 dB in dehazing on the RESIDE dataset.

Further, we compare the computing costs with other methods in Table 5. We include results for bicubic, bilinear, pixel-unshuffle, $2 \times 2$ learnable CNN (with stride 2), max-pooling, average-pooling, LPF, Gaussian, and our method. Note that the “Original” down-sampling of each method is marked with an asterisk (‘*’). This allows a clearer contrast and shows the advantages of our method not only against anti-aliasing approaches but also against conventional down-sampling methods.

Qualitative Comparison. Due to space constraints, we only present a qualitative comparison on the low-light enhancement task. As illustrated in Figure 4 and Figure 5, our FouriDown reduces the artifacts present in the original SID results thanks to its more flexible frequency interactions. We then compare the visualizations of the feature maps and their corresponding spectra between FouriDown and other down-sampling methods (see Figure 6 and Figure 7). The model equipped with FouriDown generates much stronger responses to degradation-aware regions, i.e., globally low illumination in the low-light enhancement task. In contrast, models with other down-sampling methods respond weakly to these regions. The results demonstrate the effectiveness of FouriDown in capturing degradation-aware information through adaptive frequency superposition in down-sampling. The Gaussian method has a relatively large response to degradation (second only to FouriDown) and accordingly achieves performance that is also second only to FouriDown. Similarly, the LPF method has the poorest performance, and its feature response in the low-light areas is also the lowest. The other methods perform roughly the same, and their feature responses are also quite similar, indicating a similar capability to capture degraded image areas. Additionally, the spectral comparison in Figure 7 shows that the Gaussian method loses a lot of high-frequency information compared to FouriDown, which makes it harder to recover textures in dark areas. Hence, although the Gaussian method exhibits good responses, FouriDown achieves better performance. More qualitative comparisons can be found in the supplementary material.

4.4 Discussions

Bias Effects by Static Superposing. As shown in Figure 8, we compare different down-sampling methods that superpose frequencies statically and find that they exhibit various bias effects.

Frequency Aliasing Visualization. To delve deeper into the interactions between high and low frequencies in down-sampling, we examine the spectra of images down-sampled by factors of $4\times$, $2\times$, and $1\times$. Following Theorem-1, some regions of the spectra are overlaid on the same frequency band, with smaller scales overlaying larger ones, as shown in Figure 9. Aligning the spectra to the same bandwidth reveals a rectangular contour at the intersections, where high frequencies that violate the Nyquist criterion fold into low frequencies during down-sampling, as indicated by the yellow arrow. This suggests that it is important for down-sampling to modulate frequencies; otherwise, it might degrade the original signal undesirably.

Other Discussions. Because of space constraints, further discussions, including extensions to Theorem-2 and a revisiting of previous anti-aliasing methods within the proposed FouriDown framework, are provided in the supplementary material.

5 Limitations

In this work, we explore spatial down-sampling from a frequency-domain perspective and optimize, in the frequency domain, the static weighting of previous down-sampling with a stride of 2. Our modeling of down-sampling uses uniformly distributed impulse sequences as the sampling function and thus explores the characteristics of this sampling function in the frequency domain. However, for non-uniform down-sampling, where the sampling rate varies according to the content, our method might be limited. We hope to overcome this limitation in future work by exploring the frequency-domain response of non-uniform sampling functions.

6 Conclusion

In our study, we revisit spatial down-sampling techniques and anti-aliasing strategies from a Fourier-domain perspective, recognizing their reliance on static superposing of high and low frequencies. As a result, we propose a novel approach (FouriDown) that learns a context-dependent interplay between high and low frequencies during down-sampling. Moreover, this work is the first exploration of dynamic frequency interaction in down-sampling. FouriDown is designed on the basis of signal sampling theory, so it can conveniently replace most current down-sampling and anti-aliasing techniques. Extensive experiments demonstrate performance improvements across a variety of vision tasks.

Ultimately, we believe that down-sampling will remain a crucial research direction. It allows networks to be designed at a lower resolution, significantly reducing computational overhead and enabling lightweight models.

Broader Impact

This work further exploits the potential of down-sampling in the Fourier domain and offers a new perspective, frequency band shuffling and superposing, for future research on down-sampling. Down-sampling techniques can potentially make future model development more efficient and effective, which benefits various machine learning and AI applications. Nonetheless, the efficacy of our method could be a source of concern if not properly utilized, especially in terms of the safety of real-world applications. To alleviate such concerns, we plan to rigorously investigate the robustness and effectiveness of our approach across a more diverse spectrum of real-world scenarios.

Acknowledgments

This work was supported by the JKW Research Funds under Grant 20-163-14-LZ-001-004-01, and the Anhui Provincial Natural Science Foundation under Grant 2108085UD12. We acknowledge the support of GPU cluster built by MCC Lab of Information Science and Technology Institution, USTC.
