
Fast Online Object Tracking and Segmentation: A Unifying Approach


Abstract

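The paper proposes a single, unifying approach to real-time visual object tracking (VOT) and semi-supervised video object segmentation (VOS).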

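The method, called SiamMask, improves the offline training procedure of fully-convolutional Siamese approaches by augmenting their loss with a binary segmentation task.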

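Once trained, SiamMask relies only on a single bounding-box initialization and operates online, producing class-agnostic object segmentation masks and rotated bounding boxes at 55 frames per second.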

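This strategy establishes a new state of the art among real-time trackers on VOT-2018, while also achieving competitive performance and the best speed for the semi-supervised VOS task on DAVIS-2016 and DAVIS-2017.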

Project page: http://www.robots.ox.ac.uk/~qwang/SiamMask
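SiamMask outputs a rotated bounding box together with each mask. The paper's own box-generation strategy belongs to the "Box generation" part of Section 3.2 and is not reproduced here. As a stand-in illustration only, the sketch below fits a minimum-area rotated rectangle to the foreground pixels of a binary mask by brute-force search over angles. The function name `mask_to_rotated_box`, the 1-degree search step, and measuring width and height between the outermost pixel centres are all assumptions of this sketch.

```python
import numpy as np


def mask_to_rotated_box(mask, angle_step_deg=1.0):
    """Fit a minimum-area rotated rectangle to the foreground of a binary mask.

    Brute-force search over angles in [0, 90) degrees, since a rectangle's
    orientation repeats every 90 degrees. Width and height are measured between
    the outermost foreground pixel centres. Returns (cx, cy, w, h, angle_deg),
    or None for an empty mask.
    """
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        return None
    pts = np.stack([xs, ys], axis=1).astype(np.float64)  # (N, 2) as (x, y)
    best = None
    for angle in np.arange(0.0, 90.0, angle_step_deg):
        t = np.deg2rad(angle)
        # Rows are the rectangle's local axes u = (cos t, sin t), v = (-sin t, cos t).
        rot = np.array([[np.cos(t), np.sin(t)], [-np.sin(t), np.cos(t)]])
        local = pts @ rot.T  # coordinates of every pixel along u and v
        lo, hi = local.min(axis=0), local.max(axis=0)
        w, h = hi - lo
        if best is None or w * h < best[0]:
            # rot is orthonormal, so right-multiplying by it maps back to image axes.
            centre = ((lo + hi) / 2.0) @ rot
            best = (w * h, centre[0], centre[1], w, h, angle)
    _, cx, cy, w, h, angle = best
    return float(cx), float(cy), float(w), float(h), float(angle)
```

For an axis-aligned rectangular mask the search returns angle 0 with the rectangle's own extents. Libraries such as OpenCV provide an exact minimum-area-rectangle routine that would replace the angle sweep in practice.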

1. Introduction

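Tracking is a fundamental task in any video application that requires some degree of reasoning about objects of interest, as it allows object correspondences to be established between frames [34].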

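It is used in a wide range of scenarios, such as automatic surveillance, vehicle navigation, video labelling, human-computer interaction and activity recognition.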

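The goal of VOT is, given the location of an arbitrary target of interest in the first frame of a video, to estimate its position in all subsequent frames as accurately as possible [48]. For many applications it is important that tracking be performed online, while the video is streaming. In other words, the tracker should not use future frames to reason about the current position of the object [26].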
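The online requirement can be made concrete as a causal tracking loop: the tracker is initialized from the box given in the first frame and then emits exactly one estimate per incoming frame, never reading ahead. This is a minimal sketch; `run_online`, its `init`/`step` callbacks and the (x, y, w, h) box format are hypothetical and not part of any benchmark toolkit.

```python
from typing import Callable, Iterable, Iterator, Tuple, TypeVar

Box = Tuple[float, float, float, float]  # (x, y, w, h); axis-aligned for simplicity
Frame = TypeVar("Frame")
State = TypeVar("State")


def run_online(
    frames: Iterable[Frame],
    init_box: Box,
    init: Callable[[Frame, Box], State],
    step: Callable[[State, Frame], Tuple[State, Box]],
) -> Iterator[Box]:
    """Causal tracking loop: each estimate uses only the current and past frames."""
    it = iter(frames)
    sentinel = object()
    first = next(it, sentinel)
    if first is sentinel:
        return  # empty stream: nothing to track
    state = init(first, init_box)  # the only supervision is the box in frame 0
    yield init_box
    for frame in it:  # frames are consumed one at a time, as from a live stream
        state, box = step(state, frame)
        yield box  # emitted before the next frame is read
```

Because `run_online` is a generator, each box is yielded before the next frame is pulled from the stream, which is exactly the "no future frames" constraint.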

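This is the scenario portrayed by VOT benchmarks, which represent the target object with a simple axis-aligned (e.g. [56, 52]) or rotated [26, 27] bounding box.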

Such a simple annotation keeps the cost of data labelling low. More importantly, it allows a user to initialize the target quickly and easily.
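To make the two box representations concrete, the sketch below converts a rotated box, assumed here to be parameterized by centre, width, height and angle, into its four corner points, and computes the tightest axis-aligned box around those corners. The parameterization and the function names are assumptions for illustration; each benchmark defines its own annotation format.

```python
import numpy as np


def rotated_box_corners(cx, cy, w, h, angle_deg):
    """Corners (4 x 2 array of x, y) of a w x h box centred at (cx, cy), rotated by angle_deg.

    The angle is in image coordinates (x right, y down), so a positive angle
    appears clockwise on screen. angle_deg = 0 gives an axis-aligned box.
    """
    t = np.deg2rad(angle_deg)
    c, s = np.cos(t), np.sin(t)
    # Corner offsets from the centre before rotation, in drawing order.
    offsets = np.array(
        [[-w / 2, -h / 2], [w / 2, -h / 2], [w / 2, h / 2], [-w / 2, h / 2]], dtype=float
    )
    rot = np.array([[c, -s], [s, c]])
    return offsets @ rot.T + np.array([cx, cy], dtype=float)


def enclosing_axis_aligned(corners):
    """Tightest axis-aligned box (x0, y0, x1, y1) containing a polygon's corners."""
    xs, ys = corners[:, 0], corners[:, 1]
    return float(xs.min()), float(ys.min()), float(xs.max()), float(ys.max())
```

A rotated box can fit an elongated, tilted object far more tightly than its axis-aligned enclosure; for a 2 x 2 square rotated by 45 degrees, the enclosing axis-aligned box spans about 2.83 pixels per side.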

2. Related work

VOT

Semi-supervised VOS

3. Methodology

3.1. Fully-convolutional Siamese networks

SiamFC

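As the basic building block of the tracking system, an offline-trained fully-convolutional Siamese network compares an exemplar image z against a (slightly larger) search image x to obtain a dense response map.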

z is a w×h crop centred on the target object, and x is a larger crop centred on the last estimated position of the target.
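A minimal sketch of the two crops, assuming an H × W (× C) NumPy image and boxes given as (cx, cy, w, h). Pixels that fall outside the frame are filled with a constant; trackers in this family commonly pad with the mean pixel value, but the default of 0 here, the `search_scale` factor of 2 and both function names are hypothetical. Real trackers also resize the crops to fixed network input sizes, which is omitted.

```python
import numpy as np


def crop_centered(image, cx, cy, w, h, pad_value=0.0):
    """Return an h x w crop of `image` (H x W or H x W x C) centred on (cx, cy).

    Parts of the window that fall outside the image are filled with `pad_value`.
    """
    w, h = int(round(w)), int(round(h))
    img_h, img_w = image.shape[:2]
    x0 = int(round(cx - w / 2.0))  # top-left corner of the window
    y0 = int(round(cy - h / 2.0))
    out = np.full((h, w) + image.shape[2:], pad_value, dtype=image.dtype)
    # Intersect the requested window with the image and copy that part.
    sx0, sy0 = max(x0, 0), max(y0, 0)
    sx1, sy1 = min(x0 + w, img_w), min(y0 + h, img_h)
    if sx0 < sx1 and sy0 < sy1:
        out[sy0 - y0:sy1 - y0, sx0 - x0:sx1 - x0] = image[sy0:sy1, sx0:sx1]
    return out


def exemplar_and_search(first_frame, init_box, frame, last_box, search_scale=2.0):
    """z: crop around the initial target box; x: larger crop around the latest estimate.

    Boxes are (cx, cy, w, h); `search_scale` is a hypothetical enlargement factor.
    """
    z = crop_centered(first_frame, *init_box)
    cx, cy, w, h = last_box
    x = crop_centered(frame, cx, cy, w * search_scale, h * search_scale)
    return z, x
```

The exemplar is cut once from the first frame, while the search crop is recomputed every frame around the most recent estimate, so a target that moves less than the search margin between frames stays inside x.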

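Both inputs are processed by the same CNN fθ, yielding two feature maps that are cross-correlated with each other: gθ(z, x) = fθ(z) ⋆ fθ(x).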
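The comparison between the two feature maps is a dense cross-correlation: the exemplar features are slid over the search features and an inner product (summed over channels) is taken at every offset, producing the response map. The sketch below uses explicit loops for clarity and takes precomputed feature arrays in place of fθ; the function name and the (C, H, W) layout are assumptions.

```python
import numpy as np


def cross_correlate(feat_z, feat_x):
    """Dense 'valid' cross-correlation of exemplar features over search features.

    feat_z: (C, hz, wz) exemplar features, standing in for f_theta(z).
    feat_x: (C, hx, wx) search features, standing in for f_theta(x).
    Returns a (hx - hz + 1, wx - wz + 1) response map; each entry is the inner
    product between feat_z and the equally sized window of feat_x at that offset.
    """
    c, hz, wz = feat_z.shape
    cx, hx, wx = feat_x.shape
    if c != cx:
        raise ValueError("exemplar and search features must have the same channels")
    out = np.empty((hx - hz + 1, wx - wz + 1), dtype=np.result_type(feat_z, feat_x))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(feat_z * feat_x[:, i:i + hz, j:j + wz])
    return out
```

If the exemplar features are planted in an otherwise empty search map, the response peaks exactly at the planted offset. A practical implementation runs the same operation as a batched convolution on the GPU.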

SiamRPN

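SiamRPN [28] substantially improves the performance of SiamFC by relying on a region proposal network (RPN) [46, 14], which allows the target location to be estimated with bounding boxes of variable aspect ratio. In particular, in SiamRPN each response of a candidate window (RoW) encodes a set of k anchor box proposals and the corresponding object/background scores. SiamRPN therefore outputs box predictions in parallel with classification scores. The two output branches are trained with the smooth L1 loss and the cross-entropy loss, respectively [28, Section 3.2].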
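The two training losses can be sketched for the k anchors of a single RoW as follows. The column convention (0 = background, 1 = object), supervising regression on positive anchors only, averaging over anchors and the weight `lam` are assumptions in the spirit of common RPN practice, not details taken from [28].

```python
import numpy as np


def smooth_l1(pred, target, beta=1.0):
    """Element-wise smooth L1: quadratic for |d| < beta, linear beyond."""
    d = np.abs(pred - target)
    return np.where(d < beta, 0.5 * d ** 2 / beta, d - 0.5 * beta)


def softmax_cross_entropy(logits, labels):
    """Per-row cross-entropy for (N, 2) object/background logits and integer labels."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels]


def siamrpn_style_loss(cls_logits, box_pred, labels, box_target, lam=1.0):
    """Loss for the k anchors of one RoW.

    cls_logits: (k, 2), column 0 = background, column 1 = object.
    box_pred, box_target: (k, 4) regression outputs and targets.
    labels: (k,) integers, 1 for positive (object) anchors, 0 for negative.
    """
    cls_loss = softmax_cross_entropy(cls_logits, labels).mean()
    pos = labels == 1
    if pos.any():
        # Box regression is only supervised on positive anchors.
        reg_loss = smooth_l1(box_pred[pos], box_target[pos]).sum(axis=1).mean()
    else:
        reg_loss = 0.0
    return float(cls_loss + lam * reg_loss)
```

The smooth L1 term behaves like L2 for small errors and like L1 for large ones, so badly mislocalized anchors do not dominate the gradient.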

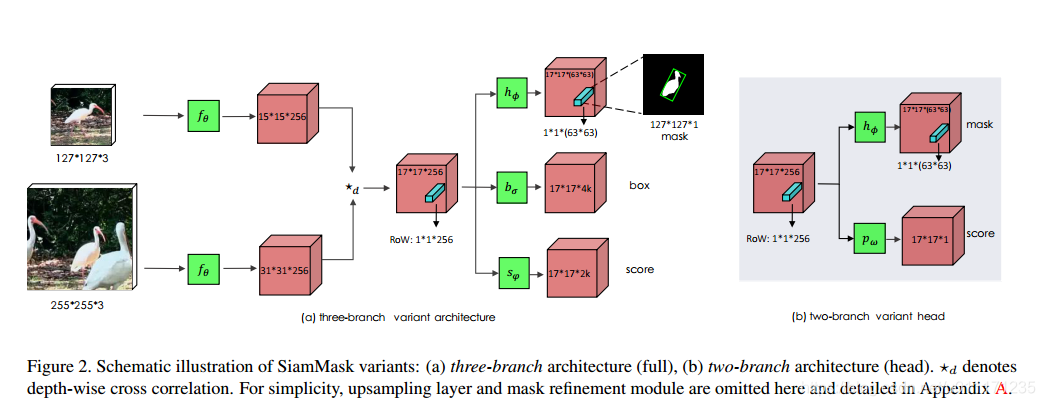

3.2. SiamMask

Loss function

Mask representation

Two variants

Box generation

3.3. Implementation details

Network architecture

Training

Inference

4. Experiments

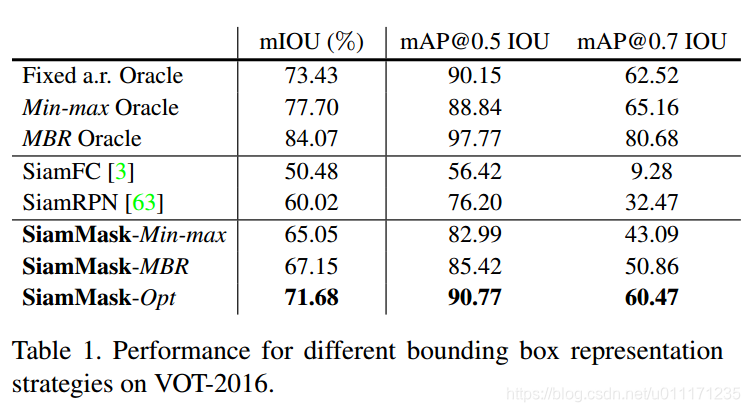

4.1. VOT evaluation

Datasets and settings.

How much does the object representation matter?

Results on VOT-2018 and VOT-2016.

4.2. Semi-supervised VOS evaluation

Datasets and settings.

Results on DAVIS and YouTube-VOS.

4.3. Further analysis

Network architecture

Multi-task training

Timing.

Failure cases.

Conclusion

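We introduced SiamMask, a simple approach that enables fully-convolutional Siamese trackers to produce class-agnostic binary segmentation masks of the target object.

We showed how it can be applied successfully to both VOT and semi-supervised VOS.

It achieves better accuracy than state-of-the-art trackers and, at the same time, the fastest speed among VOS methods.

The two proposed variants of SiamMask are initialized with a simple bounding box, operate online, run in real time and do not require any adaptation to the test sequence.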

Acknowledgements

References

[1] L. Bao, B. Wu, and W. Liu. CNN in MRF: Video object segmentation via inference in a CNN-based higher-order spatiotemporal MRF. In IEEE Conference on Computer Vision and Pattern Recognition, 2018.

[2] L. Bertinetto, J. F. Henriques, J. Valmadre, P. H. S. Torr, and A. Vedaldi. Learning feed-forward one-shot learners. In Advances in Neural Information Processing Systems, 2016.

[3] L. Bertinetto, J. Valmadre, J. F. Henriques, A. Vedaldi, and P. H. Torr. Fully-convolutional siamese networks for object tracking. In European Conference on Computer Vision workshops, 2016.

[4] D. S. Bolme, J. R. Beveridge, B. A. Draper, and Y. M. Lui. Visual object tracking using adaptive correlation filters. In IEEE Conference on Computer Vision and Pattern Recognition, 2010.

[5] S. Caelles, K.-K. Maninis, J. Pont-Tuset, L. Leal-Taixé, D. Cremers, and L. Van Gool. One-shot video object segmentation. In IEEE Conference on Computer Vision and Pattern Recognition, 2017.

[6] L.-C. Chen, G. Papandreou, I. Kokkinos, K. Murphy, and A. L. Yuille. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2018.

[7] Y. Chen, J. Pont-Tuset, A. Montes, and L. Van Gool. Blazingly fast video object segmentation with pixel-wise metric learning. In IEEE Conference on Computer Vision and Pattern Recognition, 2018.

[8] J. Cheng, Y.-H. Tsai, W.-C. Hung, S. Wang, and M.-H. Yang. Fast and accurate online video object segmentation via tracking parts. In IEEE Conference on Computer Vision and Pattern Recognition, 2018.

[9] J. Cheng, Y.-H. Tsai, S. Wang, and M.-H. Yang. SegFlow: Joint learning for video object segmentation and optical flow. In IEEE International Conference on Computer Vision, 2017.

[10] H. Ci, C. Wang, and Y. Wang. Video object segmentation by learning location-sensitive embeddings. In European Conference on Computer Vision, 2018.

[11] D. Comaniciu, V. Ramesh, and P. Meer. Real-time tracking of non-rigid objects using mean shift. In IEEE Conference on Computer Vision and Pattern Recognition, 2000.

[12] M. Danelljan, G. Bhat, F. S. Khan, and M. Felsberg. ECO: Efficient convolution operators for tracking. In IEEE Conference on Computer Vision and Pattern Recognition, 2017.

[13] M. Danelljan, G. Häger, F. S. Khan, and M. Felsberg. Learning spatially regularized correlation filters for visual tracking. In IEEE International Conference on Computer Vision, 2015.

[14] C. Feichtenhofer, A. Pinz, and A. Zisserman. Detect to track and track to detect. In IEEE International Conference on Computer Vision, 2017.

[15] A. He, C. Luo, X. Tian, and W. Zeng. Towards a better match in siamese network based visual object tracker. In European Conference on Computer Vision workshops, 2018.

[16] A. He, C. Luo, X. Tian, and W. Zeng. A twofold siamese network for real-time object tracking. In IEEE Conference on Computer Vision and Pattern Recognition, 2018.

[17] K. He, G. Gkioxari, P. Dollár, and R. Girshick. Mask R-CNN. In IEEE International Conference on Computer Vision, 2017.

[18] K. He, X. Zhang, S. Ren, and J. Sun. Deep residual learning for image recognition. In IEEE Conference on Computer Vision and Pattern Recognition, 2016.

[19] D. Held, S. Thrun, and S. Savarese. Learning to track at 100 fps with deep regression networks. In European Conference on Computer Vision, 2016.

[20] J. F. Henriques, R. Caseiro, P. Martins, and J. Batista. High-speed tracking with kernelized correlation filters. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015.

[21] Y.-T. Hu, J.-B. Huang, and A. G. Schwing. VideoMatch: Matching based video object segmentation. In European Conference on Computer Vision, 2018.

[22] V. Jampani, R. Gadde, and P. V. Gehler. Video propagation networks. In IEEE Conference on Computer Vision and Pattern Recognition, 2017.

[23] A. Khoreva, R. Benenson, E. Ilg, T. Brox, and B. Schiele. Lucid data dreaming for object tracking. In IEEE Conference on Computer Vision and Pattern Recognition workshops, 2017.

[24] H. Kiani Galoogahi, T. Sim, and S. Lucey. Multi-channel correlation filters. In IEEE International Conference on Computer Vision, 2013.

[25] H. Kiani Galoogahi, T. Sim, and S. Lucey. Correlation filters with limited boundaries. In IEEE Conference on Computer Vision and Pattern Recognition, 2015.

[26] M. Kristan, A. Leonardis, J. Matas, M. Felsberg, R. Pflugfelder, L. Čehovin, T. Vojíř, G. Häger, A. Lukežič, G. Fernández, et al. The visual object tracking VOT2016 challenge results. In European Conference on Computer Vision, 2016.

[27] M. Kristan, A. Leonardis, J. Matas, M. Felsberg, R. Pflugfelder, L. C. Zajc, T. Vojir, G. Bhat, A. Lukezic, A. Eldesokey, G. Fernandez, et al. The sixth visual object tracking VOT2018 challenge results. In European Conference on Computer Vision workshops, 2018.

[28] B. Li, J. Yan, W. Wu, Z. Zhu, and X. Hu. High performance visual tracking with siamese region proposal network. In IEEE Conference on Computer Vision and Pattern Recognition, 2018.

[29] F. Li, C. Tian, W. Zuo, L. Zhang, and M.-H. Yang. Learning spatial-temporal regularized correlation filters for visual tracking. In IEEE Conference on Computer Vision and Pattern Recognition, 2018.

[30] X. Li and C. C. Loy. Video object segmentation with joint re-identification and attention-aware mask propagation. In European Conference on Computer Vision, 2018.

[31] T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick. Microsoft COCO: Common objects in context. In European Conference on Computer Vision, 2014.

[32] J. Long, E. Shelhamer, and T. Darrell. Fully convolutional networks for semantic segmentation. In IEEE Conference on Computer Vision and Pattern Recognition, 2015.

[33] A. Lukezic, T. Vojir, L. C. Zajc, J. Matas, and M. Kristan. Discriminative correlation filter with channel and spatial reliability. In IEEE Conference on Computer Vision and Pattern Recognition, 2017.

[34] T. Makovski, G. A. Vazquez, and Y. V. Jiang. Visual learning in multiple-object tracking. PLoS One, 2008.

[35] K.-K. Maninis, S. Caelles, Y. Chen, J. Pont-Tuset, L. Leal-Taixé, D. Cremers, and L. Van Gool. Video object segmentation without temporal information. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017.

[36] N. Märki, F. Perazzi, O. Wang, and A. Sorkine-Hornung. Bilateral space video segmentation. In IEEE Conference on Computer Vision and Pattern Recognition, 2016.

[37] O. Miksik, J.-M. Pérez-Rúa, P. H. Torr, and P. Pérez. ROAM: a rich object appearance model with application to rotoscoping. In IEEE Conference on Computer Vision and Pattern Recognition, 2017.

[38] F. Perazzi. Video Object Segmentation. PhD thesis, ETH Zurich, 2017.

[39] F. Perazzi, A. Khoreva, R. Benenson, B. Schiele, and A. Sorkine-Hornung. Learning video object segmentation from static images. In IEEE Conference on Computer Vision and Pattern Recognition, 2017.

[40] F. Perazzi, J. Pont-Tuset, B. McWilliams, L. Van Gool, M. Gross, and A. Sorkine-Hornung. A benchmark dataset and evaluation methodology for video object segmentation. In IEEE Conference on Computer Vision and Pattern Recognition, 2017.

[41] F. Perazzi, O. Wang, M. Gross, and A. Sorkine-Hornung. Fully connected object proposals for video segmentation. In IEEE International Conference on Computer Vision, 2015.

[42] P. Pérez, C. Hue, J. Vermaak, and M. Gangnet. Color-Based Probabilistic Tracking. In European Conference on Computer Vision, 2002.

[43] P. O. Pinheiro, R. Collobert, and P. Dollár. Learning to segment object candidates. In Advances in Neural Information Processing Systems, 2015.

[44] P. O. Pinheiro, T.-Y. Lin, R. Collobert, and P. Dollár. Learning to refine object segments. In European Conference on Computer Vision, 2016.

[45] J. Pont-Tuset, F. Perazzi, S. Caelles, P. Arbeláez, A. Sorkine-Hornung, and L. Van Gool. The 2017 DAVIS challenge on video object segmentation. arXiv preprint arXiv:1704.00675, 2017.

[46] S. Ren, K. He, R. Girshick, and J. Sun. Faster R-CNN: Towards real-time object detection with region proposal networks. In Advances in Neural Information Processing Systems, 2015.

[47] O. Russakovsky, J. Deng, H. Su, J. Krause, S. Satheesh, S. Ma, Z. Huang, A. Karpathy, A. Khosla, M. Bernstein, et al. ImageNet large scale visual recognition challenge. International Journal of Computer Vision, 2015.

[48] A. W. Smeulders, D. M. Chu, R. Cucchiara, S. Calderara, A. Dehghan, and M. Shah. Visual tracking: An experimental survey. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2014.

[49] R. Tao, E. Gavves, and A. W. Smeulders. Siamese instance search for tracking. In IEEE Conference on Computer Vision and Pattern Recognition, 2016.

[50] Y.-H. Tsai, M.-H. Yang, and M. J. Black. Video segmentation via object flow. In IEEE Conference on Computer Vision and Pattern Recognition, 2016.

[51] J. Valmadre, L. Bertinetto, J. Henriques, A. Vedaldi, and P. H. S. Torr. End-to-end representation learning for correlation filter based tracking. In IEEE Conference on Computer Vision and Pattern Recognition, 2017.

[52] J. Valmadre, L. Bertinetto, J. F. Henriques, R. Tao, A. Vedaldi, A. Smeulders, P. H. S. Torr, and E. Gavves. Long-term tracking in the wild: A benchmark. In European Conference on Computer Vision, 2018.

[53] P. Voigtlaender and B. Leibe. Online adaptation of convolutional neural networks for video object segmentation. In British Machine Vision Conference, 2017.

[54] T. Vojir and J. Matas. Pixel-wise object segmentations for the VOT 2016 dataset. Research Report CTU-CMP-2017–01, Center for Machine Perception, Czech Technical University, Prague, Czech Republic, 2017.

[55] L. Wen, D. Du, Z. Lei, S. Z. Li, and M.-H. Yang. JOTS: Joint online tracking and segmentation. In IEEE Conference on Computer Vision and Pattern Recognition, 2015.

[56] Y. Wu, J. Lim, and M.-H. Yang. Online object tracking: A benchmark. In IEEE Conference on Computer Vision and Pattern Recognition, 2013.

[57] S. Wug Oh, J.-Y. Lee, K. Sunkavalli, and S. Joo Kim. Fast video object segmentation by reference-guided mask propagation. In IEEE Conference on Computer Vision and Pattern Recognition, 2018.

[58] N. Xu, L. Yang, Y. Fan, J. Yang, D. Yue, Y. Liang, B. Price, S. Cohen, and T. Huang. YouTube-VOS: Sequence-to-sequence video object segmentation. In European Conference on Computer Vision, 2018.

[59] L. Yang, Y. Wang, X. Xiong, J. Yang, and A. K. Katsaggelos. Efficient video object segmentation via network modulation. In IEEE Conference on Computer Vision and Pattern Recognition, June 2018.

[60] T. Yang and A. B. Chan. Learning dynamic memory networks for object tracking. In European Conference on Computer Vision, 2018.

[61] D. Yeo, J. Son, B. Han, and J. H. Han. Superpixel-based tracking-by-segmentation using Markov chains. In IEEE Conference on Computer Vision and Pattern Recognition, 2017.

[62] J. S. Yoon, F. Rameau, J. Kim, S. Lee, S. Shin, and I. S. Kweon. Pixel-level matching for video object segmentation using convolutional neural networks. In IEEE International Conference on Computer Vision, 2017.

[63] Z. Zhu, Q. Wang, B. Li, W. Wu, J. Yan, and W. Hu. Distractor-aware siamese networks for visual object tracking. In European Conference on Computer Vision, 2018.

A. Architectural details

Network backbone

Network heads

Mask refinement module

B. Further qualitative results

Different masks at different locations

Benchmark sequences
