This article introduces the principles of stereo camera calibration and stereo ranging, together with an OpenCV implementation.

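The goal of monocular (single-camera) calibration is to obtain the camera's intrinsic and extrinsic parameters. The intrinsics (1/dx, 1/dy, Cx, Cy, f) describe the internal geometry of the camera. The extrinsics are the rotation matrix R and translation vector t that relate the camera frame to the world (calibration target) frame. Among the intrinsics, dx and dy are the physical width and height of a single photosensitive element (pixel) on the sensor. When dx = dy the pixels are square. Cx and Cy describe the possible offset of the sensor's center (the principal point) in the x and y directions: because of manufacturing tolerances and the assembly process, it is hard to make the sensor center coincide exactly with the optical axis when the sensor is mounted in the camera module. f is the focal length. Stereo calibration first obtains the intrinsic and extrinsic parameters of the left and right cameras. It then determines the relative pose of the two cameras, that is, the rotation R and translation T between them. The length of T is the baseline, the distance between the two camera centers. Finally, this pose is used to rectify and align the left and right images.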
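To make these parameters concrete, here is a minimal sketch of the ideal pinhole model they define, ignoring lens distortion. It assumes the common convention that the focal length in pixels is fx = f/dx and fy = f/dy, with (Cx, Cy) the principal point in pixels. It also assumes that the extrinsics map a world point into the camera frame as Xc = R·Xw + t. The struct and function names are illustrative, not OpenCV API.

```cpp
#include <cassert>
#include <cmath>

// Illustrative intrinsic parameters (hypothetical type, not an OpenCV class).
struct Intrinsics {
    double f;   // focal length, e.g. in mm
    double dx;  // physical pixel width, same unit as f
    double dy;  // physical pixel height, same unit as f
    double cx;  // principal point x, in pixels
    double cy;  // principal point y, in pixels
};

struct Pixel { double u, v; };

// Extrinsics: transform a world point into the camera frame, Xc = R * Xw + t.
void toCamera(const double R[3][3], const double t[3], const double Xw[3], double Xc[3]) {
    for (int i = 0; i < 3; ++i)
        Xc[i] = R[i][0] * Xw[0] + R[i][1] * Xw[1] + R[i][2] * Xw[2] + t[i];
}

// Intrinsics: ideal pinhole projection of a camera-frame point (no distortion):
//   u = (f/dx) * Xc/Zc + cx,   v = (f/dy) * Yc/Zc + cy
Pixel project(const Intrinsics& K, double Xc, double Yc, double Zc) {
    const double fx = K.f / K.dx;  // focal length in pixels along x
    const double fy = K.f / K.dy;  // focal length in pixels along y
    return { fx * Xc / Zc + K.cx, fy * Yc / Zc + K.cy };
}
```

For example, with a hypothetical 4 mm lens and 5 µm square pixels, fx = fy = 800 px. A point 2 m in front of the camera and 0.1 m to the right then lands 40 px to the right of the principal point.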

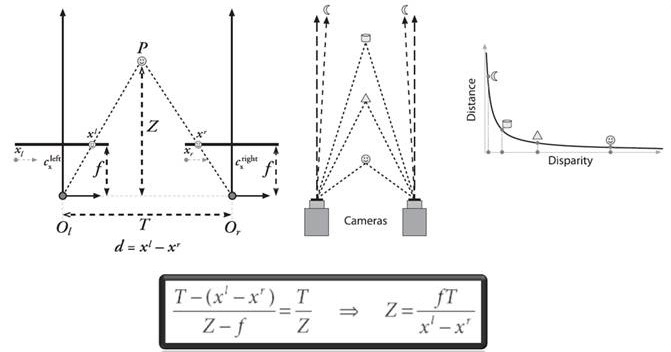

First, the basic principle of stereo ranging:

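Suppose a point p moves along the direction perpendicular to the line connecting the two camera centers, so that its distance from the cameras changes. The positions of its images in the left and right cameras then change too, so the disparity $d = x_l - x_r$ changes. Here $x_l$ and $x_r$ are the horizontal coordinates of p's projections, measured from the centers of the left and right images. The distance Z between p and the cameras is inversely proportional to the disparity: $Z = fT/d$, where f is the focal length and T is the center distance (baseline) between the two cameras. So once T has been obtained, the distance from p to the cameras can be estimated from the disparity. The baseline T is one of the parameters that stereo calibration has to determine.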
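The inverse relation between depth and disparity follows from similar triangles. A short derivation, assuming two identical cameras with parallel optical axes (an ideal rectified pair). The cameras have focal length f and baseline T, and the horizontal image coordinates $x_l$ and $x_r$ are measured from each image's principal point. The triangle formed by p and its two image points is similar to the triangle formed by p and the two camera centers:

$$
\begin{aligned}
d &= x_l - x_r \\
\frac{T - (x_l - x_r)}{Z - f} &= \frac{T}{Z} \\
Z\,(T - d) &= T\,(Z - f) \;\Longrightarrow\; Z\,d = f\,T \\
Z &= \frac{f\,T}{d}
\end{aligned}
$$

With hypothetical values f = 800 px, T = 60 mm and d = 16 px, this gives Z = 800 × 60 / 16 = 3000 mm. Halving the disparity doubles the depth.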

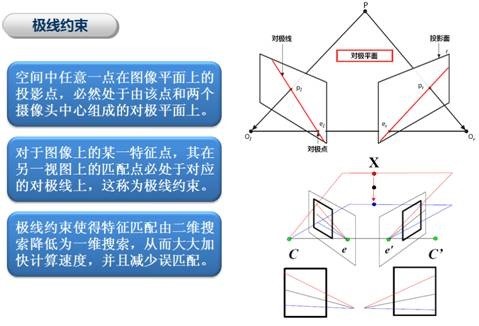

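All of this presupposes that we can locate the same point p in both camera images, i.e., that we can match points between the left and right images. This is where stereo rectification comes in. Searching the whole two-dimensional image for the point that matches a feature in the other image is very time-consuming. To reduce the cost of the search, we can use the epipolar constraint, which reduces correspondence matching from a 2D search space to a 1D one: the match of a point must lie on its epipolar line in the other image.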
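A minimal sketch of the epipolar constraint in plain C++ (not the OpenCV API). Assume a fundamental matrix F that maps the left image to the right, so that x_r^T F x_l = 0. The match of a left-image pixel x_l must then lie on the right-image line l = F x_l. The F used in the test is an assumption for illustration: the fundamental matrix of an ideal rectified pair (identical intrinsics, pure horizontal translation), up to scale.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat3 = std::array<std::array<double, 3>, 3>;
using Vec3 = std::array<double, 3>;

// Epipolar line in the right image for a left-image pixel (u, v):
// l = F * (u, v, 1)^T, representing the line a*x + b*y + c = 0.
Vec3 epipolarLine(const Mat3& F, double u, double v) {
    const Vec3 p = {u, v, 1.0};
    Vec3 l{};
    for (int i = 0; i < 3; ++i)
        l[i] = F[i][0] * p[0] + F[i][1] * p[1] + F[i][2] * p[2];
    return l;
}

// Perpendicular distance from pixel (u, v) to the line a*x + b*y + c = 0.
double distanceToLine(const Vec3& l, double u, double v) {
    return std::fabs(l[0] * u + l[1] * v + l[2]) / std::hypot(l[0], l[1]);
}
```

For the rectified F the line for (100, 50) comes out as 0·x − y + 50 = 0, the horizontal row y = 50. OpenCV's `computeCorrespondEpilines`, used in the calibration quality check of the program below, returns lines already normalized so that a² + b² = 1. That is why the sample can use |a·x + b·y + c| directly as a distance.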

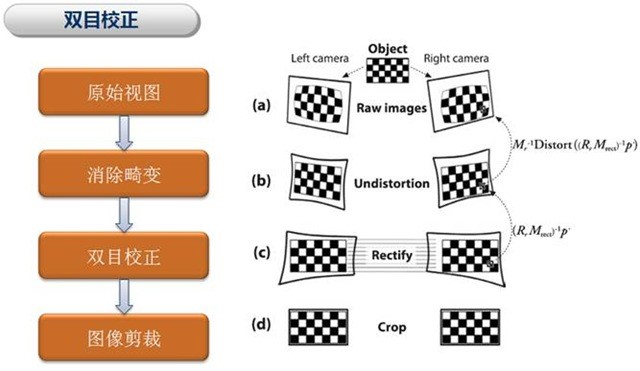

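Stereo rectification then aligns the two undistorted images strictly in the horizontal direction, so that their epipolar lines lie on the same horizontal lines. Any point in one image and its match in the other image then necessarily have the same row index, and the match can be found with a 1D search along that row.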
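Once the pair is rectified, matching reduces to a scan along one row. The sketch below is a deliberately naive sum-of-absolute-differences (SAD) block matcher for a single pixel. It assumes 8-bit grayscale images of equal size stored row-major in a `std::vector`, and the `Gray` type and `matchAlongRow` function are hypothetical. It only illustrates the 1D search. It lacks the pre-filtering, texture and uniqueness checks of the OpenCV `StereoBM`/`StereoSGBM` matchers used later.

```cpp
#include <cassert>
#include <climits>
#include <cstdlib>
#include <vector>

// Grayscale image stored row-major: pixel (x, y) is data[y * width + x].
struct Gray {
    int width, height;
    std::vector<unsigned char> data;
    int at(int x, int y) const { return data[y * width + x]; }
};

// For the left-image pixel (x, y), search only the SAME row y of the right
// image for the disparity d in [0, maxDisp] that minimises the SAD over a
// (2r+1) x (2r+1) window. Returns -1 if no candidate window fits.
int matchAlongRow(const Gray& left, const Gray& right,
                  int x, int y, int maxDisp, int r) {
    int bestD = -1;
    long bestCost = LONG_MAX;
    for (int d = 0; d <= maxDisp; ++d) {
        const int xr = x - d;  // the match lies further left in the right image
        if (xr - r < 0 || x + r >= left.width || y - r < 0 || y + r >= left.height)
            continue;          // window would leave the image
        long cost = 0;
        for (int dy = -r; dy <= r; ++dy)
            for (int dx = -r; dx <= r; ++dx)
                cost += std::abs(left.at(x + dx, y + dy) - right.at(xr + dx, y + dy));
        if (cost < bestCost) { bestCost = cost; bestD = d; }
    }
    return bestD;
}
```

The cost of one match is proportional to the number of candidate disparities, not the number of pixels in the image. This is the saving that rectification buys.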

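The OpenCV stereo calibration and rectification code below is modified from the stereo_calib.cpp sample that ships with OpenCV, located in "XX\opencv\sources\samples\cpp\". To use it, copy the 26 images in that directory and stereo_calib.xml into the current project directory. Then, under the project's Debugging → Command Arguments, set the arguments to: -w 9 -h 6 stereo_calib.xml (-w and -h are the numbers of inner chessboard corners per row and per column).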

```cpp
#include "opencv2/calib3d/calib3d.hpp"
#include "opencv2/highgui/highgui.hpp"
#include "opencv2/imgproc/imgproc.hpp"

#include <vector>
#include <string>
#include <algorithm>
#include <iostream>
#include <iterator>
#include <stdio.h>
#include <stdlib.h>
#include <ctype.h>

using namespace cv;
using namespace std;

static void StereoCalib(const vector<string>& imagelist, Size boardSize,
                        bool useCalibrated = true, bool showRectified = true)
{
    if( imagelist.size() % 2 != 0 )
    {
        cout << "Error: the image list contains odd (non-even) number of elements\n";
        return;
    }

    bool displayCorners = true;
    const int maxScale = 2;
    const float squareSize = 1.f;  // Set this to your actual square size

    // ARRAY AND VECTOR STORAGE:
    vector<vector<Point2f> > imagePoints[2];
    vector<vector<Point3f> > objectPoints;
    Size imageSize;

    int i, j, k, nimages = (int)imagelist.size()/2;

    imagePoints[0].resize(nimages);
    imagePoints[1].resize(nimages);
    vector<string> goodImageList;

    for( i = j = 0; i < nimages; i++ )
    {
        for( k = 0; k < 2; k++ )
        {
            const string& filename = imagelist[i*2+k];
            Mat img = imread(filename, 0);
            if( img.empty() )
                break;
            if( imageSize == Size() )
                imageSize = img.size();
            else if( img.size() != imageSize )
            {
                cout << "The image " << filename << " has the size different from the first image size. Skipping the pair\n";
                break;
            }
            bool found = false;
            vector<Point2f>& corners = imagePoints[k][j];
            // Try the original resolution first, then an upscaled copy
            for( int scale = 1; scale <= maxScale; scale++ )
            {
                Mat timg;
                if( scale == 1 )
                    timg = img;
                else
                    resize(img, timg, Size(), scale, scale);
                found = findChessboardCorners(timg, boardSize, corners,
                    CV_CALIB_CB_ADAPTIVE_THRESH | CV_CALIB_CB_NORMALIZE_IMAGE);
                if( found )
                {
                    if( scale > 1 )
                    {
                        Mat cornersMat(corners);
                        cornersMat *= 1./scale;
                    }
                    break;
                }
            }
            if( displayCorners )
            {
                cout << filename << endl;
                Mat cimg, cimg1;
                cvtColor(img, cimg, COLOR_GRAY2BGR);
                drawChessboardCorners(cimg, boardSize, corners, found);
                double sf = 640./MAX(img.rows, img.cols);
                resize(cimg, cimg1, Size(), sf, sf);
                imshow("corners", cimg1);
                char c = (char)waitKey(500);
                if( c == 27 || c == 'q' || c == 'Q' ) // Allow ESC to quit
                    exit(-1);
            }
            else
                putchar('.');
            if( !found )
                break;
            cornerSubPix(img, corners, Size(11,11), Size(-1,-1),
                         TermCriteria(CV_TERMCRIT_ITER+CV_TERMCRIT_EPS, 30, 0.01));
        }
        if( k == 2 )
        {
            goodImageList.push_back(imagelist[i*2]);
            goodImageList.push_back(imagelist[i*2+1]);
            j++;
        }
    }
    cout << j << " pairs have been successfully detected.\n";
    nimages = j;
    if( nimages < 2 )
    {
        cout << "Error: too little pairs to run the calibration\n";
        return;
    }

    imagePoints[0].resize(nimages);
    imagePoints[1].resize(nimages);
    objectPoints.resize(nimages);

    for( i = 0; i < nimages; i++ )
    {
        for( j = 0; j < boardSize.height; j++ )
            for( k = 0; k < boardSize.width; k++ )
                objectPoints[i].push_back(Point3f(k*squareSize, j*squareSize, 0));
    }

    cout << "Running stereo calibration ...\n";

    Mat cameraMatrix[2], distCoeffs[2];
    cameraMatrix[0] = Mat::eye(3, 3, CV_64F);
    cameraMatrix[1] = Mat::eye(3, 3, CV_64F);
    Mat R, T, E, F;

    double rms = stereoCalibrate(objectPoints, imagePoints[0], imagePoints[1],
                    cameraMatrix[0], distCoeffs[0],
                    cameraMatrix[1], distCoeffs[1],
                    imageSize, R, T, E, F,
                    TermCriteria(CV_TERMCRIT_ITER+CV_TERMCRIT_EPS, 100, 1e-5),
                    CV_CALIB_FIX_ASPECT_RATIO +
                    CV_CALIB_ZERO_TANGENT_DIST +
                    CV_CALIB_SAME_FOCAL_LENGTH +
                    CV_CALIB_RATIONAL_MODEL +
                    CV_CALIB_FIX_K3 + CV_CALIB_FIX_K4 + CV_CALIB_FIX_K5);
    cout << "done with RMS error=" << rms << endl;

    // CALIBRATION QUALITY CHECK
    // because the output fundamental matrix implicitly
    // includes all the output information,
    // we can check the quality of calibration using the
    // epipolar geometry constraint: m2^t*F*m1=0
    double err = 0;
    int npoints = 0;
    vector<Vec3f> lines[2];
    for( i = 0; i < nimages; i++ )
    {
        int npt = (int)imagePoints[0][i].size();
        Mat imgpt[2];
        for( k = 0; k < 2; k++ )
        {
            imgpt[k] = Mat(imagePoints[k][i]);
            undistortPoints(imgpt[k], imgpt[k], cameraMatrix[k], distCoeffs[k], Mat(), cameraMatrix[k]);
            computeCorrespondEpilines(imgpt[k], k+1, F, lines[k]);
        }
        for( j = 0; j < npt; j++ )
        {
            double errij = fabs(imagePoints[0][i][j].x*lines[1][j][0] +
                                imagePoints[0][i][j].y*lines[1][j][1] + lines[1][j][2]) +
                           fabs(imagePoints[1][i][j].x*lines[0][j][0] +
                                imagePoints[1][i][j].y*lines[0][j][1] + lines[0][j][2]);
            err += errij;
        }
        npoints += npt;
    }
    // Mean point-to-epipolar-line distance, in pixels
    cout << "average epipolar err = " << err/npoints << endl;

    // save intrinsic parameters
    FileStorage fs("intrinsics.yml", CV_STORAGE_WRITE);
    if( fs.isOpened() )
    {
        fs << "M1" << cameraMatrix[0] << "D1" << distCoeffs[0] <<
              "M2" << cameraMatrix[1] << "D2" << distCoeffs[1];
        fs.release();
    }
    else
        cout << "Error: can not save the intrinsic parameters\n";

    Mat R1, R2, P1, P2, Q;
    Rect validRoi[2];

    stereoRectify(cameraMatrix[0], distCoeffs[0],
                  cameraMatrix[1], distCoeffs[1],
                  imageSize, R, T, R1, R2, P1, P2, Q,
                  CALIB_ZERO_DISPARITY, 1, imageSize, &validRoi[0], &validRoi[1]);

    fs.open("extrinsics.yml", CV_STORAGE_WRITE);
    if( fs.isOpened() )
    {
        fs << "R" << R << "T" << T << "R1" << R1 << "R2" << R2 << "P1" << P1 << "P2" << P2 << "Q" << Q;
        fs.release();
    }
    else
        cout << "Error: can not save the extrinsic parameters\n";

    // OpenCV can handle left-right
    // or up-down camera arrangements
    bool isVerticalStereo = fabs(P2.at<double>(1, 3)) > fabs(P2.at<double>(0, 3));

    // COMPUTE AND DISPLAY RECTIFICATION
    if( !showRectified )
        return;

    Mat rmap[2][2];
    // IF BY CALIBRATED (BOUGUET'S METHOD)
    if( useCalibrated )
    {
        // we already computed everything
    }
    // OR ELSE HARTLEY'S METHOD
    else
    // use intrinsic parameters of each camera, but
    // compute the rectification transformation directly
    // from the fundamental matrix
    {
        vector<Point2f> allimgpt[2];
        for( k = 0; k < 2; k++ )
        {
            for( i = 0; i < nimages; i++ )
                std::copy(imagePoints[k][i].begin(), imagePoints[k][i].end(), back_inserter(allimgpt[k]));
        }
        F = findFundamentalMat(Mat(allimgpt[0]), Mat(allimgpt[1]), FM_8POINT, 0, 0);
        Mat H1, H2;
        stereoRectifyUncalibrated(Mat(allimgpt[0]), Mat(allimgpt[1]), F, imageSize, H1, H2, 3);

        R1 = cameraMatrix[0].inv()*H1*cameraMatrix[0];
        R2 = cameraMatrix[1].inv()*H2*cameraMatrix[1];
        P1 = cameraMatrix[0];
        P2 = cameraMatrix[1];
    }

    // Precompute maps for cv::remap()
    initUndistortRectifyMap(cameraMatrix[0], distCoeffs[0], R1, P1, imageSize, CV_16SC2, rmap[0][0], rmap[0][1]);
    initUndistortRectifyMap(cameraMatrix[1], distCoeffs[1], R2, P2, imageSize, CV_16SC2, rmap[1][0], rmap[1][1]);

    Mat canvas;
    double sf;
    int w, h;
    if( !isVerticalStereo )
    {
        sf = 600./MAX(imageSize.width, imageSize.height);
        w = cvRound(imageSize.width*sf);
        h = cvRound(imageSize.height*sf);
        canvas.create(h, w*2, CV_8UC3);
    }
    else
    {
        sf = 300./MAX(imageSize.width, imageSize.height);
        w = cvRound(imageSize.width*sf);
        h = cvRound(imageSize.height*sf);
        canvas.create(h*2, w, CV_8UC3);
    }

    for( i = 0; i < nimages; i++ )
    {
        for( k = 0; k < 2; k++ )
        {
            Mat img = imread(goodImageList[i*2+k], 0), rimg, cimg;
            remap(img, rimg, rmap[k][0], rmap[k][1], CV_INTER_LINEAR);
            imshow("single camera rectification", rimg);
            waitKey();
            cvtColor(rimg, cimg, COLOR_GRAY2BGR);
            Mat canvasPart = !isVerticalStereo ? canvas(Rect(w*k, 0, w, h)) : canvas(Rect(0, h*k, w, h));
            resize(cimg, canvasPart, canvasPart.size(), 0, 0, CV_INTER_AREA);
            if( useCalibrated )
            {
                Rect vroi(cvRound(validRoi[k].x*sf), cvRound(validRoi[k].y*sf),
                          cvRound(validRoi[k].width*sf), cvRound(validRoi[k].height*sf));
                rectangle(canvasPart, vroi, Scalar(0,0,255), 3, 8);
            }
        }

        // Draw guide lines so the row (or column) alignment can be checked visually
        if( !isVerticalStereo )
            for( j = 0; j < canvas.rows; j += 16 )
                line(canvas, Point(0, j), Point(canvas.cols, j), Scalar(0, 255, 0), 1, 8);
        else
            for( j = 0; j < canvas.cols; j += 16 )
                line(canvas, Point(j, 0), Point(j, canvas.rows), Scalar(0, 255, 0), 1, 8);
        imshow("stereo rectification", canvas);
        char c = (char)waitKey();
        if( c == 27 || c == 'q' || c == 'Q' )
            break;
    }
}

// Read the image file names from the first top-level sequence of the XML/YAML list
static bool readStringList( const string& filename, vector<string>& l )
{
    l.resize(0);
    FileStorage fs(filename, FileStorage::READ);
    if( !fs.isOpened() )
        return false;
    FileNode n = fs.getFirstTopLevelNode();
    if( n.type() != FileNode::SEQ )
        return false;
    FileNodeIterator it = n.begin(), it_end = n.end();
    for( ; it != it_end; ++it )
        l.push_back((string)*it);
    return true;
}

int main(int argc, char** argv)
{
    Size boardSize;
    string imagelistfn;
    bool showRectified = true;

    for( int i = 1; i < argc; i++ )
    {
        if( string(argv[i]) == "-w" )
        {
            if( ++i >= argc || sscanf(argv[i], "%d", &boardSize.width) != 1 || boardSize.width <= 0 )
            {
                cout << "invalid board width" << endl;
                return -1;
            }
        }
        else if( string(argv[i]) == "-h" )
        {
            if( ++i >= argc || sscanf(argv[i], "%d", &boardSize.height) != 1 || boardSize.height <= 0 )
            {
                cout << "invalid board height" << endl;
                return -1;
            }
        }
        else if( string(argv[i]) == "-nr" )
            showRectified = false;
        else if( string(argv[i]) == "--help" )
            return -1;
        else if( argv[i][0] == '-' )
        {
            cout << "invalid option " << argv[i] << endl;
            return 0;
        }
        else
            imagelistfn = argv[i];
    }

    if( imagelistfn == "" )
    {
        imagelistfn = "stereo_calib.xml";
        boardSize = Size(9, 6);
    }
    else if( boardSize.width <= 0 || boardSize.height <= 0 )
    {
        cout << "if you specified XML file with chessboards, you should also specify the board width and height (-w and -h options)" << endl;
        return 0;
    }

    vector<string> imagelist;
    bool ok = readStringList(imagelistfn, imagelist);
    if( !ok || imagelist.empty() )
    {
        cout << "can not open " << imagelistfn << " or the string list is empty" << endl;
        return -1;
    }

    StereoCalib(imagelist, boardSize, true, showRectified);
    return 0;
}
```
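After calibration, the baseline discussed earlier can be read from extrinsics.yml. A small sketch, assuming the matrices have already been loaded into plain arrays; the function names are illustrative. The baseline is the length of T. For a horizontal rig, the rectified projection matrix P2 written by `stereoRectify` has the form [f 0 cx' Tx·f; 0 f cy 0; 0 0 1 0], so |P2(0,3)/P2(0,0)| should give the same length, since rectification only rotates the cameras. Because the program sets `squareSize = 1.f`, this baseline comes out in chessboard-square units. Set `squareSize` to the real square size to get millimetres or metres.

```cpp
#include <cassert>
#include <cmath>

// Baseline as the length of the translation vector T from stereoCalibrate.
double baselineFromT(const double T[3]) {
    return std::sqrt(T[0] * T[0] + T[1] * T[1] + T[2] * T[2]);
}

// For a horizontal rig rectified by stereoRectify:
//   P2 = [f 0 cx' Tx*f; 0 f cy 0; 0 0 1 0]  =>  |Tx| = |P2(0,3) / P2(0,0)|
double baselineFromP2(const double P2[3][4]) {
    return std::fabs(P2[0][3] / P2[0][0]);
}
```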

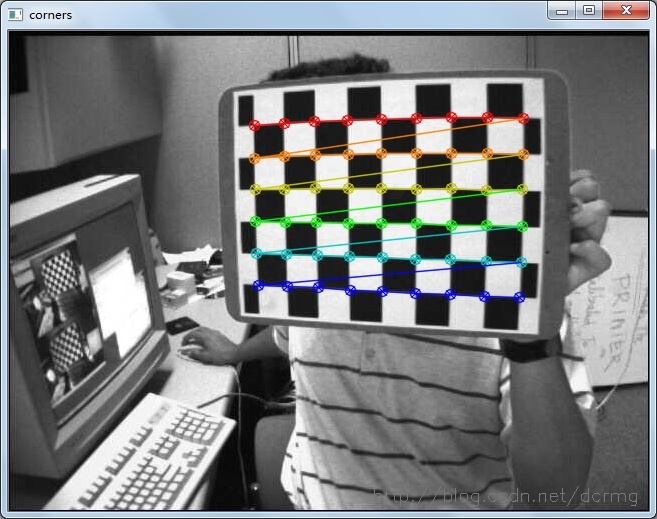

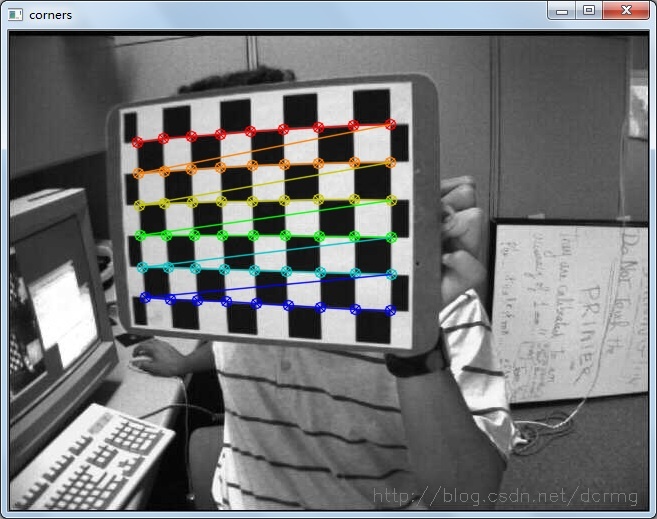

Corners found in one of the right camera's calibration images:

Corners found in one of the left camera's calibration images:

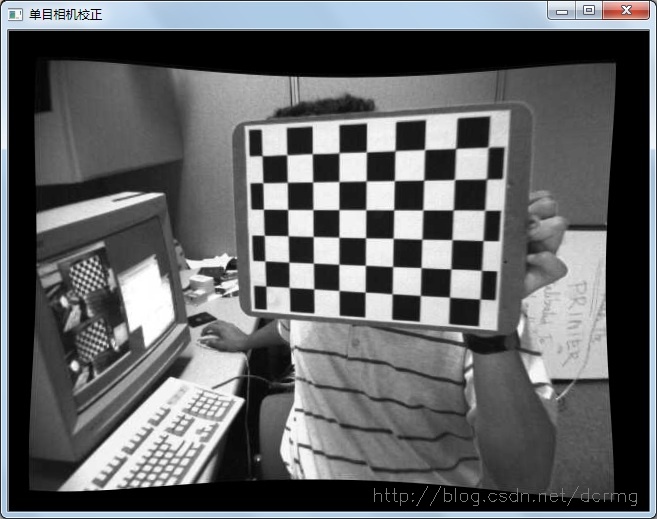

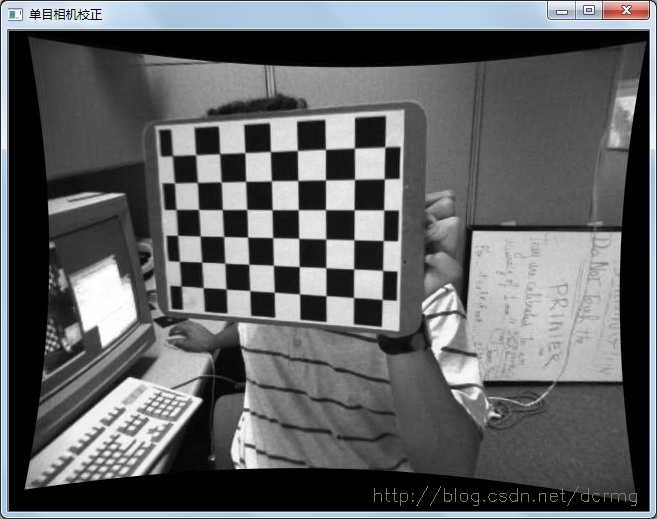

Right camera, single-camera rectification:

Left camera, single-camera rectification:

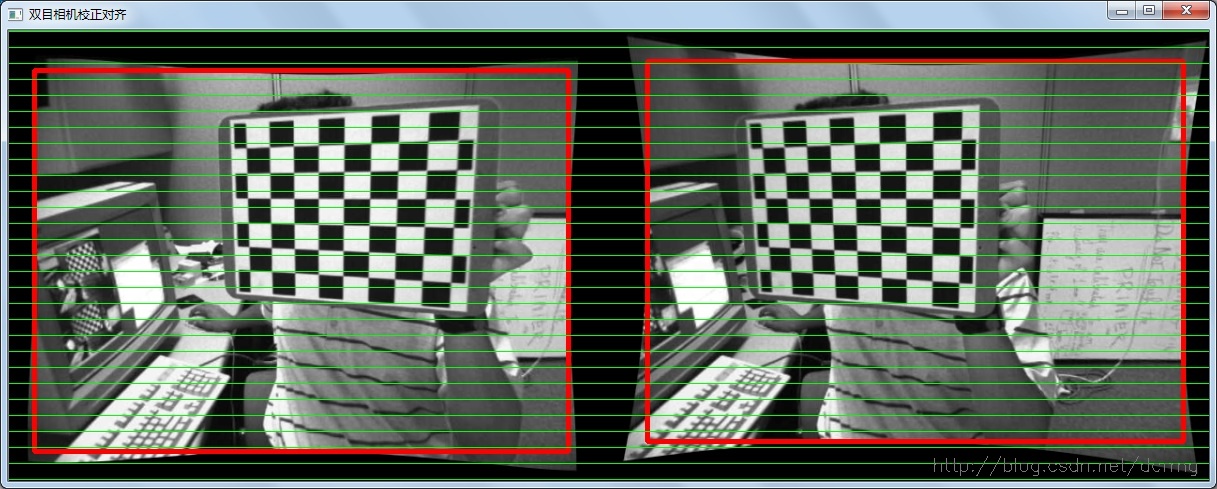

Stereo rectification of the left and right cameras:

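After stereo calibration and rectification, corresponding points in the left and right images are essentially aligned on the same rows.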
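To put a number on "essentially aligned", one can compare the row coordinates of corresponding points after rectification. A minimal sketch, assuming you already have matched point pairs in the rectified left and right images, for instance chessboard corners detected again on the remapped images. The `Pt` struct and `meanVerticalError` function are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt { double x, y; };

// Mean absolute row difference |y_left - y_right| over matched pairs.
// Ideally close to zero after a good rectification.
// Returns -1 for empty or mismatched input.
double meanVerticalError(const std::vector<Pt>& left, const std::vector<Pt>& right) {
    if (left.empty() || left.size() != right.size()) return -1.0;
    double sum = 0.0;
    for (std::size_t i = 0; i < left.size(); ++i)
        sum += std::fabs(left[i].y - right[i].y);
    return sum / static_cast<double>(left.size());
}
```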

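Next, using the calibration results of this program, run stereo_match.cpp (from the same samples directory) to compute the disparity between the left and right images. As before, set the arguments under Debugging → Command Arguments to: left01.jpg right01.jpg --algorithm=bm -i intrinsics.yml -e extrinsics.yml. Here intrinsics.yml and extrinsics.yml are the files written by the calibration program above. The code targets the OpenCV 2.4 API (for example `StereoBM::state` and `StereoVar` from the contrib module), which changed in OpenCV 3.x: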

```cpp
#include "opencv2/calib3d/calib3d.hpp"
#include "opencv2/imgproc/imgproc.hpp"
#include "opencv2/highgui/highgui.hpp"
#include "opencv2/contrib/contrib.hpp"   // StereoVar (OpenCV 2.4 contrib module)

#include <stdio.h>
#include <string.h>
#include <float.h>
#include <math.h>

using namespace cv;

// Write the 3D points to a text file, skipping points whose Z was set to the
// "missing value" (10000) by reprojectImageTo3D(..., handleMissingValues=true)
static void saveXYZ(const char* filename, const Mat& mat)
{
    const double max_z = 1.0e4;
    FILE* fp = fopen(filename, "wt");
    if( !fp )
    {
        printf("Failed to open file %s\n", filename);
        return;
    }
    for( int y = 0; y < mat.rows; y++ )
    {
        for( int x = 0; x < mat.cols; x++ )
        {
            Vec3f point = mat.at<Vec3f>(y, x);
            if( fabs(point[2] - max_z) < FLT_EPSILON || fabs(point[2]) > max_z )
                continue;
            fprintf(fp, "%f %f %f\n", point[0], point[1], point[2]);
        }
    }
    fclose(fp);
}

int main(int argc, char** argv)
{
    const char* algorithm_opt = "--algorithm=";
    const char* maxdisp_opt = "--max-disparity=";
    const char* blocksize_opt = "--blocksize=";
    const char* nodisplay_opt = "--no-display";
    const char* scale_opt = "--scale=";

    if( argc < 3 )
    {
        printf("Usage: stereo_match <left_image> <right_image> [--algorithm=bm|sgbm|hh|var] "
               "[--blocksize=<block_size>] [--max-disparity=<max_disparity>] [--scale=<scale_factor>] "
               "[-i <intrinsic_filename>] [-e <extrinsic_filename>] [--no-display] "
               "[-o <disparity_image>] [-p <point_cloud_file>]\n");
        return 0;
    }

    const char* img1_filename = 0;
    const char* img2_filename = 0;
    const char* intrinsic_filename = 0;
    const char* extrinsic_filename = 0;
    const char* disparity_filename = 0;
    const char* point_cloud_filename = 0;

    enum { STEREO_BM=0, STEREO_SGBM=1, STEREO_HH=2, STEREO_VAR=3 };
    int alg = STEREO_SGBM;
    int SADWindowSize = 0, numberOfDisparities = 0;
    bool no_display = false;
    float scale = 1.f;

    StereoBM bm;
    StereoSGBM sgbm;
    StereoVar var;

    for( int i = 1; i < argc; i++ )
    {
        if( argv[i][0] != '-' )
        {
            if( !img1_filename )
                img1_filename = argv[i];
            else
                img2_filename = argv[i];
        }
        else if( strncmp(argv[i], algorithm_opt, strlen(algorithm_opt)) == 0 )
        {
            char* _alg = argv[i] + strlen(algorithm_opt);
            alg = strcmp(_alg, "bm") == 0 ? STEREO_BM :
                  strcmp(_alg, "sgbm") == 0 ? STEREO_SGBM :
                  strcmp(_alg, "hh") == 0 ? STEREO_HH :
                  strcmp(_alg, "var") == 0 ? STEREO_VAR : -1;
            if( alg < 0 )
            {
                printf("Command-line parameter error: Unknown stereo algorithm\n\n");
                return -1;
            }
        }
        else if( strncmp(argv[i], maxdisp_opt, strlen(maxdisp_opt)) == 0 )
        {
            if( sscanf( argv[i] + strlen(maxdisp_opt), "%d", &numberOfDisparities ) != 1 ||
                numberOfDisparities < 1 || numberOfDisparities % 16 != 0 )
            {
                printf("Command-line parameter error: The max disparity (--max-disparity=<...>) must be a positive integer divisible by 16\n");
                return -1;
            }
        }
        else if( strncmp(argv[i], blocksize_opt, strlen(blocksize_opt)) == 0 )
        {
            if( sscanf( argv[i] + strlen(blocksize_opt), "%d", &SADWindowSize ) != 1 ||
                SADWindowSize < 1 || SADWindowSize % 2 != 1 )
            {
                printf("Command-line parameter error: The block size (--blocksize=<...>) must be a positive odd number\n");
                return -1;
            }
        }
        else if( strncmp(argv[i], scale_opt, strlen(scale_opt)) == 0 )
        {
            if( sscanf( argv[i] + strlen(scale_opt), "%f", &scale ) != 1 || scale <= 0 )
            {
                printf("Command-line parameter error: The scale factor (--scale=<...>) must be a positive floating-point number\n");
                return -1;
            }
        }
        else if( strcmp(argv[i], nodisplay_opt) == 0 )
            no_display = true;
        else if( strcmp(argv[i], "-i" ) == 0 )
            intrinsic_filename = argv[++i];
        else if( strcmp(argv[i], "-e" ) == 0 )
            extrinsic_filename = argv[++i];
        else if( strcmp(argv[i], "-o" ) == 0 )
            disparity_filename = argv[++i];
        else if( strcmp(argv[i], "-p" ) == 0 )
            point_cloud_filename = argv[++i];
        else
        {
            printf("Command-line parameter error: unknown option %s\n", argv[i]);
            return -1;
        }
    }

    if( !img1_filename || !img2_filename )
    {
        printf("Command-line parameter error: both left and right images must be specified\n");
        return -1;
    }

    if( (intrinsic_filename != 0) ^ (extrinsic_filename != 0) )
    {
        printf("Command-line parameter error: either both intrinsic and extrinsic parameters must be specified, or none of them (when the stereo pair is already rectified)\n");
        return -1;
    }

    if( extrinsic_filename == 0 && point_cloud_filename )
    {
        printf("Command-line parameter error: extrinsic and intrinsic parameters must be specified to compute the point cloud\n");
        return -1;
    }

    int color_mode = alg == STEREO_BM ? 0 : -1;
    Mat img1 = imread(img1_filename, color_mode);
    Mat img2 = imread(img2_filename, color_mode);

    if( img1.empty() )
    {
        printf("Command-line parameter error: could not load the first input image file\n");
        return -1;
    }
    if( img2.empty() )
    {
        printf("Command-line parameter error: could not load the second input image file\n");
        return -1;
    }

    if( scale != 1.f )
    {
        Mat temp1, temp2;
        int method = scale < 1 ? INTER_AREA : INTER_CUBIC;
        resize(img1, temp1, Size(), scale, scale, method);
        img1 = temp1;
        resize(img2, temp2, Size(), scale, scale, method);
        img2 = temp2;
    }

    Size img_size = img1.size();

    Rect roi1, roi2;
    Mat Q;

    if( intrinsic_filename )
    {
        // reading intrinsic parameters
        FileStorage fs(intrinsic_filename, CV_STORAGE_READ);
        if( !fs.isOpened() )
        {
            printf("Failed to open file %s\n", intrinsic_filename);
            return -1;
        }

        Mat M1, D1, M2, D2;
        fs["M1"] >> M1;
        fs["D1"] >> D1;
        fs["M2"] >> M2;
        fs["D2"] >> D2;

        M1 *= scale;
        M2 *= scale;

        fs.open(extrinsic_filename, CV_STORAGE_READ);
        if( !fs.isOpened() )
        {
            printf("Failed to open file %s\n", extrinsic_filename);
            return -1;
        }

        Mat R, T, R1, P1, R2, P2;
        fs["R"] >> R;
        fs["T"] >> T;

        stereoRectify( M1, D1, M2, D2, img_size, R, T, R1, R2, P1, P2, Q, CALIB_ZERO_DISPARITY, -1, img_size, &roi1, &roi2 );

        Mat map11, map12, map21, map22;
        initUndistortRectifyMap(M1, D1, R1, P1, img_size, CV_16SC2, map11, map12);
        initUndistortRectifyMap(M2, D2, R2, P2, img_size, CV_16SC2, map21, map22);

        Mat img1r, img2r;
        remap(img1, img1r, map11, map12, INTER_LINEAR);
        remap(img2, img2r, map21, map22, INTER_LINEAR);

        img1 = img1r;
        img2 = img2r;
    }

    numberOfDisparities = numberOfDisparities > 0 ? numberOfDisparities : ((img_size.width/8) + 15) & -16;

    bm.state->roi1 = roi1;
    bm.state->roi2 = roi2;
    bm.state->preFilterCap = 31;
    bm.state->SADWindowSize = SADWindowSize > 0 ? SADWindowSize : 9;
    bm.state->minDisparity = 0;
    bm.state->numberOfDisparities = numberOfDisparities;
    bm.state->textureThreshold = 10;
    bm.state->uniquenessRatio = 15;
    bm.state->speckleWindowSize = 100;
    bm.state->speckleRange = 32;
    bm.state->disp12MaxDiff = 1;

    sgbm.preFilterCap = 63;
    sgbm.SADWindowSize = SADWindowSize > 0 ? SADWindowSize : 3;

    int cn = img1.channels();

    sgbm.P1 = 8*cn*sgbm.SADWindowSize*sgbm.SADWindowSize;
    sgbm.P2 = 32*cn*sgbm.SADWindowSize*sgbm.SADWindowSize;
    sgbm.minDisparity = 0;
    sgbm.numberOfDisparities = numberOfDisparities;
    sgbm.uniquenessRatio = 10;
    sgbm.speckleWindowSize = bm.state->speckleWindowSize;
    sgbm.speckleRange = bm.state->speckleRange;
    sgbm.disp12MaxDiff = 1;
    sgbm.fullDP = alg == STEREO_HH;

    var.levels = 3;                                 // ignored with USE_AUTO_PARAMS
    var.pyrScale = 0.5;                             // ignored with USE_AUTO_PARAMS
    var.nIt = 25;
    var.minDisp = -numberOfDisparities;
    var.maxDisp = 0;
    var.poly_n = 3;
    var.poly_sigma = 0.0;
    var.fi = 15.0f;
    var.lambda = 0.03f;
    var.penalization = var.PENALIZATION_TICHONOV;   // ignored with USE_AUTO_PARAMS
    var.cycle = var.CYCLE_V;                        // ignored with USE_AUTO_PARAMS
    var.flags = var.USE_SMART_ID | var.USE_AUTO_PARAMS | var.USE_INITIAL_DISPARITY | var.USE_MEDIAN_FILTERING;

    Mat disp, disp8;
    //Mat img1p, img2p, dispp;
    //copyMakeBorder(img1, img1p, 0, 0, numberOfDisparities, 0, IPL_BORDER_REPLICATE);
    //copyMakeBorder(img2, img2p, 0, 0, numberOfDisparities, 0, IPL_BORDER_REPLICATE);

    int64 t = getTickCount();
    if( alg == STEREO_BM )
        bm(img1, img2, disp);
    else if( alg == STEREO_VAR )
        var(img1, img2, disp);
    else if( alg == STEREO_SGBM || alg == STEREO_HH )
        sgbm(img1, img2, disp);
    t = getTickCount() - t;
    printf("Time elapsed: %fms\n", t*1000/getTickFrequency());

    //disp = dispp.colRange(numberOfDisparities, img1p.cols);
    // BM/SGBM return fixed-point disparities (value * 16); map them to 0..255 for display
    if( alg != STEREO_VAR )
        disp.convertTo(disp8, CV_8U, 255/(numberOfDisparities*16.));
    else
        disp.convertTo(disp8, CV_8U);

    if( !no_display )
    {
        namedWindow("left", 0);
        imshow("left", img1);
        namedWindow("right", 0);
        imshow("right", img2);
        imshow("disparity", disp8);
        printf("press any key to continue...");
        fflush(stdout);
        waitKey();
        printf("\n");
    }

    if( disparity_filename )
        imwrite(disparity_filename, disp8);

    if( point_cloud_filename )
    {
        printf("storing the point cloud...");
        fflush(stdout);
        Mat xyz, dispf;
        // Convert BM/SGBM fixed-point disparities to pixels before reprojection,
        // so that the 3D coordinates come out in the calibration's units
        if( alg != STEREO_VAR )
            disp.convertTo(dispf, CV_32F, 1./16);
        else
            dispf = disp;
        reprojectImageTo3D(dispf, xyz, Q, true);
        saveXYZ(point_cloud_filename, xyz);
        printf("\n");
    }

    return 0;
}
```
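Two details of the program above are easy to miss. First, `StereoBM` and `StereoSGBM` return 16-bit fixed-point disparities with 4 fractional bits (true disparity × 16). This is why the display conversion divides by `numberOfDisparities*16`. Second, the default search range `((width/8) + 15) & -16` rounds width/8 up to a multiple of 16. The sketch below reproduces both computations in plain C++. The function names are illustrative, and the 8-bit mapping mirrors `convertTo(..., CV_8U, 255/(numberOfDisparities*16.))` up to tie-breaking in the rounding.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Default search range: width/8 rounded UP to a multiple of 16
// (x & -16 clears the 4 low bits in two's complement).
int defaultNumDisparities(int imageWidth) {
    return ((imageWidth / 8) + 15) & -16;
}

// StereoBM/StereoSGBM store disparity * 16 in a signed 16-bit value.
double fixedPointToPixels(std::int16_t raw) {
    return raw / 16.0;
}

// Scale to 0..255, round to nearest and saturate, like the display conversion.
// Negative raw values (e.g. pixels the matcher rejected) become 0 (black).
unsigned char toDisplay8U(std::int16_t raw, int numberOfDisparities) {
    double v = raw * 255.0 / (numberOfDisparities * 16.0);
    if (v < 0) v = 0;
    if (v > 255) v = 255;
    return static_cast<unsigned char>(std::lround(v));
}
```

For a 640-pixel-wide image the default range is 80 disparities. A raw value of 200 means a disparity of 12.5 px.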

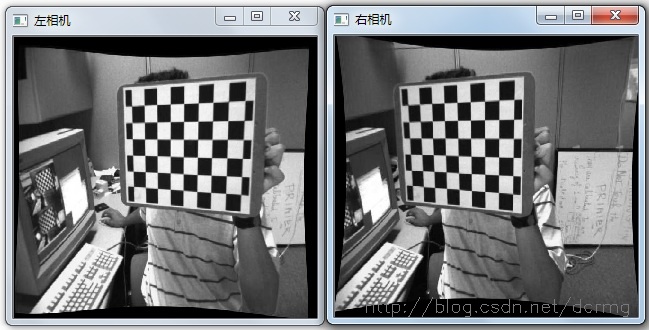

Rectification result for the left and right cameras:

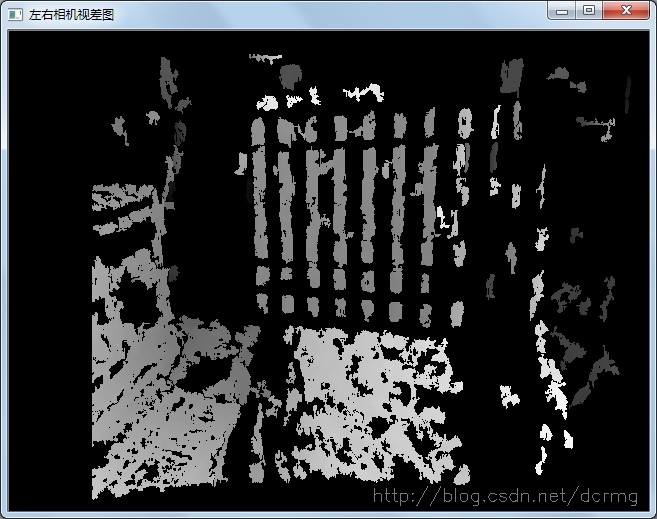

Disparity map of the left and right cameras:

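In the disparity map, brightness encodes disparity. The brighter a pixel, the larger its disparity, and therefore the closer that point is to the camera, because depth is inversely proportional to disparity.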
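Depth can be recovered from the disparity with the Q matrix that `stereoRectify` writes to extrinsics.yml. `reprojectImageTo3D` computes [X Y Z W]^T = Q·[x y d 1]^T and divides by W. The sketch below does this for a single pixel in plain C++. It assumes the Q layout documented for `stereoRectify` with `CALIB_ZERO_DISPARITY` (the same principal point cx in both rectified images) and a horizontal rig. The calibration values in the test are made up.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat4 = std::array<std::array<double, 4>, 4>;

// Q as documented for stereoRectify, assuming cx' == cx (CALIB_ZERO_DISPARITY).
// Tx is the x-translation of the right camera in the rectified frame;
// for a left/right rig it is usually negative, so -1/Tx = 1/baseline.
Mat4 makeQ(double f, double cx, double cy, double Tx) {
    return {{{1, 0, 0, -cx},
             {0, 1, 0, -cy},
             {0, 0, 0, f},
             {0, 0, -1.0 / Tx, 0}}};
}

// [X Y Z W]^T = Q * [x y d 1]^T, then divide by W (d must be non-zero).
std::array<double, 3> reproject(const Mat4& Q, double x, double y, double d) {
    const double in[4] = {x, y, d, 1.0};
    double out[4] = {0, 0, 0, 0};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            out[i] += Q[i][j] * in[j];
    return {out[0] / out[3], out[1] / out[3], out[2] / out[3]};
}
```

With f = 800 px, a 0.06 m baseline and d = 16 px, Z comes out as 800 × 0.06 / 16 = 3 m. Doubling the disparity halves the depth, which is why brighter (larger-disparity) pixels are nearer.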
