Bidirectional: topic collection

Paper reading: Agreement-Based Joint Training for Bidirectional Attention-Based Neural Machine Translation

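A bidirectional attention model that tries to keep attention consistent in both directions. The central idea is that, on the same training data, the source-to-target and target-to-source models should agree on their alignment matrices. This removes some attention noise and makes attention more focused and accurate. The paper's strength is its idea: improving attention by requiring the forward and backward attention to agree.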
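
The agreement idea can be sketched in a few lines of NumPy. This is a minimal illustration, not the paper's code: it assumes the source-to-target attention matrix has shape (target length, source length) and the target-to-source one has shape (source length, target length), so the latter is transposed before the two are compared. The squared element-wise difference used as the penalty, and the names `agreement_loss` and `softmax`, are hypothetical choices for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    """Row-wise softmax, used here only to build toy attention matrices."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def agreement_loss(attn_s2t, attn_t2s):
    """Hypothetical disagreement penalty between the two alignment matrices.

    attn_s2t: (tgt_len, src_len), row i = attention of target word i over the source.
    attn_t2s: (src_len, tgt_len), row j = attention of source word j over the target.
    """
    aligned = attn_t2s.T  # transpose to (tgt_len, src_len) so the cells line up
    return float(np.sum((attn_s2t - aligned) ** 2))

rng = np.random.default_rng(0)
a = softmax(rng.normal(size=(3, 4)))  # toy source-to-target attention
b = softmax(rng.normal(size=(4, 3)))  # toy target-to-source attention
print(agreement_loss(a, b))    # a positive value: the two directions disagree
print(agreement_loss(a, a.T))  # 0.0: perfectly agreeing alignments
```

In joint training, a penalty like this (scaled by a weight) would be subtracted from the sum of the two directional log-likelihoods, pushing both models toward the same alignments.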

Robot path planning: robot path planning based on the bidirectional A* algorithm (bidirectional a star) (Python code provided)

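1. Introduction to bidirectional A* The traditional A* algorithm is one of the most effective methods for finding shortest paths in a static road network; it combines the strengths of best-first search (BFS) and Dijkstra's algorithm. Like Dijkstra's algorithm, A* can be used to search for shortest paths; like best-first search, A* uses a heuristic function to steer it toward the currently most promising solution. The key feature of traditional A* is that, when expanding the next node, it introduces a heuristic function h(n) that, for the distance cost from the current node to the goal node, performs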

Bidirectional A*

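Bidirectional A*: A* (A star) search is run from the start and from the goal at the same time until the two searches meet.
From the start side: Fs = Gs + Hs, where Gs is the distance from the start to the current node and Hs is the heuristic cost from the current node to the goal.
From the goal side: Fe = Ge + He, where Ge is the distance from the goal to the current node and He is the heuristic cost from the current node to the start.
Cost of the whole path = Gs + Ge, taken at the node where the two searches meet.
Characteristics: typically faster than standard (one-directional) A*.
Other path planning algorithms: an overview of path planning algorithms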
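
The procedure can be sketched in Python. This is not the blog's code, only a minimal sketch under stated assumptions: a 4-connected grid where 0 is a free cell and 1 an obstacle, unit step cost, and Manhattan distance used as both Hs (toward the goal) and He (toward the start). Each step expands the smaller frontier. Whenever a cell has been reached from both sides, Gs + Ge at that cell is a candidate path cost. The search stops once the best candidate is no larger than the minimum f-value of at least one of the two open lists, because each of those minima is a lower bound on any path not yet found. `bidirectional_astar` and its helpers are hypothetical names.

```python
import heapq

def neighbors(grid, node):
    """Yield free 4-connected neighbours (0 = free cell, 1 = obstacle)."""
    r, c = node
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < len(grid) and 0 <= nc < len(grid[0]) and grid[nr][nc] == 0:
            yield (nr, nc)

def manhattan(a, b):
    return abs(a[0] - b[0]) + abs(a[1] - b[1])

def bidirectional_astar(grid, start, goal):
    """Return a shortest path from start to goal as a list of cells, or None."""
    if start == goal:
        return [start]
    INF = float("inf")
    # Index 0 = search from the start (Gs, Hs); index 1 = search from the goal (Ge, He).
    g = [{start: 0}, {goal: 0}]
    parent = [{start: None}, {goal: None}]
    target = [goal, start]  # each search's heuristic points at the other endpoint
    open_lists = [[(manhattan(start, goal), start)], [(manhattan(goal, start), goal)]]
    closed = [set(), set()]
    best, meet = INF, None  # best Gs + Ge found so far and the cell where it occurs

    while open_lists[0] and open_lists[1]:
        for d in (0, 1):  # discard stale heap entries of already-expanded cells
            while open_lists[d] and open_lists[d][0][1] in closed[d]:
                heapq.heappop(open_lists[d])
        if not open_lists[0] or not open_lists[1]:
            break
        # Each top f-value is a lower bound on any path not found yet.
        if max(open_lists[0][0][0], open_lists[1][0][0]) >= best:
            break
        d = 0 if len(open_lists[0]) <= len(open_lists[1]) else 1  # grow the smaller front
        _, node = heapq.heappop(open_lists[d])
        closed[d].add(node)
        for nb in neighbors(grid, node):
            new_g = g[d][node] + 1
            if new_g < g[d].get(nb, INF):
                g[d][nb] = new_g
                parent[d][nb] = node
                heapq.heappush(open_lists[d], (new_g + manhattan(nb, target[d]), nb))
                if nb in g[1 - d] and new_g + g[1 - d][nb] < best:
                    best, meet = new_g + g[1 - d][nb], nb

    if meet is None:
        return None
    path, n = [], meet
    while n is not None:  # walk back from the meeting cell to the start
        path.append(n)
        n = parent[0][n]
    path.reverse()
    n = parent[1][meet]
    while n is not None:  # then follow the backward search's parents to the goal
        path.append(n)
        n = parent[1][n]
    return path

grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
print(bidirectional_astar(grid, (0, 0), (2, 0)))
# [(0, 0), (0, 1), (0, 2), (0, 3), (1, 3), (2, 3), (2, 2), (2, 1), (2, 0)]
```

Growing the smaller frontier is one common balancing rule; strictly alternating between the two directions would also work.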

Video super-resolution: BRCN (Video Super-Resolution via Bidirectional Recurrent Convolutional Networks)

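Paper: a video super-resolution method using bidirectional recurrent convolutional networks. Code: https://github.com/linan142857/BRCN Source: IEEE TPAMI 2017 Highlights: Because RNNs can model the long-term temporal dependencies of video sequences well, this paper proposes a bidirectional recurrent convolutional network (BRCN). The main contributions are: 1) a bidirectional recurrent convolutional network suited to multi-frame SR, in which temporal dependencies are modeled efficiently by recurrent convolutions and 3D feedforward convolutions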

Machine reading comprehension in practice with Bidirectional Attention Flow

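Machine reading is one of the key technologies for machine cognitive intelligence. Machine reading tasks fall into two main categories: cloze tests and reading comprehension. (1) Cloze-style question answering is, simply put, a matching problem. The general approach is: 1) obtain representations of the words in the document; 2) obtain a representation of the question; 3) compute a matching score between each document word and the question, and pick the best one. (2) Span-style question answering is conceptually very similar to cloze-style question answering; the main difference is that the target of a cloze test is a single word in the document, whereas the target of reading comprehension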
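
The three cloze-style steps can be sketched as follows. This is a toy illustration under loudly stated assumptions: word representations come from a hand-made embedding table, the question representation is simply the mean of its word vectors, and the matching score is a dot product. Real readers such as BiDAF learn these representations with neural encoders; `answer_cloze` and the embedding values are hypothetical.

```python
import numpy as np

def answer_cloze(doc_tokens, question_tokens, embed):
    """Toy cloze answerer: return the document word that best matches the question."""
    # 1) Representation of each document word (here: a fixed embedding lookup).
    doc_vecs = np.stack([embed[w] for w in doc_tokens])
    # 2) Representation of the question (assumption: mean of its word vectors).
    q_vec = np.mean([embed[w] for w in question_tokens], axis=0)
    # 3) Matching score of every document word against the question; keep the best.
    scores = doc_vecs @ q_vec
    return doc_tokens[int(np.argmax(scores))], scores

# Hypothetical 2-d embeddings, hand-picked so the example is easy to follow.
embed = {
    "paris": np.array([1.0, 0.0]),
    "is": np.array([0.0, 0.1]),
    "a": np.array([0.0, 0.1]),
    "city": np.array([0.0, 1.0]),
    "capital": np.array([0.9, 0.1]),
    "france": np.array([0.8, 0.2]),
}
best, scores = answer_cloze(["paris", "is", "a", "city"], ["capital", "france"], embed)
print(best)  # paris
```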

Accurate prediction of protein contact maps by coupling residual two-dimensional bidirectional long

Paper title: Accurate prediction of protein contact maps by coupling residual two-dimensional bidirectional long short-term memory with convolutional neural networks Download link: https://academic.oup.com/bioinformat

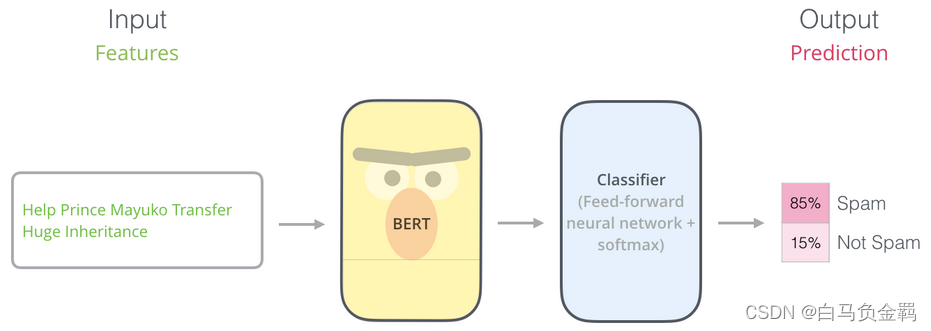

BERT (from theory to practice): Bidirectional Encoder Representations from Transformers [3]

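This is the third installment in this series. Please make sure you have read and understood the previous articles, because concepts and APIs already explained there will not be repeated here. In this article we use BERT to build a "spam email classification" task, a very common NLP task: text classification. Our experimental environment is still Python 3 + TensorFlow/Keras. 1. Data preparation First, load the necessary packages/libraries.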

BERT (from theory to practice): Bidirectional Encoder Representations from Transformers [1]

Pre-trained model: A pre-trained model is a saved network that was previously trained on a large dataset, typically on a large-scale image-classification task. You either use the pretrained model as is or use tran

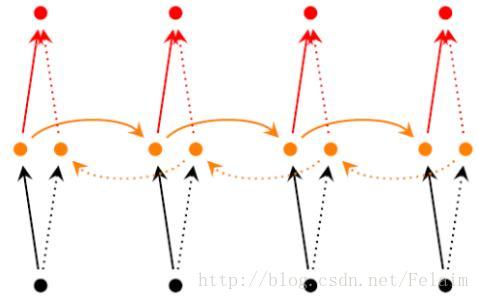

Implementing a Bidirectional LSTM Classifier in TensorFlow

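1. Introduction to bidirectional recurrent neural networks Bidirectional Recurrent Neural Networks (Bi-RNN) were first proposed by Schuster and Paliwal in 1997, the same year LSTM was proposed. The main goal of a Bi-RNN is to increase the information available to the RNN. A standard RNN cannot use information that comes after a given input in the sequence; a Bi-RNN removes this limitation, since at each position it can use both the past and the future data of the sequence.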
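
The idea of combining past and future context can be shown with a plain NumPy forward pass. A minimal sketch, assuming simple tanh RNN cells instead of LSTM cells and random weights: one RNN reads the sequence left to right, a second reads it right to left, and their hidden states are concatenated at each time step. The names `rnn_pass` and `birnn` are hypothetical; in TensorFlow/Keras the `tf.keras.layers.Bidirectional` wrapper plays this role and concatenates the two directions by default.

```python
import numpy as np

def rnn_pass(xs, W_x, W_h, b):
    """Simple tanh RNN over a sequence; returns the hidden state at every step."""
    h = np.zeros(W_h.shape[0])
    states = []
    for x in xs:
        h = np.tanh(W_x @ x + W_h @ h + b)
        states.append(h)
    return np.stack(states)

def birnn(xs, fwd_params, bwd_params):
    """Bi-RNN sketch: one RNN reads forward, another reads backward."""
    h_fwd = rnn_pass(xs, *fwd_params)              # step t has seen x_1..x_t (history)
    h_bwd = rnn_pass(xs[::-1], *bwd_params)[::-1]  # step t has seen x_t..x_T (future)
    return np.concatenate([h_fwd, h_bwd], axis=1)  # shape (T, 2 * hidden)

rng = np.random.default_rng(0)
hidden, n_in, T = 4, 3, 5

def make_params():
    """Random (W_x, W_h, b) for one direction; untrained stand-ins."""
    return (rng.normal(size=(hidden, n_in)),
            rng.normal(size=(hidden, hidden)),
            rng.normal(size=hidden))

xs = rng.normal(size=(T, n_in))
out = birnn(xs, make_params(), make_params())
print(out.shape)  # (5, 8)
```

Changing the last input of the sequence changes the backward half of the output at the first step, while the forward half at that step stays the same; that is exactly the extra "future" information a Bi-RNN provides.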

8.1.2 Hibernate: one-to-one bidirectional association

1 Defining the mapping classes 1.1 Mapping class for the phone table:
package hibernate;
import javax.persistence.CascadeType;
import javax.persistence.Column;
import javax.persistence.Entity;
import javax.persistence.FetchType;
import javax

BERT-Bidirectional Encoder Representations from Transformers

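BERT, or Bidirectional Encoder Representations from Transformers. BERT is a recent NLP pre-training method from Google: a general-purpose "language understanding" model is trained on a large text corpus (such as Wikipedia) and then used for the downstream NLP tasks we care about (such as classification and reading comprehension). BERT outperforms previous methods because it is the first **unsupervised, deeply bidirectional** system for pre-training NLP.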

Classic paper reading -- Bidirectional Camera-LiDAR Fusion (a new paradigm of bidirectional Camera-LiDAR fusion)

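0. Introduction Multimodal fusion between LiDAR and cameras is very important. The paper "Learning Optical Flow and Scene Flow with Bidirectional Camera-LiDAR Fusion" proposes a multi-stage bidirectional fusion framework and builds two models on it, CamLiRAFT and CamLiPWC, based on the RAFT and PWC architectures respectively. The code is available at https://githu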

Paper brief | "Attention-Based Bidirectional Long Short-Term Memory Networks for Relation Classification"

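Overview This is a 2016 ACL paper titled "Attention-Based Bidirectional Long Short-Term Memory Networks for Relation Classification", which introduces an attention-based bidirectional LSTM network for relation classification. The code for this paper is openly available at https://paperswithcode.com/dataset/semeval-20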
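
The attention layer on top of the BiLSTM can be sketched in NumPy. This follows our reading of the paper's formulation, which should be treated as an assumption and checked against the paper: with H the matrix of BiLSTM outputs (one column per token), M = tanh(H), alpha = softmax(w^T M), r = H alpha^T, and the sentence representation h* = tanh(r), which then feeds a softmax classifier over relation labels. The BiLSTM outputs below are random stand-ins, and `attention_pool` is a hypothetical name.

```python
import numpy as np

def attention_pool(H, w):
    """Attention pooling over BiLSTM outputs (sketch).

    H: (d, T) matrix; column t is the BiLSTM output for token t.
    w: (d,) attention parameter vector (would be learned during training).
    Returns the sentence vector h* of shape (d,) and the token weights alpha (T,).
    """
    M = np.tanh(H)                  # (d, T)
    scores = w @ M                  # (T,) one score per token
    alpha = np.exp(scores - scores.max())
    alpha /= alpha.sum()            # softmax over tokens
    r = H @ alpha                   # (d,) attention-weighted sum of token outputs
    return np.tanh(r), alpha

rng = np.random.default_rng(0)
H = rng.normal(size=(6, 4))  # stand-in for BiLSTM outputs: d = 6, T = 4 tokens
w = rng.normal(size=6)
h_star, alpha = attention_pool(H, w)
print(h_star.shape, alpha.round(3))
```

With w set to zeros every token gets the same weight, so h* reduces to tanh of the average BiLSTM output; training w lets the model emphasize the tokens most relevant to the relation.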

bidirectional lstm keras_A hands-on LSTM-based multivariate multi-step sequence forecasting model (a very detailed implementation walkthrough)...

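Personal blog: https://yishuihancheng.blog.csdn.net [CSDN Blog Expert] Hello everyone, and welcome to my CSDN blog [Together_CZ]. I am 沂水寒城 (Yishuihancheng), and as a technologist I am glad to keep learning, growing, and sharing my progress and technical experience. This article uses an LSTM (Long Short-Term Memory) neural network to practice multivariate sequence forecasting and to complete, for a specified number of future steps,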
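
The data preparation behind multivariate multi-step forecasting can be sketched as a sliding window. A minimal sketch, assuming the value to forecast sits in column 0 and using the hypothetical name `make_windows`: each sample takes `n_in` past time steps of all variables as input and the next `n_out` values of the target column as output. The resulting X has the (samples, timesteps, features) layout that Keras LSTM layers expect as input.

```python
import numpy as np

def make_windows(series, n_in, n_out, target_col=0):
    """Frame a multivariate series as supervised samples.

    series: (T, n_features) array, one row per time step.
    Returns X of shape (samples, n_in, n_features) and
    y of shape (samples, n_out) with the next n_out values of target_col.
    """
    X, y = [], []
    for start in range(len(series) - n_in - n_out + 1):
        end = start + n_in
        X.append(series[start:end])                   # past n_in steps, all variables
        y.append(series[end:end + n_out, target_col])  # next n_out target values
    return np.array(X), np.array(y)

series = np.arange(20, dtype=float).reshape(10, 2)  # 10 time steps, 2 variables
X, y = make_windows(series, n_in=3, n_out=2)
print(X.shape, y.shape)  # (6, 3, 2) (6, 2)
```

A model trained on these pairs predicts all `n_out` future steps at once from one input window, which is one common way to set up multi-step forecasting.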