本文主要是介绍[论文精读]How Powerful are Graph Neural Networks?,希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

论文原文:[1810.00826] How Powerful are Graph Neural Networks? (arxiv.org)

英文是纯手打的!论文原文的summarizing and paraphrasing。可能会出现难以避免的拼写错误和语法错误,若有发现欢迎评论指正!文章偏向于笔记,谨慎食用!

1. 省流版

1.1. 心得

①Emm, 数学上的解释性确实很强了

1.2. 论文框架图

2. 论文逐段精读

2.1. Abstract

①Even though the occurrence of Graph Neural Networks (GNNs) changes graph representation learning to a large extent, it and its variants are all limited in representation abilities.

2.2. Introduction

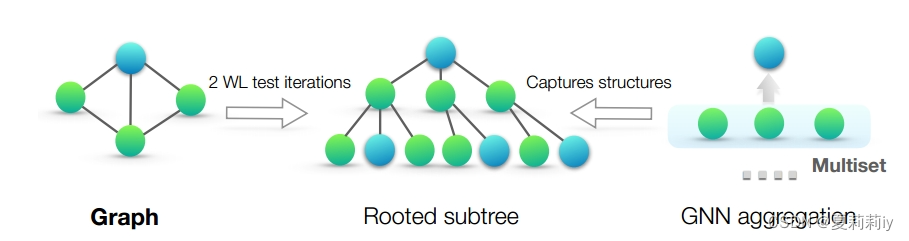

①Briefly introduce how GNN works (combining node information from k-hop neighbors and then pooling)

②The authors hold the view that ⭐ other graph models mostly based on plenty experimental trial-and-errors rather than theoretical understanding

③They combine GNNs and the Weisfeiler-Lehman (WL) graph isomorphism test to build a new framework, which relys on multisets

④GIN is excellent in distinguish, capturing and representaion

heuristics n.[U] (formal) 探索法;启发式

heuristic adj.(教学或教育)启发式的

2.3. Preliminaries

(1)Their definition

①They define two tasks: node classicifation with node label and graph classification with graph label

(2)Other models

①The authors display the function of GNN in the -th layer:

where only is initialized to

(其余细节就不多说了,在GNN的笔记里都有)

②Pooling layer of GraphSAGE, the AGGREGATE function is:

where MAX is element-wise max-pooling operator;

is learnable weight matrix;

and followed by concatenated COMBINE and linear mapping

③AGGREGATE and COMBINE areintegrated in GCN:

④Lastly follows a READOUT layer to get final prediction answer:

where the READOUT function can be different forms

(3)Weisfeiler-Lehman (WL) test

①WL firstly aggregates nodes and their neighborhoods and then hashs the labels (??hash?这好吗)

②Based on WL, WL subtree kernel was proposed to evaluate the similarity between graphs

③A subtree of height 's root node is the node at

-th iteration

permutation n.置换;排列(方式);组合(方式)

2.4. Theoretical framework: overview

①The framework overview

②Multiset: is a 2-tuple , where "where

is the underlying set of

that is formed from its distinct elements, and

gives the multiplicity of the elements" (我没有太懂这句话欸)

③They are not allowed that GNN map different neighbors to the same representation. Thus, the aggregation must be injective (我也不造为啥)

2.5. Building powerful graph neural networks

①They define Lemma 2, namely WL graph isomorphism test is able to correctly distinguish non-isomorphic graphs

②Theorem 3 完全没看懂

③Lemma 4: If input feature space is countable, then the space of node hidden features is also countable

2.5.1. Graph isomorphism network (GIN)

①Lemma 5: there is , which makes

unique in

. Also there is

②Corollary 6: there is unique and

.

③Finally, the update function of GIN can be:

2.5.2. Graph-level readout of GIN

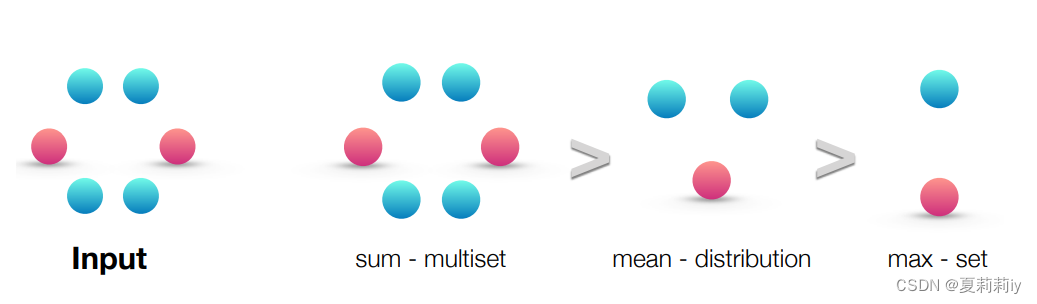

①Sum, mean and max aggregators:

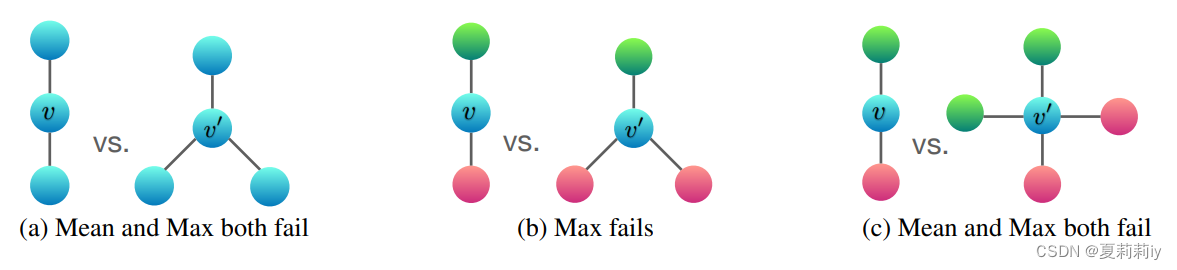

②The fail examples when the different and

map the same embedding:

③They change the READOUT layer to:

2.6. Less powerful but still interesting GNNs

They designed ablation studies

2.6.1. 1-layer perceptrons are not sufficient

①1-layer perceptrons are akin to linear mapping, which is far insufficient for distinguishing

②Lemma 7: notwithstanding multiset is different from

, they might get the same results:

2.6.2. Structures that confuse mean and max-pooling

2.6.3. Mean learns distributions

2.6.4. Max-pooling learns sets with distinct elements

2.6.5. Remarks on other aggregators

2.7. Other related work

2.8. Experiments

2.8.1. Results

2.9. Conclusion

3. 知识补充

4. Reference List

Xu, K. et al. (2019) 'How Powerful are Graph Neural Networks?', ICLR 2019. doi: https://doi.org/10.48550/arXiv.1810.00826

这篇关于[论文精读]How Powerful are Graph Neural Networks?的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!

![[论文笔记]LLM.int8(): 8-bit Matrix Multiplication for Transformers at Scale](https://img-blog.csdnimg.cn/img_convert/172ed0ed26123345e1773ba0e0505cb3.png)