reduce Topic

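Java Stream.reduce() Explained with Practical Examples

"Java Stream.reduce() Explained with Practical Examples": reduce is a core operation in the Java Stream API that combines the elements of a stream into a single result. This article mainly introduces Java Stream.... Contents: I. Basic concepts of reduce; 1. What is the reduce operation; 2. The three forms of the reduce method; II. reduce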

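The reduce Method in JavaScript: Execution Process, Use Cases, and Advanced Usage

"The reduce Method in JavaScript: Execution Process, Use Cases, and Advanced Usage": This article mainly covers the execution process, use cases, and advanced usage of the reduce method in JavaScript. reduce is JavaScri... Contents: 1. What is reduce; 2. reduce syntax; 2.1 Syntax; 2.2 Parameters; 3. How reduce executes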

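Python map and reduce Functions

# -*- coding: utf-8 -*- # A function that is passed another function as an argument is called a higher-order function; functional programming refers to this highly abstract programming paradigm. from functools import reduce def normalize(name): name = str.capitalize(name) return name l1 = ['adam', 'LISA', 'barTa'] l2 = list(map(no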

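Python Basics (10): Higher-Order Functions [map(), reduce(), filter(), lambda, sorted] [Higher-order function: a function that can accept other functions as arguments]

Higher-order function: a function that can be passed to another function as an argument, or whose return value is another function (if the return value is the function itself, it is recursion); meeting either condition makes it a higher-order function. I. The map function: map takes two arguments, a function and a sequence, and applies the function to each value in the sequence in turn. Its return value is an iterator object, for example: <map object at 0x00000214EEF40BA8>. Its usage is shown in the figure: Next, let's look at the map function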

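filter for Filtering, reduce for Summing, and

1. The filter() method: filter() is a JavaScript array method, not a filter specific to Vue.js or UniApp. filter() creates a new array containing all elements that pass the test implemented by the provided function. <template>{{sum}}</template><script setup>import {computed, ref} from 'vue';// Initial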

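Summary of Reduction Functions in TensorFlow: tf.reduce_*

When using TensorFlow, functions of the tf.reduce_* family come up frequently; this post summarizes some of the common ones. 1. tf.reduce_sum: tf.reduce_sum(input_tensor, axis=None, keep_dims=False, name=None, reduction_indices=None) Parameters: input_tensor: the tensor to reduce. Should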

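Hadoop 2 Submission to YARN: MapReduce Execution Process, Reduce Analysis (3)

Original article. Guiding questions: 1. What are the three main steps of the Reduce class? 2. What does Reduce's Copy phase involve? 3. What does Sort mainly do? 4.4 The Reduce class 4.4.1 Introduction to Reduce: With Map finished, Reduce comes next. YarnChild.main() -> ReduceTask.run(). The ReduceTask.run method starts out much like MapTask, including the initialize() initial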

Spark RDD Actions: reduce()

textFile.map(line => line.split(" ").size).reduce((a, b) => if (a > b) a else b) The arguments to reduce() are Scala function literals (closures). reduce passes the elements of the RDD to the input function two at a time, producing a new value; the newly produced value and the next
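The pairwise behavior described in this excerpt can be sketched locally in Python with `functools.reduce`. This is only a single-process analogue, not Spark code: the `lines` list is a hypothetical stand-in for `textFile`, and a real RDD applies the function in parallel across partitions, which is why Spark expects the function to be commutative and associative.

```python
from functools import reduce

# Hypothetical stand-in for the lines of textFile.
lines = ["a b c", "hello world", "one two three four"]

# Equivalent of map(line => line.split(" ").size): words per line.
word_counts = [len(line.split(" ")) for line in lines]

# Equivalent of reduce((a, b) => if (a > b) a else b): keep the larger of
# the running result and the next element, two values at a time.
max_words = reduce(lambda a, b: a if a > b else b, word_counts)

print(max_words)  # 4
```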

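A Deep Dive into Python's `map`, `filter`, and `reduce` Functions

A Deep Dive into Python's map, filter, and reduce Functions. In Python programming, map, filter, and reduce are three important higher-order functions that help process data more concisely and elegantly. This article explores how the three are used and demonstrates their practical application through examples. I. The map function 1.1 Overview: The map function applies a specified function to each element of a given iterable and returns an iterator. Its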

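The reduce Method in JS Explained

reduce is a higher-order function on JavaScript arrays that runs a callback on each element of the array in turn and folds the results into a single value. It is powerful and can implement summation, products, object merging, array flattening, and other complex operations. Basic syntax: array.reduce(callback, initialValue) callback: the function executed for each array element; it takes four parameters: accumulato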
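The operations this excerpt lists (summation, products, object merging, array flattening) can be sketched with Python's `functools.reduce` as a rough analogue. This is not the JavaScript API: the Python callback receives only the accumulator and the current element, not the four parameters of the JavaScript callback, and the sample data is made up for illustration.

```python
from functools import reduce

# Sum with an explicit initial value, analogous to array.reduce(callback, 0).
total = reduce(lambda acc, x: acc + x, [1, 2, 3, 4], 0)

# Product, starting from the multiplicative identity.
product = reduce(lambda acc, x: acc * x, [1, 2, 3, 4], 1)

# Merge dictionaries; later keys overwrite earlier ones.
merged = reduce(lambda acc, d: {**acc, **d}, [{"a": 1}, {"b": 2}, {"a": 3}], {})

# Flatten one level of nesting.
flat = reduce(lambda acc, xs: acc + xs, [[1, 2], [3], [4, 5]], [])

print(total, product, merged, flat)
```

Passing the initial value explicitly also makes the call safe on an empty input, where `reduce` without one raises `TypeError`.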

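Spark Large-Scale Project in Practice (44): Troubleshooting: Controlling the Shuffle Reduce-Side Buffer Size to Avoid OOM

1. Map-side tasks output data continuously, and the volume can be large. However, reduce-side tasks do not wait until the map-side tasks have written all of their share of the data to disk files before pulling it. As soon as the map side writes some data, the reduce-side task pulls a small portion and immediately applies the subsequent aggregation and operator functions. How much data reduce can pull each time is determined by the buffer, because pulled data is first placed in the buffer. Then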

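Applying Python's Higher-Order Functions map, reduce, and filter

Definitions. map (mapping function): map() takes a function F and an iterable sequence, applies F to each element of the sequence in turn, and returns a new list (in Python 3, an iterator). reduce (recursive mapping function): reduce() applies a function to a sequence; the function must take two arguments, and reduce keeps applying it to the running result and the next element of the sequence. filter (filtering function): filter() is similar to map(): it takes a function F and an iterable sequence, except that here F is a predicate function.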
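A minimal sketch of the three definitions above, assuming Python 3 (where `reduce` lives in `functools`, and `map` and `filter` return iterators that are wrapped in `list()` here). The sample list and lambdas are illustrative only.

```python
from functools import reduce

numbers = [1, 2, 3, 4, 5]

# map: apply a function to every element.
squares = list(map(lambda x: x * x, numbers))

# reduce: combine the running result with the next element, two at a time.
total = reduce(lambda acc, x: acc + x, numbers)

# filter: keep only the elements for which the predicate returns True.
evens = list(filter(lambda x: x % 2 == 0, numbers))

print(squares)  # [1, 4, 9, 16, 25]
print(total)    # 15
print(evens)    # [2, 4]
```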

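Introduction to MongoDB Map-Reduce

Introduction to MongoDB Map-Reduce. MongoDB is a popular NoSQL database that stores data as documents in a JSON-like format (BSON). MongoDB offers several ways to process data, among which Map-Reduce is a powerful tool for batch processing and aggregation. Map-Reduce lets users run custom aggregation over large amounts of data and suits complex queries and data transformation tasks. Basic concepts of Map-Reduce: Ma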

Spark Operators: RDD Action Operations: first/count/reduce/collect/collectAsMap

first def first(): T first returns the first element of the RDD, without sorting. scala> var rdd1 = sc.makeRDD(Array(("A","1"),("B","2"),("C","3")),2) rdd1: org.apache.spark.rdd.RDD[(String, String)] = ParallelCollectionRDD[33] at mak

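Hadoop 3: Custom OutputFormat Logic in the Reduce Phase of MapReduce

I. Scenario: As we know, at the start of the MapTask phase an InputFormat is needed to read the data, and at the end of the ReduceTask phase the processed data is written to disk, which is where OutputFormat comes in. In earlier programs we never configured this part, so the default output format, TextOutputFormat, was used. In real work, output does not necessarily go to disk; it may go to MySQL, HBase, and so on. So, how do we implement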

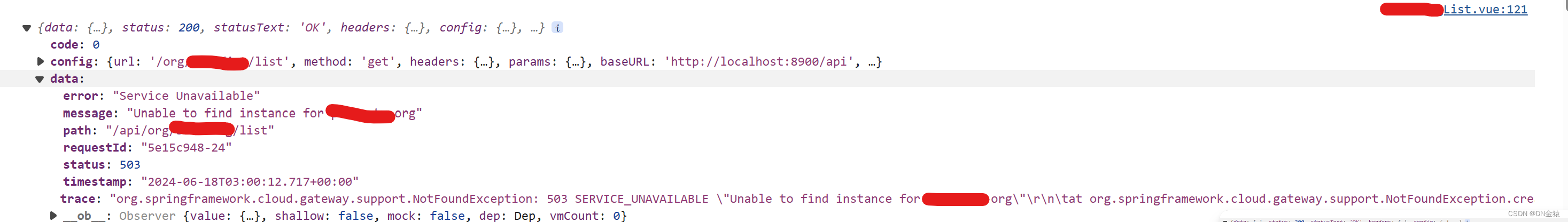

Vue Page Throws TypeError: data.reduce is not a function When Initializing Table Data on the Front End

This error is raised when initializing the table data. [Vue warn]: Invalid prop: type check failed for prop "data". Expected Array, got Object found in ---> <ElTable> at packages/table/src/table.vue <List> at src/views/org/List.vue <Catalogu

The np.add.reduce() Function

A brief introduction to using the reduce() function in the numpy module. The code is as follows: # -*- coding: utf-8 -*- import numpy as np class Debug: def __init__(self): self.array1 = np.array([1, 2, 3, 4]) self.array2 = np.array([5, 6, 7, 8]) self.array3

Hadoop Map/Reduce Implementation

Original link: http://horicky.blogspot.com/2008/11/hadoop-mapreduce-implementation.html Hadoop Map/Reduce Implementation In my previous post, I talk about the methodology of transforming a sequential algo

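Reduce Side Join in MapReduce

mapper side join: this was not covered in detail, but it is done inside each Mapper. reduce side join: the instructor explained this very clearly. For example, with CustomerMapper and OrderMapper, each emits a key-value pair, where the key is a field that both tables share, such as Customer_Id. Of course, on the order side one Customer may have multiple orders, so it is multiple order records that make up the va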

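Functional Programming in Python: map() and reduce()

map Functional programming in Python: using map (one seq) # using map print(list(map(lambda x: x % 3, range(6)))) # [0, 1, 2, 0, 1, 2] # using a list comprehension print([x % 3 for x in range(6)]) # [0, 1, 2, 0, 1, 2] Functional programming in Python: using map (multiple seqs) prin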

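The Python Tutorial Study Notes (2): Introduction to map, reduce, and filter

The Python Tutorial Study Notes (2): Introduction to map, reduce, and filter. filter ==filter(function, iterable)== function is a function that returns a boolean. If function is None, the identity function is assumed, so only elements that are themselves falsy are filtered out. iterable can be a sequence, a container that supports iteration, or an iterator; in other words, it is not limited to sequences. ```def f(x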

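13.3 Spark Tuning: JVM Tuning, Shuffle Tuning, Reduce OOM

JVM tuning: Under static resource allocation, the Executor JVM heap is divided into three parts: (60% (where RDDs and broadcast variables are stored) + 20% (execution memory) + 20% (reduce aggregation memory)) * 90% + 10% (reserved for the JVM itself) = JVM heap memory. JVM GC flow (within execution memory): objects created in a task are first placed in eden and survivor1, while survivor2 is idle. When the eden and survivor1 regions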

Using reduce to Recursively Filter Data That Matches a Condition

Image demo <!DOCTYPE html><html lang="en"><head><meta charset="UTF-8"><meta name="viewport" content="width=device-width, initial-scale=1.0"><title>Document</title></head><body><script>let newArray =

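An In-Depth Look at MongoDB Map-Reduce: A Powerful Tool for Data Aggregation and Analysis

Map-Reduce is a method for processing and generating large data sets, and MongoDB supports Map-Reduce operations for carrying out complex data aggregation tasks. A Map-Reduce operation consists of two phases: the Map phase and the Reduce phase. Basic syntax: In MongoDB, the db.collection.mapReduce() method runs a Map-Reduce operation. Its basic syntax is as follows: db.collection.m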