本文主要是介绍2020.3 Enhanced meta-learning for cross-lingual named entity recognition with minimal resources 阅读笔记,希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

Motivation

- Problem Setting:

- a) One source language with rich labeled data.

- b) No labeled data in the target language.

- 现有的 Cross-lingula NER 方法可以分为两大类:

- a) Label projection (generate labeled data in target languages)

- parallel data + word alignment information + label projection

- word-to-word or phrase-to-phrase translation + label projection

- b) Direct model transfer (exploit language-independent features)

- Cross-lingual word representations/clusters/wikifier features/gazetteers.

- STOA: multilingual BERT (direct model transfer)

- a) Label projection (generate labeled data in target languages)

- 本文提出:direct model transfer 用到的 source-trained 模型可以进一步提升,因为:

- a) Given test example, 相比于 directly test on it, 还可以 fine-tune the source-trained model with the similar examples.

- 如何 retreive similar examples ? => 利用 cross-lingual 的 sentence representation model 计算source-target sentence pair 之间的 cosine simialarity.

- 以何种方式 similar? => In structure or semantics.

- b) 由于 retrieve across different languages, 所以 the set size of the similar examples 很小。

- c) 所以只能 finetune with a small set and only a few update steps.

- => Fast adapt to new tasks (languages here) with very limited data.

- => Apply meta-learning! (Learn a good parameter initialization of a model (more sentitive to the new task/data)

- a) Given test example, 相比于 directly test on it, 还可以 fine-tune the source-trained model with the similar examples.

- 进一步提出:

- a) masking scheme

- b) a max-loss term

Methodology

- i) 构建 Pseudo-Meta-NER Tasks:

- 把每个 example 看做一个独立的 pseudo test set.

- 用 mBERT [CLS] 做为 sentence representation 计算 cosine similarity.

- 相应的 similar examples 作为 pseudo training set.

- 由此构建 N个 pseuso tasks.

- ii) Meta-training and Adaptation with Pseudo Tasks:

- iii) Masking Scheme:

- Motivation: The learned representations of infrequent entities across different languages are not well-aligned in the shared space. (infrequent entities 在 mBERT 的 training corpus 中出现的比较少)

- How to? => Mask entities in each training examples with a certain probability, to encourage the model to predict through context information.

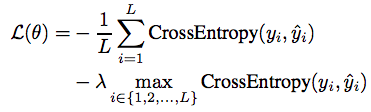

- iv) Max Loss

- Motivation: 对所有 token 的 loss 进行 average 会弱化对于 loss 最高的那个 token 的学习。=> Put more effort in learning from high-loss tokens, which would probably

be corrected during meta-training.

- Motivation: 对所有 token 的 loss 进行 average 会弱化对于 loss 最高的那个 token 的学习。=> Put more effort in learning from high-loss tokens, which would probably

Experimental Results

这篇关于2020.3 Enhanced meta-learning for cross-lingual named entity recognition with minimal resources 阅读笔记的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!