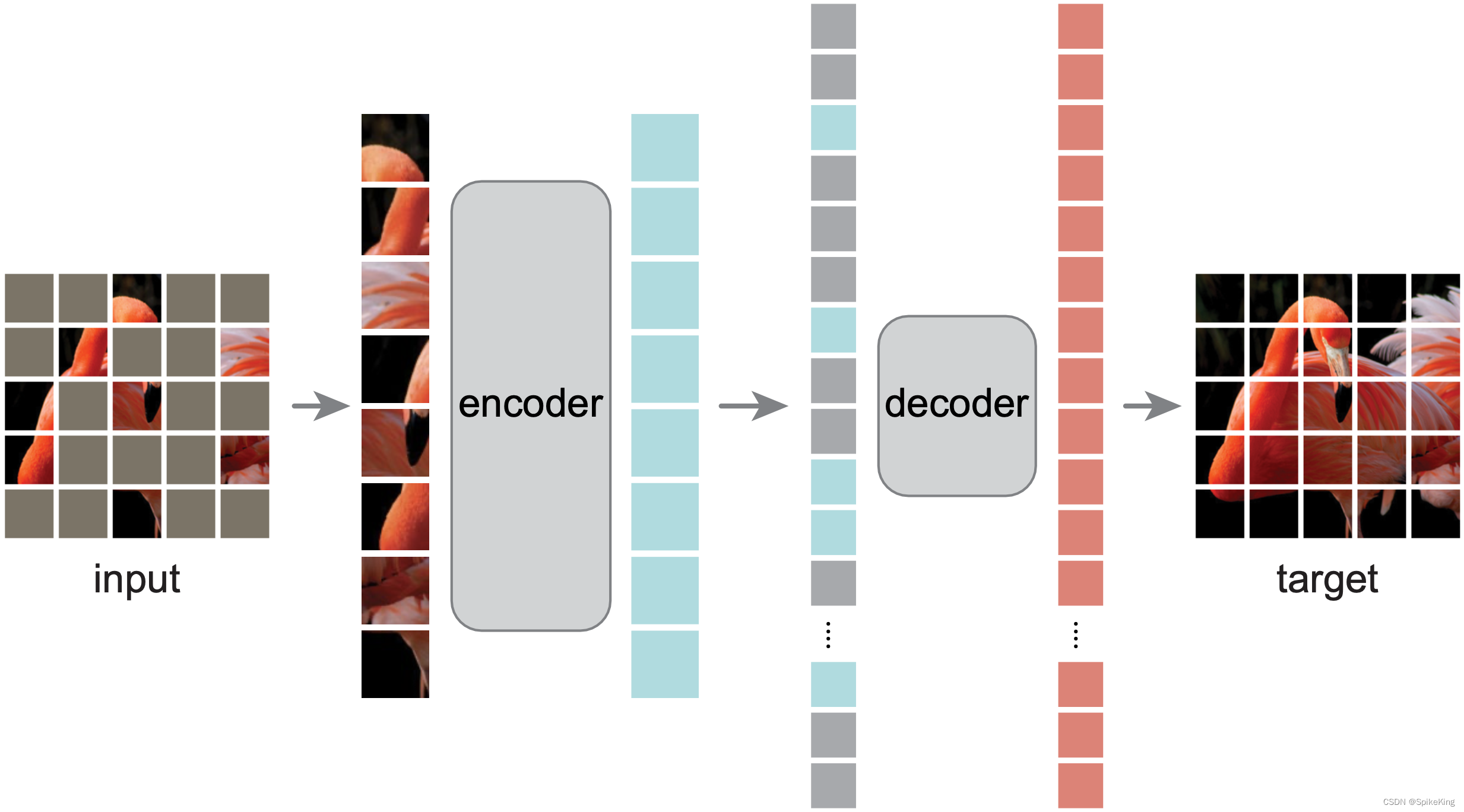

This post presents a PyTorch inference script for MAE (Masked Autoencoders).

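MAE inference script:

- Install: `pip install timm==0.4.5`
- Download the checkpoint `mae_visualize_vit_base.pth` (447 MB)
- Run from a checkout of the official MAE repository (facebookresearch/mae), which provides `models_mae.py`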
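The script's `process()` scales pixels to [0, 1] and normalizes them with the ImageNet per-channel mean and std, and `show_image()` applies the inverse before plotting. The minimal numpy sketch below isolates that round trip. The helper names `normalize` and `denormalize` are hypothetical, and unlike `show_image()` (which truncates with `.int()`), the sketch rounds so that floating-point error cannot shift a pixel down by one.

```python
import numpy as np

# ImageNet channel statistics, the same values MaeInfer.__init__ uses.
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406])
IMAGENET_STD = np.array([0.229, 0.224, 0.225])


def normalize(img_uint8: np.ndarray) -> np.ndarray:
    """Map an [H, W, 3] uint8 image to ImageNet-normalized floats."""
    img = img_uint8.astype(np.float64) / 255.0
    # Broadcasting applies the per-channel stats along the last axis.
    return (img - IMAGENET_MEAN) / IMAGENET_STD


def denormalize(img_norm: np.ndarray) -> np.ndarray:
    """Invert normalize() and return uint8 pixels (rounding, not truncating)."""
    pixels = (img_norm * IMAGENET_STD + IMAGENET_MEAN) * 255
    return np.clip(pixels, 0, 255).round().astype(np.uint8)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.integers(0, 256, size=(224, 224, 3)).astype(np.uint8)
    norm = normalize(img)
    restored = denormalize(norm)
    print(norm.shape, np.array_equal(restored, img))
```

Because the statistics are per channel, the input must have exactly three channels in the last axis, which is why the script asserts `img.shape == (224, 224, 3)`.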

源码:

#!/usr/bin/env python

# -- coding: utf-8 --

"""

Copyright (c) 2022. All rights reserved.

Created by C. L. Wang on 2022/10/21

"""import osimport matplotlib.pyplot as plt

import numpy as np

import torch

from PIL import Imageimport models_mae

# import sys

# sys.path.append("..")

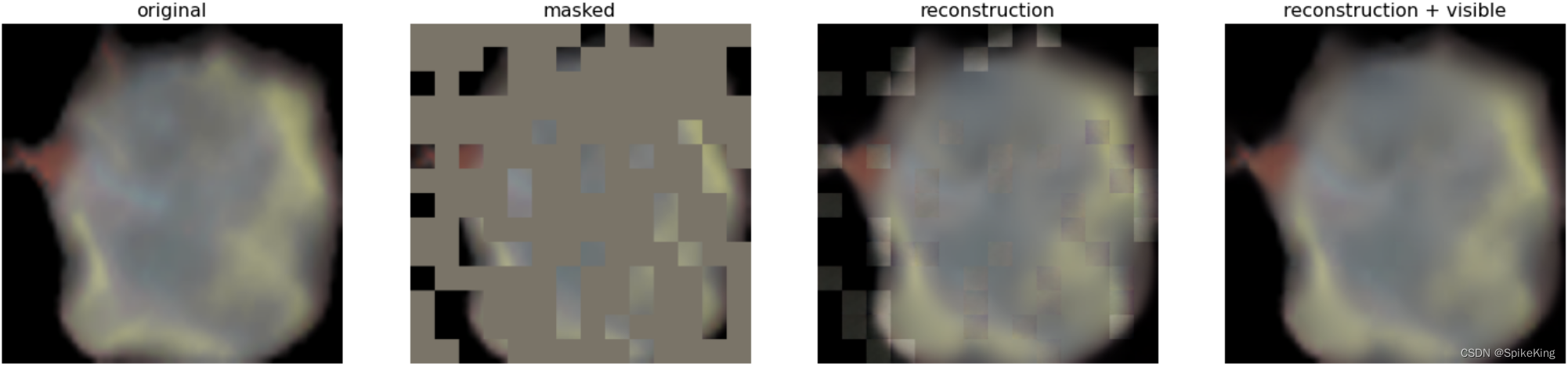

from root_dir import DATA_DIRclass MaeInfer(object):"""MAE的默认推理文件"""def __init__(self):self.imagenet_mean = np.array([0.485, 0.456, 0.406])self.imagenet_std = np.array([0.229, 0.224, 0.225])def show_image(self, image, title=''):# image is [H, W, 3]assert image.shape[2] == 3plt.imshow(torch.clip((image * self.imagenet_std + self.imagenet_mean) * 255, 0, 255).int())plt.title(title, fontsize=16)plt.axis('off')# plt.show()return@staticmethoddef prepare_model(chkpt_dir, arch='mae_vit_large_patch16'):# build modelmodel = getattr(models_mae, arch)()# load modelcheckpoint = torch.load(chkpt_dir, map_location='cpu')msg = model.load_state_dict(checkpoint['model'], strict=False)print(msg)return modeldef run_one_image(self, img, model):x = torch.tensor(img)# make it a batch-likex = x.unsqueeze(dim=0)x = torch.einsum('nhwc->nchw', x)# run MAEloss, y, mask = model(x.float(), mask_ratio=0.75)y = model.unpatchify(y)y = torch.einsum('nchw->nhwc', y).detach().cpu()# visualize the maskmask = mask.detach()mask = mask.unsqueeze(-1).repeat(1, 1, model.patch_embed.patch_size[0] ** 2 * 3) # (N, H*W, p*p*3)mask = model.unpatchify(mask) # 1 is removing, 0 is keepingmask = torch.einsum('nchw->nhwc', mask).detach().cpu()x = torch.einsum('nchw->nhwc', x)# masked imageim_masked = x * (1 - mask)# MAE reconstruction pasted with visible patchesim_paste = x * (1 - mask) + y * mask# make the plt figure largerplt.rcParams['figure.figsize'] = [24, 24]plt.subplot(1, 4, 1)self.show_image(x[0], "original")plt.subplot(1, 4, 2)self.show_image(im_masked[0], "masked")plt.subplot(1, 4, 3)self.show_image(y[0], "reconstruction")plt.subplot(1, 4, 4)self.show_image(im_paste[0], "reconstruction + visible")plt.show()def process(self):img = Image.open(os.path.join(DATA_DIR, "imgs", "l1-b11-p1-r03c05f04p01_0.png"))img = img.resize((224, 224))img = np.array(img) / 255.assert img.shape == (224, 224, 3)# normalize by ImageNet mean and stdimg = img - self.imagenet_meanimg = img / 
self.imagenet_stdplt.rcParams['figure.figsize'] = [5, 5]self.show_image(torch.tensor(img))chkpt_dir = os.path.join(DATA_DIR, "models", "mae_visualize_vit_base.pth")model_mae = self.prepare_model(chkpt_dir, 'mae_vit_base_patch16')print('Model loaded.')torch.manual_seed(2)print('MAE with pixel reconstruction:')self.run_one_image(img, model_mae)def main():mi = MaeInfer()mi.process()if __name__ == '__main__':main()

输出:
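With `mask_ratio=0.75`, MAE hides 75% of the image patches before encoding; for a 224×224 input with 16×16 patches that leaves 49 of 196 patches visible. The numpy sketch below illustrates per-sample random masking by sorting uniform noise, which is the idea behind `random_masking` in the official `models_mae.py`. It is an illustrative reimplementation, not that code: the function name and signature here are hypothetical, and it returns only the kept indices and the mask (1 = removed, 0 = kept, matching the script's comment).

```python
import numpy as np


def random_masking(num_patches: int, mask_ratio: float, batch: int, rng: np.random.Generator):
    """Hypothetical helper: per-sample random masking via argsort of uniform noise.

    Returns (ids_keep, mask), where mask is [batch, num_patches] with
    1 = removed and 0 = kept, in the original patch order.
    """
    len_keep = int(num_patches * (1 - mask_ratio))
    noise = rng.random((batch, num_patches))
    # Sorting random noise gives an independent random permutation per sample.
    ids_shuffle = np.argsort(noise, axis=1)
    # argsort of a permutation is its inverse: shuffled order -> original order.
    ids_restore = np.argsort(ids_shuffle, axis=1)
    ids_keep = ids_shuffle[:, :len_keep]

    # Build the mask in shuffled order (first len_keep kept), then unshuffle it.
    mask = np.ones((batch, num_patches))
    mask[:, :len_keep] = 0
    mask = np.take_along_axis(mask, ids_restore, axis=1)
    return ids_keep, mask


if __name__ == "__main__":
    # A 224x224 input with 16x16 patches has (224 // 16) ** 2 = 196 patches.
    ids_keep, mask = random_masking(196, 0.75, batch=1, rng=np.random.default_rng(2))
    print(ids_keep.shape, int(mask.sum()))
```

The seed matters for the same reason the script calls `torch.manual_seed(2)`: the mask is random, so fixing the seed makes the visualization reproducible.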

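In `run_one_image`, the per-patch mask of shape (N, L) is repeated over each patch's `p*p*3` values and passed through `model.unpatchify` so it can be multiplied with the image, giving `im_paste = x * (1 - mask) + y * mask`. The numpy sketch below shows that layout logic in isolation. The einsum index patterns follow the ones used by MAE's `patchify`/`unpatchify`, but `patchify`, `unpatchify` and `paste_visible` here are hypothetical standalone helpers, not the model's methods. The sketch also stays in NCHW layout, whereas the script converts to NHWC before compositing.

```python
import numpy as np


def patchify(imgs: np.ndarray, p: int) -> np.ndarray:
    """[N, 3, H, W] -> [N, L, p*p*3], with L = (H // p) * (W // p)."""
    n, c, h_px, w_px = imgs.shape
    h, w = h_px // p, w_px // p
    x = imgs.reshape(n, c, h, p, w, p)
    x = np.einsum("nchpwq->nhwpqc", x)
    return x.reshape(n, h * w, p * p * c)


def unpatchify(x: np.ndarray, p: int, c: int = 3) -> np.ndarray:
    """[N, L, p*p*c] -> [N, c, H, W]; assumes a square patch grid."""
    n, num_patches, _ = x.shape
    h = w = int(round(num_patches ** 0.5))
    x = x.reshape(n, h, w, p, p, c)
    x = np.einsum("nhwpqc->nchpwq", x)
    return x.reshape(n, c, h * p, w * p)


def paste_visible(x: np.ndarray, y: np.ndarray, mask: np.ndarray, p: int) -> np.ndarray:
    """Keep visible input patches and fill removed ones from the reconstruction.

    x, y: [N, 3, H, W]; mask: [N, L] with 1 = removed, 0 = kept.
    """
    # Repeat each patch's mask value over its p*p*3 entries, then lay it out as an image.
    mask_px = unpatchify(np.repeat(mask[:, :, None], p * p * 3, axis=2), p)
    return x * (1 - mask_px) + y * mask_px


if __name__ == "__main__":
    p = 16
    rng = np.random.default_rng(0)
    x = rng.random((1, 3, 224, 224))
    y = rng.random((1, 3, 224, 224))
    assert np.array_equal(unpatchify(patchify(x, p), p), x)  # lossless round trip
    mask = np.zeros((1, 196))
    mask[0, 0] = 1  # remove only the top-left patch
    pasted = paste_visible(x, y, mask, p)
    print(np.array_equal(pasted[:, :, :16, :16], y[:, :, :16, :16]),
          np.array_equal(pasted[:, :, 16:, :], x[:, :, 16:, :]))
```

Patch index `l` maps to grid row `l // w` and column `l % w`, so index 0 is the top-left 16×16 block; that ordering is what lets the expanded mask line up with the image pixels.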