本文主要是介绍卷积篇 | YOLOv8改进之C2f模块融合SCConv | 即插即用的空间和通道维度重构卷积,希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

前言:Hello大家好,我是小哥谈。SCConv是一种用于减少特征冗余的卷积神经网络模块。相对于其他流行的SOTA方法,SCConv可以以更低的计算成本获得更高的准确率。它通过在空间和通道维度上进行重构,从而减少了特征图中的冗余信息。这种模块的设计可以提高卷积神经网络的性能。本篇文章所作的改进即在YOLOv8主干网络中的C2f模块融合SCConv!~🌈

目录

🚀1.基础概念

🚀2.网络结构

🚀3.添加步骤

🚀4.改进方法

🍀🍀步骤1:block.py文件修改

🍀🍀步骤2:__init__.py文件修改

🍀🍀步骤3:tasks.py文件修改

🍀🍀步骤4:创建自定义yaml文件

🍀🍀步骤5:新建train.py文件

🍀🍀步骤6:模型训练测试

🚀1.基础概念

SCConv是一种用于减少特征冗余的卷积神经网络模块。相对于其他流行的SOTA方法,SCConv可以以更低的计算成本获得更高的准确率。它通过在空间和通道维度上进行重构,从而减少了特征图中的冗余信息。这种模块的设计可以提高卷积神经网络的性能。

SCConv可以替换各种卷积神经网络中的标准卷积。SCConv的主要思想是在标准卷积中引入了一个可学习的缩放因子,以增强卷积核的表达能力。这个缩放因子可以根据输入数据自适应地学习,从而提高了卷积神经网络的性能。

SCConv的优点包括:

- 可以直接替换各种卷积神经网络中的标准卷积,使用方便。

- 可以自适应地学习缩放因子,提高了卷积核的表达能力。

- 在多个图像分类和目标检测任务中都取得了优异的性能。

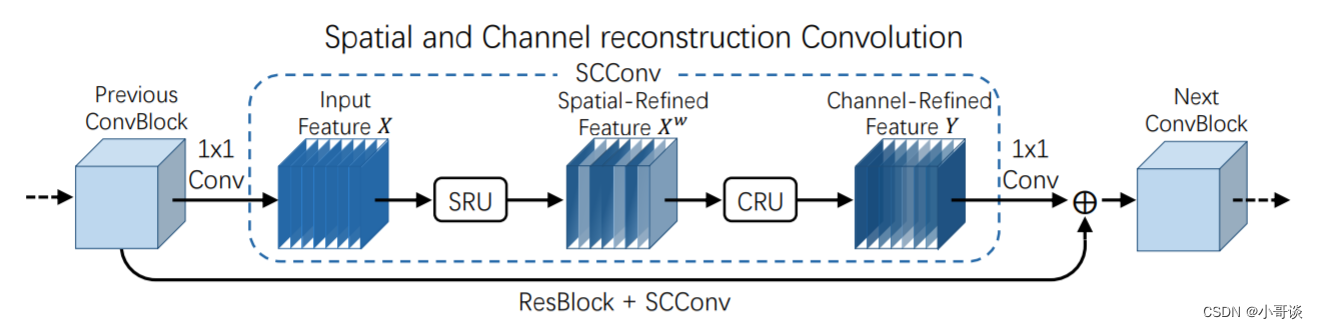

关于SCConv的论文的原理可参考下图。该论文尝试利用特征之间的空间和通道冗余来进行CNN压缩,并提出了一种高效的卷积模块,称为SCConv (spatial and channel reconstruction convolution),以减少冗余计算并促进代表性特征的学习。论文所提出的SCConv由空间重构单元(SRU)和信道重构单元(CRU)两个单元组成。

🍀(1)SRU根据权重分离冗余特征并进行重构,以抑制空间维度上的冗余,增强特征的表征。

🍀(2)CRU采用分裂变换和融合策略来减少信道维度的冗余以及计算成本和存储。

🍀(3)SCConv是一种即插即用的架构单元,可直接用于替代各种卷积神经网络中的标准卷积。

实验结果表明,SCConv嵌入模型能够通过减少冗余特征来获得更好的性能,并且显著降低了复杂度和计算成本。✅

论文题目:《SCConv: Spatial and Channel Reconstruction Convolution for Feature Redundancy》

论文地址: SCConv:用于特征冗余的空间和通道重构卷积

代码实现: GitHub - cheng-haha/ScConv: SCConv: Spatial and Channel Reconstruction Convolution for Feature Redundancy

🚀2.网络结构

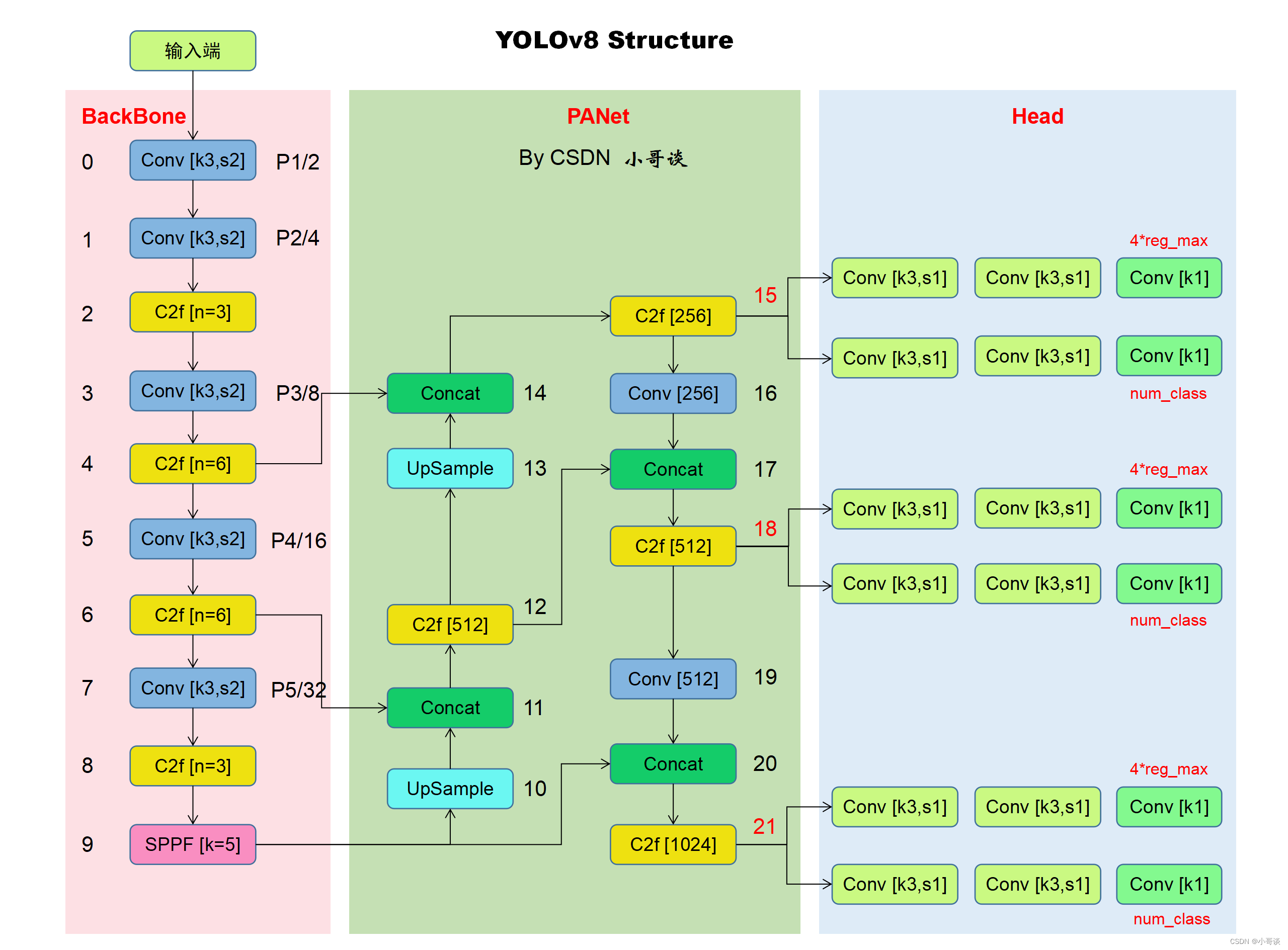

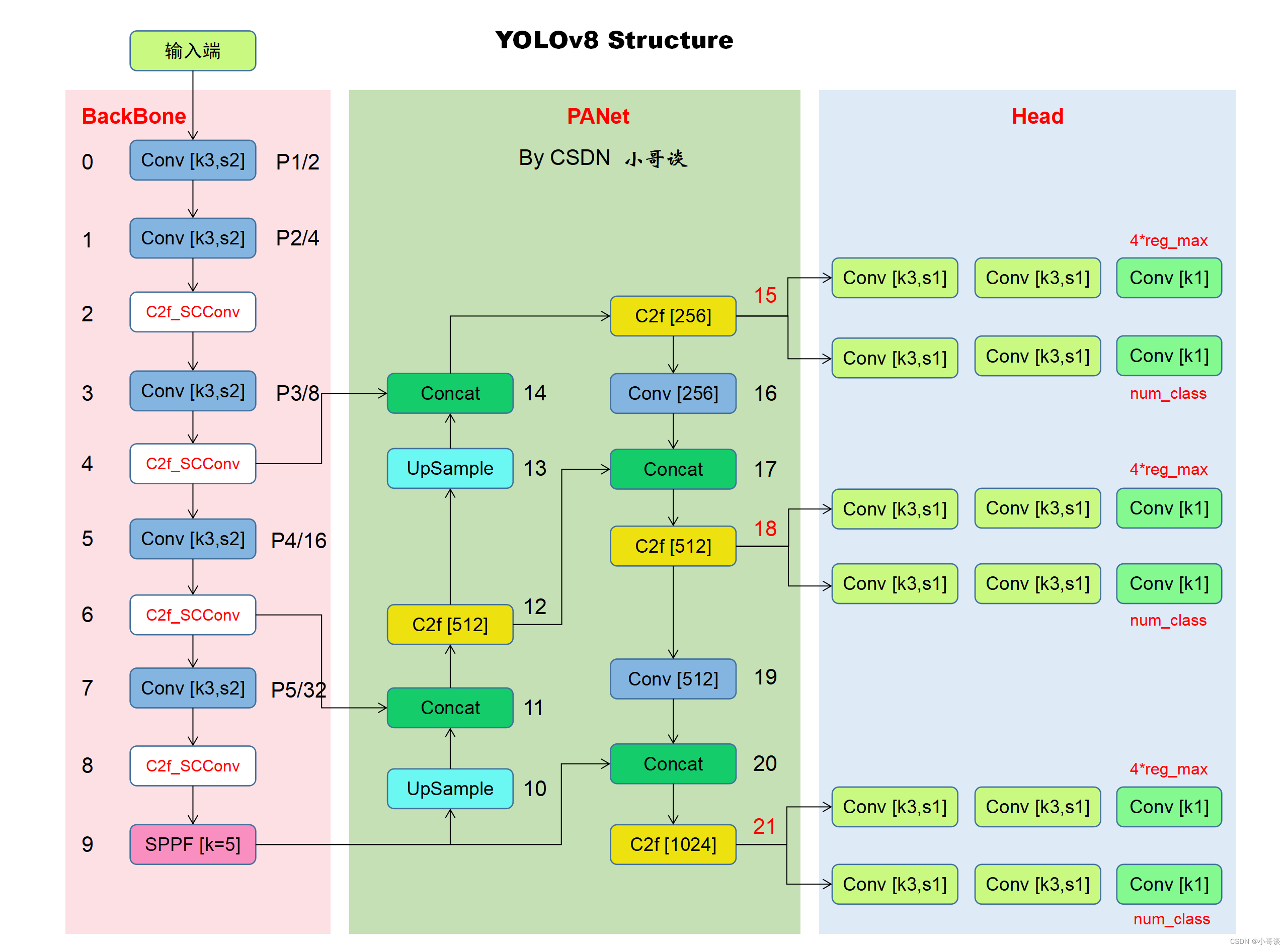

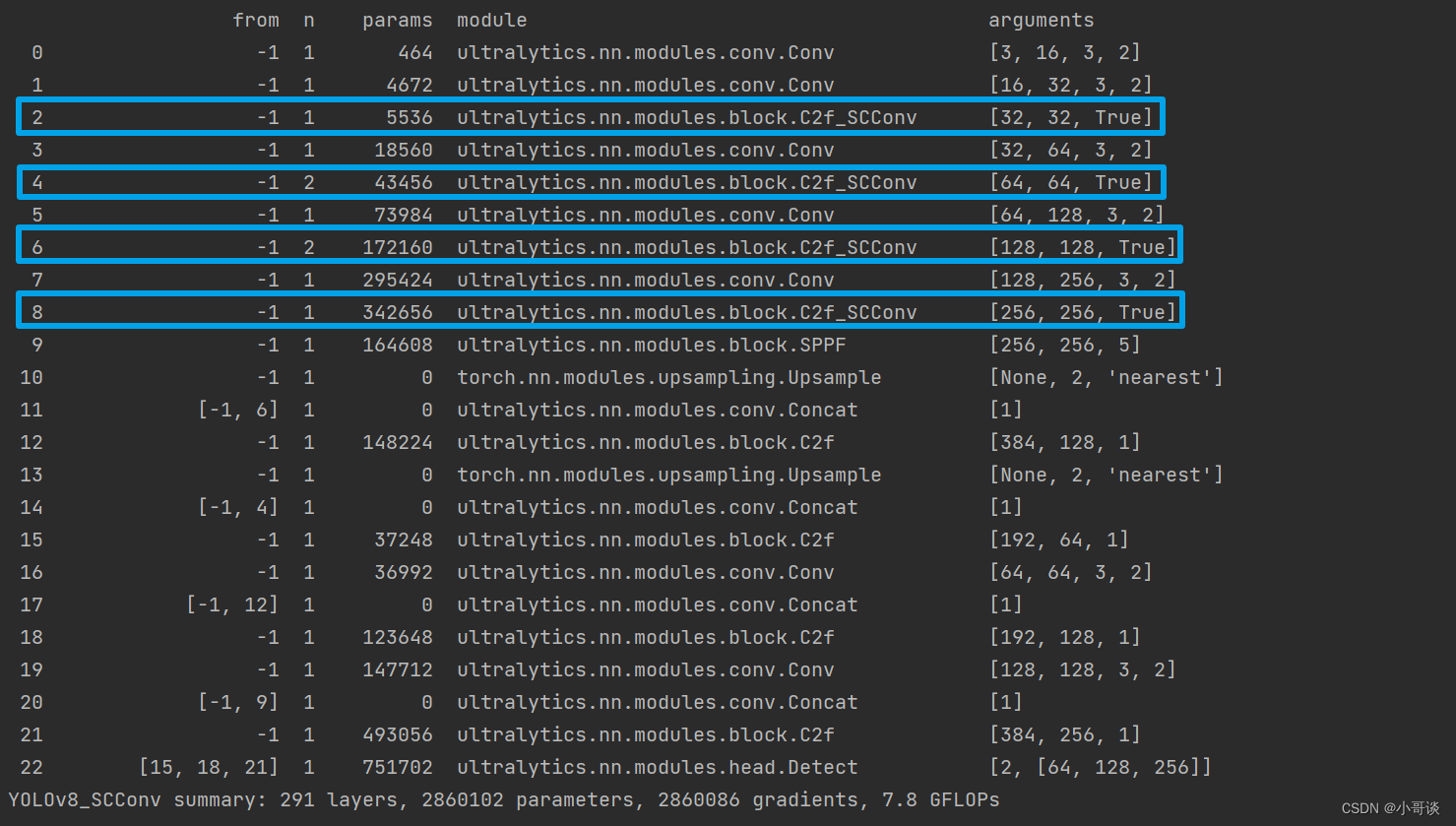

本文的改进是基于YOLOv8,关于其网络结构具体如下图所示:

YOLOv8官方仓库地址:

GitHub - ultralytics/ultralytics: NEW - YOLOv8 🚀 in PyTorch > ONNX > OpenVINO > CoreML > TFLite

本文所作出的改进是在主干网络中的C2f模块融合SCConv,改进后的网络结构图具体如下图所示:

🚀3.添加步骤

针对本文的改进,具体步骤如下所示:👇

步骤1:block.py文件修改

步骤2:__init__.py文件修改

步骤3:tasks.py文件修改

步骤4:创建自定义yaml文件

步骤5:新建train.py文件

步骤6:模型训练测试

🚀4.改进方法

🍀🍀步骤1:block.py文件修改

在源码中找到block.py文件,具体位置是ultralytics/nn/modules/block.py,然后将SCConv模块代码添加到block.py文件末尾位置。

SCConv模块代码:

# C2f模块融合SCConv

# By CSDN 小哥谈

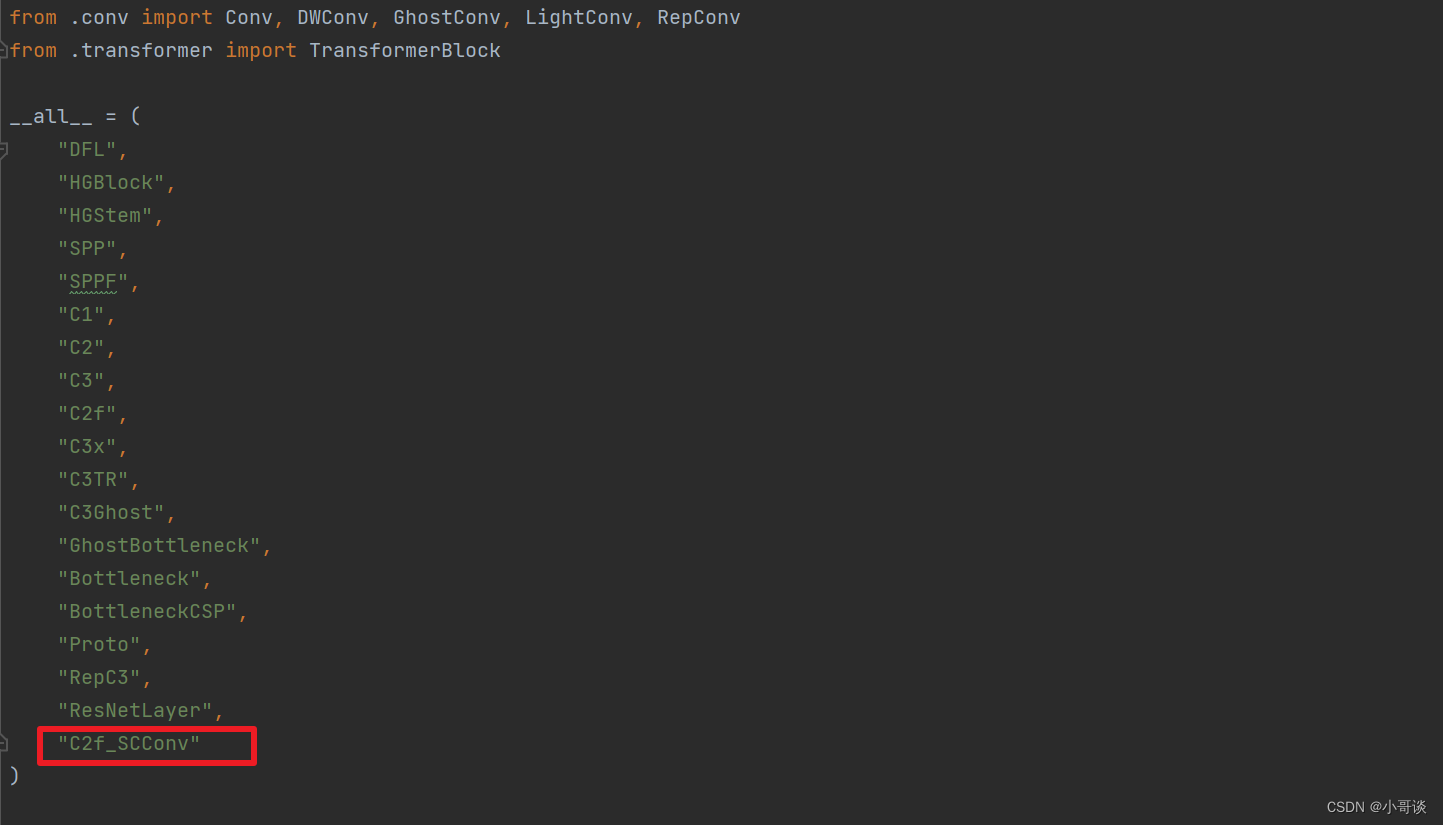

class GroupBatchnorm2d(nn.Module):def __init__(self, c_num: int,group_num: int = 16,eps: float = 1e-10):super(GroupBatchnorm2d, self).__init__()assert c_num >= group_numself.group_num = group_numself.gamma = nn.Parameter(torch.randn(c_num, 1, 1))self.beta = nn.Parameter(torch.zeros(c_num, 1, 1))self.eps = epsdef forward(self, x):N, C, H, W = x.size()x = x.view(N, self.group_num, -1)mean = x.mean(dim=2, keepdim=True)std = x.std(dim=2, keepdim=True)x = (x - mean) / (std + self.eps)x = x.view(N, C, H, W)return x * self.gamma + self.betaclass SRU(nn.Module):def __init__(self,oup_channels: int,group_num: int = 16,gate_treshold: float = 0.5):super().__init__()self.gn = GroupBatchnorm2d(oup_channels, group_num=group_num)self.gate_treshold = gate_tresholdself.sigomid = nn.Sigmoid()def forward(self, x):gn_x = self.gn(x)w_gamma = self.gn.gamma / sum(self.gn.gamma)reweigts = self.sigomid(gn_x * w_gamma)# Gateinfo_mask = reweigts >= self.gate_tresholdnoninfo_mask = reweigts < self.gate_tresholdx_1 = info_mask * xx_2 = noninfo_mask * xx = self.reconstruct(x_1, x_2)return xdef reconstruct(self, x_1, x_2):x_11, x_12 = torch.split(x_1, x_1.size(1) // 2, dim=1)x_21, x_22 = torch.split(x_2, x_2.size(1) // 2, dim=1)return torch.cat([x_11 + x_22, x_12 + x_21], dim=1)class CRU(nn.Module):'''alpha: 0<alpha<1'''def __init__(self,op_channel: int,alpha: float = 1 / 2,squeeze_radio: int = 2,group_size: int = 2,group_kernel_size: int = 3,):super().__init__()self.up_channel = up_channel = int(alpha * op_channel)self.low_channel = low_channel = op_channel - up_channelself.squeeze1 = nn.Conv2d(up_channel, up_channel // squeeze_radio, kernel_size=1, bias=False)self.squeeze2 = nn.Conv2d(low_channel, low_channel // squeeze_radio, kernel_size=1, bias=False)# upself.GWC = nn.Conv2d(up_channel // squeeze_radio, op_channel, kernel_size=group_kernel_size, stride=1,padding=group_kernel_size // 2, groups=group_size)self.PWC1 = nn.Conv2d(up_channel // squeeze_radio, op_channel, kernel_size=1, bias=False)# lowself.PWC2 = nn.Conv2d(low_channel // squeeze_radio, op_channel - low_channel // squeeze_radio, kernel_size=1,bias=False)self.advavg = nn.AdaptiveAvgPool2d(1)def forward(self, x):# Splitup, low = torch.split(x, [self.up_channel, self.low_channel], dim=1)up, low = self.squeeze1(up), self.squeeze2(low)# TransformY1 = self.GWC(up) + self.PWC1(up)Y2 = torch.cat([self.PWC2(low), low], dim=1)# Fuseout = torch.cat([Y1, Y2], dim=1)out = F.softmax(self.advavg(out), dim=1) * outout1, out2 = torch.split(out, out.size(1) // 2, dim=1)return out1 + out2class SCConv(nn.Module):# https://github.com/cheng-haha/ScConv/blob/main/ScConv.pydef __init__(self,op_channel: int,group_num: int = 16,gate_treshold: float = 0.5,alpha: float = 1 / 2,squeeze_radio: int = 2,group_size: int = 2,group_kernel_size: int = 3,):super().__init__()self.SRU = SRU(op_channel,group_num=group_num,gate_treshold=gate_treshold)self.CRU = CRU(op_channel,alpha=alpha,squeeze_radio=squeeze_radio,group_size=group_size,group_kernel_size=group_kernel_size)def forward(self, x):x = self.SRU(x)x = self.CRU(x)return xclass Bottleneck_SCConv(Bottleneck):def __init__(self, c1, c2, shortcut=True, g=1, k=(3, 3), e=0.5):super().__init__(c1, c2, shortcut, g, k, e)c_ = int(c2 * e) # hidden channelsself.cv1 = Conv(c1, c_, k[0], 1)self.cv2 = SCConv(c2)class C2f_SCConv(C2f):def __init__(self, c1, c2, n=1, shortcut=False, g=1, e=0.5):super().__init__(c1, c2, n, shortcut, g, e)self.m = nn.ModuleList(Bottleneck_SCConv(self.c, self.c, shortcut, g, k=(3, 3), e=1.0) for _ in range(n))再然后,在block.py文件最上方下图所示位置加入C2f_SCConv。

🍀🍀步骤2:__init__.py文件修改

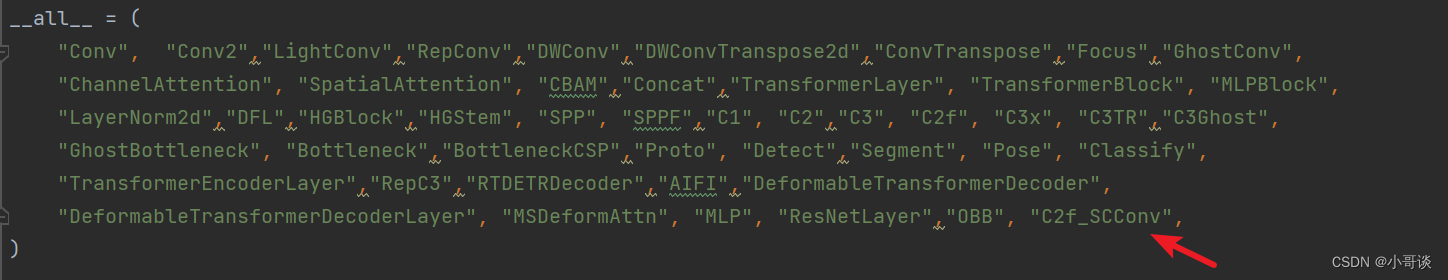

在源码中找到__init__.py文件,具体位置是ultralytics/nn/modules/__init__.py。

修改1:加入C2f_SCConv,具体如下图所示:

修改2:加入C2f_SCConv,具体如下图所示:

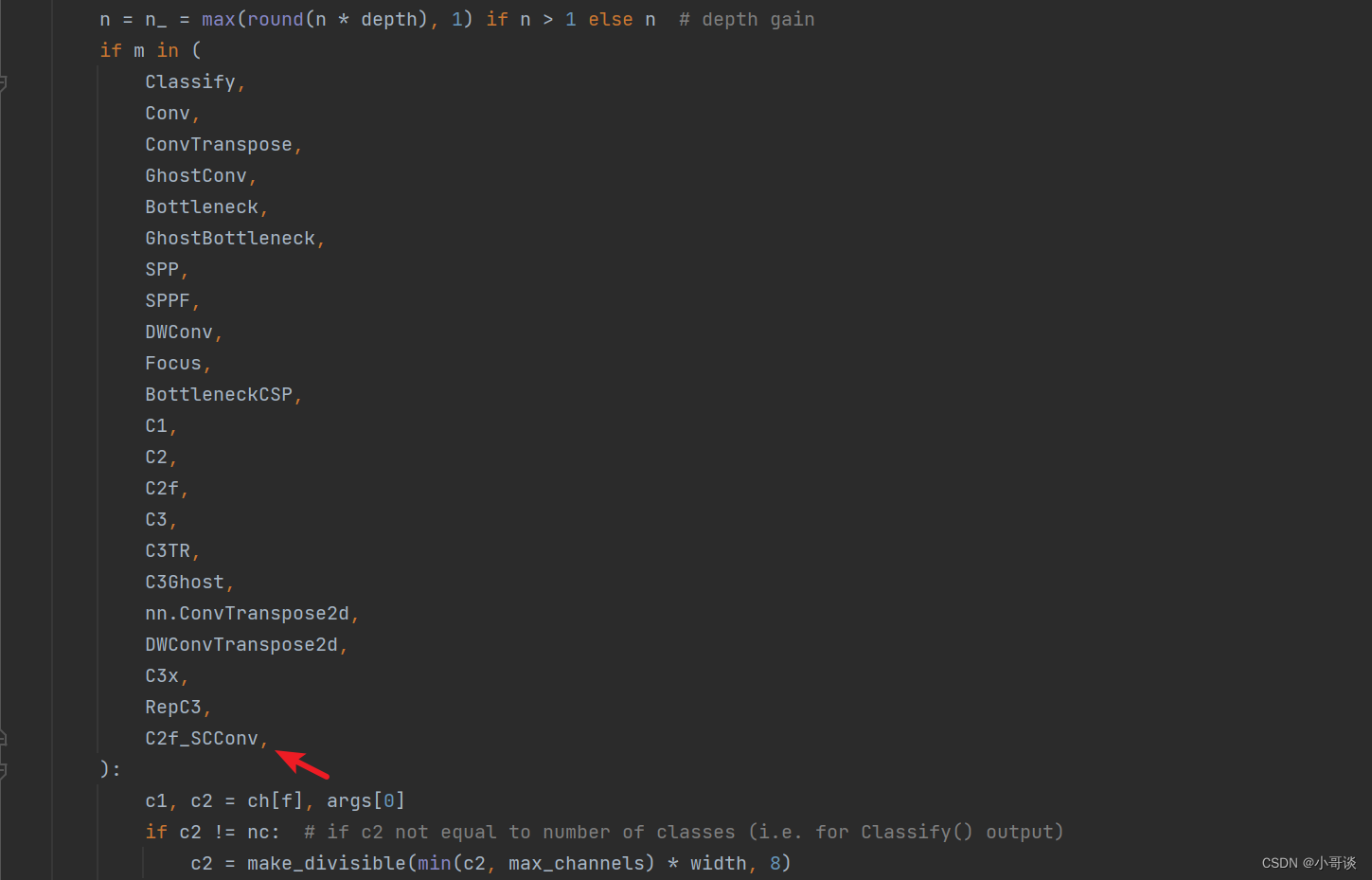

🍀🍀步骤3:tasks.py文件修改

在源码中找到tasks.py文件,具体位置是ultralytics/nn/tasks.py。

然后找到parse_model函数(736行左右),在下图中所示位置添加C2f_SCConv。

最后,在本文件中导入该模块,具体代码如下:

from ultralytics.nn.modules.block import C2f_SCConv🍀🍀步骤4:创建自定义yaml文件

在源码ultralytics/cfg/models/v8目录下创建yaml文件,并命名为:yolov8_SCConv.yaml。具体如下图所示:

yolov8_SCConv.yaml文件完整代码如下所示:

# Ultralytics YOLO 🚀, AGPL-3.0 license

# YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect# Parameters

nc: 80 # number of classes

scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'# [depth, width, max_channels]n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPss: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPsm: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPsl: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPsx: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs# YOLOv8.0n backbone

backbone:# [from, repeats, module, args]- [-1, 1, Conv, [64, 3, 2]] # 0-P1/2- [-1, 1, Conv, [128, 3, 2]] # 1-P2/4- [-1, 3, C2f_SCConv, [128, True]]- [-1, 1, Conv, [256, 3, 2]] # 3-P3/8- [-1, 6, C2f_SCConv, [256, True]]- [-1, 1, Conv, [512, 3, 2]] # 5-P4/16- [-1, 6, C2f_SCConv, [512, True]]- [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32- [-1, 3, C2f_SCConv, [1024, True]]- [-1, 1, SPPF, [1024, 5]] # 9# YOLOv8.0n head

head:- [-1, 1, nn.Upsample, [None, 2, 'nearest']]- [[-1, 6], 1, Concat, [1]] # cat backbone P4- [-1, 3, C2f, [512]] # 12- [-1, 1, nn.Upsample, [None, 2, 'nearest']]- [[-1, 4], 1, Concat, [1]] # cat backbone P3- [-1, 3, C2f, [256]] # 15 (P3/8-small)- [-1, 1, Conv, [256, 3, 2]]- [[-1, 12], 1, Concat, [1]] # cat head P4- [-1, 3, C2f, [512]] # 18 (P4/16-medium)- [-1, 1, Conv, [512, 3, 2]]- [[-1, 9], 1, Concat, [1]] # cat head P5- [-1, 3, C2f, [1024]] # 21 (P5/32-large)- [[15, 18, 21], 1, Detect, [nc]] # Detect(P3, P4, P5)🍀🍀步骤5:新建train.py文件

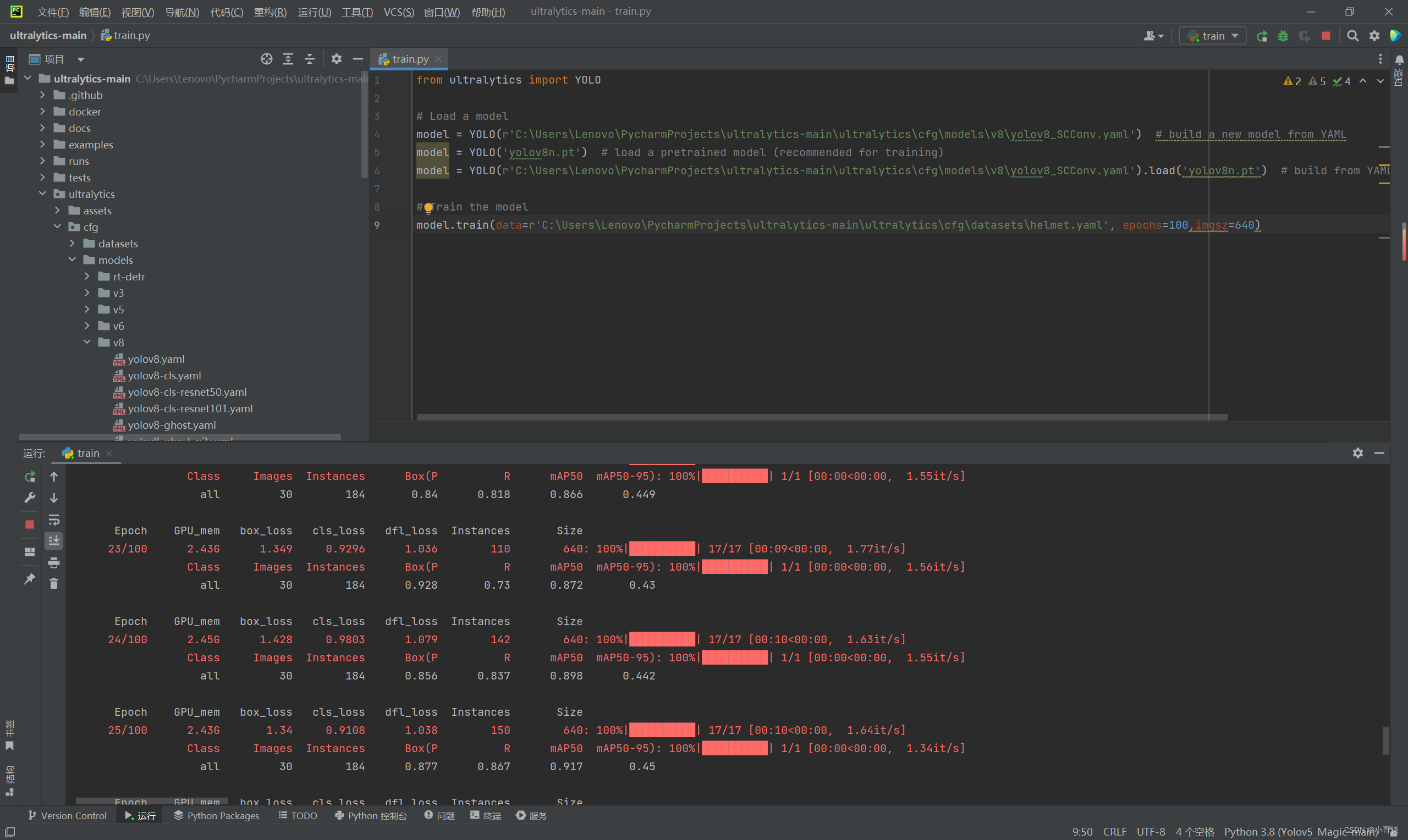

在源码根目录下新建train.py文件,文件完整代码如下所示:

from ultralytics import YOLO# Load a model

model = YOLO(r'C:\Users\Lenovo\PycharmProjects\ultralytics-main\ultralytics\cfg\models\v8\yolov8_SCConv.yaml') # build a new model from YAML

model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

model = YOLO(r'C:\Users\Lenovo\PycharmProjects\ultralytics-main\ultralytics\cfg\models\v8\yolov8_SCConv.yaml').load('yolov8n.pt') # build from YAML and transfer weights# Train the model

model.train(data=r'C:\Users\Lenovo\PycharmProjects\ultralytics-main\ultralytics\cfg\datasets\helmet.yaml', epochs=100,imgsz=640)注意:一定要用绝对路径,以防发生报错。

🍀🍀步骤6:模型训练测试

在train.py文件,点击“运行”,在作者自制的安全帽佩戴检测数据集上,模型可以正常训练。

模型训练过程:

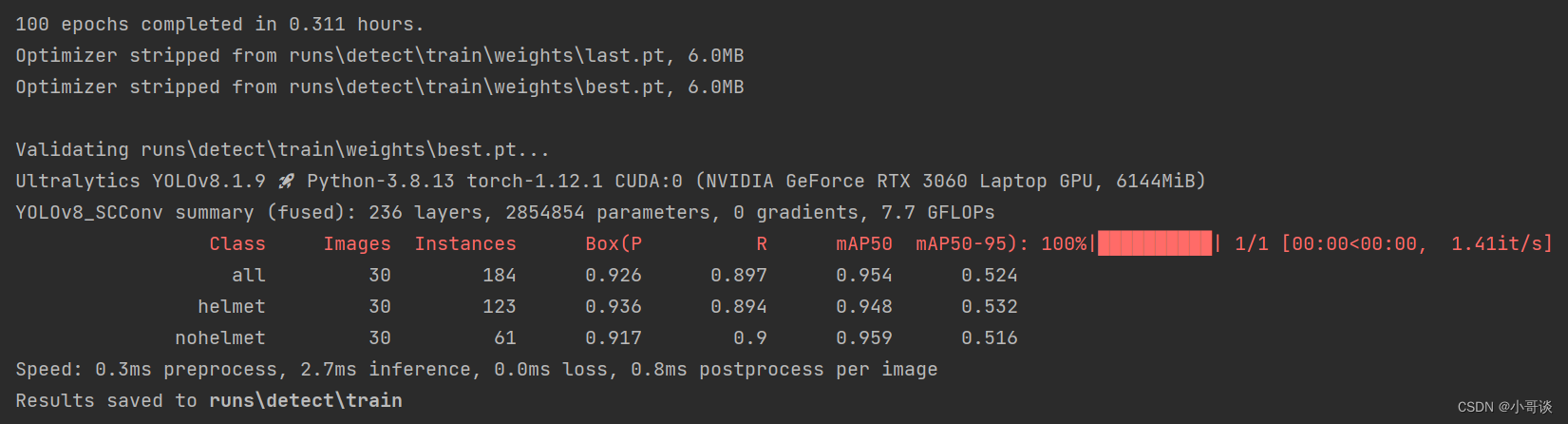

模型训练结果:

这篇关于卷积篇 | YOLOv8改进之C2f模块融合SCConv | 即插即用的空间和通道维度重构卷积的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!