This article introduces the Reuters Corpus (Reuters Corpora) and a few other corpora that ship with NLTK, with short examples of exploring each one.

First, start the Python interpreter from a command prompt and enter:

>>> import nltk

>>> nltk.download()

This brings up the NLTK downloader:

All the files under Corpora are downloaded to C:\nltk_data and take up about 2.78 GB.
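If only the corpora used in this walkthrough are needed, they can also be fetched one package at a time instead of downloading everything. The following is a minimal sketch, assuming the package identifiers `reuters`, `brown`, `webtext`, and `wordnet`. The helper name `download_corpora` and its `download` parameter are made up for illustration; the parameter exists only so the helper can be tried without NLTK or a network connection.

```python
# Package identifiers for the corpora used in this article (assumed names).
CORPORA = ["reuters", "brown", "webtext", "wordnet"]


def download_corpora(names=CORPORA, download=None):
    """Fetch each named package and report which downloads succeeded.

    `download` defaults to nltk.download; it is injectable so the helper
    can be exercised without NLTK or a network connection.
    """
    if download is None:
        import nltk  # imported lazily, only when a real download is wanted
        download = nltk.download
    return {name: bool(download(name)) for name in names}


if __name__ == "__main__":
    # Dry run with a stand-in downloader that pretends every fetch works.
    print(download_corpora(download=lambda name: True))
```

Calling `download_corpora()` with no arguments would hand each name to `nltk.download`, which stores the data in NLTK's data directory.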

Now we can start playing with it.

Loading

from nltk.corpus import reuters

files = reuters.fileids()

#print(files)

words16097 = reuters.words(['test/16097'])

print(words16097)  # print the word list of the file test/16097

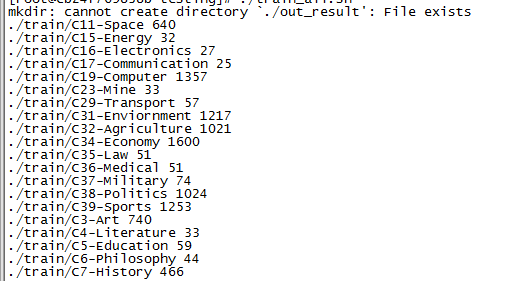

Output:

Too long to display in full…

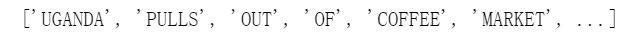
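Printing the word list directly shows only the first few tokens. To look at a longer but still bounded slice, the tokens can be joined by hand. This is a small sketch with a hypothetical helper, `preview`; it works on any iterable of strings, so `reuters.words(['test/16097'])` could be passed in place of the made-up sample list.

```python
from itertools import islice


def preview(words, n=50):
    """Join the first n tokens of any iterable into one string."""
    return " ".join(islice(words, n))


# Made-up tokens for illustration, not taken from the Reuters corpus.
sample = ["Cocoa", "prices", "rose", "sharply", "today", "."]
print(preview(sample, 3))
```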

# Print the topic categories in the Reuters corpus

reutersGenres = reuters.categories()

print(reutersGenres)

Output:

# Print the words in the 'bop' and 'cocoa' categories

for w in reuters.words(categories=['bop', 'cocoa']):
    print(w + ' ', end=' ')
    if w == '.':
        print()  # start a new line after each full stop

Output:
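The loop above breaks the output into lines at each full stop. The same idea can be written as a function that returns the sentences instead of printing them. This is only a sketch: `split_sentences` is a hypothetical helper, and treating every `.` token as a sentence end is a simplification (abbreviations will be split too). NLTK's corpus readers also provide a `sents()` method that may be a better fit for real work.

```python
def split_sentences(tokens, terminator="."):
    """Group a flat token sequence into lists that end at each terminator."""
    sentences, current = [], []
    for tok in tokens:
        current.append(tok)
        if tok == terminator:
            sentences.append(current)
            current = []
    if current:  # keep a trailing fragment that has no terminator
        sentences.append(current)
    return sentences


# Made-up tokens for illustration.
tokens = ["Prices", "rose", ".", "Exports", "fell", ".", "More", "later"]
for sentence in split_sentences(tokens):
    print(" ".join(sentence))
```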

Counting wh-word frequencies in the Brown corpus

import nltk

from nltk.corpus import brown

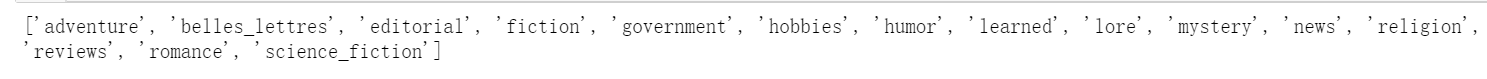

print(brown.categories())  # list of all categories in the Brown corpus

Output:

genres = brown.categories()

whwords = ['what','which','how','why','when','where','who']

for genre in genres:
    print()
    print("Analysing '" + genre + "' wh words")
    # Put the whole text of this genre into genre_text as a list of words
    genre_text = brown.words(categories=genre)
    # FreqDist takes a list of words and returns an object that maps
    # each word to its frequency in that list
    fdist = nltk.FreqDist(genre_text)
    for wh in whwords:
        print(wh + ':', fdist[wh], end=' ')

Output:
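One thing to keep in mind is that the lookup `fdist[wh]` is case-sensitive, so a sentence-initial "What" is not counted under "what". A sketch of a case-insensitive count follows. It uses `collections.Counter` as a stand-in for `nltk.FreqDist` so that it runs without the corpus; the helper name `wh_counts` is hypothetical, and `brown.words(categories=genre)` could be passed in place of the made-up token list.

```python
from collections import Counter

WH_WORDS = ["what", "which", "how", "why", "when", "where", "who"]


def wh_counts(words, wh_words=WH_WORDS):
    """Count wh-words case-insensitively; words never seen count as 0."""
    counts = Counter(w.lower() for w in words)
    return {wh: counts[wh] for wh in wh_words}


# Made-up tokens, not taken from the Brown corpus.
tokens = ["What", "is", "this", "?", "Who", "knows", "what", "and", "why", "."]
for wh, n in wh_counts(tokens).items():
    print(wh + ":", n, end=" ")
print()
```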

Word frequency distribution in webtext

import nltk

from nltk.corpus import webtext

print(webtext.fileids())  # webtext contains 6 .txt files

Output:

fileids = ['firefox.txt', 'grail.txt', 'overheard.txt', 'pirates.txt', 'singles.txt', 'wine.txt']

fileid = fileids[4]  # choose 'singles.txt' as the file to explore

wbt_words = webtext.words(fileid)

fdist = nltk.FreqDist(wbt_words)

# First, look up the frequencies of six arbitrarily chosen words

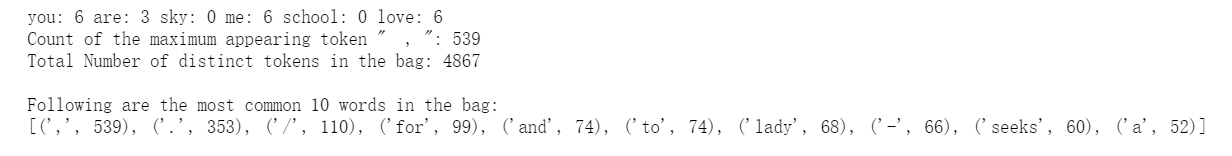

word = ['you', 'are', 'sky', 'me', 'school', 'love']

for i in word:
    print(i + ':', fdist[i], end=' ')

print()

# ------------------------------------------------------

# Show the most frequent token (fdist.max()) and its count (fdist[fdist.max()])

print('Count of the maximum appearing token "', fdist.max(), '":', fdist[fdist.max()])

# N() is the total number of tokens; B() is the number of distinct tokens

print('Total number of tokens in the bag:', fdist.N())

print('Total number of distinct tokens in the bag:', fdist.B())

# The x most common words

print()

print('Following are the most common 10 words in the bag:')

print(fdist.most_common(10))

fdist.plot(cumulative=True)  # plot the cumulative frequency distribution (not a pretty sight...)

Output:
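It is easy to mix up the total number of tokens with the number of distinct tokens in a frequency distribution. The sketch below shows the difference on toy data, using `collections.Counter` as a stand-in for `nltk.FreqDist` so it runs without the corpus. The helper name `summarize` is hypothetical; in NLTK the two quantities correspond to `fdist.N()` and `fdist.B()`.

```python
from collections import Counter


def summarize(tokens, top=3):
    """Return total tokens, distinct tokens, and the `top` most frequent."""
    counts = Counter(tokens)
    return {
        "total": sum(counts.values()),  # corresponds to fdist.N()
        "distinct": len(counts),        # corresponds to fdist.B()
        "most_common": counts.most_common(top),
    }


tokens = "a b a c b a".split()  # toy data
print(summarize(tokens, top=2))
```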

Polysemy, hypernyms, and hyponyms

# Word sense ambiguity

from nltk.corpus import wordnet as wn

word = 'chair'  # use 'chair' as the example

# Query the WordNet database API for the senses of word

word_synsets = wn.synsets(word)

print('Synsets/Senses of', word, ':', word_synsets, '\n\n')

for synset in word_synsets:
    print(synset, ': ')
    print('Definition: ', synset.definition())  # definition
    print('Lemmas/Synonymous words: ', synset.lemma_names())  # synonyms
    print('Example: ', synset.examples())  # example sentences
    print()

Output:

# Hypernyms and hyponyms

from nltk.corpus import wordnet as wn

woman = wn.synset('woman.n.02')

bed = wn.synset('bed.n.01')

# Hypernyms of woman

print('\n\nTypes of woman(Hypernyms): ', woman.hypernyms())  # synsets that are direct hypernyms of woman

woman_paths = woman.hypernym_paths()

for idx, path in enumerate(woman_paths):
    print('\n\nHypernym Path :', idx + 1)
    for synset in path:
        print(synset.name(), ',', end=' ')

# Hyponyms of bed

type_of_beds = bed.hyponyms()

print('\n\nTypes of beds(Hyponyms): ',type_of_beds)

print('\n')

print(sorted(set(lemma.name() for synset in type_of_beds for lemma in synset.lemmas())))

Output:
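To make it clearer what a hypernym path is, the sketch below enumerates every path from a root down to a node in a tiny hand-made taxonomy. The `HYPERNYMS` dictionary and the `hypernym_paths` function are illustrative only: the entries are not copied from WordNet, and the function merely imitates the shape of the result that `Synset.hypernym_paths()` returns (one list per path, ordered from root to the node).

```python
# Toy taxonomy: each node maps to its direct hypernyms (parents).
# These entries are illustrative and are NOT copied from WordNet.
HYPERNYMS = {
    "woman": ["adult", "female"],
    "adult": ["person"],
    "female": ["person"],
    "person": ["entity"],
    "entity": [],
}


def hypernym_paths(node, graph=HYPERNYMS):
    """Return every root-to-node path through the hypernym graph."""
    parents = graph[node]
    if not parents:  # a root has exactly one path: itself
        return [[node]]
    paths = []
    for parent in parents:
        for path in hypernym_paths(parent, graph):
            paths.append(path + [node])
    return paths


for idx, path in enumerate(hypernym_paths("woman"), start=1):
    print("Hypernym Path", idx, ":", " -> ".join(path))
```

A node with two parents yields two paths, which is why a single synset can report more than one hypernym path.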

Average polysemy

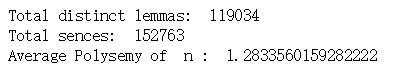

from nltk.corpus import wordnet as wn

pos = 'n'  # part of speech to analyse; 'n' means noun (named pos to avoid shadowing the built-in type)

synsets = wn.all_synsets(pos)  # all synsets of this part of speech in the database

lemmas = []

for synset in synsets:
    for lemma in synset.lemmas():
        lemmas.append(lemma.name())

lemmas = set(lemmas)  # remove duplicates

count = 0

for lemma in lemmas:
    count = count + len(wn.synsets(lemma, pos))  # number of senses of this lemma

print('Total distinct lemmas: ', len(lemmas))

print('Total senses: ', count)

print('Average Polysemy of ', pos, ': ', count / len(lemmas))

Output:
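The calculation boils down to dividing the total number of senses by the number of distinct lemmas. Here is a self-contained sketch of that arithmetic on toy data; the function name `average_polysemy` is hypothetical and the sense labels are placeholders, not real WordNet synsets.

```python
def average_polysemy(senses_by_lemma):
    """Total number of senses divided by the number of distinct lemmas."""
    if not senses_by_lemma:
        raise ValueError("need at least one lemma")
    total = sum(len(senses) for senses in senses_by_lemma.values())
    return total / len(senses_by_lemma)


# Toy data; the sense labels are placeholders, not real WordNet synsets.
toy = {
    "chair": ["seat", "professorship", "chairperson"],
    "bed": ["furniture", "garden plot"],
    "sky": ["atmosphere"],
}
print(average_polysemy(toy))
```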
