本文主要是介绍【论文记录】Stochastic gradient descent with differentially private updates,希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

记录几条疑问

- The sample size required for a target utility level increases with the privacy constraint.

- Optimization methods for large data sets must also be scalable.

- SGD algorithms satisfy asymptotic guarantees

Introduction

-

主要工作简介:

\quad In this paper we derive differentially private versions of single-point SGD and mini-batch SGD, and evaluate them on real and synthetic data sets. -

更多运用SGD的原因:

\quad Stochastic gradient descent (SGD) algorithms are simple and satisfy the same asymptotic guarantees as more computationally intensive learning methods. -

由于asymptotic guarantees带来的影响:

\quad to obtain reasonable performance on finite data sets practitioners must take care in setting parameters such as the learning rate (step size) for the updates. -

上述影响的应对之策:

\quad Grouping updates into “minibatches” to alleviate some of this sensitivity and improve the performance of SGD. This can improve the robustness of the updating at a moderate expense in terms of computation, but also introduces the batch size as a free parameter.

Preliminaries

- 优化目标:

\quad solve a regularized convex optimization problem : w ∗ = argmin w ∈ R d λ 2 ∥ w ∥ 2 + 1 n Σ i = 1 n l ( w , x i , y i ) w^* = \mathop{ \textbf{argmin} } \limits_{ w \in \mathbb{R}^d} \frac{\lambda}{2} \Vert w \Vert ^2 + \frac{1}{n} \mathop{ \Sigma }\limits_{i=1}^n \mathbb{l} (w,x_i,y_i) w∗=w∈Rdargmin2λ∥w∥2+n1i=1Σnl(w,xi,yi)

\quad where w w w is the normal vector to the hyperplane separator, and l \mathbb{l} l is a convex loss function.

\quad 若 l \mathbb{l} l 选为 logistic loss, 即 l ( w , x , y ) = l o g ( 1 + e − y w T x ) \mathbb{l} (w,x,y)=log(1+e^{-yw^Tx}) l(w,x,y)=log(1+e−ywTx), 则 ⇒ \Rightarrow ⇒ Logistic Regression

\quad 若 l \mathbb{l} l 选为 hinge loss, 即 l ( w , x , y ) = \mathbb{l} (w,x,y)= l(w,x,y)= max ( 0 , 1 − y w T x ) (0,1-yw^Tx) (0,1−ywTx), 则 ⇒ \Rightarrow ⇒ SVM

- 优化算法:

\quad SGD with mini-batch updates : w t + 1 = w t − η t ( λ w t + 1 b Σ ( x i , y i ) ∈ B t ▽ l ( w t , x i , y i ) ) w_{t+1} = w_t - \eta_t \Big( \lambda w_t + \frac{1}{b} \mathop{\Sigma}\limits_{ (x_i,y_i) \in B_t} \triangledown \mathbb{l} (w_t,x_i,y_i) \Big) wt+1=wt−ηt(λwt+b1(xi,yi)∈BtΣ▽l(wt,xi,yi))

\quad where η t \eta_t ηt is a learning rate, the update at each step t t t is based on a small subset B t B_t Bt of examples of size b b b.

SGD with Differential Privacy

- 满足差分隐私的 mini-batch SGD :

\quad A differentially-private version of the mini-batch update : w t + 1 = w t − η t ( λ w t + 1 b Σ ( x i , y i ) ∈ B t ▽ l ( w t , x i , y i ) + 1 b Z t ) w_{t+1} = w_t - \eta_t \Big( \lambda w_t + \frac{1}{b} \mathop{\Sigma}\limits_{ (x_i,y_i) \in B_t} \triangledown \mathbb{l} (w_t,x_i,y_i) \,+ \frac{1}{b}Z_t \Big) wt+1=wt−ηt(λwt+b1(xi,yi)∈BtΣ▽l(wt,xi,yi)+b1Zt)

\quad where Z t Z_t Zt is a random noise vector in R d \mathbb R ^d Rd drawn independently from the density: ρ ( z ) ∝ e − ( α / 2 ) ∥ z ∥ \rho(z) \propto e^{-(\alpha/2) \|z\|} ρ(z)∝e−(α/2)∥z∥

- 使用上式的 mini-batch update 时, 此种updates满足 α \alpha α-differentially private的条件:

\quad T h e o r e m \mathcal{Theorem \,} Theorem If the initialization point w o w_o wo is chosen independent of the sensitive data, the batches B t B_t Bt are disjoint, and if ∥ ▽ l ( w , x , y ) ∥ ≤ 1 \| \triangledown \mathbb l(w,x,y)\| \leq 1 ∥▽l(w,x,y)∥≤1 for all w w w, and all ( x i , y i ) (x_i,y_i) (xi,yi), then SGD with mini-batch updates is α \alpha α-differentially private.

Experiments

-

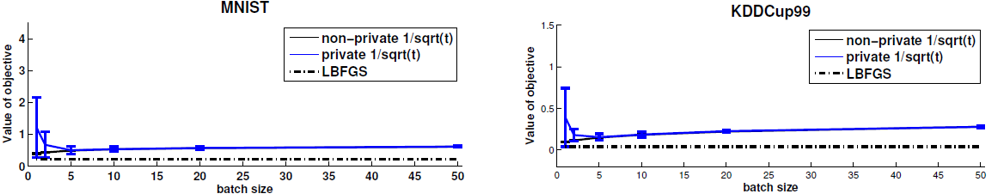

实验现象:

\quad batch size 为1时DP-SGD的方差比普通的SGD更大。但 batch size 调大后则方差减小了很多。

-

由此而总结出的经验:

\quad In terms of objective value, guaranteeing differential privacy can come for “free” using SGD with moderate batch size.

-

实际上 batch size 带来的影响是先减后增

\quad increasing the batch size improved the performance of private SGD, but there is a limit , much larger batch sizes actually degrade performance.

额外记录几条经验

- 数据维度 d d d与隐私保护参数会影响实验所需的数据量:

\quad Differentially private learning algorithms often have a sample complexity that scales linearly with the data dimension d d d and inversely with the privacy risk α \alpha α. Thus a moderate reduction in α \alpha α or increase in d d d may require more data.

Ref

S. Song, K. Chaudhuri, and A. Sarwate. Stochastic gradient descent with differentially private updates. In GlobalSIP Conference, 2013.

这篇关于【论文记录】Stochastic gradient descent with differentially private updates的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!