Study notes on Natural Language Processing with Python (2nd edition) by Steven Bird et al.: Chapter 07, Extracting Information from Text

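Chapter 07: Extracting Information from Text

- 7.1 Information Extraction
  - Information Extraction Architecture
- 7.2 Chunking
  - Noun Phrase Chunking
  - Tag Patterns
  - Exploring Text Corpora
  - Chinking
  - Representing Chunks: Tags vs Trees
- 7.3 Developing and Evaluating Chunkers
  - Reading IOB Format and the CoNLL 2000 Chunking Corpus
  - Simple Evaluation and Baselines
  - Training Classifier-Based Chunkers
- 7.4 Recursion in Linguistic Structure
  - Building Nested Structure with Cascaded Chunkers
  - Trees
  - Tree Traversal
- 7.5 Named Entity Recognition
- 7.6 Relation Extraction
- 7.7 Summary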

import nltk, re, pprint

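This chapter answers the following questions:

- How can we build a system that extracts structured data from unstructured text?
- What are some robust methods for identifying the entities and relationships described in a text?
- Which corpora are appropriate for this work, and how do we use them to train and evaluate our models?

7.1 Information Extraction

Information Extraction Architecture

A simple information extraction system has a pipeline architecture. It takes the raw text of a document as input and produces a list of (entity, relation, entity) tuples as output. For example, given a document stating that the company Georgia-Pacific is located in Atlanta, it might generate the tuple ([ORG: 'Georgia-Pacific'] 'in' [LOC: 'Atlanta']).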

def ie_preprocess(document):
    sentences = nltk.sent_tokenize(document)                      # sentence segmenter
    sentences = [nltk.word_tokenize(sent) for sent in sentences]  # word tokenizer
    sentences = [nltk.pos_tag(sent) for sent in sentences]        # part-of-speech tagger
    return sentences

7.2 Chunking

Like tokenization, which omits whitespace, chunking usually selects only a subset of the tokens. The pieces a chunker produces must not overlap in the source text.

Noun Phrase Chunking

In NP-chunking, NP chunks are marked with square brackets, for example:

[ The/DT market/NN ] for/IN [ system-management/NN software/NN ] for/IN [ Digital/NNP ] [ 's/POS hardware/NN ] is/VBZ fragmented/JJ enough/RB that/IN [ a/DT giant/NN ] such/JJ as/IN [ Computer/NNP Associates/NNPS ] should/MD do/VB well/RB there/RB ./.

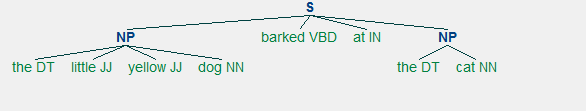

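One of the most useful sources of information for NP-chunking is part-of-speech tags. To create an NP chunker, we first define a chunk grammar, made up of rules that say how sentences should be chunked. The example below defines a simple grammar with a single regular-expression rule. The rule says that an NP chunk consists of an optional determiner (DT), followed by any number of adjectives (JJ), and then a noun (NN). Using this grammar we create a chunk parser and test it on the example sentence. The result is a tree, which we can print or display graphically.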

Example 7-1. A simple regular-expression-based NP chunker

sentence = [("the", "DT"), ("little", "JJ"), ("yellow", "JJ"), ("dog", "NN"), ("barked", "VBD"), ("at", "IN"), ("the", "DT"), ("cat", "NN")]

grammar = "NP: {<DT>?<JJ>*<NN>}"

cp = nltk.RegexpParser(grammar)

result = cp.parse(sentence)

print(result)

(S
  (NP the/DT little/JJ yellow/JJ dog/NN)
  barked/VBD
  at/IN
  (NP the/DT cat/NN))

result.draw()

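Tag Patterns

The rules that make up a chunk grammar use tag patterns to describe sequences of tagged words. A tag pattern is a sequence of part-of-speech tags delimited by angle brackets, such as <DT>?<JJ>*<NN>. Consider the following tagged noun phrases:

another/DT sharp/JJ dive/NN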

trade/NN figures/NNS

any/DT new/JJ policy/NN measures/NNS

earlier/JJR stages/NNS

Panamanian/JJ dictator/NN Manuel/NNP Noriega/NNP

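Example 7-2 shows a simple chunk grammar consisting of two rules. The first rule matches an optional determiner or possessive pronoun, zero or more adjectives, and then a noun. The second rule matches one or more proper nouns.

Example 7-2. A simple noun phrase chunker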

grammar = r"""

NP: {<DT|PP\$>?<JJ>*<NN>} # chunk determiner/possessive, adjectives and nouns

{<NNP>+} # chunk sequences of proper nouns

"""

cp = nltk.RegexpParser(grammar)

sentence = [("Rapunzel", "NNP"), ("let", "VBD"), ("down", "RP"),("her", "PP$"), ("long", "JJ"), ("golden", "JJ"), ("hair", "NN")]

print(cp.parse(sentence))

(S
  (NP Rapunzel/NNP)
  let/VBD
  down/RP
  (NP her/PP$ long/JJ golden/JJ hair/NN))

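Note: the $ symbol is a special character in regular expressions, so it must be escaped with a backslash (\$) to match the PP$ tag.

If a tag pattern matches at overlapping locations, the leftmost match takes precedence.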

nouns = [("money", "NN"), ("market", "NN"), ("fund", "NN")]

grammar = "NP: {<NN><NN>} # Chunk two consecutive nouns"

cp = nltk.RegexpParser(grammar)

print(cp.parse(nouns))

(S (NP money/NN market/NN) fund/NN)

grammar = "NP: {<NN>+}"  # a more permissive chunk rule

cp = nltk.RegexpParser(grammar)

print(cp.parse(nouns))

(S (NP money/NN market/NN fund/NN))

Exploring Text Corpora

A chunker can also be used to search a tagged corpus for a pattern, here a verb followed by "to" and another verb:

cp = nltk.RegexpParser('CHUNK: {<V.*> <TO> <V.*>}')
brown = nltk.corpus.brown
for sent in brown.tagged_sents():
    tree = cp.parse(sent)
    for subtree in tree.subtrees():
        if subtree.label() == 'CHUNK':
            print(subtree)

(CHUNK combined/VBN to/TO achieve/VB)
(CHUNK continue/VB to/TO place/VB)
(CHUNK serve/VB to/TO protect/VB)
(CHUNK transfer/VB to/TO ride/VB)
(CHUNK managed/VBN to/TO automate/VB)
(CHUNK want/VB to/TO buy/VB)


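Chinking

Chinking is the process of removing a sequence of tokens from a chunk. If the matching sequence spans an entire chunk, the whole chunk is removed. If it appears in the middle of a chunk, those tokens are removed, leaving two chunks where there was only one before. If it appears at the edge of a chunk, those tokens are removed and a smaller chunk remains.

Example 7-3. A simple chinker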

grammar = r"""

NP:

{<.*>+} # Chunk everything

}<VBD|IN>+{ # Chink sequences of VBD and IN

"""

sentence = [("the", "DT"), ("little", "JJ"), ("yellow", "JJ"),

("dog", "NN"), ("barked", "VBD"), ("at", "IN"), ("the", "DT"), ("cat", "NN")]

cp = nltk.RegexpParser(grammar)

print(cp.parse(sentence))

(S
  (NP the/DT little/JJ yellow/JJ dog/NN)
  barked/VBD
  at/IN
  (NP the/DT cat/NN))
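The three chinking cases (whole chunk, middle, edge) can be illustrated with a small standalone sketch. It does not use NLTK: the helper name chink is hypothetical, and it simplifies matters by treating the chink pattern as a set of tags rather than a tag sequence. The example chunk [a/DT little/JJ dog/NN] is an assumption chosen for illustration.

```python
def chink(chunk, chink_tags):
    """Remove tokens whose tag is in chink_tags and return the remaining pieces.

    chunk is a list of (word, pos) pairs; the result is a list of smaller chunks.
    Hypothetical helper for illustration only, not NLTK's implementation.
    """
    pieces, current = [], []
    for word, pos in chunk:
        if pos in chink_tags:
            # A chinked token closes whatever piece was being built.
            if current:
                pieces.append(current)
            current = []
        else:
            current.append((word, pos))
    if current:
        pieces.append(current)
    return pieces


np_chunk = [("a", "DT"), ("little", "JJ"), ("dog", "NN")]

whole = chink(np_chunk, {"DT", "JJ", "NN"})  # the chink spans the entire chunk
middle = chink(np_chunk, {"JJ"})             # the chink is in the middle
edge = chink(np_chunk, {"NN"})               # the chink is at the edge

print(whole)   # no chunk is left
print(middle)  # two chunks where there was one
print(edge)    # one smaller chunk
```

With NLTK itself the same effect is obtained through a }...{ rule in the grammar, as in Example 7-3.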

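Representing Chunks: Tags vs Trees

Chunk structures can be represented using either tags or trees. The most widespread representation uses IOB tags. In this scheme, each token is tagged with one of three special chunk tags: I (inside), O (outside), or B (begin). A token is tagged B if it marks the beginning of a chunk. Subsequent tokens within the chunk are tagged I. All other tokens are tagged O. The B and I tags are suffixed with the chunk type, e.g. B-NP, I-NP.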
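As a minimal sketch of how IOB tags encode chunk boundaries, the following standalone function groups IOB-tagged tokens back into chunks. It is plain Python rather than NLTK's own conversion code (NLTK provides nltk.chunk.conlltags2tree for this); the name iob_to_chunks and the sample sentence are assumptions made for illustration.

```python
def iob_to_chunks(tagged):
    """Group (word, pos, iob) triples into (chunk_type, [words]) pairs.

    Hypothetical helper for illustration; not part of NLTK.
    """
    chunks = []
    current_type, current_words = None, []
    for word, pos, iob in tagged:
        starts_new = iob.startswith("B-") or (
            iob.startswith("I-") and current_type != iob[2:]
        )
        if starts_new:
            # A B tag (or an I tag of a different type) opens a new chunk.
            if current_words:
                chunks.append((current_type, current_words))
            current_type, current_words = iob[2:], [word]
        elif iob.startswith("I-"):
            # An I tag continues the chunk that is currently open.
            current_words.append(word)
        else:
            # An O tag is outside any chunk, so close the open chunk if any.
            if current_words:
                chunks.append((current_type, current_words))
            current_type, current_words = None, []
    if current_words:
        chunks.append((current_type, current_words))
    return chunks


tagged = [
    ("We", "PRP", "B-NP"),
    ("saw", "VBD", "O"),
    ("the", "DT", "B-NP"),
    ("yellow", "JJ", "I-NP"),
    ("dog", "NN", "I-NP"),
]
chunks = iob_to_chunks(tagged)
print(chunks)  # [('NP', ['We']), ('NP', ['the', 'yellow', 'dog'])]
```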

7.3 Developing and Evaluating Chunkers

Reading IOB Format and the CoNLL 2000 Chunking Corpus

import nltk
text = '''
he PRP B-NP
accepted VBD B-VP
the DT B-NP
position NN I-NP
of IN B-PP
vice NN B-NP
chairman NN I-NP
of IN B-PP
Carlyle NNP B-NP
Group NNP I-NP
, , O
a DT B-NP
merchant NN I-NP
banking NN I-NP
concern NN I-NP
. . O
'''
nltk.chunk.conllstr2tree(text, chunk_types=['NP']).draw()

from nltk.corpus import conll2000

print(conll2000.chunked_sents('train.txt')[99])

(S
  (PP Over/IN)
  (NP a/DT cup/NN)
  (PP of/IN)
  (NP coffee/NN)
  ,/,
  (NP Mr./NNP Stone/NNP)
  (VP told/VBD)
  (NP his/PRP$ story/NN)
  ./.)

Simple Evaluation and Baselines

from nltk.corpus import conll2000
cp = nltk.RegexpParser("")  # establish a baseline: a chunk parser that creates no chunks

test_sents = conll2000.chunked_sents('test.txt', chunk_types=['NP'])

print(cp.evaluate(test_sents))

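ChunkParse score:
    IOB Accuracy:  43.4%%
    Precision:      0.0%%
    Recall:         0.0%%
    F-Measure:      0.0%%

The IOB tag accuracy indicates that more than a third of the words are tagged O, i.e. they are not in an NP chunk. However, since our chunker did not find any chunks, its precision, recall and F-measure are all zero.

grammar = r"NP: {<[CDJNP].*>+}"  # tags beginning with letters characteristic of noun phrase tags (e.g. CD, DT and JJ)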

cp = nltk.RegexpParser(grammar)

print(cp.evaluate(test_sents))

ChunkParse score:
    IOB Accuracy:  87.7%%
    Precision:     70.6%%
    Recall:        67.8%%
    F-Measure:     69.2%%
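To make the reported figures easier to read, here is a minimal sketch of how precision, recall and F-measure relate to each other. It is not NLTK's scoring code: the function names and the toy chunk sets are assumptions, and chunks are represented simply as (start, end, type) tuples that count as correct only when they match a gold chunk exactly.

```python
def f_measure(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def chunk_scores(gold, guessed):
    """Score guessed chunks against gold chunks, both given as sets."""
    correct = len(gold & guessed)
    precision = correct / len(guessed) if guessed else 0.0
    recall = correct / len(gold) if gold else 0.0
    return precision, recall, f_measure(precision, recall)


# Toy data: three gold chunks; the chunker proposes four, two of them correct.
gold = {(0, 1, "NP"), (2, 5, "NP"), (6, 8, "NP")}
guessed = {(0, 1, "NP"), (2, 4, "NP"), (6, 8, "NP"), (9, 10, "NP")}

precision, recall, f = chunk_scores(gold, guessed)
print(precision, recall, f)

# A chunker that proposes nothing scores zero on all three measures.
baseline = chunk_scores(gold, set())
print(baseline)

# The F-measure reported above follows from the reported precision and recall.
print(round(f_measure(70.6, 67.8), 1))  # 69.2
```

The last line shows why the regular-expression chunker's F-measure is 69.2: it is the harmonic mean of its precision (70.6) and recall (67.8).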

Example 7-4. Noun phrase chunking with a unigram tagger

class UnigramChunker(nltk.ChunkParserI):
    def __init__(self, train_sents):
        # Train on (POS tag, chunk tag) pairs: the tagger learns a chunk tag for each POS tag.
        train_data = [[(t, c) for w, t, c in nltk.chunk.tree2conlltags(sent)]
                      for sent in train_sents]
        self.tagger = nltk.UnigramTagger(train_data)

    def parse(self, sentence):
        pos_tags = [pos for (word, pos) in sentence]
        tagged_pos_tags = self.tagger.tag(pos_tags)
        chunktags = [chunktag for (pos, chunktag) in tagged_pos_tags]
        conlltags = [(word, pos, chunktag) for ((word, pos), chunktag)
                     in zip(sentence, chunktags)]
        return nltk.chunk.conlltags2tree(conlltags)

test_sents = conll2000.chunked_sents('test.txt', chunk_types=['NP'])

train_sents = conll2000.chunked_sents('train.txt', chunk_types=['NP'])

unigram_chunker = UnigramChunker(train_sents)

print(unigram_chunker.evaluate(test_sents))

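ChunkParse score:
    IOB Accuracy:  92.9%%
    Precision:     79.9%%
    Recall:        86.8%%
    F-Measure:     83.2%%

This chunker does reasonably well, achieving an overall F-measure of 83%.

To see what it has learned, we use its unigram tagger to assign a chunk tag to each part-of-speech tag that appears in the corpus:

postags = sorted(set(pos for sent in train_sents
                     for (word, pos) in sent.leaves()))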

print(unigram_chunker.tagger.tag(postags))

[('#', 'B-NP'), ('$', 'B-NP'), ("''", 'O'), ('(', 'O'), (')', 'O'), (',', 'O'), ('.', 'O'), (':', 'O'), ('CC', 'O'), ('CD', 'I-NP'), ('DT', 'B-NP'), ('EX', 'B-NP'), ('FW', 'I-NP'), ('IN', 'O'), ('JJ', 'I-NP'), ('JJR', 'B-NP'), ('JJS', 'I-NP'), ('MD', 'O'), ('NN', 'I-NP'), ('NNP', 'I-NP'), ('NNPS', 'I-NP'), ('NNS', 'I-NP'), ('PDT', 'B-NP'), ('POS', 'B-NP'), ('PRP', 'B-NP'), ('PRP$', 'B-NP'), ('RB', 'O'), ('RBR', 'O'), ('RBS', 'B-NP'), ('RP', 'O'), ('SYM', 'O'), ('TO', 'O'), ('UH', 'O'), ('VB', 'O'), ('VBD', 'O'), ('VBG', 'O'), ('VBN', 'O'), ('VBP', 'O'), ('VBZ', 'O'), ('WDT', 'B-NP'), ('WP', 'B-NP'), ('WP$', 'B-NP'), ('WRB', 'O'), ('``', 'O')]
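The table above is essentially a lookup from each part-of-speech tag to the chunk tag it most often carries in the training data. The sketch below reproduces that idea in plain Python on a tiny made-up training set. It is an illustration of the principle, not NLTK's UnigramTagger; the function name and the toy data are assumptions.

```python
from collections import Counter, defaultdict


def train_unigram_lookup(train_sents):
    """Map each POS tag to the chunk tag seen with it most often.

    train_sents is a list of sentences, each a list of (pos, chunk_tag) pairs.
    """
    counts = defaultdict(Counter)
    for sent in train_sents:
        for pos, chunk_tag in sent:
            counts[pos][chunk_tag] += 1
    return {pos: c.most_common(1)[0][0] for pos, c in counts.items()}


# Toy training data (assumed for illustration): NN is I-NP twice and B-NP once.
toy_train = [
    [("DT", "B-NP"), ("JJ", "I-NP"), ("NN", "I-NP"), ("VBD", "O")],
    [("DT", "B-NP"), ("NN", "I-NP"), ("IN", "O"), ("NN", "B-NP")],
]

lookup = train_unigram_lookup(toy_train)
print(lookup)
```

Because each POS tag is mapped without looking at its neighbours, such a chunker always gives NN the same chunk tag, even when the noun actually begins a new chunk, as in the second toy sentence.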

Having built a unigram chunker, it is easy to build a bigram chunker: we only need to change the class name to BigramChunker and use nltk.BigramTagger in place of nltk.UnigramTagger.

class BigramChunker(nltk.ChunkParserI):
    def __init__(self, train_sents):
        train_data = [[(t, c) for w, t, c in nltk.chunk.tree2conlltags(sent)]
                      for sent in train_sents]
        self.tagger = nltk.BigramTagger(train_data)

    def parse(self, sentence):
        pos_tags = [pos for (word, pos) in sentence]
        tagged_pos_tags = self.tagger.tag(pos_tags)
        chunktags = [chunktag for (pos, chunktag) in tagged_pos_tags]
        conlltags = [(word, pos, chunktag) for ((word, pos), chunktag)
                     in zip(sentence, chunktags)]
        return nltk.chunk.conlltags2tree(conlltags)

bigram_chunker = BigramChunker(train_sents)

print(bigram_chunker.evaluate(test_sents))

ChunkParse score:
    IOB Accuracy:  93.3%%
    Precision:     82.3%%
    Recall:        86.8%%
    F-Measure:     84.5%%

Training Classifier-Based Chunkers

Example 7-5. Noun phrase chunking with a consecutive classifier

import nltk

class ConsecutiveNPChunkTagger(nltk.TaggerI):
    def __init__(self, train_sents):
        train_set = []
        for tagged_sent in train_sents:
            untagged_sent = nltk.tag.untag(tagged_sent)
            history = []
            for i, (word, tag) in enumerate(tagged_sent):
                featureset = npchunk_features(untagged_sent, i, history)
                train_set.append((featureset, tag))
                history.append(tag)
        self.classifier = nltk.MaxentClassifier.train(
            train_set, algorithm='megam', trace=0)

    def tag(self, sentence):
        history = []
        for i, word in enumerate(sentence):
            featureset = npchunk_features(sentence, i, history)
            tag = self.classifier.classify(featureset)
            history.append(tag)
        # Return a list rather than a one-shot zip iterator (Python 3).
        return list(zip(sentence, history))

class ConsecutiveNPChunker(nltk.ChunkParserI):
    def __init__(self, train_sents):
        tagged_sents = [[((w, t), c) for (w, t, c) in nltk.chunk.tree2conlltags(sent)]
                        for sent in train_sents]
        self.tagger = ConsecutiveNPChunkTagger(tagged_sents)

    def parse(self, sentence):
        tagged_sents = self.tagger.tag(sentence)
        conlltags = [(w, t, c) for ((w, t), c) in tagged_sents]
        return nltk.chunk.conlltags2tree(conlltags)

# First feature extractor: use only the POS tag of the current token.
def npchunk_features(sentence, i, history):
    word, pos = sentence[i]
    return {"pos": pos}

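from nltk.classify import MaxentClassifier
nltk.config_megam('./megam_32.opt')

Note: because my environment is 64-bit, the error raised by the following calls could not be resolved for now, so they are left commented out. A possible workaround, not tested in these notes, is to pass one of NLTK's built-in training algorithms (for example algorithm='IIS' or algorithm='GIS') to MaxentClassifier.train instead of 'megam'. These need no external binary but train more slowly.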

#chunker = ConsecutiveNPChunker(train_sents)

#print(chunker.evaluate(test_sents))

# Second feature extractor: add the current word and the previous POS tag.
def npchunk_features(sentence, i, history):
    word, pos = sentence[i]
    if i == 0:
        prevword, prevpos = "<START>", "<START>"
    else:
        prevword, prevpos = sentence[i-1]
    return {"pos": pos, "word": word, "prevpos": prevpos}

#chunker = ConsecutiveNPChunker(train_sents)

#print(chunker.evaluate(test_sents))

# Third feature extractor: add lookahead, paired tags, and the tags seen since the last determiner.
def npchunk_features(sentence, i, history):
    word, pos = sentence[i]
    if i == 0:
        prevword, prevpos = "<START>", "<START>"
    else:
        prevword, prevpos = sentence[i-1]
    if i == len(sentence)-1:
        nextword, nextpos = "<END>", "<END>"
    else:
        nextword, nextpos = sentence[i+1]
    return {"pos": pos,
            "word": word,
            "prevpos": prevpos,
            "nextpos": nextpos,
            "prevpos+pos": "%s+%s" % (prevpos, pos),
            "pos+nextpos": "%s+%s" % (pos, nextpos),
            "tags-since-dt": tags_since_dt(sentence, i)}

def tags_since_dt(sentence, i):
    tags = set()
    for word, pos in sentence[:i]:
        if pos == 'DT':
            tags = set()
        else:
            tags.add(pos)
    return '+'.join(sorted(tags))

#chunker = ConsecutiveNPChunker(train_sents)

#print(chunker.evaluate(test_sents))
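Since the classifier itself could not be trained here, it may still help to see what the tags-since-dt feature produces. The sketch below repeats the tags_since_dt function from above so that it runs on its own, and applies it to the example sentence used earlier in these notes. No NLTK is needed. The variable name features is an assumption.

```python
def tags_since_dt(sentence, i):
    """Return the sorted, '+'-joined set of POS tags seen since the last determiner."""
    tags = set()
    for word, pos in sentence[:i]:
        if pos == 'DT':
            tags = set()  # a determiner resets the history
        else:
            tags.add(pos)
    return '+'.join(sorted(tags))


sentence = [("the", "DT"), ("little", "JJ"), ("yellow", "JJ"), ("dog", "NN"),
            ("barked", "VBD"), ("at", "IN"), ("the", "DT"), ("cat", "NN")]

# Pair each word with the feature value computed at its position.
features = [(word, tags_since_dt(sentence, i))
            for i, (word, pos) in enumerate(sentence)]

for word, value in features:
    print(word, repr(value))
```

For "dog" the value is 'JJ', for "at" it is 'JJ+NN+VBD', and for "cat" it is the empty string, because the determiner immediately before it resets the set. The feature therefore summarizes what has appeared since the most recent likely start of a noun phrase.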

7.4 Recursion in Linguistic Structure

Building Nested Structure with Cascaded Chunkers

Example 7-6. A chunker that handles NP, PP, VP and S

grammar = r"""

NP: {<DT|JJ|NN.*>+} # Chunk sequences of DT, JJ, NN

PP: {<IN><NP>} # Chunk prepositions followed by NP

VP: {<VB.*><NP|PP|CLAUSE>+$} # Chunk verbs and their arguments

CLAUSE: {<NP><VP>} # Chunk NP, VP

"""

cp = nltk.RegexpParser(grammar)

sentence = [("Mary", "NN"), ("saw", "VBD"), ("the", "DT"), ("cat", "NN"),

("sit", "VB"), ("on", "IN"), ("the", "DT"), ("mat", "NN")]

print(cp.parse(sentence))

(S
  (NP Mary/NN)
  saw/VBD
  (CLAUSE
    (NP the/DT cat/NN)
    (VP sit/VB (PP on/IN (NP the/DT mat/NN)))))

sentence = [("John", "NNP"), ("thinks", "VBZ"), ("Mary", "NN"),

("saw", "VBD"), ("the", "DT"), ("cat", "NN"), ("sit", "VB"),

("on", "IN"), ("the", "DT"), ("mat", "NN")]

print(cp.parse(sentence))

(S
  (NP John/NNP)
  thinks/VBZ
  (NP Mary/NN)
  saw/VBD
  (CLAUSE
    (NP the/DT cat/NN)
    (VP sit/VB (PP on/IN (NP the/DT mat/NN)))))

cp = nltk.RegexpParser(grammar, loop=2)

print(cp.parse(sentence))

(S
  (NP John/NNP)
  thinks/VBZ
  (CLAUSE
    (NP Mary/NN)
    (VP
      saw/VBD
      (CLAUSE
        (NP the/DT cat/NN)
        (VP sit/VB (PP on/IN (NP the/DT mat/NN)))))))

Trees

tree1 = nltk.Tree('NP', ['Alice'])

print(tree1)

(NP Alice)

tree2 = nltk.Tree('NP', ['the', 'rabbit'])

print(tree2)

(NP the rabbit)

tree3 = nltk.Tree('VP', ['chased', tree2])

tree4 = nltk.Tree('S', [tree1, tree3])

print(tree4)

(S (NP Alice) (VP chased (NP the rabbit)))

print(tree4[1])

(VP chased (NP the rabbit))

tree4[1].label()

'VP'

tree4.leaves()

['Alice', 'chased', 'the', 'rabbit']

tree4[1][1][1]

'rabbit'

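Tree Traversal

Example 7-7. A recursive function to traverse a tree

def traverse(t):
    try:
        t.label()
    except AttributeError:
        # t has no label, so it is a leaf (a plain string)
        print(t, end=" ")
    else:
        # Now we know that t.label() is defined, so t is a tree
        print('(', t.label(), end=" ")
        for child in t:
            traverse(child)
        print(')', end=" ")

# In NLTK 3 a tree is built from a bracketed string with Tree.fromstring
t = nltk.Tree.fromstring('(S (NP Alice) (VP chased (NP the rabbit)))')
traverse(t)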

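7.5 Named Entity Recognition

# ne_chunk expects a single tagged sentence, not the whole corpus (sentence 22 is the one used in the book)
sent = nltk.corpus.treebank.tagged_sents()[22]
print(nltk.ne_chunk(sent, binary=True))  # binary=True labels every named entity as NE
print(nltk.ne_chunk(sent))               # otherwise entities get category labels such as PERSON, ORGANIZATION and GPE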

7.6 Relation Extraction

IN = re.compile(r'.*\bin\b(?!\b.+ing)')
for doc in nltk.corpus.ieer.parsed_docs('NYT_19980315'):
    for rel in nltk.sem.extract_rels('ORG', 'LOC', doc, corpus='ieer', pattern=IN):
        print(nltk.sem.rtuple(rel))

[ORG: 'WHYY'] 'in' [LOC: 'Philadelphia']

[ORG: 'McGlashan & Sarrail'] 'firm in' [LOC: 'San Mateo']

[ORG: 'Freedom Forum'] 'in' [LOC: 'Arlington']

[ORG: 'Brookings Institution'] ', the research group in' [LOC: 'Washington']

[ORG: 'Idealab'] ', a self-described business incubator based in' [LOC: 'Los Angeles']

[ORG: 'Open Text'] ', based in' [LOC: 'Waterloo']

[ORG: 'WGBH'] 'in' [LOC: 'Boston']

[ORG: 'Bastille Opera'] 'in' [LOC: 'Paris']

[ORG: 'Omnicom'] 'in' [LOC: 'New York']

[ORG: 'DDB Needham'] 'in' [LOC: 'New York']

[ORG: 'Kaplan Thaler Group'] 'in' [LOC: 'New York']

[ORG: 'BBDO South'] 'in' [LOC: 'Atlanta']

[ORG: 'Georgia-Pacific'] 'in' [LOC: 'Atlanta']
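The regular expression does the real filtering here, so it is worth trying it on its own. The sketch below applies the same IN pattern, with re.match, to a few filler strings, i.e. the text between the two entities. Three fillers are taken from the output above. The fourth is an illustrative string added to show the negative lookahead rejecting "in" followed by a gerund. That extract_rels tests the filler this way is my understanding of its behaviour rather than something shown in these notes.

```python
import re

# Same pattern as above: anything, then the word "in",
# not followed later in the string by text ending in "ing".
IN = re.compile(r'.*\bin\b(?!\b.+ing)')

fillers = [
    "in",
    "firm in",
    ", based in",
    "success in supervising the transition of",  # illustrative counter-example
]

# Record whether the pattern matches from the start of each filler.
matches = {filler: bool(IN.match(filler)) for filler in fillers}

for filler, ok in matches.items():
    print(repr(filler), "->", ok)
```

The first three fillers match, while the last does not: after the word "in" the remaining text contains "supervising", so the negative lookahead (?!\b.+ing) fails and the candidate relation is discarded.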

from nltk.corpus import conll2002

vnv = """
(
is/V|    # 3rd sing present and
was/V|   # past forms of the verb zijn ('be')
werd/V|  # and also present
wordt/V  # past of worden ('become')
)
.*       # followed by anything
van/Prep # followed by van ('of')
"""

VAN = re.compile(vnv, re.VERBOSE)

for doc in conll2002.chunked_sents('ned.train'):
    for r in nltk.sem.extract_rels('PER', 'ORG', doc, corpus='conll2002', pattern=VAN):
        print(nltk.sem.clause(r, relsym="VAN"))

VAN("cornet_d'elzius", 'buitenlandse_handel')

VAN('johan_rottiers', 'kardinaal_van_roey_instituut')

VAN('annie_lennox', 'eurythmics')

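7.7 Summary

- Information extraction systems search large bodies of unstructured text for specific types of entities and relations, and use them to populate well-organized databases. These databases can then be used to find answers to specific questions.

- The typical architecture of an information extraction system begins with sentence segmentation, followed by tokenization and part-of-speech tagging. The resulting data is then searched for specific types of entities. Finally, the system looks at entities mentioned near one another in the text and tries to determine whether specific relationships hold between them.

- Entity recognition is often performed using chunkers, which segment multi-token sequences and label them with the appropriate entity type. Common entity types include ORGANIZATION, PERSON, LOCATION, DATE, TIME, MONEY, and GPE (geo-political entity).

- Chunkers can be built with rule-based systems, such as the RegexpParser class provided by NLTK, or with machine learning techniques, such as the ConsecutiveNPChunker presented in this chapter. In both cases, part-of-speech tags are often a very important feature when searching for chunks.

- Although chunkers are designed to build relatively flat data structures in which no two chunks may overlap, they can be cascaded together to build nested structures.

- Relation extraction can be performed with rule-based systems, which typically look for specific patterns in the text that connect entities and the intervening words, or with machine learning systems, which typically try to learn such patterns automatically from a training corpus.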

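Acknowledgments

Natural Language Processing with Python [1][2][3][4], by Steven Bird, Ewan Klein & Edward Loper, is a very practical introductory text. The first edition appeared in 2009 and the second in 2015. These study notes draw on both editions and extend parts of the material with further study and exercises. They are shared here in the hope that they are useful. Discussion is welcome, and so are corrections.

References

[1] NLTK, http://nltk.org/

[2] Steven Bird, Ewan Klein & Edward Loper, Natural Language Processing with Python, 2009

[3] Bird, Klein & Loper, 《Python自然语言处理》 (Chinese edition of Natural Language Processing with Python), Southeast University Press, 2010

[4] Steven Bird, Ewan Klein & Edward Loper, Natural Language Processing with Python, 2015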
