This article introduces contrast enhancement with OpenCV in C++: 1. multi-scale Retinex; 2. LICE-Retinex; 3. adaptive logarithmic mapping.

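Common contrast-enhancement methods, namely histogram equalization, gamma transforms and logarithmic transforms, can handle most everyday industrial problems.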
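For reference, gamma and logarithmic transforms on 8-bit images are usually precomputed as a 256-entry lookup table. The sketch below is plain C++ (no OpenCV) so it stands on its own; `gamma_lut` and `log_lut` are hypothetical names, and the log-transform constant c = 255 / ln(256), which maps 255 to 255, is one common choice rather than something taken from this article. In OpenCV, such a table would typically be applied with `cv::LUT`.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>

// Gamma transform s = 255 * (r / 255)^gamma, tabulated for r = 0..255.
// gamma < 1 brightens dark regions, gamma > 1 darkens them.
std::array<std::uint8_t, 256> gamma_lut(double gamma)
{
    assert(gamma > 0);
    std::array<std::uint8_t, 256> lut{};
    for (int r = 0; r < 256; ++r) {
        const double s = 255.0 * std::pow(r / 255.0, gamma);
        lut[r] = static_cast<std::uint8_t>(std::lround(s));
    }
    return lut;
}

// Log transform s = c * ln(1 + r), with c chosen so that 255 maps to 255.
std::array<std::uint8_t, 256> log_lut()
{
    const double c = 255.0 / std::log(256.0);
    std::array<std::uint8_t, 256> lut{};
    for (int r = 0; r < 256; ++r) {
        const double s = c * std::log(1.0 + r);
        lut[r] = static_cast<std::uint8_t>(std::lround(s));
    }
    return lut;
}
```

Both curves keep 0 and 255 fixed and differ only in how they redistribute the values in between.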

This installment covers three contrast-enhancement algorithms that work well: 1. multi-scale Retinex; 2. LICE-Retinex; 3. adaptive logarithmic mapping.


1.1 Multi-scale Retinex

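The core idea of Retinex theory is that an object's color is determined by how well it reflects long-wave (red), medium-wave (green) and short-wave (blue) light, not by the absolute intensity of the reflected light. An object's color is therefore unaffected by non-uniform illumination and stays consistent; in other words, Retinex theory is built on color constancy. As shown in the figure below, the image S seen by an observer is the incident light L reflected by the object's surface, while the reflectance R is determined by the object itself and does not change with the incident light L.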
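In formula form, using the S, R and L of the paragraph above, the observed image is the product of reflectance and illumination, so taking logarithms turns the separation into a subtraction. The Gaussian surround $G_\sigma$ (with $*$ denoting convolution) is the usual single-scale Retinex (SSR) estimate of the illumination; it is standard notation rather than something spelled out in this article:

$$
S(x,y) = R(x,y)\cdot L(x,y) \;\Longrightarrow\; \log R(x,y) = \log S(x,y) - \log L(x,y)
$$

$$
r_\sigma(x,y) = \log S(x,y) - \log\big[G_\sigma(x,y) * S(x,y)\big]
$$

A small σ keeps more local detail, while a large σ gives better color fidelity, which is the trade-off described next.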

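Single-scale Retinex (SSR) has to strike a balance between color fidelity and detail preservation, and the best balance usually differs from image to image. To address this, Jobson, Rahman et al. proposed the multi-scale Retinex algorithm (MSR): the image is filtered with Gaussians at several scales, and the filtered results are combined by weighted averaging to obtain the estimated illumination image. Put simply, MSR amounts to running SSR several times.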
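Written out, MSR computes $r_{MSR}(x,y) = \sum_k w_k \{\log S(x,y) - \log[G_{\sigma_k} * S](x,y)\}$, usually with $\sum_k w_k = 1$. Below is a minimal plain C++ sketch on a single-channel float image stored row-major in a `std::vector<float>`. It is an illustration, not the author's OpenCV `multi_scale_retinex` from the full program at the end of this article: the helper names are hypothetical, the Gaussian is built explicitly from σ with a radius of 3σ and clamped borders, and 1 is added to every pixel before taking logarithms to avoid log(0).

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Separable Gaussian blur of a w x h single-channel image (clamped borders).
std::vector<float> gaussian_blur(const std::vector<float>& img, int w, int h, double sigma)
{
    const int radius = std::max(1, static_cast<int>(std::ceil(3 * sigma)));
    std::vector<double> k(2 * radius + 1);
    double sum = 0;
    for (int i = -radius; i <= radius; ++i) {
        k[i + radius] = std::exp(-(i * i) / (2 * sigma * sigma));
        sum += k[i + radius];
    }
    for (double& v : k) v /= sum;                      // normalized kernel

    std::vector<float> tmp(img.size()), out(img.size());
    for (int y = 0; y < h; ++y)                        // horizontal pass
        for (int x = 0; x < w; ++x) {
            double acc = 0;
            for (int i = -radius; i <= radius; ++i)
                acc += k[i + radius] * img[y * w + std::clamp(x + i, 0, w - 1)];
            tmp[y * w + x] = static_cast<float>(acc);
        }
    for (int y = 0; y < h; ++y)                        // vertical pass
        for (int x = 0; x < w; ++x) {
            double acc = 0;
            for (int i = -radius; i <= radius; ++i)
                acc += k[i + radius] * tmp[std::clamp(y + i, 0, h - 1) * w + x];
            out[y * w + x] = static_cast<float>(acc);
        }
    return out;
}

// MSR: weighted sum over scales of log(S + 1) - log(G_sigma * (S + 1)).
std::vector<float> msr(const std::vector<float>& S, int w, int h,
                       const std::vector<double>& sigmas, const std::vector<double>& weights)
{
    assert(sigmas.size() == weights.size());
    assert(S.size() == static_cast<size_t>(w) * h);
    std::vector<float> S1(S.size());
    for (size_t i = 0; i < S.size(); ++i) S1[i] = S[i] + 1.0f;   // avoid log(0)

    std::vector<float> r(S.size(), 0.0f);
    for (size_t s = 0; s < sigmas.size(); ++s) {
        const std::vector<float> blur = gaussian_blur(S1, w, h, sigmas[s]);
        for (size_t i = 0; i < S.size(); ++i)
            r[i] += static_cast<float>(weights[s] * (std::log(S1[i]) - std::log(blur[i])));
    }
    return r;
}
```

A uniform image yields zero everywhere (nothing differs from its surround), and a pixel brighter than its neighborhood yields a positive value; the raw output still has to be mapped back to [0, 255], which is what the `gain` and `offset` parameters of the full program's version are for.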

1.2 LICE-Retinex (Retinex enhancement for low-light images)

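A few key points about this algorithm:

- It has two parts: the first is global adaptation, the second is local adaptation;

- It is based on the Retinex algorithm;

- Its local adaptation part uses a guided filter.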

1.2.1 Global adaptation

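This part is not based on Retinex. It first computes the log-average luminance and the maximum luminance of the image, then compresses the image's dynamic range with a logarithmic function to increase contrast.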
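Reading it off the `LICE_retinex` code in this article, the global step is $L_g = \log(L_w/\bar L_w + 1) / \log(L_{w,\max}/\bar L_w + 1)$, where $\bar L_w = \exp\big(\frac{1}{N}\sum \log(L_w + \delta)\big)$ is the log-average luminance and δ = 1e-3 is the small offset that code uses. A plain C++ sketch on a vector of luminance values follows; `global_adaptation` is a hypothetical name, and it assumes at least one non-zero pixel.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Global adaptation: compress luminance Lw into [0, 1] with a log curve
// anchored at the log-average luminance.
std::vector<double> global_adaptation(const std::vector<double>& Lw)
{
    assert(!Lw.empty());
    const double delta = 1e-3;                            // avoids log(0)
    double logSum = 0;
    for (double v : Lw) logSum += std::log(v + delta);
    const double LwAver = std::exp(logSum / Lw.size());   // log-average luminance
    const double LwMax = *std::max_element(Lw.begin(), Lw.end());
    assert(LwMax > 0);

    const double denom = std::log(LwMax / LwAver + 1.0);  // normalizes LwMax to 1
    std::vector<double> Lg(Lw.size());
    for (size_t i = 0; i < Lw.size(); ++i)
        Lg[i] = std::log(Lw[i] / LwAver + 1.0) / denom;
    return Lg;
}
```

Black maps to 0 and the brightest pixel to 1; because the curve is logarithmic and anchored at the log-average, dark pixels are lifted proportionally more than bright ones.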

1.2.2 Local adaptation

The difficult part is understanding Retinex and the guided filter.

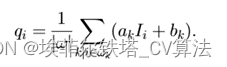
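As written in the `LICE_retinex` code of this article, the local adaptation step (executed only when `LocalAdaptation` is true; it defaults to false) can be summarized as below. Here $L_g$ is the globally adapted luminance; $H_g$ is the result of `GuidedFilter(Lg, Lp, 10, 0.01f)`, with $L_g$ as the guidance image and $L_p$, a grayscale dilation of $L_g$ with a square kernel of side max(3, rows/100, cols/100), as the input; and η = 36, λ = 10 are the constants hard-coded there. This is a reading of the code, not a quotation of the paper's equations:

$$
L_{out} = \alpha \log\!\left(\frac{L_g}{H_g} + \beta\right),\qquad
\alpha = 1 + \eta\,\frac{L_g}{L_{g,\max}},\qquad
\beta = \lambda\,\bar L_g,\qquad
\bar L_g = \exp\!\Big(\frac{1}{N}\sum_{x,y}\log\big(L_g(x,y) + 10^{-3}\big)\Big)
$$

$L_{out}$ is then stretched to [0, 255] with `cv::normalize(..., NORM_MINMAX)`. The factor α grows linearly with $L_g$, from 1 for black pixels to 1 + η at the brightest pixel, and since $\frac{d}{dx}\log(x+\beta) = \frac{1}{x+\beta}$, a larger β makes the curve closer to linear.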

2.1 Guided Filter

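The guided filter explicitly uses a guidance image to compute the output image; the guidance image can be the input image itself or a different image. Compared with bilateral filtering, the guided filter behaves better near edges, and it also has a speed advantage: it runs in linear O(N) time. A simulated comparison with bilateral filtering is shown below: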

The basic principle is as follows:

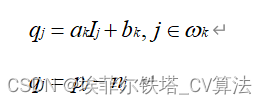

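Here p is the input image, I is the guidance image, and q is the output image. We assume the output image is a local linear transform of the guidance image I: within a window $\omega_k$ centered at pixel k, every pixel i satisfies $q_i = a_k I_i + b_k$, so all pixels in $\omega_k$ are computed from the corresponding guidance pixels with the same coefficients $(a_k, b_k)$. We further assume that the input image p is q plus unwanted noise or texture n, i.e. $p = q + n$.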

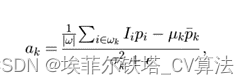

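Next, we solve for these coefficients so that p and q differ as little as possible while the local linear model still holds. This is done with linear ridge regression, i.e. least squares with a regularization term.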

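Solving these equations gives the values of a and b in each window. Since a given pixel can lie in several windows, the coefficients are averaged, giving: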
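For reference, these are the guided-filter equations behind the `Eqn. (5)`, `(6)` and `(8)` comments in `GuidedFilter` (following He et al.'s guided filter paper). $\mu_k$ and $\sigma_k^2$ are the mean and variance of I in $\omega_k$, $\bar p_k$ is the mean of p in $\omega_k$, $|\omega|$ is the number of pixels in a window, and ε is the regularization parameter `eps`:

$$
E(a_k, b_k) = \sum_{i \in \omega_k}\Big[(a_k I_i + b_k - p_i)^2 + \epsilon\, a_k^2\Big]
$$

$$
a_k = \frac{\frac{1}{|\omega|}\sum_{i \in \omega_k} I_i p_i - \mu_k \bar p_k}{\sigma_k^2 + \epsilon},
\qquad
b_k = \bar p_k - a_k \mu_k
$$

$$
q_i = \bar a_i I_i + \bar b_i,
\qquad
\bar a_i = \frac{1}{|\omega|}\sum_{k:\, i \in \omega_k} a_k,
\qquad
\bar b_i = \frac{1}{|\omega|}\sum_{k:\, i \in \omega_k} b_k
$$

In the code, the numerator of $a_k$ is `cov_Ip`, the denominator is `var_I + eps`, and every windowed mean is one `cv::boxFilter` call, which is why the whole filter runs in O(N).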

```cpp
#include <opencv2/opencv.hpp>

#include <algorithm>
#include <cmath>

// Guided filter. I: guidance image, p: input image, r: window radius, eps: regularization
cv::Mat GuidedFilter(const cv::Mat& I_src, const cv::Mat& p_src, int r, float eps)
{
    const int type = CV_32FC1;
    cv::Mat I, p;
    I_src.convertTo(I, type);
    p_src.convertTo(p, type);

    // boxFilter() takes a window size: a 9x9 window corresponds to radius 4
    const cv::Size win(2 * r + 1, 2 * r + 1);

    // mean_I = boxfilter(I, r) ./ N;
    cv::Mat mean_I;
    cv::boxFilter(I, mean_I, type, win);
    // mean_p = boxfilter(p, r) ./ N;
    cv::Mat mean_p;
    cv::boxFilter(p, mean_p, type, win);
    // mean_Ip = boxfilter(I.*p, r) ./ N;
    cv::Mat mean_Ip;
    cv::boxFilter(I.mul(p), mean_Ip, type, win);
    // cov_Ip = mean_Ip - mean_I .* mean_p;  covariance of (I, p) in each local patch
    cv::Mat cov_Ip = mean_Ip - mean_I.mul(mean_p);
    // mean_II = boxfilter(I.*I, r) ./ N;
    cv::Mat mean_II;
    cv::boxFilter(I.mul(I), mean_II, type, win);
    // var_I = mean_II - mean_I .* mean_I;
    cv::Mat var_I = mean_II - mean_I.mul(mean_I);

    // a = cov_Ip ./ (var_I + eps);  Eqn. (5) in the paper
    cv::Mat a = cov_Ip / (var_I + eps);
    // b = mean_p - a .* mean_I;  Eqn. (6) in the paper
    cv::Mat b = mean_p - a.mul(mean_I);

    // mean_a = boxfilter(a, r) ./ N;  mean_b = boxfilter(b, r) ./ N;
    cv::Mat mean_a, mean_b;
    cv::boxFilter(a, mean_a, type, win);
    cv::boxFilter(b, mean_b, type, win);

    // q = mean_a .* I + mean_b;  Eqn. (8) in the paper
    cv::Mat q = mean_a.mul(I) + mean_b;
    return q;
}

// LICE-Retinex: global adaptation, optional local adaptation, then a gain on each BGR channel
cv::Mat LICE_retinex(const cv::Mat& img, bool LocalAdaptation = false, bool ContrastCorrect = true)
{
    cv::Mat temp, Lw;
    img.convertTo(temp, CV_32FC3);
    cv::cvtColor(temp, Lw, cv::COLOR_BGR2GRAY);               // input luminance Lw

    // Global adaptation
    double LwMax;
    cv::minMaxLoc(Lw, nullptr, &LwMax);
    const int num = img.rows * img.cols;
    cv::Mat Lw_;
    cv::log(Lw + 1e-3f, Lw_);
    const float LwAver = std::exp(cv::sum(Lw_)[0] / num);     // log-average luminance
    // Globally compress the dynamic range of an HDR scene with the function in Eq. (4), presented in [5]
    cv::Mat Lg;
    cv::log(Lw / LwAver + 1.f, Lg);
    Lg /= std::log(LwMax / LwAver + 1.0);

    // Local adaptation
    cv::Mat Lout;
    if (LocalAdaptation) {
        const int kernelSize = std::max(3, std::max(img.rows / 100, img.cols / 100));
        cv::Mat Lp, kernel = cv::getStructuringElement(cv::MORPH_RECT, cv::Size(kernelSize, kernelSize));
        cv::dilate(Lg, Lp, kernel);
        cv::Mat Hg = GuidedFilter(Lg, Lp, 10, 0.01f);
        const double eta = 36;
        double LgMax;
        cv::minMaxLoc(Lg, nullptr, &LgMax);
        cv::Mat alpha = 1.0f + Lg * (eta / LgMax);
        cv::Mat Lg_;
        cv::log(Lg + 1e-3f, Lg_);
        const float LgAver = std::exp(cv::sum(Lg_)[0] / num);
        const float lambda = 10;
        const float beta = lambda * LgAver;
        cv::log(Lg / Hg + beta, Lout);
        cv::multiply(alpha, Lout, Lout);
        cv::normalize(Lout, Lout, 0, 255, cv::NORM_MINMAX);
    } else {
        cv::normalize(Lg, Lout, 0, 255, cv::NORM_MINMAX);
    }

    // Per-pixel gain Lout / Lw (Lout itself where Lw == 0)
    cv::Mat gain(img.rows, img.cols, CV_32F);
    for (int i = 0; i < img.rows; i++) {
        for (int j = 0; j < img.cols; j++) {
            const float x = Lw.at<float>(i, j);
            const float y = Lout.at<float>(i, j);
            gain.at<float>(i, j) = (x == 0) ? y : y / x;
        }
    }

    // Apply the gain to each BGR channel
    cv::Mat bgr[3];
    cv::split(temp, bgr);
    for (int c = 0; c < 3; c++) {
        if (ContrastCorrect)
            bgr[c] = (gain.mul(bgr[c] + Lw) + bgr[c] - Lw) * 0.5f;
        else
            cv::multiply(bgr[c], gain, bgr[c]);
    }
    cv::Mat out;
    cv::merge(bgr, 3, out);
    out.convertTo(out, CV_8UC3);
    return out;
}
```

The detailed procedure of the algorithm is as follows:

Next, the original image and the simulated result:

Original image

Result
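The last stage of `LICE_retinex` transfers the new luminance back to color through a per-pixel gain g = L_out / L_w. Isolated for one channel value c of one pixel in plain C++ (`luminance_gain` and `apply_gain` are hypothetical names), the two branches of `ContrastCorrect` are plain scaling, c' = g·c, and the averaged form c' = ½[g(c + L_w) + (c − L_w)]. Writing c = L_w + d, the averaged form gives c' = g·L_w + ((g + 1)/2)·d: the luminance is scaled by g as before, but the color difference d only by (g + 1)/2, which is smaller than g whenever g > 1.

```cpp
#include <cassert>

// Per-pixel gain as in LICE_retinex: Lout / Lw, or Lout itself when Lw == 0.
float luminance_gain(float Lw, float Lout)
{
    return Lw == 0.0f ? Lout : Lout / Lw;
}

// Apply the gain to one color channel value c of a pixel with luminance Lw.
float apply_gain(float c, float Lw, float gain, bool contrastCorrect)
{
    assert(gain >= 0.0f);  // Lout and Lw are both non-negative
    if (contrastCorrect)
        return 0.5f * (gain * (c + Lw) + c - Lw);  // luminance x gain, chroma x (gain + 1) / 2
    return gain * c;                               // plain scaling of the channel
}
```

For example, with c = 120, L_w = 100 and g = 2, plain scaling gives 240 while the corrected form gives 230: the luminance doubles to 200 and the color difference of 20 grows only to 30.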

1.3 Adaptive logarithmic mapping

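The paper behind this method (Drago et al.; OpenCV implements it as `TonemapDrago`) describes a fast, high-quality tone-mapping technique for displaying high-contrast images on devices with a limited dynamic range of luminance. It is based on logarithmic compression of luminance values, imitating the human response to light. A bias power function is introduced to adaptively vary the base of the logarithm, which preserves detail and contrast well. To improve contrast in dark regions, the authors propose a modification to the gamma-correction procedure. Their adaptive logarithmic mapping produces perceptually tuned images with high dynamic content and runs at interactive speed. They also demonstrate it in a high-dynamic-range video player that adjusts to the best viewing conditions for any type of display while taking into account user preferences for brightness, contrast compression, and detail reproduction.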
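The mapping from Drago et al.'s paper can be written as $L_d = \frac{L_{d\max}\cdot 0.01}{\log_{10}(L_{w\max}+1)}\cdot\frac{\log(L_w+1)}{\log\big(2 + 8\,(L_w/L_{w\max})^{\log b/\log 0.5}\big)}$, where $L_w$ is the scene luminance, $L_{w\max}$ its maximum, $L_{d\max}$ the display's maximum luminance (100 cd/m² is assumed here, which makes the factor $L_{d\max}\cdot 0.01$ equal to 1), and b is the bias. The biased exponent makes the effective logarithm base move smoothly from 2 for dark pixels to 10 at the brightest pixel. The `bias` argument of `cv::createTonemapDrago` (0.85 in this article's code) plays the role of b. The per-pixel sketch below (`drago_map` is a hypothetical name) evaluates only this formula; it does not reproduce the extra normalization, saturation and gamma handling that OpenCV's `TonemapDrago` performs, so its values will not match OpenCV's output exactly.

```cpp
#include <cassert>
#include <cmath>

// Drago et al. adaptive logarithmic mapping for one luminance value.
// Lw: scene luminance, LwMax: maximum scene luminance, b: bias,
// LdMax: display maximum luminance in cd/m^2 (100 assumed here).
double drago_map(double Lw, double LwMax, double b = 0.85, double LdMax = 100.0)
{
    assert(Lw >= 0 && LwMax > 0 && b > 0 && b <= 1);
    const double scale = (LdMax * 0.01) / std::log10(LwMax + 1.0);
    const double p = std::log(b) / std::log(0.5);           // bias exponent
    // Logarithm base between 2 (dark pixels) and 10 (brightest pixel)
    const double base = 2.0 + 8.0 * std::pow(Lw / LwMax, p);
    return scale * std::log(Lw + 1.0) / std::log(base);
}
```

With b = 1 the exponent is 0, the base is always 10 and the mapping reduces to a plain log10 curve; smaller b lowers the base for dark pixels and therefore lifts them more.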

Without further ado, here are the results.

The color saturation is higher than with LICE-Retinex.

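```cpp
#include <opencv2/opencv.hpp>

// Adaptive logarithmic mapping with OpenCV's Drago tone-mapping operator
cv::Mat adaptive_logarithmic_mapping(const cv::Mat& img)
{
    cv::Mat ldrDrago, result;
    img.convertTo(ldrDrago, CV_32FC3, 1.0f / 255);          // 8-bit BGR -> float in [0, 1]
    cv::cvtColor(ldrDrago, ldrDrago, cv::COLOR_BGR2XYZ);    // tone-map in XYZ space
    // gamma = 1.0, saturation = 1.0, bias = 0.85
    cv::Ptr<cv::TonemapDrago> tonemapDrago = cv::createTonemapDrago(1.f, 1.f, 0.85f);
    tonemapDrago->process(ldrDrago, result);
    cv::cvtColor(result, result, cv::COLOR_XYZ2BGR);        // back to BGR
    result.convertTo(result, CV_8UC3, 255);                  // [0, 1] -> 8-bit, saturating
    return result;
}
```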

Finally, here is the complete simulation code, which runs as soon as you copy it. I used VS2019 + OpenCV 4.2.0.

```cpp
#include <opencv2/opencv.hpp>

#include <algorithm>
#include <cmath>
#include <iostream>
#include <string>
#include <vector>

// In-place Gaussian blur with a kernel size derived from sigma
static void gaussian_filter(cv::Mat& img, double sigma)
{
    // Reject unreasonable demands
    if (sigma > 300) sigma = 300;
    // Get the needed filter size (about 6 sigma, enforce oddness)
    int filter_size = static_cast<int>(std::floor(sigma * 6)) / 2;
    filter_size = filter_size * 2 + 1;
    // If 3 sigma is less than a pixel, why bother (i.e. sigma < 2/3)
    if (filter_size < 3) return;
    // Filter; sigmaX = 0 lets OpenCV derive sigma from the kernel size
    cv::GaussianBlur(img, img, cv::Size(filter_size, filter_size), 0);
}

// Multi-scale Retinex: sum_i w_i * (log(I) - log(G_sigma_i * I)), then gain/offset
cv::Mat multi_scale_retinex(const cv::Mat& img, const std::vector<double>& weights,
                            const std::vector<double>& sigmas, int gain, int offset)
{
    cv::Mat fA, fB, fC;
    // +1 so that black pixels do not hit log(0), whose result is undefined
    img.convertTo(fB, CV_32FC3, 1.0, 1.0);
    cv::log(fB, fA);

    // Normalize according to given weights
    double weight = 0;
    const size_t num = weights.size();
    for (size_t i = 0; i < num; i++)
        weight += weights[i];
    if (weight != 1.0)
        fA *= weight;

    // Filter at each scale
    for (size_t i = 0; i < num; i++) {
        cv::Mat blur = fB.clone();
        gaussian_filter(blur, sigmas[i]);
        cv::log(blur, fC);
        // Compute weighted difference
        fC *= weights[i];
        fA -= fC;
    }

#if 0
    // Color restoration (disabled)
    float restoration_factor = 6;
    float color_gain = 2;
    cv::normalize(fB, fC, restoration_factor, 0, cv::NORM_L1);
    cv::log(fC + cv::Scalar::all(1), fC);
    cv::multiply(fA, fC, fA, color_gain);
#endif

    // Restore; Scalar::all applies the offset to all three channels
    cv::Mat result = fA * gain + cv::Scalar::all(offset);
    result.convertTo(result, CV_8UC3);
    return result;
}

// Guided filter. I: guidance image, p: input image, r: window radius, eps: regularization
cv::Mat GuidedFilter(const cv::Mat& I_src, const cv::Mat& p_src, int r, float eps)
{
    const int type = CV_32FC1;
    cv::Mat I, p;
    I_src.convertTo(I, type);
    p_src.convertTo(p, type);

    // boxFilter() takes a window size: a 9x9 window corresponds to radius 4
    const cv::Size win(2 * r + 1, 2 * r + 1);

    // mean_I = boxfilter(I, r) ./ N;
    cv::Mat mean_I;
    cv::boxFilter(I, mean_I, type, win);
    // mean_p = boxfilter(p, r) ./ N;
    cv::Mat mean_p;
    cv::boxFilter(p, mean_p, type, win);
    // mean_Ip = boxfilter(I.*p, r) ./ N;
    cv::Mat mean_Ip;
    cv::boxFilter(I.mul(p), mean_Ip, type, win);
    // cov_Ip = mean_Ip - mean_I .* mean_p;  covariance of (I, p) in each local patch
    cv::Mat cov_Ip = mean_Ip - mean_I.mul(mean_p);
    // mean_II = boxfilter(I.*I, r) ./ N;
    cv::Mat mean_II;
    cv::boxFilter(I.mul(I), mean_II, type, win);
    // var_I = mean_II - mean_I .* mean_I;
    cv::Mat var_I = mean_II - mean_I.mul(mean_I);

    // a = cov_Ip ./ (var_I + eps);  Eqn. (5) in the paper
    cv::Mat a = cov_Ip / (var_I + eps);
    // b = mean_p - a .* mean_I;  Eqn. (6) in the paper
    cv::Mat b = mean_p - a.mul(mean_I);

    // mean_a = boxfilter(a, r) ./ N;  mean_b = boxfilter(b, r) ./ N;
    cv::Mat mean_a, mean_b;
    cv::boxFilter(a, mean_a, type, win);
    cv::boxFilter(b, mean_b, type, win);

    // q = mean_a .* I + mean_b;  Eqn. (8) in the paper
    cv::Mat q = mean_a.mul(I) + mean_b;
    return q;
}

// LICE-Retinex: global adaptation, optional local adaptation, then a gain on each BGR channel
cv::Mat LICE_retinex(const cv::Mat& img, bool LocalAdaptation = false, bool ContrastCorrect = true)
{
    cv::Mat temp, Lw;
    img.convertTo(temp, CV_32FC3);
    cv::cvtColor(temp, Lw, cv::COLOR_BGR2GRAY);               // input luminance Lw

    // Global adaptation
    double LwMax;
    cv::minMaxLoc(Lw, nullptr, &LwMax);
    const int num = img.rows * img.cols;
    cv::Mat Lw_;
    cv::log(Lw + 1e-3f, Lw_);
    const float LwAver = std::exp(cv::sum(Lw_)[0] / num);     // log-average luminance
    // Globally compress the dynamic range of an HDR scene with the function in Eq. (4), presented in [5]
    cv::Mat Lg;
    cv::log(Lw / LwAver + 1.f, Lg);
    Lg /= std::log(LwMax / LwAver + 1.0);

    // Local adaptation
    cv::Mat Lout;
    if (LocalAdaptation) {
        const int kernelSize = std::max(3, std::max(img.rows / 100, img.cols / 100));
        cv::Mat Lp, kernel = cv::getStructuringElement(cv::MORPH_RECT, cv::Size(kernelSize, kernelSize));
        cv::dilate(Lg, Lp, kernel);
        cv::Mat Hg = GuidedFilter(Lg, Lp, 10, 0.01f);
        const double eta = 36;
        double LgMax;
        cv::minMaxLoc(Lg, nullptr, &LgMax);
        cv::Mat alpha = 1.0f + Lg * (eta / LgMax);
        cv::Mat Lg_;
        cv::log(Lg + 1e-3f, Lg_);
        const float LgAver = std::exp(cv::sum(Lg_)[0] / num);
        const float lambda = 10;
        const float beta = lambda * LgAver;
        cv::log(Lg / Hg + beta, Lout);
        cv::multiply(alpha, Lout, Lout);
        cv::normalize(Lout, Lout, 0, 255, cv::NORM_MINMAX);
    } else {
        cv::normalize(Lg, Lout, 0, 255, cv::NORM_MINMAX);
    }

    // Per-pixel gain Lout / Lw (Lout itself where Lw == 0)
    cv::Mat gain(img.rows, img.cols, CV_32F);
    for (int i = 0; i < img.rows; i++) {
        for (int j = 0; j < img.cols; j++) {
            const float x = Lw.at<float>(i, j);
            const float y = Lout.at<float>(i, j);
            gain.at<float>(i, j) = (x == 0) ? y : y / x;
        }
    }

    // Apply the gain to each BGR channel
    cv::Mat bgr[3];
    cv::split(temp, bgr);
    for (int c = 0; c < 3; c++) {
        if (ContrastCorrect)
            bgr[c] = (gain.mul(bgr[c] + Lw) + bgr[c] - Lw) * 0.5f;
        else
            cv::multiply(bgr[c], gain, bgr[c]);
    }
    cv::Mat out;
    cv::merge(bgr, 3, out);
    out.convertTo(out, CV_8UC3);
    return out;
}

// Adaptive logarithmic mapping with OpenCV's Drago tone-mapping operator
cv::Mat adaptive_logarithmic_mapping(const cv::Mat& img)
{
    cv::Mat ldrDrago, result;
    img.convertTo(ldrDrago, CV_32FC3, 1.0f / 255);          // 8-bit BGR -> float in [0, 1]
    cv::cvtColor(ldrDrago, ldrDrago, cv::COLOR_BGR2XYZ);    // tone-map in XYZ space
    // gamma = 1.0, saturation = 1.0, bias = 0.85
    cv::Ptr<cv::TonemapDrago> tonemapDrago = cv::createTonemapDrago(1.f, 1.f, 0.85f);
    tonemapDrago->process(ldrDrago, result);
    cv::cvtColor(result, result, cv::COLOR_XYZ2BGR);        // back to BGR
    result.convertTo(result, CV_8UC3, 255);                  // [0, 1] -> 8-bit, saturating
    return result;
}

int main(int argc, char** argv)
{
    // MSR parameters: three scales with equal weights
    std::vector<double> sigmas = {30, 150, 300};
    std::vector<double> weights(3, 1.0 / 3);

    // Image path: first command-line argument, otherwise the author's test image
    const std::string path = argc > 1 ? argv[1] : "C:\\Users\\tangzy\\Desktop\\2.png";
    cv::Mat rgb = cv::imread(path);
    if (rgb.empty()) {
        std::cerr << "Cannot read image: " << path << std::endl;
        return 1;
    }

    //cv::Mat out3 = multi_scale_retinex(rgb, weights, sigmas, 1, 1);
    cv::Mat out2 = adaptive_logarithmic_mapping(rgb);
    cv::Mat out1 = LICE_retinex(rgb);

    cv::imshow("original", rgb);
    cv::imshow("LICE-Retinex", out1);
    cv::imshow("adaptive logarithmic mapping", out2);
    cv::waitKey(0);
    return 0;
}
```