
Fisheye Camera Calibration

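A while back I spent some time on camera calibration, and this post sums up that work. It covers fisheye camera calibration, the second part of my camera-calibration notes, and is mostly a code walkthrough with remarks on details, written partly to deepen my own understanding. The purpose of calibration is coordinate conversion: once the camera's intrinsic and extrinsic parameters have been measured, an accurate coordinate transformation matrix has to be derived from them. (This involves a company project, so no pictures here.)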

The approach in detail

The calibration code is at https://github.com/wisdom-bob/Camera_calibration

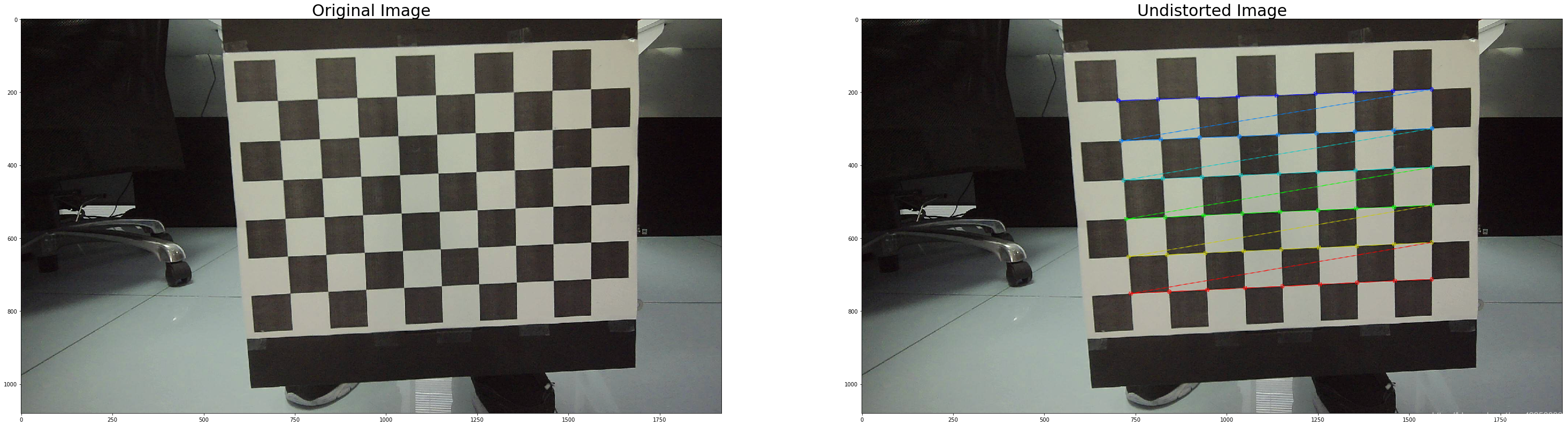

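The approach follows Zhang Zhengyou's calibration method and uses OpenCV's cv2.fisheye module (I did not find a workable route for fisheye calibration in MATLAB). A plain fisheye calibration still seemed to leave a fairly large error, with an RMS error of roughly 3 pixels, so I added a second calibration pass to improve accuracy, which eventually brought the RMS error down to 0.07. The calibration is split into the following steps: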

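Step1: Capture a set of images and filter them.

Step2: Compute the camera intrinsics from the images with cv2.fisheye.calibrate.

Step3: Undistort the images with the fisheye intrinsics, then calibrate again on the undistorted images to get the intrinsic and extrinsic parameters that are actually used.

(The fisheye parameters and the second-pass parameters differ only slightly, but that small difference is exactly the error correction.)

Step4: Pick the extrinsics that match the camera's real working environment. I only care about points on the ground, so this is the parameter set from the image in which the board lies flat on the ground.

Step5: Using the homography approach, compute the coordinate transformation matrix from dist, mtx and the extrinsics.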

From here on I will go through the content alongside the code.

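# Imports, global settings and tuning parameters
import glob
import os
import cv2
import numpy as np
criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 300, 0.00001)
find_flag = cv2.CALIB_CB_ADAPTIVE_THRESH + cv2.CALIB_CB_FAST_CHECK + cv2.CALIB_CB_NORMALIZE_IMAGE
calib_flags = cv2.fisheye.CALIB_RECOMPUTE_EXTRINSIC + cv2.fisheye.CALIB_CHECK_COND + cv2.fisheye.CALIB_FIX_SKEW
# mtx and dist are module-level results, filled in by the second calibration pass below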

Capturing and filtering images

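Zhang's method is at heart a data-fitting procedure, much like a machine-learning problem: from a large number of matched pixel coordinates and world coordinates it fits an optimal solution by maximum-likelihood estimation. Up to a point, then, more data gives a more accurate result. The data also has to be well distributed: the board should cover every part of the camera's field of view as evenly as possible, which is the same idea as the independent and identically distributed requirement on machine-learning data.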
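One way to check how evenly the detected corners cover the image is to count them per grid cell. The sketch below is only an illustration: the helper name `coverage_histogram`, the grid size and the synthetic points are assumptions, not part of the original code.

```python
import numpy as np

def coverage_histogram(imgpoints, img_size, grid=(4, 4)):
    """Count detected corners per grid cell.

    imgpoints: list of arrays shaped (N, 1, 2) or (N, 2), pixel (x, y) coordinates.
    img_size:  (width, height) in pixels.
    grid:      (columns, rows) of the hypothetical coverage grid.
    """
    width, height = img_size
    cols, rows = grid
    pts = np.concatenate([np.asarray(p, dtype=float).reshape(-1, 2) for p in imgpoints])
    # Map each pixel coordinate to a cell index, clamping points on the far edge.
    cx = np.clip((pts[:, 0] / width * cols).astype(int), 0, cols - 1)
    cy = np.clip((pts[:, 1] / height * rows).astype(int), 0, rows - 1)
    counts = np.zeros((rows, cols), dtype=int)
    np.add.at(counts, (cy, cx), 1)
    return counts

# Synthetic example: every corner sits in the top-left quarter of a 1280x720 image.
demo_points = [np.array([[[100.0, 50.0]], [[200.0, 80.0]], [[300.0, 120.0]]])]
demo_counts = coverage_histogram(demo_points, (1280, 720), grid=(2, 2))
empty_cells = int((demo_counts == 0).sum())
print(demo_counts)
print("empty cells:", empty_cells)
```

Cells that stay at zero after all images are processed point to regions of the field of view that still need shots.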

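Shots spread over the field of view in that way are about right, but take plenty of them, around 60, so that you can be relaxed about discarding some. (Fisheye calibration is stricter than pinhole calibration and rejects a larger share of images, so more shots are needed. In addition, because of the distortion, the visible area of some images changes after undistortion, which hurts the second calibration pass and is another reason for the high rejection rate.)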

# Read the fisheye images and collect object points and image points, the same way as for a pinhole camera
# Object points for a 9x6 inner-corner board with square size 40: (0,0,0), (40,0,0), ..., (320,200,0)
objp = np.zeros((1, 6 * 9, 3), np.float32)
objp[0, :, :2] = 40 * np.mgrid[0:9, 0:6].T.reshape(-1, 2)
# Arrays to store object points and image points from all the images
objpoints = []  # 3D points in real-world space
imgpoints = []  # 2D points in the image plane
img_with_corners = []
# List of calibration images
images = glob.glob('./other/fish*.jpg')
# Step through the list and search for chessboard corners
for fname in images:
    img = cv2.imread(fname)
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    ret, corners = cv2.findChessboardCorners(gray, (9, 6), None)  # find_flag can be passed instead of None
    # If found, refine the corners and store object points and image points
    if ret:
        cv2.cornerSubPix(gray, corners, (15, 15), (-1, -1), criteria)
        img = cv2.drawChessboardCorners(img, (9, 6), corners, ret)
        img_with_corners.append(img)
        objpoints.append(objp)
        imgpoints.append(corners)

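The code above builds the data used to calibrate the camera. The samples are the set X of chessboard corner locations (the intersections of the black and white squares) that cv2.findChessboardCorners and cv2.cornerSubPix locate precisely in each image; the ground truth is the set of world coordinates, objp.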
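To make the ground-truth side concrete, the short sketch below rebuilds objp with the same board size and spacing as the code above and prints a few entries, which shows the order in which the world points are laid out. The variable names `square`, `cols` and `rows` are introduced only for this illustration.

```python
import numpy as np

square = 40          # square size, in the same length unit as the code above
cols, rows = 9, 6    # inner corners along the two board directions

objp = np.zeros((1, cols * rows, 3), np.float32)
objp[0, :, :2] = square * np.mgrid[0:cols, 0:rows].T.reshape(-1, 2)

print(objp[0, 0])     # first corner
print(objp[0, 1])     # next corner along the 9-corner direction
print(objp[0, cols])  # first corner of the second row
print(objp[0, -1])    # last corner
```

The x coordinate varies fastest and z stays zero, because every point lies in the plane of the board.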

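P.S. cv2.cornerSubPix takes the detected pixel coordinates and searches a window around each one at sub-pixel resolution, returning coordinates that fit the corner better. (Statistically this result is more accurate, but if a single image contributes too few valid points, the estimate is not very reliable either.)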

Computing the camera intrinsics and extrinsics from the images

# Compute the intrinsic parameters with cv2.fisheye.calibrate
# The extrinsic parameters from this pass are not used
num = len(objpoints)
mtxfish = np.zeros((3, 3))
distfish = np.zeros((4, 1))
rvecs = [np.zeros((1, 1, 3), dtype=np.float32) for i in range(num)]
tvecs = [np.zeros((1, 1, 3), dtype=np.float32) for i in range(num)]
# Read one image only to get the image size as (width, height)
img = cv2.imread('calibration/RT.jpg')
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
img_size = gray.shape[::-1]
rms, mtxfish, distfish, rvecs, tvecs = cv2.fisheye.calibrate(objpoints, imgpoints, img_size, mtxfish, distfish, rvecs, tvecs, calib_flags, criteria)

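cv2.fisheye.calibrate returns the fisheye intrinsics together with the extrinsics matched to each image. Most people will run into the ill-conditioned error triggered by CALIB_CHECK_COND here. It means that, for the image in question, objp and X either cannot produce fisheye intrinsics or produce intrinsics that differ too much from those of the other images. Either way that image is unusable, so just delete it. Expect to repeat this many times; once the call runs through, you have mtxfish and distfish.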
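The delete-and-retry loop can be automated. The sketch below is a hypothetical helper, not the author's code: `calibrate_dropping_bad` and `calibrate_fn` are names made up here, and it assumes the error text names the offending view as "input array <index>", which should be confirmed against the message your OpenCV build actually prints. A stand-in calibration function is used so the logic can be tried without images.

```python
import re

def calibrate_dropping_bad(objpoints, imgpoints, calibrate_fn, max_drops=50):
    """Call calibrate_fn(objpoints, imgpoints) until it stops raising.

    calibrate_fn is a caller-supplied wrapper (hypothetical here) around
    cv2.fisheye.calibrate. Whenever it raises an error whose message names an
    "input array <index>", that view is removed and the call is retried.
    Returns (result, dropped), where dropped lists original image indices.
    """
    objpoints, imgpoints = list(objpoints), list(imgpoints)
    alive = list(range(len(objpoints)))   # original indices still in use
    dropped = []
    for _ in range(max_drops + 1):
        try:
            return calibrate_fn(objpoints, imgpoints), dropped
        except Exception as err:
            match = re.search(r"input array (\d+)", str(err))
            if match is None or int(match.group(1)) >= len(alive):
                raise   # not an error this helper knows how to handle
            bad = int(match.group(1))
            dropped.append(alive.pop(bad))
            del objpoints[bad], imgpoints[bad]
    raise RuntimeError("too many views rejected")

# Stand-in for the real calibration: rejects every view tagged "bad".
def fake_calibrate(objs, imgs):
    for i, view in enumerate(imgs):
        if view == "bad":
            raise ValueError("CALIB_CHECK_COND - Ill-conditioned matrix for input array %d" % i)
    return len(imgs)

views = ["ok", "bad", "ok", "bad", "ok"]
result, dropped = calibrate_dropping_bad(views, views, fake_calibrate)
print(result, dropped)
```

In real use, calibrate_fn would allocate fresh mtxfish, distfish, rvecs and tvecs for the current number of views on each call, since the view count shrinks after every rejection.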

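Second calibration pass for the intrinsics and extrinsics

If accuracy requirements are modest, the result so far is good enough. If you need coordinate calibration, I think the second pass is well worth doing.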

# Undistort the images and calibrate again on the undistorted images
def __get_short_name(fname):
    """Return the file name without directory and extension; fname is the full path."""
    fpath, tempfname = os.path.split(fname)
    shortname, extension = os.path.splitext(tempfname)
    return shortname
# Object points for a 9x6 inner-corner board with square size 40: (0,0,0), (40,0,0), ..., (320,200,0)
objp = np.zeros((6 * 9, 3), np.float32)
objp[:, :2] = 40 * np.mgrid[0:9, 0:6].T.reshape(-1, 2)
# Arrays to store object points and image points from all the images
objpoints = []  # 3D points in real-world space
imgpoints = []  # 2D points in the image plane
img_with_corners = []
# List of calibration images
images = glob.glob('./other/8*.jpg')
# The undistortion maps depend only on the fisheye parameters, so build them once
map1, map2 = cv2.fisheye.initUndistortRectifyMap(mtxfish, distfish, np.eye(3), mtxfish, img_size, cv2.CV_16SC2)
# Step through the list and search for chessboard corners
for fname in images:
    img = cv2.imread(fname)
    undistorted_img = cv2.remap(img, map1, map2, interpolation=cv2.INTER_LINEAR, borderMode=cv2.BORDER_CONSTANT)
    gray = cv2.cvtColor(undistorted_img, cv2.COLOR_BGR2GRAY)
    ret, corners = cv2.findChessboardCorners(gray, (9, 6), None)
    # print(fname, ret)
    # If found, refine the corners and store object points and image points
    if ret:
        cv2.cornerSubPix(gray, corners, (15, 15), (-1, -1), criteria)
        imge = cv2.drawChessboardCorners(undistorted_img, (9, 6), corners, ret)
        img_with_corners.append(imge)
        objpoints.append(objp)
        imgpoints.append(corners)
        # Save the undistorted image
        shortname = __get_short_name(fname)
        dst_fname = './calibration/undistort_img' + '/' + shortname + '.jpg'
        cv2.imwrite(dst_fname, imge)
# Compute the intrinsic and extrinsic parameters
img = cv2.imread('calibration/undistort_img/cRT.jpg')
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
img_size = gray.shape[::-1]
ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(objpoints, imgpoints, img_size, None, None)
print("dist", dist)
print("mtx", mtx)

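Using mtxfish and distfish, undistort the original images, then calibrate them again with the ordinary pinhole procedure: collect objp and X, run the calibration, and obtain mtx and dist. You can check the result with cv2.projectPoints at this point: the RMS error is 0.045, down from 3.453.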
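The check itself is just a root-mean-square distance between detected and reprojected corners. The sketch below shows that computation on synthetic numbers; the function name `rms_reprojection_error` is an assumption made for this example, and the reprojected points are made up rather than produced by a real calibration.

```python
import numpy as np

def rms_reprojection_error(detected, reprojected):
    """RMS distance in pixels between detected and reprojected corners.

    Both arguments are lists with one array per image, shaped (N, 1, 2) or (N, 2).
    """
    total_sq, total_pts = 0.0, 0
    for det, rep in zip(detected, reprojected):
        det = np.asarray(det, dtype=float).reshape(-1, 2)
        rep = np.asarray(rep, dtype=float).reshape(-1, 2)
        diff = det - rep
        total_sq += float((diff ** 2).sum())   # sum of squared point distances
        total_pts += len(diff)
    return float(np.sqrt(total_sq / total_pts))

# Synthetic check: every reprojected corner is off by (3, 4) pixels, i.e. 5 pixels.
detected = [np.zeros((4, 1, 2)), np.zeros((2, 1, 2))]
reprojected = [d + np.array([3.0, 4.0]) for d in detected]
demo_rms = rms_reprojection_error(detected, reprojected)
print(demo_rms)
```

With the calibration results in hand, the reprojected corners for image i would come from `cv2.projectPoints(objpoints[i], rvecs[i], tvecs[i], mtx, dist)[0]`.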

Extracting the extrinsics of the camera's real working environment

# Undistort the ground image and estimate the extrinsics of the working pose
# Object points for a 4x3 inner-corner board lying on the ground, spacing 900: (0,0,0), (900,0,0), ..., (2700,1800,0)
objp = np.zeros((3 * 4, 3), np.float32)
objp[:, :2] = 900 * np.mgrid[0:4, 0:3].T.reshape(-1, 2)
img = cv2.imread('./other/crt.jpg')
map1, map2 = cv2.fisheye.initUndistortRectifyMap(mtxfish, distfish, np.eye(3), mtxfish, img_size, cv2.CV_16SC2)
undistorted_img = cv2.remap(img, map1, map2, interpolation=cv2.INTER_LINEAR, borderMode=cv2.BORDER_CONSTANT)
gray = cv2.cvtColor(undistorted_img, cv2.COLOR_BGR2GRAY)
ret, corners = cv2.findChessboardCorners(gray, (4, 3), None)
print(ret)
# If found, refine the corners and save the annotated undistorted image
if ret:
    cv2.cornerSubPix(gray, corners, (15, 15), (-1, -1), criteria)
    imge = cv2.drawChessboardCorners(undistorted_img, (4, 3), corners, ret)
    dst_fname = './other/undistort_img/crt.jpg'
    cv2.imwrite(dst_fname, imge)
# Where the detected corners are poor, overwrite them with manually measured values
corners[0] = [1214.56, 649.829]
corners[1] = [1034.87, 655.358]
corners[2] = [848.306, 659.974]
corners[3] = [670.579, 663.482]
retval, rvec, tvec = cv2.solvePnP(objp, corners, mtx, dist)

As before, this step needs an image of a calibration board lying flat on the ground; cv2.solvePnP then gives the extrinsics for that pose.

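Computing the coordinate transformation matrix from the homography

To recap, we now have the fisheye parameters mtxfish and distfish, the intrinsic matrix mtx, the distortion coefficients dist, and the matching extrinsics rvec and tvec. The coordinate transformation matrix is built from these.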

map1, map2 = cv2.fisheye.initUndistortRectifyMap(mtxfish, distfish, np.eye(3), mtxfish, img_size, cv2.CV_16SC2)
def cal_undistort(img):
    # Remove the fisheye distortion first, then the residual distortion from the second pass
    undistorted_img = cv2.remap(img, map1, map2, interpolation=cv2.INTER_LINEAR, borderMode=cv2.BORDER_CONSTANT)
    undist = cv2.undistort(undistorted_img, mtx, dist, None, mtx)
    return undist
rmat, _ = cv2.Rodrigues(rvec)
RT = np.hstack((rmat, np.asarray(tvec, dtype=float).reshape(3, 1)))  # 3x4 matrix [R | t]
# Ground points have Z = 0, so the third column of [R | t] drops out, leaving [r1 r2 t]
temp = mtx @ np.delete(RT, 2, axis=1)  # world (ground plane) -> image
tempI = np.linalg.inv(temp)            # image -> world (ground plane)
# Do not run the two snippets below in the same pass; x and y are offsets (x lateral, y longitudinal)
# Image -> world; tpts is an N x 3 array of homogeneous pixel coordinates [u, v, 1]
stemp = (tempI @ np.asarray(tpts, dtype=float).T).T
results = []
for p in stemp:
    p = p / p[2]
    p[0] = -(p[0] + x)
    p[1] = p[1] + y
    results.append(p)
print(np.array(results))
# World -> image; apts is an N x 3 array of homogeneous ground coordinates [X, Y, 1]
results = []
for pt in apts:
    p = np.array(pt, dtype=float)
    p[0] = -1 * (p[0] - 1190)
    p[1] -= 3490
    p = temp @ p
    p /= p[2]
    results.append(p)

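Following the homography step of Zhang's method, a few matrix operations give the final transformation matrices temp (world -> image) and tempI (image -> world). For the underlying theory see [^1], which explains it in detail.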
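The matrix manipulation can be checked on its own with made-up numbers. In the sketch below the intrinsics K, rotation R and translation t are hypothetical values chosen only to exercise the algebra; it builds the ground-plane homography, maps a ground point to a pixel, and maps it back.

```python
import numpy as np

# Hypothetical intrinsics and pose, used only to exercise the algebra.
K = np.array([[800.0, 0.0, 640.0],
              [0.0, 800.0, 360.0],
              [0.0, 0.0, 1.0]])
angle = np.deg2rad(30.0)
R = np.array([[1.0, 0.0, 0.0],                        # rotation about the camera x axis
              [0.0, np.cos(angle), -np.sin(angle)],
              [0.0, np.sin(angle), np.cos(angle)]])
t = np.array([[100.0], [-200.0], [3000.0]])

# For ground points Z = 0, so
# s*[u, v, 1]^T = K [r1 r2 r3 t] [X, Y, 0, 1]^T = K [r1 r2 t] [X, Y, 1]^T.
H = K @ np.hstack((R[:, :2], t))   # world (ground plane) -> image
H_inv = np.linalg.inv(H)           # image -> world (ground plane)

def world_to_image(xy):
    p = H @ np.array([xy[0], xy[1], 1.0])
    return p[:2] / p[2]

def image_to_world(uv):
    p = H_inv @ np.array([uv[0], uv[1], 1.0])
    return p[:2] / p[2]

ground_point = np.array([900.0, 1800.0])
pixel = world_to_image(ground_point)
recovered = image_to_world(pixel)
print(pixel, recovered)
```

The round trip only holds for points on the plane Z = 0, which is why the extrinsics have to come from a board lying on the ground.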

If anything here infringes on your rights, please message me privately. Thanks!

[^1]: https://blog.csdn.net/u010128736/article/details/52860364
