本文主要是介绍对话式AI——多轮对话拼接,希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

1 介绍

oppo 举办的上下文拼接算法 比赛官网

1.1 比赛任务:

本次比赛使用OPPO小布助手开放的“对话式指代消解与省略恢复”数据集。数据集中包括了3万条对话交互数据。每条数据样本提供三轮对话,分别是上轮query、上轮应答和本轮query,选手需要使用算法技术将本轮query(即第三轮)处理成上下文无关的query。

1.2 数据介绍:

本数据集为训练集,包含以下内容:

每行采用json格式,用于表示一个样本。每条训练数据由query-01、response-01、query-02、query-02-rewrite四部分组成,分别是上轮query、上轮应答,本轮query,本轮query对应的上下文无关的query。具体格式举例:

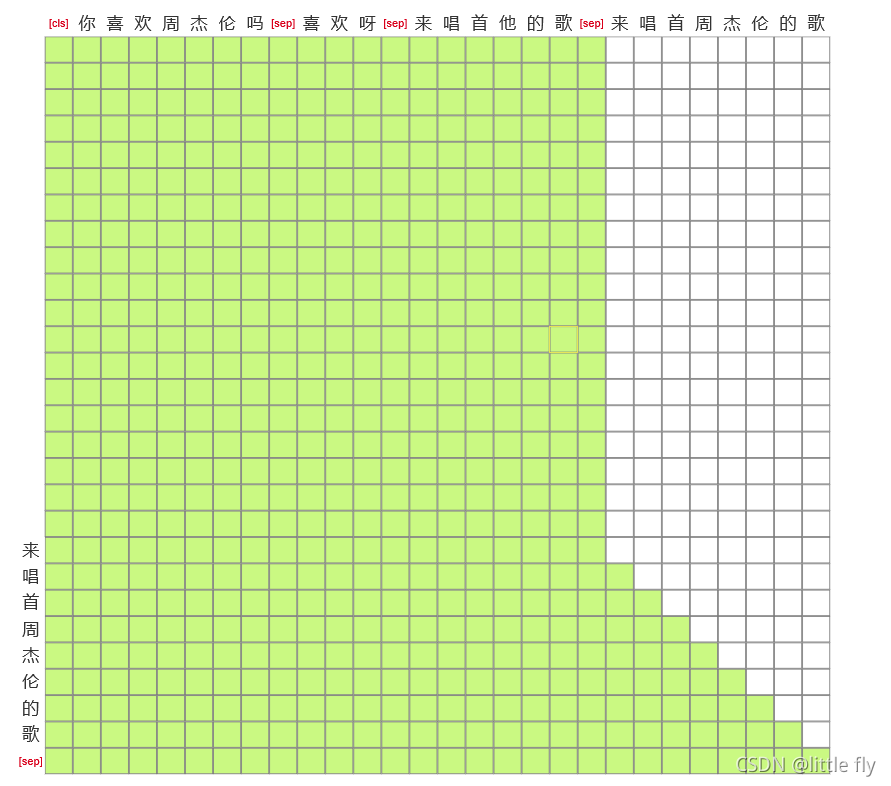

{"query-01": "你喜欢周杰伦吗","response-01": "喜欢呀","query-02": "来唱首他的歌","query-02-rewrite": "来唱首周杰伦的歌"

}

# 输入:query-01、response-01、query-02

# 输出:query-02-rewrite

2 数据预处理

将数据传入迭代器

class data_generator(DataGenerator):"""数据生成器"""def __iter__(self, random=False):batch_token_ids, batch_segment_ids = [], []for is_end, item in self.sample(random):q1 = item['query-01']r1 = item['response-01']q2 = item['query-02']qr = item['query-02-rewrite']token_ids, segment_ids = [tokenizer._token_start_id], [0]ids_q1 = tokenizer.encode(q1)[0][1:]ids_r1 = tokenizer.encode(r1)[0][1:]ids_q2 = tokenizer.encode(q2)[0][1:]ids_qr = tokenizer.encode(qr)[0][1:]token_ids.extend(ids_q1)segment_ids.extend([0] * len(ids_q1))token_ids.extend(ids_r1)segment_ids.extend([1] * len(ids_r1))token_ids.extend(ids_q2)segment_ids.extend([0] * len(ids_q2))token_ids.extend(ids_qr)segment_ids.extend([1] * len(ids_qr))batch_token_ids.append(token_ids)batch_segment_ids.append(segment_ids)if len(batch_token_ids) == self.batch_size or is_end:batch_token_ids = sequence_padding(batch_token_ids)batch_segment_ids = sequence_padding(batch_segment_ids)yield [batch_token_ids, batch_segment_ids], Nonebatch_token_ids, batch_segment_ids = [], []

3 模型

使用苏神bert4keras框架,模型是unilm,直接用单个Bert的架构做Seq2Seq,详细介绍请转入从语言模型到Seq2Seq。

使用unilm搭建成本次比赛的模型,模型结构如下:

模型搭建代码:

class CrossEntropy(Loss):"""交叉熵作为loss,并mask掉输入部分"""def compute_loss(self, inputs, mask=None):y_true, y_mask, y_pred = inputsy_true = y_true[:, 1:] # 目标token_idsy_mask = y_mask[:, 1:] # segment_ids,刚好指示了要预测的部分y_pred = y_pred[:, :-1] # 预测序列,错开一位loss = K.sparse_categorical_crossentropy(y_true, y_pred)loss = K.sum(loss * y_mask) / K.sum(y_mask)return lossmodel = build_transformer_model(config_path,checkpoint_path,application='unilm',keep_tokens=keep_tokens, # 只保留keep_tokens中的字,精简原字表

)

output = CrossEntropy(2)(model.inputs + model.outputs)

model = Model(model.inputs, output)

model.compile(optimizer=Adam(1e-5))

model.summary()

4 解码器

使用beam_search来解码

class AutoTitle(AutoRegressiveDecoder):"""seq2seq解码器"""@AutoRegressiveDecoder.wraps(default_rtype='probas')def predict(self, inputs, output_ids, states):token_ids, segment_ids = inputstoken_ids = np.concatenate([token_ids, output_ids], 1)segment_ids = np.concatenate([segment_ids, np.ones_like(output_ids)], 1)return self.last_token(model).predict([token_ids, segment_ids])def generate(self, item, topk=1):q1 = item['query-01']r1 = item['response-01']q2 = item['query-02']qr = item['query-02-rewrite']token_ids, segment_ids = [tokenizer._token_start_id], [0]ids_q1 = tokenizer.encode(q1)[0][1:]ids_r1 = tokenizer.encode(r1)[0][1:]ids_q2 = tokenizer.encode(q2)[0][1:]ids_qr = tokenizer.encode(qr)[0][1:]token_ids.extend(ids_q1)segment_ids.extend([0] * len(ids_q1))token_ids.extend(ids_r1)segment_ids.extend([1] * len(ids_r1))token_ids.extend(ids_q2)segment_ids.extend([0] * len(ids_q2))# token_ids.extend(ids_qr)# segment_ids.extend([1] * len(ids_qr))output_ids = self.beam_search([token_ids, segment_ids], topk=topk) # 基于beam searchreturn tokenizer.decode(output_ids)autotitle = AutoTitle(start_id=None, end_id=tokenizer._token_end_id, maxlen=32)

5 训练

class Evaluator(keras.callbacks.Callback):"""评估与保存"""def __init__(self):self.lowest = 1e10def on_epoch_end(self, epoch, logs=None):# 保存最优if logs['loss'] <= self.lowest:self.lowest = logs['loss']save_name = r'data/best_model_%d.weights' % (epoch)model.save_weights(save_name)# 演示效果just_show()file = open('data/train.txt', 'r', encoding='utf-8')

train_data = [json.loads(line, encoding='utf-8') for line in file.read().split('\n')]

# train_data = train_data[:100]

evaluator = Evaluator()

train_generator = data_generator(train_data, batch_size)

model.fit(train_generator.forfit(),steps_per_epoch=len(train_generator),epochs=epochs,callbacks=[evaluator]

)

本次比赛主要是参与为主,没有花费太多时间,只提交了5次,官网中的准确率在86%以上。

这篇关于对话式AI——多轮对话拼接的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!