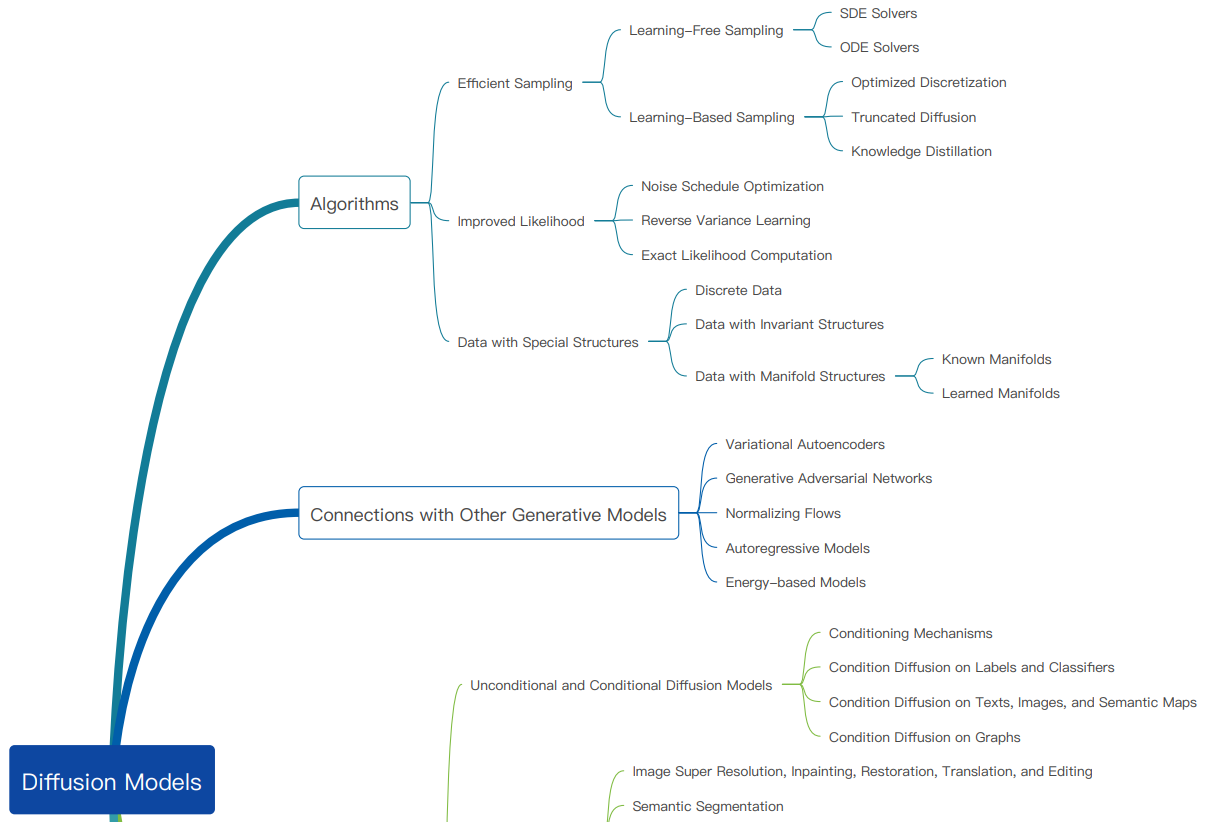

Diffusion Models: A Comprehensive Survey of Methods and Applications

Paper: https://arxiv.org/abs/2209.00796

GitHub: https://github.com/YangLing0818/Diffusion-Models-Papers-Survey-Taxonomy

Contents

Diffusion Models: A Comprehensive Survey of Methods and Applications

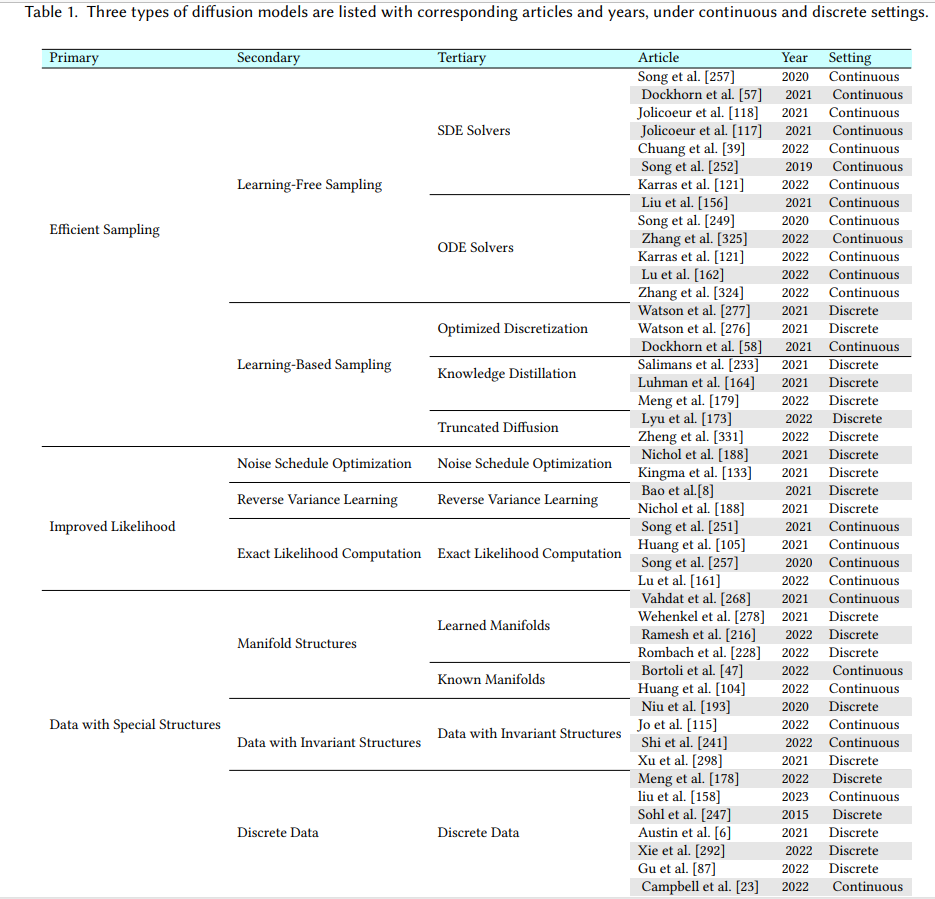

Algorithm Taxonomy

1. Efficient Sampling

1.1 Learning-Free Sampling

1.1.1 SDE Solver

1.1.2 ODE Solver

1.2 Learning-Based Sampling

1.2.1 Optimized Discretization

1.2.2 Knowledge Distillation

1.2.3 Truncated Diffusion

2. Improved Likelihood

2.1. Noise Schedule Optimization

2.2. Reverse Variance Learning

2.3. Exact Likelihood Computation

3. Data with Special Structures

3.1. Data with Manifold Structures

3.1.1 Known Manifolds

3.1.2 Learned Manifolds

3.2. Data with Invariant Structures

3.3 Discrete Data

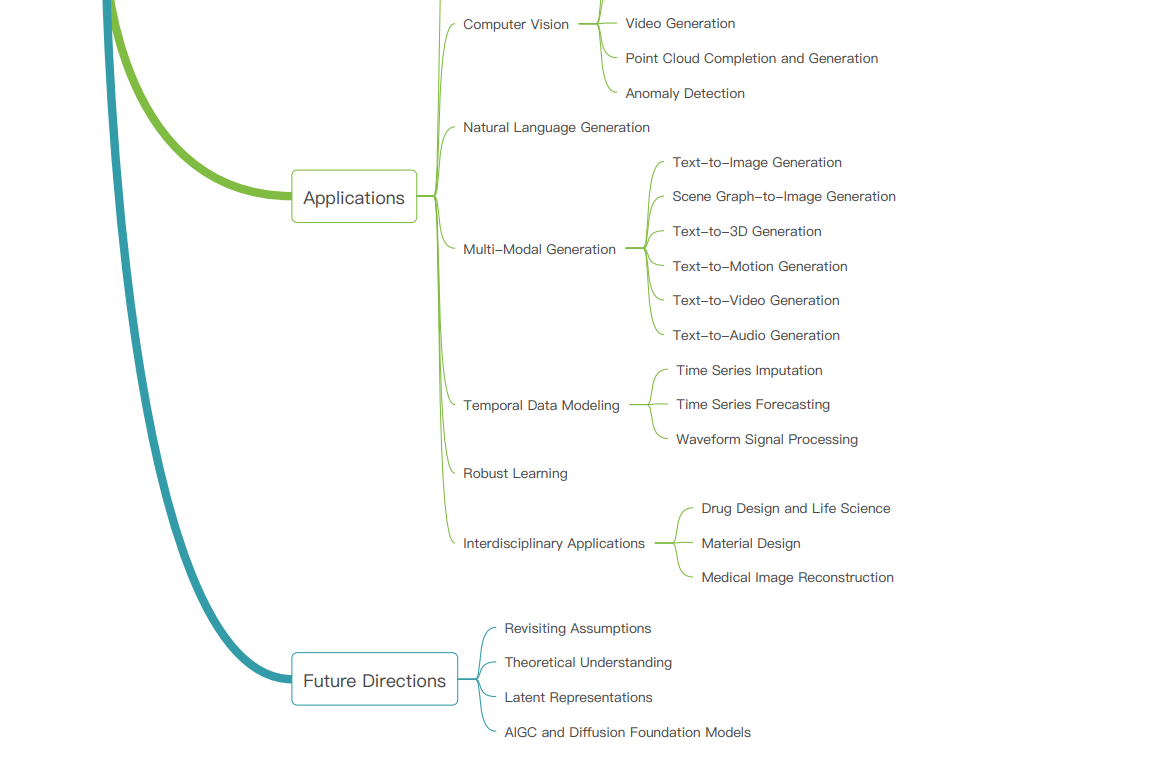

Application Taxonomy

1. Computer Vision

2. Natural Language Processing

3. Temporal Data Modeling

4. Multi-Modal Learning

5. Robust Learning

6. Molecular Graph Modeling

7. Material Design

8. Medical Image Reconstruction

Connections with Other Generative Models

1. Variational Autoencoder

2. Generative Adversarial Network

3. Normalizing Flow

4. Autoregressive Models

5. Energy-Based Models

Algorithm Taxonomy

1. Efficient Sampling

1.1 Learning-Free Sampling

1.1.1 SDE Solver

Score-Based Generative Modeling through Stochastic Differential Equations

Adversarial score matching and improved sampling for image generation

Come-closer-diffuse-faster: Accelerating conditional diffusion models for inverse problems through stochastic contraction

Score-Based Generative Modeling with Critically-Damped Langevin Diffusion

Gotta Go Fast When Generating Data with Score-Based Models

Elucidating the Design Space of Diffusion-Based Generative Models

Generative modeling by estimating gradients of the data distribution

1.1.2 ODE Solver

Denoising Diffusion Implicit Models

gDDIM: Generalized denoising diffusion implicit models

Elucidating the Design Space of Diffusion-Based Generative Models

DPM-Solver: A Fast ODE Solver for Diffusion Probabilistic Model Sampling in Around 10 Steps

Pseudo Numerical Methods for Diffusion Models on Manifolds

Fast Sampling of Diffusion Models with Exponential Integrator

Poisson flow generative models

1.2 Learning-Based Sampling

1.2.1 Optimized Discretization

Learning to Efficiently Sample from Diffusion Probabilistic Models

GENIE: Higher-Order Denoising Diffusion Solvers

Learning fast samplers for diffusion models by differentiating through sample quality

1.2.2 Knowledge Distillation

Progressive Distillation for Fast Sampling of Diffusion Models

Knowledge Distillation in Iterative Generative Models for Improved Sampling Speed

1.2.3 Truncated Diffusion

Accelerating Diffusion Models via Early Stop of the Diffusion Process

Truncated Diffusion Probabilistic Models

2. Improved Likelihood

2.1. Noise Schedule Optimization

Improved denoising diffusion probabilistic models

Variational diffusion models

2.2. Reverse Variance Learning

Analytic-DPM: an Analytic Estimate of the Optimal Reverse Variance in Diffusion Probabilistic Models

Improved denoising diffusion probabilistic models

Stable Target Field for Reduced Variance Score Estimation in Diffusion Models

2.3. Exact Likelihood Computation

Score-Based Generative Modeling through Stochastic Differential Equations

Maximum likelihood training of score-based diffusion models

A variational perspective on diffusion-based generative models and score matching

Maximum Likelihood Training for Score-based Diffusion ODEs by High Order Denoising Score Matching

Maximum Likelihood Training of Implicit Nonlinear Diffusion Models

3. Data with Special Structures

3.1. Data with Manifold Structures

3.1.1 Known Manifolds

Riemannian Score-Based Generative Modeling

Riemannian Diffusion Models

3.1.2 Learned Manifolds

Score-based generative modeling in latent space

Diffusion priors in variational autoencoders

Hierarchical text-conditional image generation with clip latents

High-resolution image synthesis with latent diffusion models

3.2. Data with Invariant Structures

GeoDiff: A Geometric Diffusion Model for Molecular Conformation Generation

Permutation invariant graph generation via score-based generative modeling

Score-based Generative Modeling of Graphs via the System of Stochastic Differential Equations

DiGress: Discrete Denoising diffusion for graph generation

Learning gradient fields for molecular conformation generation

Graphgdp: Generative diffusion processes for permutation invariant graph generation

SwinGNN: Rethinking Permutation Invariance in Diffusion Models for Graph Generation

3.3 Discrete Data

Vector quantized diffusion model for text-to-image synthesis

Structured Denoising Diffusion Models in Discrete State-Spaces

Vector Quantized Diffusion Model with CodeUnet for Text-to-Sign Pose Sequences Generation

Deep Unsupervised Learning using Nonequilibrium Thermodynamics

A Continuous Time Framework for Discrete Denoising Models

Application Taxonomy

1. Computer Vision

- Conditional Image Generation (Image Super Resolution, Inpainting, Translation, Manipulation)

- SRDiff: Single Image Super-Resolution with Diffusion Probabilistic Models

- Image Super-Resolution via Iterative Refinement

- High-Resolution Image Synthesis with Latent Diffusion Models

- Repaint: Inpainting using denoising diffusion probabilistic models.

- Palette: Image-to-image diffusion models.

- Generative Visual Prompt: Unifying Distributional Control of Pre-Trained Generative Models

- Cascaded Diffusion Models for High Fidelity Image Generation.

- Conditional image generation with score-based diffusion models

- Unsupervised Medical Image Translation with Adversarial Diffusion Models

- Score-based diffusion models for accelerated MRI

- Solving Inverse Problems in Medical Imaging with Score-Based Generative Models

- MR Image Denoising and Super-Resolution Using Regularized Reverse Diffusion

- Sdedit: Guided image synthesis and editing with stochastic differential equations

- Soft diffusion: Score matching for general corruptions

- Diffusion-Based Scene Graph to Image Generation with Masked Contrastive Pre-Training

- ControlNet: Adding Conditional Control to Text-to-Image Diffusion Models

- Image Restoration with Mean-Reverting Stochastic Differential Equations

- Semantic Segmentation

- Label-Efficient Semantic Segmentation with Diffusion Models.

- Decoder Denoising Pretraining for Semantic Segmentation.

- Diffusion models as plug-and-play priors

- Video Generation

- Flexible Diffusion Modeling of Long Videos

- Video diffusion models

- Diffusion probabilistic modeling for video generation

- MotionDiffuse: Text-Driven Human Motion Generation with Diffusion Model.

- 3D Generation

- 3d shape generation and completion through point-voxel diffusion

- Diffusion probabilistic models for 3d point cloud generation

- A Conditional Point Diffusion-Refinement Paradigm for 3D Point Cloud Completion

- Let us Build Bridges: Understanding and Extending Diffusion Generative Models.

- LION: Latent Point Diffusion Models for 3D Shape Generation

- Make-It-3D: High-Fidelity 3D Creation from A Single Image with Diffusion Prior

- Score Jacobian Chaining: Lifting Pretrained 2D Diffusion Models for 3D Generation

- RenderDiffusion: Image Diffusion for 3D Reconstruction, Inpainting and Generation

- HOLODIFFUSION: Training a 3D Diffusion Model using 2D Images

- Latent-NeRF for Shape-Guided Generation of 3D Shapes and Textures

- DiffRF: Rendering-Guided 3D Radiance Field Diffusion

- DiffusioNeRF: Regularizing Neural Radiance Fields with Denoising Diffusion Models

- 3D Neural Field Generation using Triplane Diffusion

- Anomaly Detection

- AnoDDPM: Anomaly Detection With Denoising Diffusion Probabilistic Models Using Simplex Noise

- Remote Sensing Change Detection (Segmentation) using Denoising Diffusion Probabilistic Models.

- Object Detection

- DiffusionDet: Diffusion Model for Object Detection

2. Natural Language Processing

- Structured denoising diffusion models in discrete state-spaces

- Diffusion-LM Improves Controllable Text Generation.

- Analog Bits: Generating Discrete Data using Diffusion Models with Self-Conditioning

- DiffuSeq: Sequence to Sequence Text Generation with Diffusion Models

3. Temporal Data Modeling

- Time Series Imputation

- CSDI: Conditional score-based diffusion models for probabilistic time series imputation

- Diffusion-based Time Series Imputation and Forecasting with Structured State Space Models

- Neural Markov Controlled SDE: Stochastic Optimization for Continuous-Time Data

- Time Series Forecasting

- Autoregressive denoising diffusion models for multivariate probabilistic time series forecasting

- Diffusion-based Time Series Imputation and Forecasting with Structured State Space Models

- Waveform Signal Processing

- WaveGrad: Estimating Gradients for Waveform Generation.

- DiffWave: A Versatile Diffusion Model for Audio Synthesis

4. Multi-Modal Learning

- Text-to-Image Generation

- Blended diffusion for text-driven editing of natural images

- Hierarchical Text-Conditional Image Generation with CLIP Latents

- Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding

- GLIDE: Towards Photorealistic Image Generation and Editing with Text-Guided Diffusion Models

- Vector quantized diffusion model for text-to-image synthesis.

- Frido: Feature Pyramid Diffusion for Complex Image Synthesis.

- DreamBooth: Fine Tuning Text-to-Image Diffusion Models for Subject-Driven Generation

- Imagic: Text-Based Real Image Editing with Diffusion Models

- UniTune: Text-Driven Image Editing by Fine Tuning an Image Generation Model on a Single Image

- DiffusionCLIP: Text-Guided Diffusion Models for Robust Image Manipulation

- One Transformer Fits All Distributions in Multi-Modal Diffusion at Scale

- TextDiffuser: Diffusion Models as Text Painters

- Text-to-3D Generation

- Magic3D: High-Resolution Text-to-3D Content Creation

- DreamFusion: Text-to-3D using 2D Diffusion

- Make-It-3D: High-Fidelity 3D Creation from A Single Image with Diffusion Prior

- Shap·E: Generating Conditional 3D Implicit Functions

- Fantasia3D: Disentangling Geometry and Appearance for High-quality Text-to-3D Content Creation

- Dream3D: Zero-Shot Text-to-3D Synthesis Using 3D Shape Prior and Text-to-Image Diffusion Models

- ProlificDreamer: High-Fidelity and Diverse Text-to-3D Generation with Variational Score Distillation

- Scene Graph-to-Image Generation

- Diffusion-Based Scene Graph to Image Generation with Masked Contrastive Pre-Training

- Text-to-Audio Generation

- Grad-TTS: A Diffusion Probabilistic Model for Text-to-Speech

- Guided-TTS 2: A Diffusion Model for High-quality Adaptive Text-to-Speech with Untranscribed Data

- Diffsound: Discrete Diffusion Model for Text-to-sound Generation

- ItôTTS and ItôWave: Linear Stochastic Differential Equation Is All You Need For Audio Generation

- Zero-Shot Voice Conditioning for Denoising Diffusion TTS Models

- EdiTTS: Score-based Editing for Controllable Text-to-Speech.

- ProDiff: Progressive Fast Diffusion Model For High-Quality Text-to-Speech.

- Text-to-Audio Generation using Instruction-Tuned LLM and Latent Diffusion Model

- Text-to-Motion Generation

- Human motion diffusion model

- Motiondiffuse: Text-driven human motion generation with diffusion model

- Flame: Free-form language-based motion synthesis & editing

- Text-to-Video Generation/Editing

- Make-a-video: Text-to-video generation without text-video data

- Tune-A-Video: One-Shot Tuning of Image Diffusion Models for Text-to-Video Generation

- FateZero: Fusing Attentions for Zero-shot Text-based Video Editing

- Imagen video: High definition video generation with diffusion models

- Conditional Image-to-Video Generation with Latent Flow Diffusion Models

- Text2Video-Zero: Text-to-Image Diffusion Models are Zero-Shot Video Generators

- Zero-Shot Video Editing Using Off-The-Shelf Image Diffusion Models

- Follow Your Pose: Pose-Guided Text-to-Video Generation using Pose-Free Videos

- ControlVideo: Training-free Controllable Text-to-Video Generation

5. Robust Learning

- Data Purification

- Diffusion Models for Adversarial Purification

- Adversarial purification with score-based generative models

- Threat Model-Agnostic Adversarial Defense using Diffusion Models

- Guided Diffusion Model for Adversarial Purification

- Guided Diffusion Model for Adversarial Purification from Random Noise

- PointDP: Diffusion-driven Purification against Adversarial Attacks on 3D Point Cloud Recognition.

- Generating Synthetic Data for Robust Learning

- Generating high fidelity data from low-density regions using diffusion models

- Don’t Play Favorites: Minority Guidance for Diffusion Models

- Better diffusion models further improve adversarial training

6. Molecular Graph Modeling

- Torsional Diffusion for Molecular Conformer Generation.

- Equivariant Diffusion for Molecule Generation in 3D

- Protein Structure and Sequence Generation with Equivariant Denoising Diffusion Probabilistic Models

- GeoDiff: A Geometric Diffusion Model for Molecular Conformation Generation

- Diffusion probabilistic modeling of protein backbones in 3D for the motif-scaffolding problem

- Diffusion-based Molecule Generation with Informative Prior Bridge

- Learning gradient fields for molecular conformation generation

- Predicting molecular conformation via dynamic graph score matching.

- DiffDock: Diffusion Steps, Twists, and Turns for Molecular Docking

- 3D Equivariant Diffusion for Target-Aware Molecule Generation and Affinity Prediction

- Learning Joint 2D & 3D Diffusion Models for Complete Molecule Generation

7. Material Design

- Crystal Diffusion Variational Autoencoder for Periodic Material Generation

- Antigen-specific antibody design and optimization with diffusion-based generative models

8. Medical Image Reconstruction

- Solving Inverse Problems in Medical Imaging with Score-Based Generative Models

- MR Image Denoising and Super-Resolution Using Regularized Reverse Diffusion

- Score-based diffusion models for accelerated MRI

- Towards performant and reliable undersampled MR reconstruction via diffusion model sampling

- Come-closer-diffuse-faster: Accelerating conditional diffusion models for inverse problems through stochastic contraction

Connections with Other Generative Models

1. Variational Autoencoder

- Understanding Diffusion Models: A Unified Perspective

- A variational perspective on diffusion-based generative models and score matching

- Score-based generative modeling in latent space

2. Generative Adversarial Network

- Diffusion-GAN: Training GANs with Diffusion.

- Tackling the generative learning trilemma with denoising diffusion gans

3. Normalizing Flow

- Diffusion Normalizing Flow

- Interpreting diffusion score matching using normalizing flow

- Maximum Likelihood Training of Implicit Nonlinear Diffusion Models

4. Autoregressive Models

- Autoregressive Diffusion Models.

- Autoregressive Denoising Diffusion Models for Multivariate Probabilistic Time Series Forecasting.

5. Energy-Based Models

- Learning Energy-Based Models by Diffusion Recovery Likelihood

- Latent Diffusion Energy-Based Model for Interpretable Text Modeling
