
LoongArch Single-Node Ceph: Bcache-Accelerated 4K Random Write Performance Test

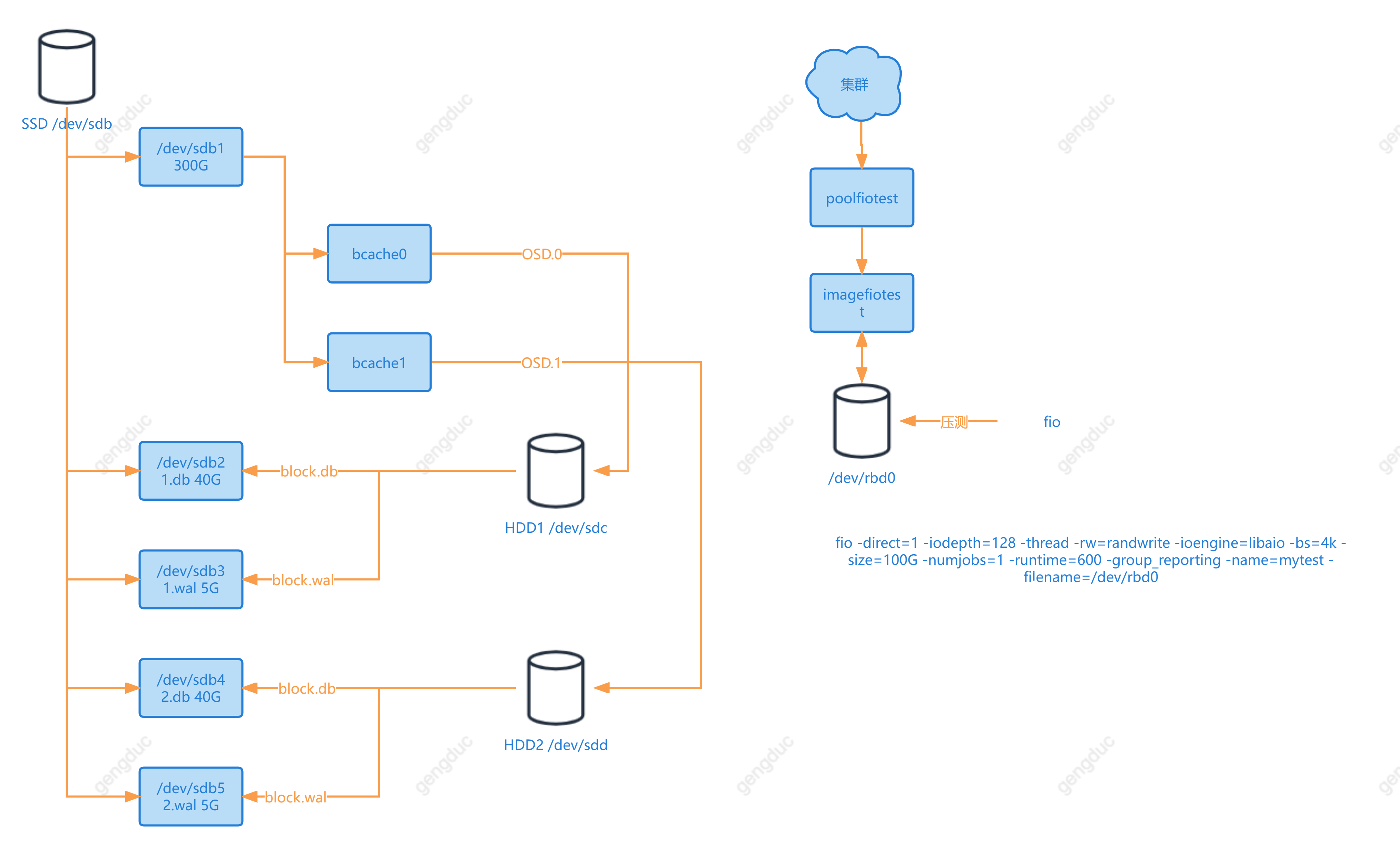

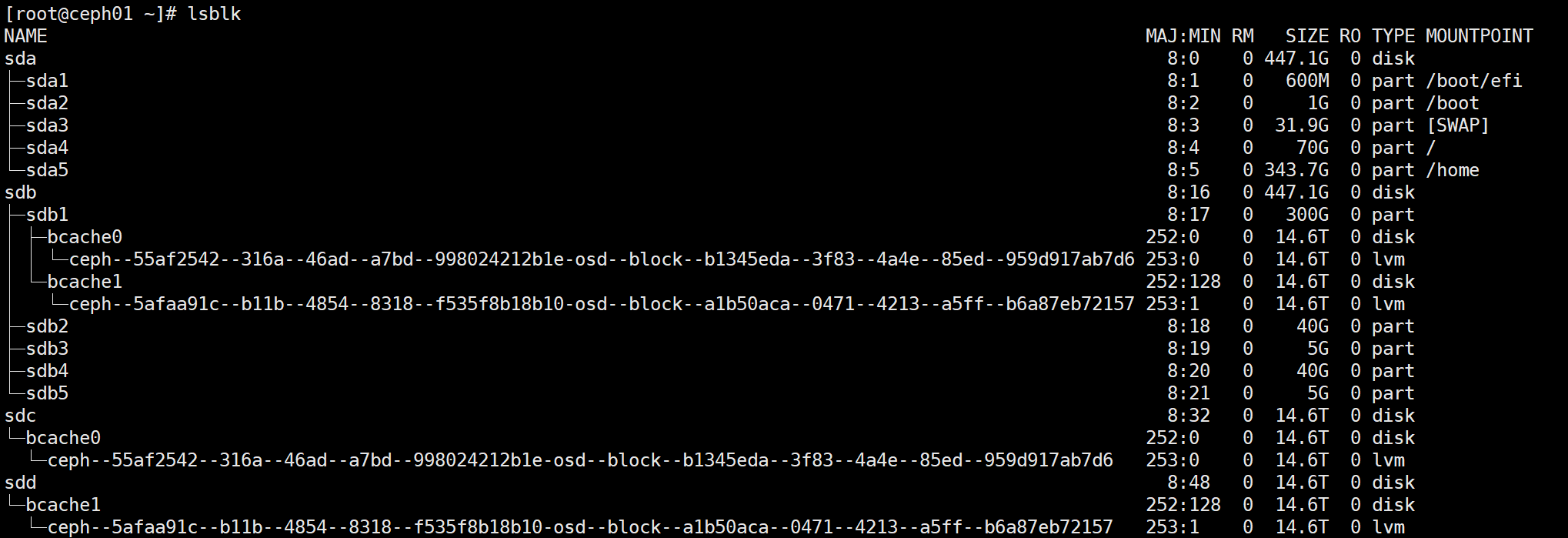

Two HDDs as OSDs

[root@ceph01 ~]# fio -direct=1 -iodepth=128 -thread -rw=randwrite -ioengine=libaio -bs=4k -size=100G -numjobs=1 -runtime=600 -group_reporting -name=mytest -filename=/dev/rbd0

mytest: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=128

fio-3.22

Starting 1 thread

Jobs: 1 (f=1): [w(1)][100.0%][w=1605KiB/s][w=401 IOPS][eta 00m:00s]

mytest: (groupid=0, jobs=1): err= 0: pid=83763: Mon Oct 16 03:44:45 2023
  write: IOPS=404, BW=1620KiB/s (1659kB/s)(950MiB/600262msec); 0 zone resets
    slat (usec): min=3, max=116, avg= 5.76, stdev= 4.41
    clat (msec): min=36, max=947, avg=316.05, stdev=71.26
     lat (msec): min=36, max=947, avg=316.06, stdev=71.26
    clat percentiles (msec):
     | 1.00th=[ 180], 5.00th=[ 215], 10.00th=[ 239], 20.00th=[ 264],
     | 30.00th=[ 279], 40.00th=[ 296], 50.00th=[ 309], 60.00th=[ 326],
     | 70.00th=[ 342], 80.00th=[ 363], 90.00th=[ 397], 95.00th=[ 435],
     | 99.00th=[ 542], 99.50th=[ 609], 99.90th=[ 793], 99.95th=[ 810],
     | 99.99th=[ 944]
   bw ( KiB/s): min= 232, max= 3072, per=100.00%, avg=1622.36, stdev=394.76, samples=1198
   iops : min= 58, max= 768, avg=405.58, stdev=98.69, samples=1198
  lat (msec) : 50=0.02%, 100=0.01%, 250=14.65%, 500=83.73%, 750=1.44%
  lat (msec) : 1000=0.15%
  cpu : usr=0.11%, sys=0.30%, ctx=16672, majf=0, minf=0
  IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.1%
     issued rwts: total=0,243095,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=128

Run status group 0 (all jobs):
  WRITE: bw=1620KiB/s (1659kB/s), 1620KiB/s-1620KiB/s (1659kB/s-1659kB/s), io=950MiB (996MB), run=600262-600262msec

Disk stats (read/write):
  rbd0: ios=0/242996, merge=0/0, ticks=0/76720597, in_queue=76980152, util=100.00%
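Reading these text reports by hand is error-prone, so here is a minimal Python sketch for rerunning the same fio job and pulling out the headline numbers. It assumes fio 3.x is on `PATH` and that its `--output-format=json` report exposes `jobs[0]["write"]` with `iops`, `bw` (in KiB/s) and `lat_ns.mean` (in nanoseconds), as fio 3.x does. The helper names `build_fio_args`, `summarize` and `run_fio` are hypothetical; the fio options and `/dev/rbd0` are copied from the command above.

```python
import json
import subprocess


def build_fio_args(filename="/dev/rbd0", iodepth=128, runtime=600):
    """Return the fio argument list used in these tests, with JSON output added."""
    return [
        "fio",
        "-direct=1",
        f"-iodepth={iodepth}",
        "-thread",
        "-rw=randwrite",
        "-ioengine=libaio",
        "-bs=4k",
        "-size=100G",
        "-numjobs=1",
        f"-runtime={runtime}",
        "-group_reporting",
        "-name=mytest",
        f"-filename={filename}",
        "--output-format=json",
    ]


def summarize(report):
    """Extract write IOPS, bandwidth (decimal kB/s) and mean latency (ms) from a fio JSON report."""
    write = report["jobs"][0]["write"]
    return {
        "iops": write["iops"],
        "bw_kBps": write["bw"] * 1024 / 1000,  # fio reports bw in KiB/s
        "lat_ms": write["lat_ns"]["mean"] / 1e6,  # total latency, ns -> ms
    }


def run_fio(filename="/dev/rbd0"):
    # WARNING: writes directly to the block device and destroys its contents.
    out = subprocess.run(build_fio_args(filename), capture_output=True, text=True, check=True)
    return summarize(json.loads(out.stdout))
```

The summary fields map one-to-one onto the IOPS, BW and lat columns of the conclusion table, so several runs can be tabulated without copying values from the text output.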

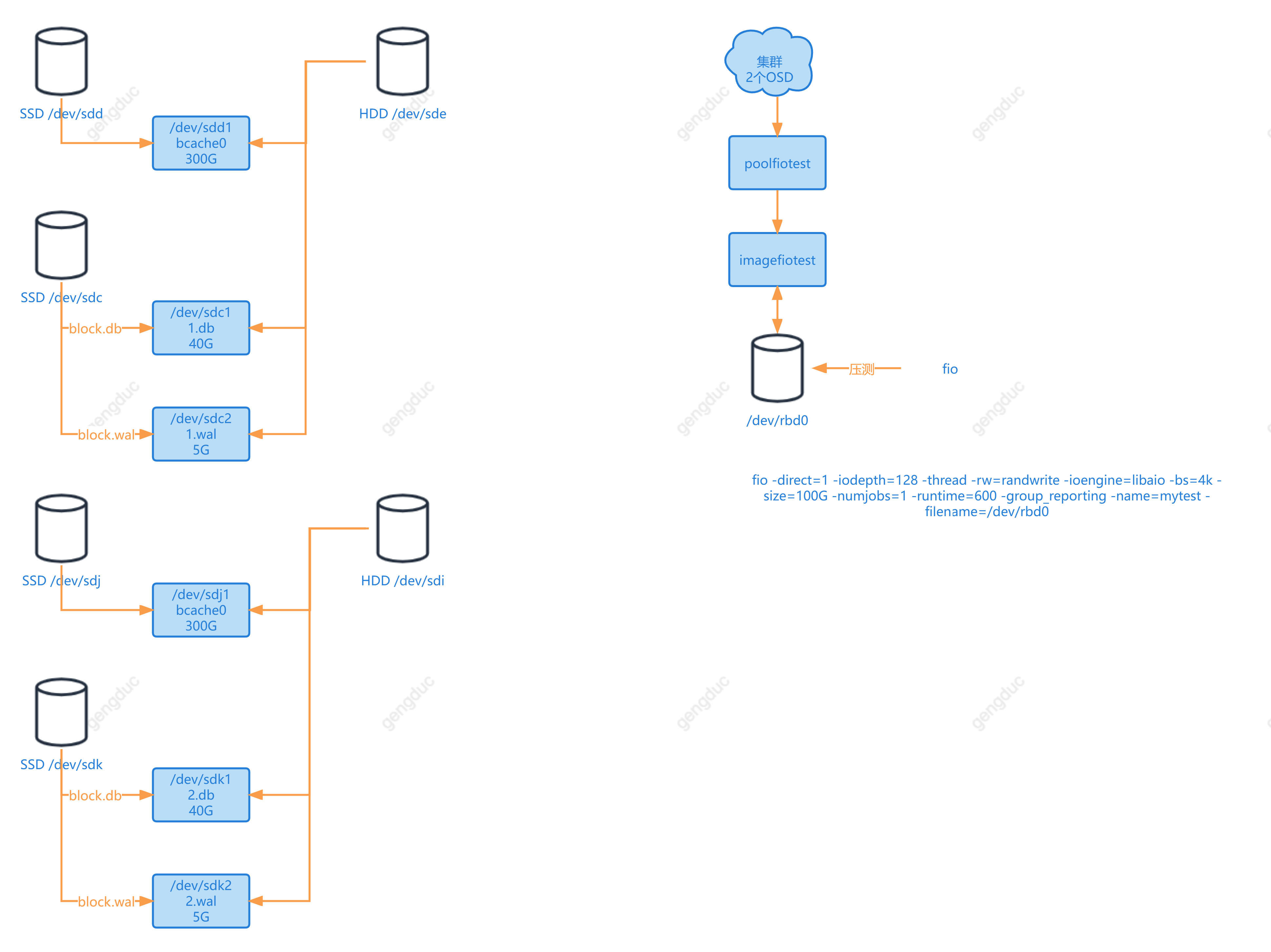

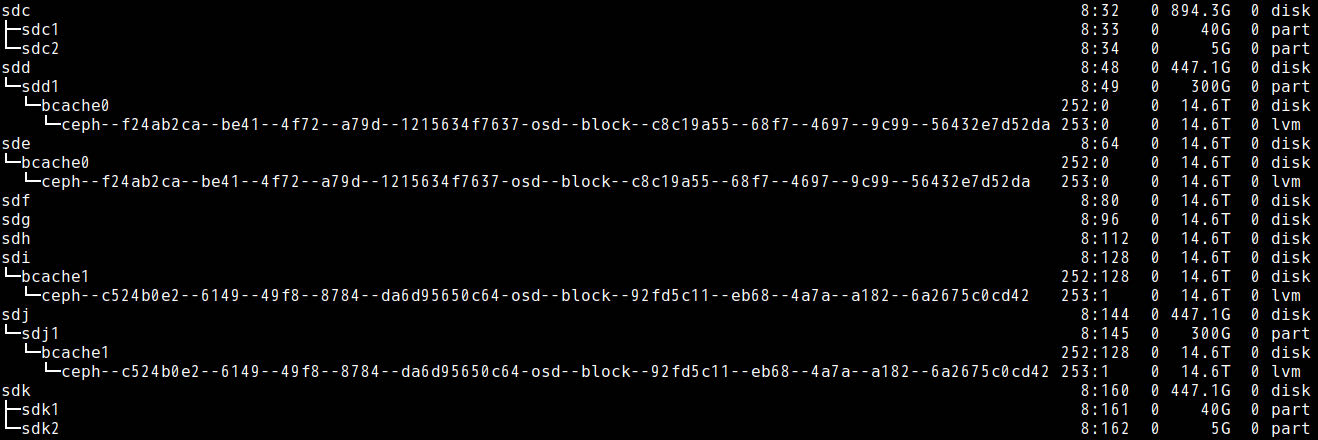

[Bcache] One SSD accelerating two HDDs (OSDs)

[root@ceph01 ceph]# fio -direct=1 -iodepth=128 -thread -rw=randwrite -ioengine=libaio -bs=4k -size=100G -numjobs=1 -runtime=600 -group_reporting -name=mytest -filename=/dev/rbd0

mytest: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=128

fio-3.22

Starting 1 thread

Jobs: 1 (f=1): [w(1)][10.7%][w=12.1MiB/s][w=3097 IOPS][eta 08m:56s]

mytest: (groupid=0, jobs=1): err= 0: pid=37245: Thu Oct 12 22:08:43 2023
  write: IOPS=4065, BW=15.9MiB/s (16.7MB/s)(1024MiB/64475msec); 0 zone resets
    slat (usec): min=3, max=173, avg= 5.80, stdev= 3.99
    clat (msec): min=9, max=336, avg=31.47, stdev=21.69
     lat (msec): min=9, max=336, avg=31.48, stdev=21.69
    clat percentiles (msec):
     | 1.00th=[ 17], 5.00th=[ 19], 10.00th=[ 21], 20.00th=[ 24],
     | 30.00th=[ 26], 40.00th=[ 27], 50.00th=[ 28], 60.00th=[ 29],
     | 70.00th=[ 31], 80.00th=[ 33], 90.00th=[ 37], 95.00th=[ 48],
     | 99.00th=[ 146], 99.50th=[ 180], 99.90th=[ 268], 99.95th=[ 284],
     | 99.99th=[ 334]
   bw ( KiB/s): min= 4216, max=20288, per=100.00%, avg=16284.49, stdev=3300.36, samples=128
   iops : min= 1054, max= 5072, avg=4071.00, stdev=825.06, samples=128
  lat (msec) : 10=0.01%, 20=8.54%, 50=87.00%, 100=2.20%, 250=2.13%
  lat (msec) : 500=0.14%
  cpu : usr=0.95%, sys=3.21%, ctx=28915, majf=0, minf=0
  IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.1%
     issued rwts: total=0,262144,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=128

Run status group 0 (all jobs):
  WRITE: bw=15.9MiB/s (16.7MB/s), 15.9MiB/s-15.9MiB/s (16.7MB/s-16.7MB/s), io=1024MiB (1074MB), run=64475-64475msec

Disk stats (read/write):
  rbd0: ios=0/261860, merge=0/0, ticks=0/8185334, in_queue=8198080, util=100.00%
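The bcache setup in this section does not state which cache mode was used. bcache defaults to `writethrough`, where a write completes only after the backing HDD has it; the roughly tenfold IOPS gain over bare HDDs suggests `writeback` (the SSD absorbs the random writes), but that is an inference, not something the source shows. A small Python sketch to confirm the active mode, reading the standard sysfs file `/sys/block/<dev>/bcache/cache_mode`, which lists every mode with the active one in square brackets. The device name `bcache0` is a hypothetical default.

```python
import re
from pathlib import Path


def parse_cache_mode(text):
    """Return the bracketed (active) mode from a bcache cache_mode sysfs string."""
    match = re.search(r"\[(\w+)\]", text)
    if match is None:
        raise ValueError(f"no active mode found in {text!r}")
    return match.group(1)


def active_cache_mode(dev="bcache0", sysfs="/sys/block"):
    # dev is a hypothetical name; list /sys/block to find the real bcache devices.
    path = Path(sysfs) / dev / "bcache" / "cache_mode"
    return parse_cache_mode(path.read_text())


if __name__ == "__main__":
    # Print the active mode of every bcache device present on this host (if any).
    for dev in sorted(p.name for p in Path("/sys/block").glob("bcache*")):
        print(dev, active_cache_mode(dev))
```

Recording this value alongside each fio result would make the bcache rows of the table reproducible.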

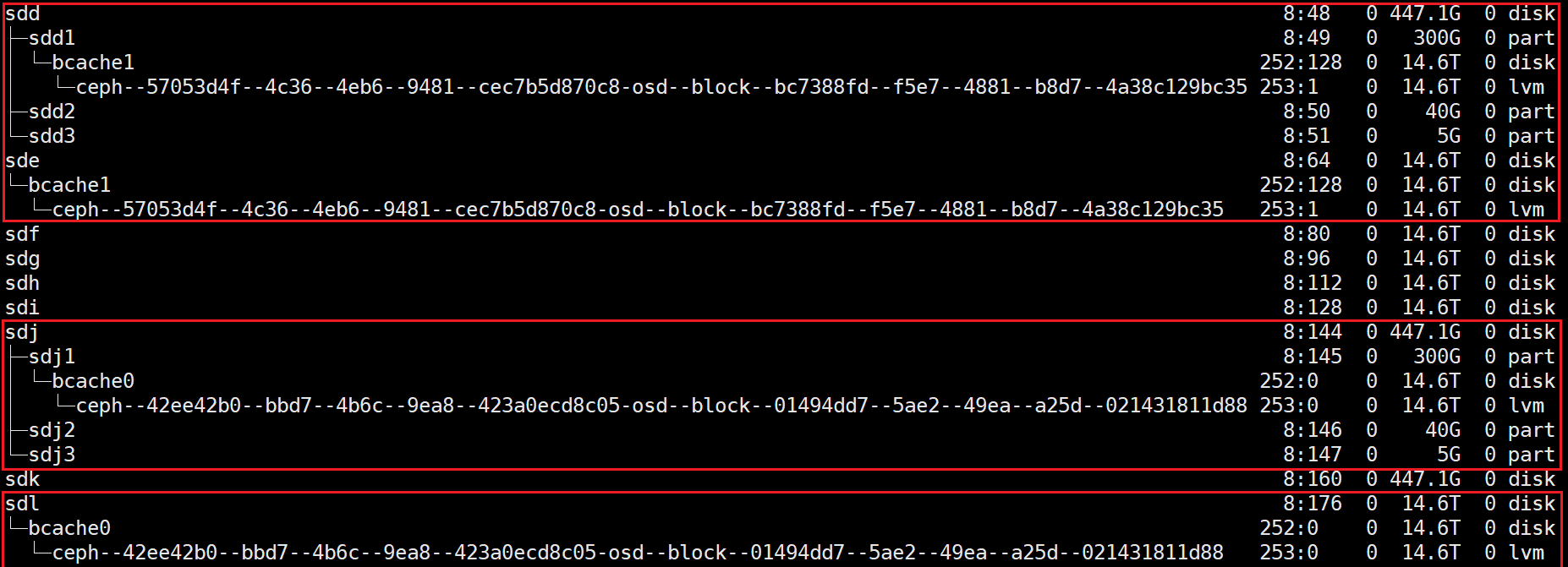

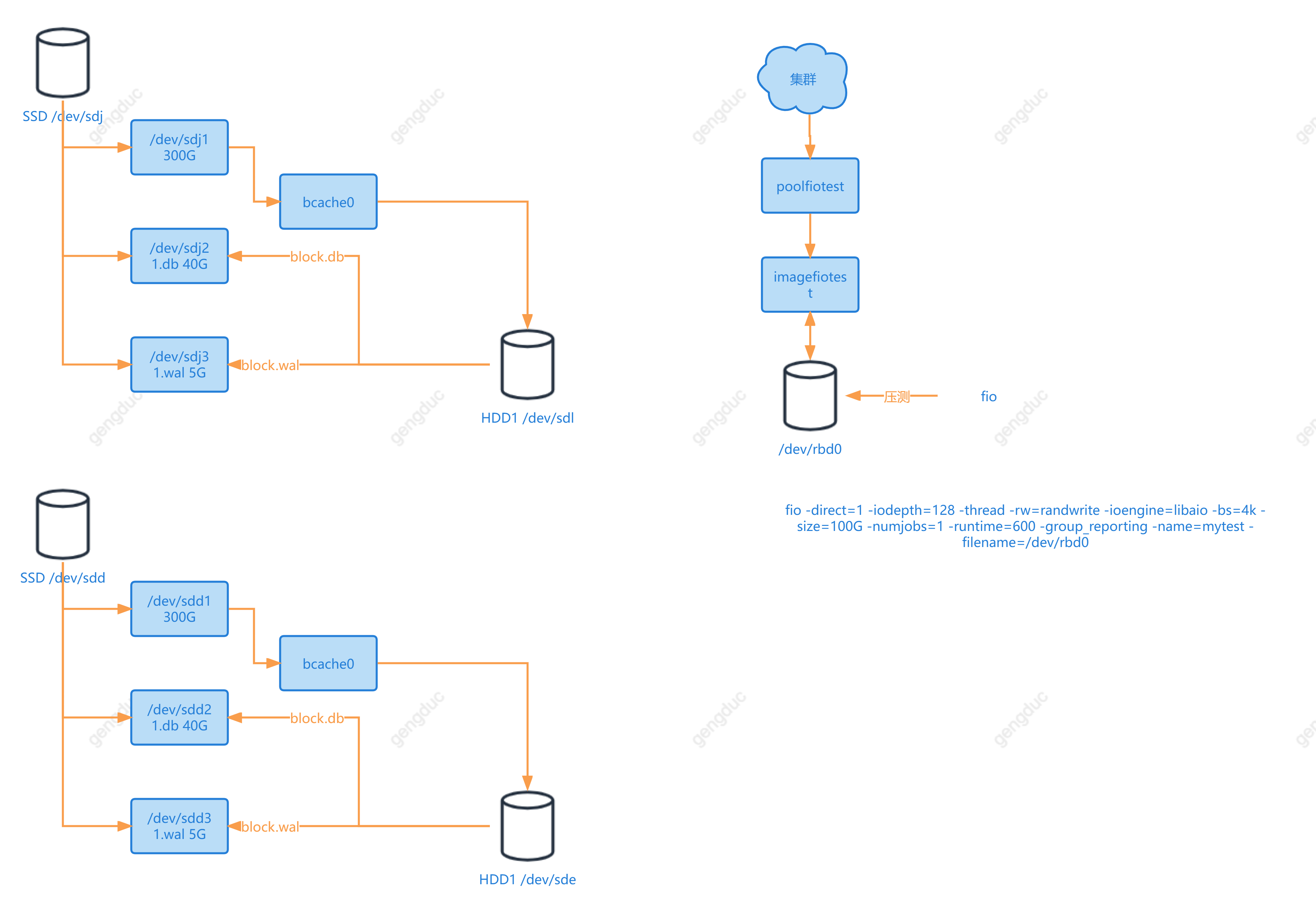

[Bcache] Two SSDs accelerating two HDDs (OSDs)

[root@ceph01 ceph]# fio -direct=1 -iodepth=128 -thread -rw=randwrite -ioengine=libaio -bs=4k -size=100G -numjobs=1 -runtime=600 -group_reporting -name=mytest -filename=/dev/rbd0

mytest: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=128

fio-3.22

Starting 1 thread

Jobs: 1 (f=1): [w(1)][10.5%][w=15.8MiB/s][w=4047 IOPS][eta 08m:57s]

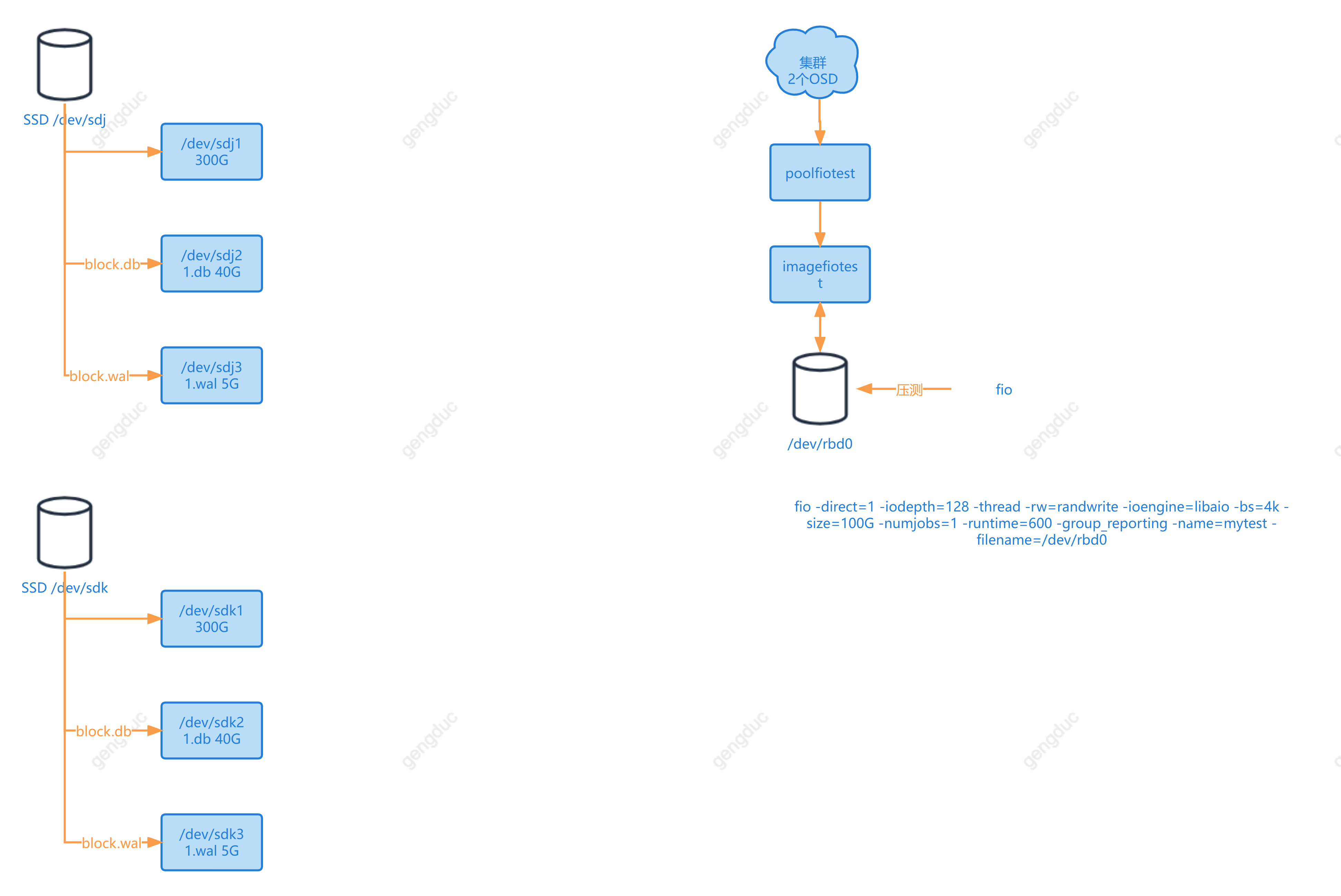

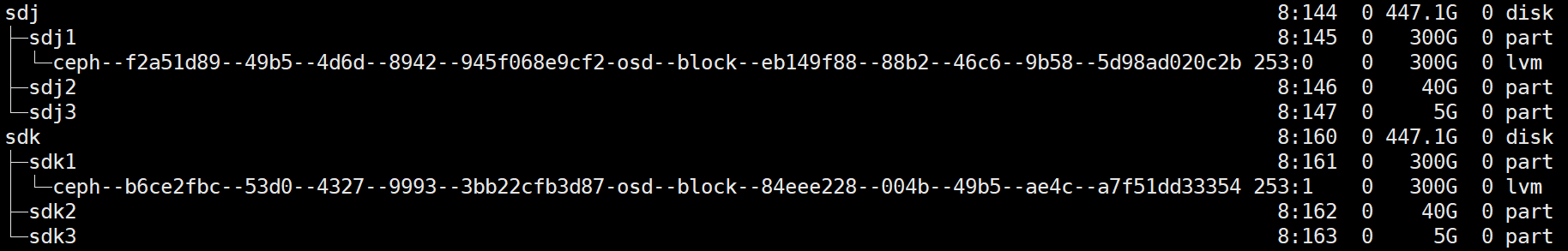

mytest: (groupid=0, jobs=1): err= 0: pid=363441: Sat Oct 14 11:19:49 2023
  write: IOPS=4158, BW=16.2MiB/s (17.0MB/s)(1024MiB/63037msec); 0 zone resets
    slat (usec): min=3, max=624, avg= 5.76, stdev= 4.10
    clat (msec): min=10, max=359, avg=30.77, stdev=18.74
     lat (msec): min=10, max=359, avg=30.78, stdev=18.74
    clat percentiles (msec):
     | 1.00th=[ 17], 5.00th=[ 19], 10.00th=[ 21], 20.00th=[ 23],
     | 30.00th=[ 25], 40.00th=[ 27], 50.00th=[ 28], 60.00th=[ 29],
     | 70.00th=[ 31], 80.00th=[ 33], 90.00th=[ 38], 95.00th=[ 51],
     | 99.00th=[ 111], 99.50th=[ 153], 99.90th=[ 262], 99.95th=[ 266],
     | 99.99th=[ 359]
   bw ( KiB/s): min= 3768, max=21016, per=100.00%, avg=16674.80, stdev=3007.06, samples=125
   iops : min= 942, max= 5254, avg=4168.57, stdev=751.74, samples=125
  lat (msec) : 20=9.54%, 50=85.45%, 100=3.49%, 250=1.38%, 500=0.13%
  cpu : usr=0.99%, sys=3.24%, ctx=28037, majf=0, minf=0
  IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.1%
     issued rwts: total=0,262144,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=128

Run status group 0 (all jobs):
  WRITE: bw=16.2MiB/s (17.0MB/s), 16.2MiB/s-16.2MiB/s (17.0MB/s-17.0MB/s), io=1024MiB (1074MB), run=63037-63037msec

Disk stats (read/write):
  rbd0: ios=0/261835, merge=0/0, ticks=0/7989253, in_queue=7996052, util=100.00%

[Bcache] Two SSDs accelerating two HDDs (OSDs), plus two SSDs for block.db and block.wal

[root@ceph01 ~]# fio -direct=1 -iodepth=128 -thread -rw=randwrite -ioengine=libaio -bs=4k -size=100G -numjobs=1 -runtime=600 -group_reporting -name=mytest -filename=/dev/rbd0

mytest: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=128

fio-3.22

Starting 1 thread

Jobs: 1 (f=1): [w(1)][10.5%][w=16.3MiB/s][w=4162 IOPS][eta 08m:57s]

mytest: (groupid=0, jobs=1): err= 0: pid=73003: Mon Oct 16 02:34:47 2023
  write: IOPS=4124, BW=16.1MiB/s (16.9MB/s)(1024MiB/63559msec); 0 zone resets
    slat (usec): min=3, max=109, avg= 5.69, stdev= 3.75
    clat (msec): min=10, max=294, avg=31.03, stdev=17.27
     lat (msec): min=10, max=294, avg=31.03, stdev=17.27
    clat percentiles (msec):
     | 1.00th=[ 17], 5.00th=[ 19], 10.00th=[ 21], 20.00th=[ 23],
     | 30.00th=[ 25], 40.00th=[ 27], 50.00th=[ 28], 60.00th=[ 30],
     | 70.00th=[ 32], 80.00th=[ 34], 90.00th=[ 40], 95.00th=[ 52],
     | 99.00th=[ 110], 99.50th=[ 136], 99.90th=[ 226], 99.95th=[ 249],
     | 99.99th=[ 284]
   bw ( KiB/s): min= 6200, max=20376, per=100.00%, avg=16508.00, stdev=2659.19, samples=126
   iops : min= 1550, max= 5094, avg=4126.88, stdev=664.77, samples=126
  lat (msec) : 20=9.65%, 50=85.11%, 100=3.87%, 250=1.33%, 500=0.04%
  cpu : usr=1.00%, sys=3.13%, ctx=25141, majf=0, minf=0
  IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.1%
     issued rwts: total=0,262144,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=128

Run status group 0 (all jobs):
  WRITE: bw=16.1MiB/s (16.9MB/s), 16.1MiB/s-16.1MiB/s (16.9MB/s-16.9MB/s), io=1024MiB (1074MB), run=63559-63559msec

Disk stats (read/write):
  rbd0: ios=0/261407, merge=0/0, ticks=0/8062837, in_queue=8075472, util=100.00%
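This configuration moves BlueStore's RocksDB (`block.db`) and write-ahead log (`block.wal`) onto SSDs in addition to the bcache data devices. The source does not show how the OSDs were created; one possible way is `ceph-volume lvm create --bluestore --data ... --block.db ... --block.wal ...`. The Python sketch below only assembles that command for each OSD and does not run it. Every device path in `LAYOUT` is hypothetical, and it is not verified that this matches the author's setup.

```python
import shlex


def ceph_volume_create(data, db=None, wal=None):
    """Build a ceph-volume command for one BlueStore OSD (does not execute it)."""
    cmd = ["ceph-volume", "lvm", "create", "--bluestore", "--data", data]
    if db is not None:
        cmd += ["--block.db", db]  # RocksDB metadata on a faster device
    if wal is not None:
        cmd += ["--block.wal", wal]  # write-ahead log on a faster device
    return cmd


# Hypothetical layout: two bcache devices as data, DB and WAL partitions on two other SSDs.
LAYOUT = [
    ("/dev/bcache0", "/dev/sdc1", "/dev/sdd1"),
    ("/dev/bcache1", "/dev/sdc2", "/dev/sdd2"),
]

if __name__ == "__main__":
    for data, db, wal in LAYOUT:
        print(shlex.join(ceph_volume_create(data, db, wal)))
```

Keeping the layout as data makes it easy to rebuild the same OSD topology when repeating the comparison.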

Two SSDs as OSDs

[root@ceph01 ~]# fio -direct=1 -iodepth=128 -thread -rw=randwrite -ioengine=libaio -bs=4k -size=100G -numjobs=1 -runtime=600 -group_reporting -name=mytest -filename=/dev/rbd0

mytest: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=128

fio-3.22

Starting 1 thread

Jobs: 1 (f=1): [w(1)][4.3%][w=34.7MiB/s][w=8883 IOPS][eta 09m:35s]

mytest: (groupid=0, jobs=1): err= 0: pid=125901: Fri Oct 13 11:06:55 2023
  write: IOPS=10.2k, BW=39.8MiB/s (41.7MB/s)(1024MiB/25751msec); 0 zone resets
    slat (nsec): min=3310, max=78980, avg=5364.31, stdev=3425.80
    clat (usec): min=2965, max=33393, avg=12565.10, stdev=3428.92
     lat (usec): min=2970, max=33400, avg=12570.90, stdev=3428.60
    clat percentiles (usec):
     | 1.00th=[ 6652], 5.00th=[ 7963], 10.00th=[ 8717], 20.00th=[ 9765],
     | 30.00th=[10552], 40.00th=[11207], 50.00th=[11994], 60.00th=[12780],
     | 70.00th=[13829], 80.00th=[15139], 90.00th=[17171], 95.00th=[19006],
     | 99.00th=[22676], 99.50th=[24511], 99.90th=[27657], 99.95th=[28705],
     | 99.99th=[31589]
   bw ( KiB/s): min=33628, max=44247, per=99.99%, avg=40717.55, stdev=2014.23, samples=51
   iops : min= 8407, max=11061, avg=10179.18, stdev=503.57, samples=51
  lat (msec) : 4=0.01%, 10=23.01%, 20=73.64%, 50=3.35%
  cpu : usr=2.23%, sys=7.41%, ctx=20485, majf=0, minf=0
  IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.1%
     issued rwts: total=0,262144,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=128

Run status group 0 (all jobs):
  WRITE: bw=39.8MiB/s (41.7MB/s), 39.8MiB/s-39.8MiB/s (41.7MB/s-41.7MB/s), io=1024MiB (1074MB), run=25751-25751msec

Disk stats (read/write):
  rbd0: ios=0/260926, merge=0/0, ticks=0/3220267, in_queue=3224032, util=99.89%
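With one job at a fixed queue depth, Little's law gives a quick consistency check on every report: mean latency ≈ iodepth / IOPS. The Python calculation below applies it to the IOPS and mean `lat` values reported in the five runs above, all of which used `-iodepth=128`; the helper name `littles_law_latency_ms` is illustrative.

```python
IODEPTH = 128  # -iodepth=128 in every fio run above

# (configuration, reported IOPS, reported mean lat in ms) from the fio outputs above
RUNS = [
    ("2x HDD OSD", 404, 316.06),
    ("bcache, 1 SSD", 4065, 31.48),
    ("bcache, 2 SSD", 4158, 30.78),
    ("bcache, 2 SSD + DB/WAL SSD", 4124, 31.03),
    ("2x SSD OSD", 10200, 12.57),
]


def littles_law_latency_ms(iops, depth=IODEPTH):
    """Expected mean latency (ms) when `depth` I/Os are kept in flight at `iops`."""
    return depth / iops * 1000


for name, iops, lat in RUNS:
    predicted = littles_law_latency_ms(iops)
    print(f"{name:28s} predicted {predicted:7.2f} ms  reported {lat:7.2f} ms")
```

Every run agrees to within 1%, which is consistent with the queue staying full for the whole test (the disk stats show util close to 100%). At this queue depth, each IOPS gain in the table therefore corresponds directly to a proportionally lower average latency.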

Conclusion

Note: the test environment is a single node with two replicas!

| Test | IOPS | Bandwidth | Avg. latency |
|---|---|---|---|
| Two HDDs as OSDs | 404 | 1659 kB/s | 316.06 ms |
| [Bcache] One SSD accelerating two HDDs (OSDs) | 4065 | 16.7 MB/s | 31.48 ms |
| [Bcache] Two SSDs accelerating two HDDs (OSDs) | 4158 | 17.0 MB/s | 30.78 ms |
| [Bcache] Two SSDs accelerating two HDDs (OSDs) + two SSDs for block.db and block.wal | 4124 | 16.9 MB/s | 31.03 ms |
| Two SSDs as OSDs | 10.2k | 41.7 MB/s | 12.57 ms |
| Comparison data | 16405 | 67 MB/s | 7.80 ms |
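To read the table as ratios, a short Python sketch divides each row's IOPS by the two-HDD baseline. It also estimates the write load the OSDs see with two replicas, assuming each client write is stored once per replica. This deliberately ignores BlueStore's own DB/WAL traffic, so actual device writes are higher. The last (comparison) row is left out because its source is not given.

```python
REPLICAS = 2  # single node, two replicas, per the test environment note

# Client-side IOPS from the table above
TABLE = {
    "2x HDD OSD": 404,
    "bcache, 1 SSD": 4065,
    "bcache, 2 SSD": 4158,
    "bcache, 2 SSD + DB/WAL SSD": 4124,
    "2x SSD OSD": 10200,
}


def speedups(table, baseline="2x HDD OSD"):
    """IOPS of each configuration relative to the baseline row."""
    base = table[baseline]
    return {name: iops / base for name, iops in table.items()}


for name, ratio in speedups(TABLE).items():
    replica_writes = TABLE[name] * REPLICAS  # object writes summed over both OSDs
    print(f"{name:28s} {ratio:5.1f}x  ~{replica_writes} replica writes/s")
```

On these single-run numbers, bcache gives roughly a 10x IOPS gain over bare HDDs. A second cache SSD, or moving block.db/block.wal to SSDs, changes IOPS by less than 3%, and all-SSD OSDs are about 2.5x faster than the best bcache configuration.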
