This article walks through deploying a highly available K8s cluster with CRI-O, the Sealos CLI, and kubeadm.

3.6 Deploying a K8s cluster with CRI-O

Note: this builds on the Kubernetes base environment.

3.6.1 Install and configure CRI-O on all nodes

[root@k8s-all ~]# VERSION=1.28

[root@k8s-all ~]# curl -L -o /etc/yum.repos.d/devel:kubic:libcontainers:stable.repo https://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable/CentOS_7/devel:kubic:libcontainers:stable.repo

[root@k8s-all ~]# curl -L -o /etc/yum.repos.d/devel:kubic:libcontainers:stable:cri-o:${VERSION}.repo https://download.opensuse.org/repositories/devel:kubic:libcontainers:stable:cri-o:${VERSION}/CentOS_7/devel:kubic:libcontainers:stable:cri-o:${VERSION}.repo

[root@k8s-all ~]# yum install cri-o -y

[root@k8s-all ~]# vim /etc/crio/crio.conf

pause_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3."
insecure_registries = [
"docker.mirrors.ustc.edu.cn","dockerhub.azk8s.cn","hub-mirror.c.163.com"
]

[root@k8s-all ~]# systemctl daemon-reload

[root@k8s-all ~]# systemctl enable --now crio

- Modify /etc/sysconfig/kubelet (the --container-runtime=remote flag is left out here because kubelet 1.27 and later no longer accept it)

[root@k8s-all ~]# vim /etc/sysconfig/kubelet
KUBELET_EXTRA_ARGS="--cgroup-driver=systemd --container-runtime-endpoint='unix:///var/run/crio/crio.sock' --runtime-request-timeout=5m"
[root@k8s-all ~]# systemctl daemon-reload
[root@k8s-all ~]# systemctl restart kubelet.service

3.6.2 Initialize the cluster

[root@k8s-master-01 ~]# kubeadm init --kubernetes-version=v1.28.2 --pod-network-cidr=10.244.0.0/16 \
  --apiserver-advertise-address=192.168.110.21 \
  --service-cidr=10.96.0.0/12 \
  --cri-socket unix:///var/run/crio/crio.sock \
  --image-repository registry.aliyuncs.com/google_containers

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.110.21:6443 --token tct0yt.1bf5docmg9loxj8m \
  --discovery-token-ca-cert-hash sha256:b504dc351e052f7ebe92162c0989088b09c2243467ee510d172187e87caf9b74

[root@k8s-master-01 ~]# mkdir -p $HOME/.kube
[root@k8s-master-01 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
[root@k8s-master-01 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

3.6.3 Join the worker nodes to the cluster

[root@k8s-node-01 ~]# kubeadm join 192.168.110.21:6443 --token tct0yt.1bf5docmg9loxj8m \
  --discovery-token-ca-cert-hash sha256:b504dc351e052f7ebe92162c0989088b09c2243467ee510d172187e87caf9b74
[root@k8s-node-02 ~]# kubeadm join 192.168.110.21:6443 --token tct0yt.1bf5docmg9loxj8m \
  --discovery-token-ca-cert-hash sha256:b504dc351e052f7ebe92162c0989088b09c2243467ee510d172187e87caf9b74

3.6.4 Install the network plugin

[root@k8s-master-01 ~]# wget -c https://docs.projectcalico.org/v3.25/manifests/calico.yaml

Edit calico.yaml and set the following entries:

            - name: CALICO_IPV4POOL_CIDR
              value: "10.244.0.0/16"
            - name: CLUSTER_TYPE
              value: "k8s,bgp"
            # added below
            - name: IP_AUTODETECTION_METHOD
              value: "interface=ens33"

[root@k8s-all ~]# crictl pull docker.io/calico/cni:v3.25.0
[root@k8s-all ~]# crictl pull docker.io/calico/node:v3.25.0
[root@k8s-all ~]# crictl pull docker.io/calico/kube-controllers:v3.25.0
[root@k8s-master-01 ~]# kubectl apply -f calico.yaml
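The Calico pool CIDR is normally expected to agree with the pod CIDR passed to kubeadm init, and a typo in either place is easy to miss. The following is a minimal sketch of a check that can be run before applying the manifest. The helper names calico_pool_cidr and check_pod_cidr are hypothetical, and the parsing assumes the name/value pair is laid out on two consecutive lines as in the stock manifest.

```shell
#!/bin/sh
# Sketch only: calico_pool_cidr and check_pod_cidr are illustrative helpers,
# not part of Calico or kubeadm.

calico_pool_cidr() {
  # $1: path to calico.yaml.
  # Prints the value on the line that follows an uncommented
  # "- name: CALICO_IPV4POOL_CIDR" entry.
  awk '
    /^[[:space:]]*- name: CALICO_IPV4POOL_CIDR/ { found = 1; next }
    found && /^[[:space:]]*value:/ { gsub(/"/, "", $2); print $2; exit }
    { found = 0 }
  ' "$1"
}

check_pod_cidr() {
  # $1: path to calico.yaml, $2: pod CIDR given to kubeadm init.
  pool=$(calico_pool_cidr "$1")
  if [ "$pool" = "$2" ]; then
    echo "match: $pool"
  else
    echo "mismatch: calico=${pool:-unset} kubeadm=$2"
    return 1
  fi
}
```

For example, check_pod_cidr calico.yaml 10.244.0.0/16 prints a match line when the two values agree and returns a non-zero status otherwise.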

3.6.5 Verify

[root@k8s-master-01 ~]# kubectl get pod -n kube-system
NAME                                       READY   STATUS    RESTARTS   AGE
calico-kube-controllers-658d97c59c-q6b5z   1/1     Running   0          33s
calico-node-88hrv                          1/1     Running   0          33s
calico-node-ph7sw                          1/1     Running   0          33s
calico-node-ws2h5                          1/1     Running   0          33s
coredns-66f779496c-gt4zg                   1/1     Running   0          22m
coredns-66f779496c-jsqcw                   1/1     Running   0          22m
etcd-k8s-master-01                         1/1     Running   0          22m
kube-apiserver-k8s-master-01               1/1     Running   0          22m
kube-controller-manager-k8s-master-01      1/1     Running   0          22m
kube-proxy-5v4sr                           1/1     Running   0          19m
kube-proxy-b8xvq                           1/1     Running   0          22m
kube-proxy-vl5k2                           1/1     Running   0          19m
kube-scheduler-k8s-master-01               1/1     Running   0          22m

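2. Deploying a K8s cluster with the Sealos CLI

Note: this builds on 3.3 Kubernetes base environment deployment.

2.1 Get the Sealos CLI tool

- Download: Releases · labring/sealos · GitHub

- Version compatibility

- Supported cluster image versions

- K8s with Containerd

- Cluster image versions that use Containerd as the container runtime (CRI) are recommended. Containerd is a lightweight, high-performance container runtime that is compatible with Docker. Kubernetes images that use Containerd can provide better performance and resource utilization.

- The supported cluster image versions for Containerd are:

| K8s version | Sealos version | CRI version | Cluster image version |
|---|---|---|---|
| >=1.25 | >=v4.1.0 | v1alpha2 | labring/kubernetes:v1.25.0 |
| >=1.26 | >=v4.1.4-rc3 | v1 | labring/kubernetes:v1.26.0 |
| >=1.27 | >=v4.2.0-alpha3 | v1 | labring/kubernetes:v1.27.0 |
| <1.25 | >=v4.0.0 | v1alpha2 | labring/kubernetes:v1.24.0 |
| >=1.28 | >=v5.0.0 | v1 | labring/kubernetes:v1.28.0 |

- Choose the Sealos version and CRI version according to the Kubernetes version. For example, to run Kubernetes v1.26.0 you can use sealos v4.1.4-rc3 or later together with CRI version v1.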

- K8s with Docker

- Docker can also be used as the container runtime. The supported cluster image versions for Docker are:

| K8s version | Sealos version | CRI version | Cluster image version |
|---|---|---|---|
| <1.25 | >=v4.0.0 | v1alpha2 | labring/kubernetes-docker:v1.24.0 |
| >=1.25 | >=v4.1.0 | v1alpha2 | labring/kubernetes-docker:v1.25.0 |
| >=1.26 | >=v4.1.4-rc3 | v1 | labring/kubernetes-docker:v1.26.0 |
| >=1.27 | >=v4.2.0-alpha3 | v1 | labring/kubernetes-docker:v1.27.0 |
| >=1.28 | >=v5.0.0 | v1 | labring/kubernetes-docker:v1.28.0 |

- As with the Containerd images, choose the Sealos version and CRI version according to the Kubernetes version.

- For example, to run Kubernetes v1.26.0 you can use sealos v4.1.4-rc3 or later together with CRI version v1.
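The version tables can be read as a simple lookup from the Kubernetes minor version to the minimum Sealos version. As a rough sketch, the hypothetical helper min_sealos_version below encodes the rows listed in this article; it assumes a 1.x Kubernetes version and is not part of the Sealos CLI.

```shell
#!/bin/sh
# Sketch only: min_sealos_version is an illustrative helper that mirrors
# the compatibility tables in this article.

min_sealos_version() {
  # $1: Kubernetes version such as 1.26, 1.28.2 or v1.26.0 (major version 1 assumed)
  v=${1#v}
  minor=$(echo "$v" | cut -d. -f2)
  if [ "$minor" -ge 28 ]; then
    echo "v5.0.0"
  elif [ "$minor" -ge 27 ]; then
    echo "v4.2.0-alpha3"
  elif [ "$minor" -ge 26 ]; then
    echo "v4.1.4-rc3"
  elif [ "$minor" -ge 25 ]; then
    echo "v4.1.0"
  else
    echo "v4.0.0"
  fi
}
```

Calling min_sealos_version v1.26.0 returns the minimum Sealos version from the corresponding table row.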

2.2 Install Sealos

[root@k8s-master-01 ~]# yum install https://github.com/labring/sealos/releases/download/v5.0.0-beta5/sealos_5.0.0-beta5_linux_amd64.rpm -y

2.3 Deploy the K8s cluster with the Sealos CLI

[root@k8s-master-01 ~]# sealos run registry.cn-shanghai.aliyuncs.com/labring/kubernetes-docker:v1.28.2 registry.cn-shanghai.aliyuncs.com/labring/helm:v3..4 registry.cn-shanghai.aliyuncs.com/labring/calico:v3.24.1 \
  --masters 192.168.110.21 \
  --nodes 192.168.110.22,192.168.110.23 -p 123456

Parameters:

| Parameter | Example value | Description |
|---|---|---|
| --masters | 192.168.110.22 | List of K8s master node addresses |
| --nodes | 192.168.110.23 | List of K8s worker node addresses |
| --ssh-passwd | [your-ssh-passwd] | SSH login password (this environment uses passwordless key login) |
| kubernetes | labring/kubernetes-docker:v1.28.2 | K8s cluster image |

Note: keep time synchronized. Kubernetes requires the clocks of all nodes in the cluster to agree closely.

- The following output indicates that the deployment succeeded:

2024-05-31T10:12:57 info succeeded in creating a new cluster, enjoy it!
2024-05-31T10:12:57 info
(Sealos ASCII-art banner)
Website: https://www.sealos.io/
Address: github.com/labring/sealos
Version: 5.0.0-beta5-a0b3363d9

2.4 Check the cluster

[root@k8s-master-01 ~]# kubectl get cs
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME                 STATUS    MESSAGE   ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-0               Healthy   ok
[root@k8s-master-01 ~]# kubectl get nodes
NAME            STATUS   ROLES           AGE   VERSION
k8s-master-01   Ready    control-plane   87s   v1.28.2
k8s-node-01     Ready    <none>          64s   v1.28.2
k8s-node-02     Ready    <none>          64s   v1.28.2
[root@k8s-master-01 ~]# kubectl get pod -n kube-system
NAME                                    READY   STATUS    RESTARTS   AGE
coredns-5dd5756b68-2gwbb                1/1     Running   0          2m35s
coredns-5dd5756b68-hm8v9                1/1     Running   0          2m35s
etcd-k8s-master-01                      1/1     Running   0          2m47s
kube-apiserver-k8s-master-01            1/1     Running   0          2m47s
kube-controller-manager-k8s-master-01   1/1     Running   0          2m47s
kube-proxy-m9fjz                        1/1     Running   0          2m35s
kube-proxy-rx66m                        1/1     Running   0          2m28s
kube-proxy-v82dd                        1/1     Running   0          2m28s
kube-scheduler-k8s-master-01            1/1     Running   0          2m47s
kube-sealos-lvscare-k8s-node-01         1/1     Running   0          2m23s
kube-sealos-lvscare-k8s-node-02         1/1     Running   0          2m23s

2.5 Sealos commands

- Add nodes

1. Add worker nodes: sealos add --nodes 192.168.110.25,192.168.110.26
2. Add master nodes: sealos add --masters 192.168.110.22,192.168.110.23

- Delete nodes

1. Delete worker nodes: sealos delete --nodes 192.168.110.25,192.168.110.26
2. Delete master nodes: sealos delete --masters 192.168.110.22,192.168.110.23

- Reset the cluster

$ sealos reset

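3. Deploying a highly available K8s cluster with kubeadm

3.1 Cluster architecture

1. High-availability topologies

An HA cluster can be set up in two ways:

- With stacked control plane nodes, where etcd members are colocated with the control plane nodes;

- With external etcd nodes, where etcd runs on nodes separate from the control plane.

Weigh the advantages and disadvantages of each topology carefully before setting up an HA cluster.

2. Stacked etcd topology

Main characteristics:

- The distributed etcd data store cluster is stacked on the control plane nodes managed by kubeadm and runs as a component of the control plane.

- Each control plane node runs an instance of kube-apiserver, kube-scheduler, and kube-controller-manager.

- kube-apiserver is exposed to the worker nodes through a load balancer (LB).

- Each control plane node creates a local etcd member, and this member communicates only with the kube-apiserver of that node. The same applies to the local kube-controller-manager and kube-scheduler instances.

In short: every master node runs an apiserver and an etcd member, and the etcd member talks only to the apiserver on the same node.

This topology couples the control plane and the etcd members on the same nodes. Compared with an external etcd cluster, it is simpler to set up and easier to manage for replication.

However, a stacked cluster runs the risk of coupled failure. If one node goes down, both an etcd member and a control plane instance are lost, and redundancy is compromised. Adding more control plane nodes mitigates this risk. An HA cluster should run at least three stacked control plane nodes (to prevent split-brain).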
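The three-node minimum follows from etcd's majority quorum: a cluster of n members needs floor(n/2) + 1 of them to agree, so it tolerates floor((n - 1)/2) failures. The sketch below works through that arithmetic; the function names etcd_quorum and etcd_fault_tolerance are made up for this illustration.

```shell
#!/bin/sh
# Sketch only: helper names are illustrative.

etcd_quorum() {
  # Majority of $1 members: floor(n/2) + 1
  echo $(( $1 / 2 + 1 ))
}

etcd_fault_tolerance() {
  # Members that can fail while a majority remains: floor((n-1)/2)
  echo $(( ($1 - 1) / 2 ))
}

for n in 1 2 3 4 5; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

Two members tolerate no failure at all, and four tolerate no more than three do, which is why odd member counts starting at three are the usual choice.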

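This is the default topology in kubeadm. A local etcd member is created automatically on control plane nodes when using kubeadm init and kubeadm join --control-plane.

3. External etcd topology

Main characteristics:

- An HA cluster with external etcd is a topology in which the distributed etcd data store cluster runs on nodes that are separate from the control plane nodes.

- As in the stacked etcd topology, each control plane node in the external etcd topology runs an instance of kube-apiserver, kube-scheduler, and kube-controller-manager.

- kube-apiserver is likewise exposed to the worker nodes through a load balancer. However, the etcd members run on separate hosts, and each etcd host communicates with the kube-apiserver of every control plane node.

In short: the etcd cluster runs on its own hosts, and every etcd member communicates with the apiserver nodes.

- This topology decouples the control plane from the etcd members. It therefore provides an HA setup in which losing a control plane instance or an etcd member has less impact and does not affect cluster redundancy as much as in the stacked HA topology.

- However, this topology requires twice as many hosts as the stacked HA topology. An HA cluster with this topology needs at least three hosts for control plane nodes and three hosts for etcd nodes. The external etcd cluster has to be set up separately.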

3.2 Base environment deployment

- Kubernetes version: 1.28.2

| Host | IP address | Operating system | Specs |
|---|---|---|---|
| k8s-master-01 | 192.168.110.21 | CentOS Linux release 7.9.2009 | 4 CPUs, 8 GB RAM, 100 GB disk |
| k8s-master-02 | 192.168.110.22 | CentOS Linux release 7.9.2009 | 4 CPUs, 8 GB RAM, 100 GB disk |
| k8s-master-03 | 192.168.110.23 | CentOS Linux release 7.9.2009 | 4 CPUs, 8 GB RAM, 100 GB disk |
| k8s-node-01 | 192.168.110.24 | CentOS Linux release 7.9.2009 | 4 CPUs, 8 GB RAM, 100 GB disk |
| k8s-node-02 | 192.168.110.25 | CentOS Linux release 7.9.2009 | 4 CPUs, 8 GB RAM, 100 GB disk |
| k8s-node-03 | 192.168.110.26 | CentOS Linux release 7.9.2009 | 4 CPUs, 8 GB RAM, 100 GB disk |

- Disable the firewall and SELinux

[root@k8s-all ~]# systemctl disable --now firewalld.service
[root@k8s-all ~]# sed -ri 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
[root@k8s-all ~]# setenforce 0

- Configure hosts resolution on all nodes

[root@k8s-all ~]# cat >> /etc/hosts << EOF
192.168.110.21 k8s-master-01
192.168.110.22 k8s-master-02
192.168.110.23 k8s-master-03
192.168.110.24 k8s-node-01
192.168.110.25 k8s-node-02
192.168.110.26 k8s-node-03
EOF

- Generate a key on k8s-master-01 so that it can reach the other nodes without a password

[root@k8s-master-01 ~]# ssh-keygen -f ~/.ssh/id_rsa -N '' -q
[root@k8s-master-01 ~]# ssh-copy-id k8s-master-02
[root@k8s-master-01 ~]# ssh-copy-id k8s-master-03
[root@k8s-master-01 ~]# ssh-copy-id k8s-node-01
[root@k8s-master-01 ~]# ssh-copy-id k8s-node-02
[root@k8s-master-01 ~]# ssh-copy-id k8s-node-03

- Configure NTP time synchronization

[root@k8s-all ~]# sed -i '3,6 s/^/# /' /etc/chrony.conf
[root@k8s-all ~]# sed -i '6 a server ntp.aliyun.com iburst' /etc/chrony.conf
[root@k8s-all ~]# systemctl restart chronyd.service
[root@k8s-all ~]# chronyc sources
210 Number of sources = 1
MS Name/IP address         Stratum Poll Reach LastRx Last sample
===============================================================================
^* 203.107.6.88                  2   6    17    13   -230us[-2619us] +/-   25ms

- Disable the swap partition

[root@k8s-all ~]# swapoff -a                           # disable until the next reboot
[root@k8s-all ~]# sed -i 's/.*swap.*/# &/' /etc/fstab  # disable permanently
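The sed expression above comments out every line that mentions swap, including lines that are already commented, so running it twice stacks up comment markers. A possible variant, shown here as a sketch under the assumption that GNU sed is available, skips commented lines and only touches entries with swap as a whitespace-delimited field. The function name disable_swap_in_fstab is hypothetical.

```shell
#!/bin/sh
# Sketch only: disable_swap_in_fstab is an illustrative helper (GNU sed assumed).

disable_swap_in_fstab() {
  # $1: path to an fstab-style file, defaults to /etc/fstab.
  # Lines that already start with '#' are left untouched, so the
  # function can be run repeatedly without adding extra markers.
  sed -i -E '/^[[:space:]]*#/!s/^.*[[:space:]]swap[[:space:]].*$/# &/' "${1:-/etc/fstab}"
}
```

Trying it on a copy first, for example disable_swap_in_fstab /tmp/fstab.copy, makes it easy to inspect the result before editing the real file.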

- Upgrade the operating system kernel

[root@k8s-all ~]# wget -c http://mirrors.coreix.net/elrepo-archive-archive/kernel/el7/x86_64/RPMS/kernel-ml-6.0.3-1.el7.elrepo.x86_64.rpm

[root@k8s-all ~]# wget -c http://mirrors.coreix.net/elrepo-archive-archive/kernel/el7/x86_64/RPMS/kernel-ml-devel-6.0.3-1.el7.elrepo.x86_64.rpm

[root@k8s-all ~]# rpm -ivh kernel-ml-6.0.3-1.el7.elrepo.x86_64.rpm

[root@k8s-all ~]# rpm -ivh kernel-ml-devel-6.0.3-1.el7.elrepo.x86_64.rpm

[root@k8s-all ~]# awk -F\' '$1=="menuentry " {print $2}' /etc/grub2.cfg

CentOS Linux (6.0.3-1.el7.elrepo.x86_64) 7 (Core)

CentOS Linux (3.10.0-1160.119.1.el7.x86_64) 7 (Core)

CentOS Linux (3.10.0-1160.71.1.el7.x86_64) 7 (Core)

CentOS Linux (0-rescue-35f6b014eff0419881bbf71f1d9d4943) 7 (Core)

[root@k8s-all ~]# grub2-set-default 0

[root@k8s-all ~]# reboot

[root@k8s-all ~]# uname -r

6.0.3-1.el7.elrepo.x86_64

- Configure kernel forwarding and bridge filtering

[root@k8s-all ~]# echo net.ipv4.ip_forward = 1 >> /etc/sysctl.conf
[root@k8s-all ~]# sysctl -p
net.ipv4.ip_forward = 1
[root@K8s-all ~]# cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
vm.swappiness = 0
EOF
[root@K8s-all ~]# modprobe br_netfilter
[root@K8s-all ~]# sysctl -p /etc/sysctl.d/k8s.conf

- Enable IPVS

The heredoc delimiter is quoted so that the variables are written into the script literally instead of being expanded while the file is created.

[root@K8s-all ~]# yum install ipset ipvsadm -y
[root@K8s-all ~]# cat > /etc/sysconfig/modules/ipvs.modules << 'EOF'
#!/bin/bash
ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_vip ip_vs_sed ip_vs_ftp nf_conntrack"
for kernel_module in $ipvs_modules; do
    # Load the module only if it exists for the running kernel
    /sbin/modinfo -F filename $kernel_module >/dev/null 2>&1
    if [ $? -eq 0 ]; then
        /sbin/modprobe $kernel_module
    fi
done
EOF
[root@K8s-all ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules
[root@K8s-all ~]# bash /etc/sysconfig/modules/ipvs.modules

- Configure a mirror repository hosted in China

[root@K8s-all ~]# cat >> /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

- Install the packages

[root@K8s-all ~]# yum install kubeadm kubelet kubectl -y  # version 1.28.2 is installed here

[root@K8s-all ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"28", GitVersion:"v1.28.2", GitCommit:"89a4ea3e1e4ddd7f7572286090359983e0387b2f", GitTreeState:"clean", BuildDate:"2023-09-13T09:34:32Z", GoVersion:"go1.20.8", Compiler:"gc", Platform:"linux/amd64"}

# To keep the cgroup driver used by the container runtime consistent with the one used by kubelet, change the following file

[root@K8s-all ~]# cat <<EOF > /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

KUBE_PROXY_MODE="ipvs"

EOF

[root@K8s-all ~]# systemctl enable kubelet.service

- kubectl command auto-completion

[root@K8s-all ~]# yum install -y bash-completion
[root@K8s-all ~]# source /usr/share/bash-completion/bash_completion
[root@K8s-all ~]# source <(kubectl completion bash)
[root@K8s-all ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc

3.3 Install and run the container runtime

Install on all hosts.

Containerd installation

- Install the base tools

[root@K8s-all ~]# yum install yum-utils device-mapper-persistent-data lvm2 -y

- Download the Docker CE repository file

[root@K8s-all ~]# wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.huaweicloud.com/docker-ce/linux/centos/docker-ce.repo

- Replace the repository source

[root@K8s-all ~]# sed -i 's+download.docker.com+mirrors.huaweicloud.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo
[root@K8s-all ~]# sed -i 's/$releasever/7Server/g' /etc/yum.repos.d/docker-ce.repo

- Install Containerd

[root@K8s-all ~]# yum install containerd -y

- Initialize the default configuration

[root@K8s-all ~]# containerd config default | tee /etc/containerd/config.toml
[root@K8s-all ~]# sed -i "s#SystemdCgroup\ \=\ false#SystemdCgroup\ \=\ true#g" /etc/containerd/config.toml
[root@K8s-all ~]# sed -i "s#registry.k8s.io#registry.aliyuncs.com/google_containers#g" /etc/containerd/config.toml
# Configure crictl
[root@K8s-all ~]# cat <<EOF | tee /etc/crictl.yaml
runtime-endpoint: unix:///run/containerd/containerd.sock
image-endpoint: unix:///run/containerd/containerd.sock
timeout: 10
debug: false
EOF
[root@K8s-all ~]# systemctl daemon-reload
[root@K8s-all ~]# systemctl enable containerd --now

- Test

[root@K8s-node-all ~]# crictl pull nginx:alpine
Image is up to date for sha256:f4215f6ee683f29c0a4611b02d1adc3b7d986a96ab894eb5f7b9437c862c9499
[root@K8s-node-all ~]# crictl images
IMAGE                     TAG      IMAGE ID        SIZE
docker.io/library/nginx   alpine   f4215f6ee683f   20.5MB
[root@K8s-node-all ~]# crictl rmi nginx:alpine
Deleted: docker.io/library/nginx:alpine

3.4 High availability for the master nodes

Perform these steps only on the three masters.

- Install Nginx and Keepalived

[root@k8s-master-all ~]# wget -c https://nginx.org/packages/rhel/7/x86_64/RPMS/nginx-1.24.0-1.el7.ngx.x86_64.rpm
[root@k8s-master-all ~]# yum install nginx-1.24.0-1.el7.ngx.x86_64.rpm keepalived.x86_64 -y

- Modify the Nginx configuration file

On all three master nodes, edit /etc/nginx/nginx.conf and add the stream block after the events block:

[root@k8s-master-01 ~]# vim /etc/nginx/nginx.conf

events {
    worker_connections 1024;
}

stream {
    log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';
    access_log /var/log/nginx/k8s-access.log main;

    upstream k8s-apiserver {
        server 192.168.110.21:6443;
        server 192.168.110.22:6443;
        server 192.168.110.23:6443;
    }

    server {
        listen 16443;
        proxy_pass k8s-apiserver;
    }
}

- Modify the Keepalived configuration file

Do this only on k8s-master-01.

Overwrite the configuration file /etc/keepalived/keepalived.conf on k8s-master-01:

[root@k8s-master-01 ~]# cat > /etc/keepalived/keepalived.conf << EOF
! Configuration File for keepalived

global_defs {
    router_id master1
    script_user root
    enable_script_security
}

vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
    interval 3
    fall 3
    rise 2
}

vrrp_instance Nginx {
    state MASTER
    interface ens33
    virtual_router_id 51
    priority 200
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass XCZKXY
    }
    track_script {
        check_nginx
    }
    virtual_ipaddress {
        192.168.110.20/24
    }
}
EOF

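- Write the health check script

The heredoc delimiter is quoted so that the backticks and $pid are written into the script literally instead of being evaluated while the file is created. The script exits with a non-zero status when nginx cannot be restarted, so that keepalived treats the check as failed.

[root@k8s-master-01 ~]# cat > /etc/keepalived/check_nginx.sh << 'EOF'
#!/bin/sh
# nginx down
pid=`ps -C nginx --no-header | wc -l`
if [ $pid -eq 0 ]
then
    # Try to bring nginx back once before giving up
    systemctl start nginx
    sleep 5
    if [ `ps -C nginx --no-header | wc -l` -eq 0 ]
    then
        systemctl stop nginx
        # Report failure so keepalived can move the VIP to another node
        exit 1
    else
        exit 0
    fi
fi
EOF
[root@k8s-master-01 ~]# chmod +x /etc/keepalived/check_nginx.sh

[root@k8s-master-01 ~]# scp /etc/keepalived/{check_nginx.sh,keepalived.conf} k8s-master-02:/etc/keepalived/

[root@k8s-master-01 ~]# scp /etc/keepalived/{check_nginx.sh,keepalived.conf} k8s-master-03:/etc/keepalived/

After copying the configuration file and the script, k8s-master-02 and k8s-master-03 use the same configuration as above, except that state is changed to BACKUP and priority is lowered, for example to 150 on k8s-master-02 and 100 on k8s-master-03.

- Modify the other two masters

[root@k8s-master-02 ~]# sed -i 's/MASTER/BACKUP/' /etc/keepalived/keepalived.conf
[root@k8s-master-02 ~]# sed -i 's/200/150/' /etc/keepalived/keepalived.conf
[root@k8s-master-03 ~]# sed -i 's/MASTER/BACKUP/' /etc/keepalived/keepalived.conf
[root@k8s-master-03 ~]# sed -i 's/200/100/' /etc/keepalived/keepalived.conf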
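The plain substitutions above rewrite any line that happens to contain MASTER or 200. As a more targeted sketch, assuming GNU sed, the hypothetical helper make_backup_conf below anchors each substitution to the state and priority keywords so that other values in the file are left alone.

```shell
#!/bin/sh
# Sketch only: make_backup_conf is an illustrative helper (GNU sed assumed).

make_backup_conf() {
  # $1: path to keepalived.conf, $2: priority for this backup node.
  # Only the "state" and "priority" lines are rewritten.
  sed -i -E \
    -e 's/^([[:space:]]*state[[:space:]]+)MASTER/\1BACKUP/' \
    -e "s/^([[:space:]]*priority[[:space:]]+)[0-9]+/\\1$2/" \
    "$1"
}
```

On k8s-master-02 this could be called as make_backup_conf /etc/keepalived/keepalived.conf 150, and with 100 on k8s-master-03.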

- Start nginx and Keepalived

Run on all three masters.

[root@k8s-master-all ~]# systemctl enable nginx --now
[root@k8s-master-all ~]# systemctl enable keepalived --now

- Verify failover

[root@k8s-master-01 ~]# ip a | grep 192.168.110.20/24
    inet 192.168.110.20/24 scope global secondary ens33
# Simulate a Keepalived outage
[root@k8s-master-01 ~]# systemctl stop keepalived
[root@k8s-master-02 ~]# ip a | grep 192.168.110.20/24  # the VIP moves to master-02
    inet 192.168.110.20/24 scope global secondary ens33
[root@k8s-master-03 ~]# ip a | grep 192.168.110.20/24  # master-02 goes down
    inet 192.168.110.20/24 scope global secondary ens33
[root@k8s-master-03 ~]# ip a | grep 192.168.110.20/24  # the VIP moves to master-03
    inet 192.168.110.20/24 scope global secondary ens33
[root@k8s-master-01 ~]# systemctl start keepalived.service  # back to normal after recovery
[root@k8s-master-01 ~]# ip a | grep 192.168.110.20/24
    inet 192.168.110.20/24 scope global secondary ens33
[root@k8s-all ~]# ping -c 2 192.168.110.20  # make sure the VIP is reachable inside the cluster
PING 192.168.110.20 (192.168.110.20) 56(84) bytes of data.
64 bytes from 192.168.110.20: icmp_seq=1 ttl=64 time=1.03 ms
64 bytes from 192.168.110.20: icmp_seq=2 ttl=64 time=2.22 ms

--- 192.168.110.20 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1018ms
rtt min/avg/max/mdev = 1.034/1.627/2.220/0.593 ms
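When repeating the failover check by hand, a small helper can make the grep less error-prone, since an unescaped pattern such as 192.168.110.20 also matches addresses like 192.168.110.200. The sketch below is one possible way to do it; holds_vip is a hypothetical name, and it simply reads ip-style output on standard input.

```shell
#!/bin/sh
# Sketch only: holds_vip is an illustrative helper.

holds_vip() {
  # $1: the virtual IP without prefix length.
  # Reads "ip -4 addr show" style text on stdin and succeeds only if an
  # "inet <vip>/<prefix>" entry is present. Dots are escaped so they
  # match literally.
  escaped=$(printf '%s' "$1" | sed 's/\./\\./g')
  grep -Eq "inet[[:space:]]+${escaped}/"
}
```

A typical call on a master node would be ip -4 addr show | holds_vip 192.168.110.20 && echo "VIP is on this node".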

3.5 Initialize the cluster

Run only on master01.

# Create the initialization file kubeadm-init.yaml
[root@k8s-master-01 ~]# kubeadm config print init-defaults > kubeadm-init.yaml
[root@k8s-master-01 ~]# sed -i 's/1.2.3.4/192.168.110.21/' kubeadm-init.yaml  # set the advertise address to this master host
[root@k8s-master-01 ~]# sed -i 's/: node/: k8s-master-01/' kubeadm-init.yaml  # set the node name
[root@k8s-master-01 ~]# sed -i '24 a controlPlaneEndpoint: 192.168.110.20:16443' kubeadm-init.yaml  # add the virtual IP
[root@k8s-master-01 ~]# sed -i 's#registry.k8s.io#registry.aliyuncs.com/google_containers#' kubeadm-init.yaml  # switch the image repository
[root@k8s-master-01 ~]# sed -i 's/1.28.0/1.28.2/' kubeadm-init.yaml  # match the installed version
[root@k8s-master-01 ~]# cat >> kubeadm-init.yaml << EOF
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF

Note: if Docker is used as the container runtime, change the socket file to unix:///var/run/cri-dockerd.sock
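Inserting controlPlaneEndpoint after a fixed line number depends on the exact layout that kubeadm config print init-defaults produces, which can differ between releases. A pattern-based insert is one way to avoid that dependency. The sketch below assumes GNU sed and a config file that contains a top-level kind: ClusterConfiguration line; the function name set_control_plane_endpoint is hypothetical.

```shell
#!/bin/sh
# Sketch only: set_control_plane_endpoint is an illustrative helper (GNU sed assumed).

set_control_plane_endpoint() {
  # $1: kubeadm config file, $2: endpoint as host:port.
  # Do nothing if the key is already present, so re-running is harmless.
  grep -q '^controlPlaneEndpoint:' "$1" && return 0
  # Append the key right after the ClusterConfiguration kind line,
  # which keeps it at the top level of that document.
  sed -i "/^kind: ClusterConfiguration/a controlPlaneEndpoint: $2" "$1"
}
```

For this environment the call would be set_control_plane_endpoint kubeadm-init.yaml 192.168.110.20:16443.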

- Initialize k8s with kubeadm from the configuration file

[root@k8s-master-01 ~]# kubeadm init --config=kubeadm-init.yaml --upload-certs --v=6 --ignore-preflight-errors="FileContent--proc-sys-net-bridge-bridge-nf-call-iptables"  # ignore the bridge-nf-call-iptables preflight error here

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join 192.168.110.20:16443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:28d3d73a81af5468649d287d7269a3c3eca9aa3990c3897f4db04aac805de38a \
    --control-plane --certificate-key 6f7027ad52defa430f33c5d25e7a0d4c1a96f3c316bb58d564e04f04330e6fb5

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.110.20:16443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:28d3d73a81af5468649d287d7269a3c3eca9aa3990c3897f4db04aac805de38a

On k8s-master-01, run the following commands to copy the config file generated during initialization for kubectl. This file records the address of the API Server, so kubectl commands run afterwards can connect to the API Server directly.

[root@k8s-master-01 ~]# mkdir -p $HOME/.kube
[root@k8s-master-01 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
[root@k8s-master-01 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

3.6 Join the other master nodes

- Create the directory for certificates on k8s-master-02 and k8s-master-03

[root@k8s-master-02 ~]# mkdir -p /etc/kubernetes/pki/etcd
[root@k8s-master-03 ~]# mkdir -p /etc/kubernetes/pki/etcd

- Copy the certificates to k8s-master-02 and k8s-master-03

[root@k8s-master-01 ~]# scp /etc/kubernetes/pki/ca.* root@k8s-master-02:/etc/kubernetes/pki/
[root@k8s-master-01 ~]# scp /etc/kubernetes/pki/ca.* root@k8s-master-03:/etc/kubernetes/pki/
[root@k8s-master-01 ~]# scp /etc/kubernetes/pki/sa.* root@k8s-master-02:/etc/kubernetes/pki/
[root@k8s-master-01 ~]# scp /etc/kubernetes/pki/sa.* root@k8s-master-03:/etc/kubernetes/pki/
[root@k8s-master-01 ~]# scp /etc/kubernetes/pki/front-proxy-ca.* root@k8s-master-02:/etc/kubernetes/pki/
[root@k8s-master-01 ~]# scp /etc/kubernetes/pki/front-proxy-ca.* root@k8s-master-03:/etc/kubernetes/pki/
[root@k8s-master-01 ~]# scp /etc/kubernetes/pki/etcd/ca.* root@k8s-master-02:/etc/kubernetes/pki/etcd/
[root@k8s-master-01 ~]# scp /etc/kubernetes/pki/etcd/ca.* root@k8s-master-03:/etc/kubernetes/pki/etcd/
# Both master and worker nodes need this file
[root@k8s-master-01 ~]# scp /etc/kubernetes/admin.conf k8s-master-02:/etc/kubernetes/
[root@k8s-master-01 ~]# scp /etc/kubernetes/admin.conf k8s-master-03:/etc/kubernetes/
[root@k8s-master-01 ~]# scp /etc/kubernetes/admin.conf k8s-node-01:/etc/kubernetes/
[root@k8s-master-01 ~]# scp /etc/kubernetes/admin.conf k8s-node-02:/etc/kubernetes/
[root@k8s-master-01 ~]# scp /etc/kubernetes/admin.conf k8s-node-03:/etc/kubernetes/

- Join the other master nodes to the cluster

[root@k8s-master-02 ~]# kubeadm join 192.168.110.20:16443 --token abcdef.0123456789abcdef \
  --discovery-token-ca-cert-hash sha256:28d3d73a81af5468649d287d7269a3c3eca9aa3990c3897f4db04aac805de38a \
  --control-plane --certificate-key 6f7027ad52defa430f33c5d25e7a0d4c1a96f3c316bb58d564e04f04330e6fb5 \
  --ignore-preflight-errors="FileContent--proc-sys-net-bridge-bridge-nf-call-iptables,FileContent--proc-sys-net-ipv4-ip_forward"

To start administering your cluster from this node, you need to run the following as a regular user:

        mkdir -p $HOME/.kube
        sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
        sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

[root@k8s-master-03 ~]# kubeadm join 192.168.110.20:16443 --token abcdef.0123456789abcdef \
  --discovery-token-ca-cert-hash sha256:28d3d73a81af5468649d287d7269a3c3eca9aa3990c3897f4db04aac805de38a \
  --control-plane --certificate-key 6f7027ad52defa430f33c5d25e7a0d4c1a96f3c316bb58d564e04f04330e6fb5 \
  --ignore-preflight-errors="FileContent--proc-sys-net-bridge-bridge-nf-call-iptables,FileContent--proc-sys-net-ipv4-ip_forward"

To start administering your cluster from this node, you need to run the following as a regular user:

        mkdir -p $HOME/.kube
        sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
        sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

- On k8s-master-02 and k8s-master-03, run the following commands to copy the kubeconfig file

[root@k8s-master-02 ~]# mkdir -p $HOME/.kube
[root@k8s-master-02 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
[root@k8s-master-02 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config
[root@k8s-master-03 ~]# mkdir -p $HOME/.kube
[root@k8s-master-03 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
[root@k8s-master-03 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

3.7 Join the worker nodes to the cluster

Note: if Docker is the container runtime, add --cri-socket unix:///var/run/cri-dockerd.sock

[root@k8s-node-all ~]# kubeadm join 192.168.110.20:16443 --token abcdef.0123456789abcdef \
  --discovery-token-ca-cert-hash sha256:28d3d73a81af5468649d287d7269a3c3eca9aa3990c3897f4db04aac805de38a \
  --ignore-preflight-errors="FileContent--proc-sys-net-bridge-bridge-nf-call-iptables,FileContent--proc-sys-net-ipv4-ip_forward"
# List the nodes
[root@k8s-master-01 ~]# kubectl get nodes
NAME            STATUS     ROLES           AGE     VERSION
k8s-master-01   NotReady   control-plane   7m19s   v1.28.2
k8s-master-02   NotReady   control-plane   80s     v1.28.2
k8s-master-03   NotReady   control-plane   2m1s    v1.28.2
k8s-node-01     NotReady   <none>          13s     v1.28.2
k8s-node-02     NotReady   <none>          13s     v1.28.2
k8s-node-03     NotReady   <none>          13s     v1.28.2

3.8 Install the cluster network plugin

Run only on master01.

[root@k8s-master-01 ~]# wget -c https://gitee.com/kong-xiangyuxcz/svn/releases/download/V3.25.0/calico.yaml
[root@k8s-master-01 ~]# kubectl apply -f calico.yaml
[root@k8s-master-01 ~]# kubectl get node
NAME            STATUS   ROLES           AGE     VERSION
k8s-master-01   Ready    control-plane   14m     v1.28.2
k8s-master-02   Ready    control-plane   10m     v1.28.2
k8s-master-03   Ready    control-plane   10m     v1.28.2
k8s-node-01     Ready    <none>          9m50s   v1.28.2
k8s-node-02     Ready    <none>          9m50s   v1.28.2
k8s-node-03     Ready    <none>          9m50s   v1.28.2
[root@k8s-master-01 ~]# kubectl get pod -n kube-system
NAME                                       READY   STATUS    RESTARTS   AGE
calico-kube-controllers-658d97c59c-8mw6g   1/1     Running   0          5m47s
calico-node-5hmzv                          1/1     Running   0          5m47s
calico-node-5qpwt                          1/1     Running   0          5m47s
calico-node-9r72w                          1/1     Running   0          5m47s
calico-node-xgds5                          1/1     Running   0          5m47s
calico-node-z56q4                          1/1     Running   0          5m47s
calico-node-zcrrw                          1/1     Running   0          5m47s
coredns-66f779496c-9jldk                   1/1     Running   0          13m
coredns-66f779496c-dxsgv                   1/1     Running   0          13m
etcd-k8s-master-01                         1/1     Running   0          13m
etcd-k8s-master-02                         1/1     Running   0          9m33s
etcd-k8s-master-03                         1/1     Running   0          9m39s
kube-apiserver-k8s-master-01               1/1     Running   0          13m
kube-apiserver-k8s-master-02               1/1     Running   0          9m48s
kube-apiserver-k8s-master-03               1/1     Running   0          9m39s
kube-controller-manager-k8s-master-01      1/1     Running   0          13m
kube-controller-manager-k8s-master-02      1/1     Running   0          9m49s
kube-controller-manager-k8s-master-03      1/1     Running   0          9m36s
kube-proxy-565m4                           1/1     Running   0          8m56s
kube-proxy-hfk4w                           1/1     Running   0          8m56s
kube-proxy-n94sx                           1/1     Running   0          8m56s
kube-proxy-s75md                           1/1     Running   0          9m49s
kube-proxy-t5xl2                           1/1     Running   0          9m48s
kube-proxy-vqkxk                           1/1     Running   0          13m
kube-scheduler-k8s-master-01               1/1     Running   0          13m
kube-scheduler-k8s-master-02               1/1     Running   0          9m48s
kube-scheduler-k8s-master-03               1/1     Running   0          9m39s
# If the images cannot be pulled automatically, try pulling them manually
[root@k8s-master-01 ~]# crictl pull docker.io/calico/cni:v3.25.0
[root@k8s-master-01 ~]# crictl pull docker.io/calico/node:v3.25.0
[root@k8s-master-01 ~]# crictl pull docker.io/calico/kube-controllers:v3.25.0

3.9 Verify the etcd cluster

- Download the etcdctl client tool

[root@k8s-master-01 ~]# wget -c https://github.com/etcd-io/etcd/releases/download/v3.4.29/etcd-v3.4.29-linux-amd64.tar.gz
[root@k8s-master-01 ~]# tar xf etcd-v3.4.29-linux-amd64.tar.gz -C /usr/local/src/
[root@k8s-master-01 ~]# mv /usr/local/src/etcd-v3.4.29-linux-amd64/etcdctl /usr/local/bin/

- Check the health of the etcd cluster

[root@k8s-master-01 ~]# ETCDCTL_API=3 etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --write-out=table --endpoints=k8s-master-01:2379,k8s-master-02:2379,k8s-master-03:2379 endpoint health
+--------------------+--------+-------------+-------+
|      ENDPOINT      | HEALTH |    TOOK     | ERROR |
+--------------------+--------+-------------+-------+
| k8s-master-01:2379 |   true | 22.678298ms |       |
| k8s-master-02:2379 |   true | 22.823849ms |       |
| k8s-master-03:2379 |   true | 28.292332ms |       |
+--------------------+--------+-------------+-------+

- List the etcd cluster members

[root@k8s-master-01 ~]# ETCDCTL_API=3 etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --write-out=table --endpoints=k8s-master-01:2379,k8s-master-02:2379,k8s-master-03:2379 member list
+------------------+---------+---------------+-----------------------------+-----------------------------+------------+
|        ID        | STATUS  |     NAME      |         PEER ADDRS          |        CLIENT ADDRS         | IS LEARNER |
+------------------+---------+---------------+-----------------------------+-----------------------------+------------+
| 409450d991a8d0ba | started | k8s-master-03 | https://192.168.110.23:2380 | https://192.168.110.23:2379 |      false |
| a1a70c91a1d895bf | started | k8s-master-01 | https://192.168.110.21:2380 | https://192.168.110.21:2379 |      false |
| cc2c3b0e11f3279a | started | k8s-master-02 | https://192.168.110.22:2380 | https://192.168.110.22:2379 |      false |
+------------------+---------+---------------+-----------------------------+-----------------------------+------------+

- Check the leader status of the etcd cluster

[root@k8s-master-01 ~]# ETCDCTL_API=3 etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --write-out=table --endpoints=k8s-master-01:2379,k8s-master-02:2379,k8s-master-03:2379 endpoint status
+--------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
|      ENDPOINT      |        ID        | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
+--------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| k8s-master-01:2379 | a1a70c91a1d895bf |    1..9 |  5.1 MB |      true |      false |         3 |       5124 |               5124 |        |
| k8s-master-02:2379 | cc2c3b0e11f3279a |    1..9 |  5.1 MB |     false |      false |         3 |       5124 |               5124 |        |
| k8s-master-03:2379 | 409450d991a8d0ba |    1..9 |  5.1 MB |     false |      false |         3 |       5124 |               5124 |        |
+--------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
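To pick the current leader out of the endpoint status table without reading it by eye, the table text can be filtered with awk. This is only a sketch that relies on the column order shown above (IS LEADER as the fifth column); etcd_leader is a hypothetical helper name, and parsing the JSON output format would likely be more robust if a JSON tool is available.

```shell
#!/bin/sh
# Sketch only: etcd_leader is an illustrative helper that parses the
# table layout shown in this article.

etcd_leader() {
  # Reads "etcdctl ... --write-out=table endpoint status" output on stdin.
  # With '|' as the separator, field 2 is ENDPOINT and field 6 is IS LEADER.
  awk -F'|' '$6 ~ /true/ { gsub(/[[:space:]]/, "", $2); print $2 }'
}
```

The etcdctl endpoint status command from this section can be piped into etcd_leader to print only the leader's endpoint.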

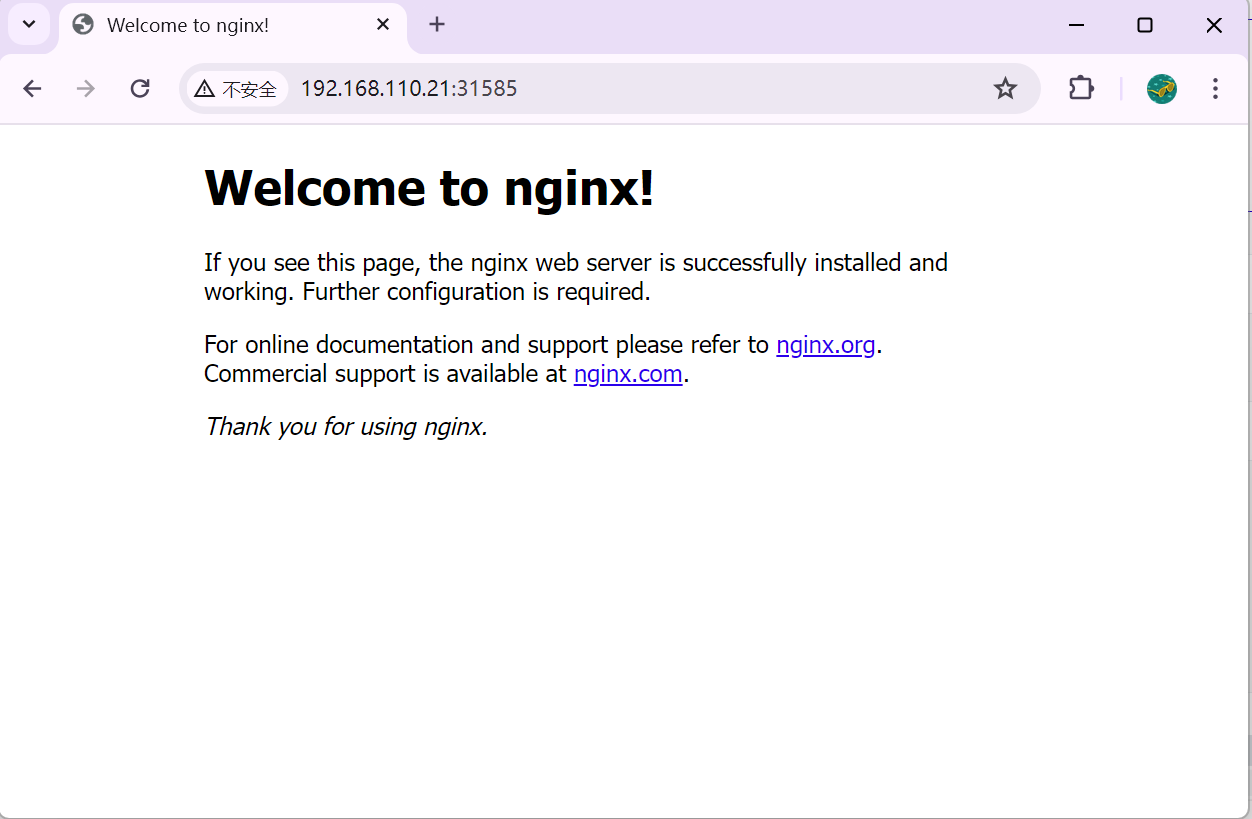

3.10 Deploy an application and verify

[root@k8s-master-01 ~]# kubectl create deployment nginx --image=nginx
deployment.apps/nginx created
[root@k8s-master-01 ~]# kubectl expose deployment nginx --port=80 --type=NodePort
service/nginx exposed
[root@k8s-master-01 ~]# kubectl get pod,svc
NAME                         READY   STATUS    RESTARTS   AGE
pod/nginx-7854ff8877-7wxc8   1/1     Running   0          73s

NAME                 TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
service/kubernetes   ClusterIP   10.96.0.1      <none>        443/TCP        28m
service/nginx        NodePort    10.102.85.72   <none>        80:31585/TCP   72s
