This article covers: YOLOv8 Improvement | Module Stitching | Fusing REPVGGOREPA into C2f to Improve Detection Performance [Detailed Steps, Complete Code].

💡💡💡 All programs in this column have been tested and run successfully 💡💡💡

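Structural re-parameterization is receiving growing attention in computer vision because it improves deep-learning models without adding inference cost. This article presents a method that fuses REPVGGOREPA (RepVGG-style blocks built with OREPA) into C2f. OREPA lowers training cost by squeezing the complex training-time block into a single convolution, which significantly reduces memory consumption and speeds up training. After explaining the main principle, the article walks step by step through adding and modifying the module code. The complete modified code is given at the end so you can run it in one go, and even beginners can follow along. The goal is to help you better learn the YOLO family of deep-learning object detectors.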

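Contents

1. Principle

2. Adding C2f_REPVGGOREPA to the YOLOv8 network

2.1 C2f_REPVGGOREPA code implementation

2.2 Code walkthrough of the C2f_REPVGGOREPA module

2.3 Modify the __init__.py file

2.4 Add the yaml file

2.5 Register the module

2.6 Run the program

3. Complete code

4. GFLOPs

5. Advanced

6. Summary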

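1. Principle

Paper: Online Convolutional Re-parameterization

Official code: official code repository

REPVGG and OREPA both apply structural re-parameterization; OREPA additionally optimizes the training side of it. The main principles of each are explained below:

1. Principle of REPVGG

REPVGG is a VGG-style convolutional neural network built on structural re-parameterization. During training, each block uses a multi-branch structure (a 3×3 convolution, a 1×1 convolution and an identity branch, each followed by Batch Normalization) to increase representational power. For inference, these branches are merged into a single 3×3 convolution, giving a plain VGG-like block that keeps the accuracy while running faster. The extra cost is paid only during training: at inference it is "folded" into the simple structure, so inference stays efficient.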
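The folding step can be checked numerically. The following minimal sketch is not code from the article. It uses NumPy so it runs without PyTorch, and it needs NumPy 1.20+ for `sliding_window_view`. It builds the three RepVGG training branches with random weights and evaluates each BN in inference mode. It then compares their sum with a single 3×3 convolution whose kernel and bias come from folding every BN into its kernel. That folding uses the same formula as `transI_fusebn` in the article's code. Helper names such as `conv2d` and `fuse_bn` are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)
N, C, H, W = 2, 4, 5, 5  # in_channels == out_channels and stride 1, so the identity branch exists


def conv2d(x, w, b=None, pad=0):
    """Naive stride-1 cross-correlation on NCHW arrays (what nn.Conv2d computes)."""
    x = np.pad(x, ((0, 0), (0, 0), (pad, pad), (pad, pad)))
    win = np.lib.stride_tricks.sliding_window_view(x, w.shape[2:], axis=(2, 3))
    out = np.einsum('nchwij,ocij->nohw', win, w)
    return out if b is None else out + b.reshape(1, -1, 1, 1)


def random_bn(c):
    """Random inference-mode BN parameters: gamma, beta, running_mean, running_var."""
    return rng.normal(size=c), rng.normal(size=c), rng.normal(size=c), rng.uniform(0.5, 2.0, size=c)


def bn_eval(y, gamma, beta, mean, var, eps=1e-5):
    """BatchNorm with fixed running statistics (eval mode)."""
    r = lambda a: a.reshape(1, -1, 1, 1)
    return (y - r(mean)) / r(np.sqrt(var + eps)) * r(gamma) + r(beta)


def fuse_bn(kernel, gamma, beta, mean, var, eps=1e-5):
    """Fold BN into the preceding kernel: same algebra as transI_fusebn."""
    std = np.sqrt(var + eps)
    return kernel * (gamma / std).reshape(-1, 1, 1, 1), beta - mean * gamma / std


x = rng.normal(size=(N, C, H, W))
w3 = rng.normal(size=(C, C, 3, 3))
w1 = rng.normal(size=(C, C, 1, 1))
bn3, bn1, bn_id = random_bn(C), random_bn(C), random_bn(C)

# Training-time structure: three parallel branches, summed
y_train = (bn_eval(conv2d(x, w3, pad=1), *bn3)
           + bn_eval(conv2d(x, w1), *bn1)
           + bn_eval(x, *bn_id))

# Inference-time structure: one 3x3 conv with a bias
k3, b3 = fuse_bn(w3, *bn3)
k1, b1 = fuse_bn(np.pad(w1, ((0, 0), (0, 0), (1, 1), (1, 1))), *bn1)  # 1x1 kernel zero-padded to 3x3
identity = np.zeros((C, C, 3, 3))
identity[np.arange(C), np.arange(C), 1, 1] = 1.0  # identity mapping written as a 3x3 kernel
kid, bid = fuse_bn(identity, *bn_id)
y_deploy = conv2d(x, k3 + k1 + kid, b3 + b1 + bid, pad=1)

max_err = np.abs(y_train - y_deploy).max()
print(f"max |train - deploy| = {max_err:.2e}")
```

The equivalence holds only for inference-mode BN with fixed running statistics. During training, BN uses batch statistics, which is why RepVGG keeps the branches separate until training is finished.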

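2. Principle of OREPA

OREPA (Online Convolutional Re-parameterization) improves on conventional structural re-parameterization. It makes training more efficient in two ways:

- Online re-parameterization: OREPA removes the normalization layers (Batch Normalization) inside the training-time block, which are non-linear during training because they depend on batch statistics, and replaces them with linear scaling layers. This keeps the diverse optimization directions of the multiple branches while making the whole block linear, so it can be simplified online, during training.

- Block squeezing: once linearized, the block can be squeezed into a single convolution kernel during training, which substantially reduces the compute and memory overhead of training. OREPA therefore trains faster and uses less GPU memory while keeping high accuracy.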
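OREPA can squeeze the block during training because every branch becomes linear once BN is replaced by a per-channel scale. The NumPy sketch below is illustrative: the names are made up, and it covers three of OREPA's six branches. It first runs each branch on the feature map and sums the results. It then compares that sum with a single convolution whose kernel is built from the weights alone, using the same einsum patterns as OREPA's `weight_gen`: `'ti,othw->oihw'`, `'oi,hw->oihw'` and `'oihw,o->oihw'`. The average-pooling branch is modeled as a 1×1 convolution followed by k×k average pooling, with zero padding counted in the mean. That modeling is an assumption made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
N, C_in, C_out, T, k, H = 2, 3, 4, 5, 3, 6  # T: internal channels of the 1x1 -> kxk branch


def conv2d(x, w, pad=0):
    """Naive stride-1 cross-correlation on NCHW arrays, no bias."""
    x = np.pad(x, ((0, 0), (0, 0), (pad, pad), (pad, pad)))
    win = np.lib.stride_tricks.sliding_window_view(x, w.shape[2:], axis=(2, 3))
    return np.einsum('nchwij,ocij->nohw', win, w)


def avg_pool(x, k, pad):
    """k x k average pooling, stride 1, zero padding counted in the mean."""
    x = np.pad(x, ((0, 0), (0, 0), (pad, pad), (pad, pad)))
    return np.lib.stride_tricks.sliding_window_view(x, (k, k), axis=(2, 3)).mean(axis=(-2, -1))


def scale(y, v):
    """Per-channel linear scaling layer (stands in for the removed BN)."""
    return y * v.reshape(1, -1, 1, 1)


x = rng.normal(size=(N, C_in, H, H))
w_origin = rng.normal(size=(C_out, C_in, k, k))
w_1x1 = rng.normal(size=(T, C_in, 1, 1))
w_kxk = rng.normal(size=(C_out, T, k, k))
w_avg = rng.normal(size=(C_out, C_in, 1, 1))
vector = rng.normal(size=(3, C_out))  # one row of scaling factors per branch

# (a) Run every linear branch on the feature map, then sum
y_branches = (scale(conv2d(x, w_origin, pad=1), vector[0])
              + scale(conv2d(conv2d(x, w_1x1), w_kxk, pad=1), vector[1])
              + scale(avg_pool(conv2d(x, w_avg), k, pad=1), vector[2]))

# (b) Block squeezing: merge the branch weights first, then run a single convolution
K = (np.einsum('oihw,o->oihw', w_origin, vector[0])
     + np.einsum('oihw,o->oihw', np.einsum('ti,othw->oihw', w_1x1[:, :, 0, 0], w_kxk), vector[1])
     + np.einsum('oihw,o->oihw',
                 np.einsum('oi,hw->oihw', w_avg[:, :, 0, 0], np.full((k, k), 1.0 / k ** 2)), vector[2]))
y_squeezed = conv2d(x, K, pad=1)

max_err = np.abs(y_branches - y_squeezed).max()
print(f"kernel shape {K.shape}, max |branches - squeezed| = {max_err:.2e}")
```

Both paths give the same tensor before the single BN that OREPA keeps after the merged convolution, so the outputs after BN match as well. Gradients still reach every branch weight through the kernel construction, so each branch is trained, while only one convolution touches the feature map.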

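Main differences

- Structural design: REPVGG's multi-branch structure adds considerable computational complexity during training, whereas OREPA removes the non-linear normalization layers and introduces linear scaling layers, which greatly reduces the training-time compute.

- Training cost: REPVGG is more expensive to train, while OREPA significantly lowers training cost through online re-parameterization and block squeezing.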
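A rough way to see where the training savings come from is to count forward multiply-accumulates (MACs). In this pure-Python sketch, all helper names are hypothetical. The multi-branch version runs each branch on the feature map. The squeezed version first merges the weights, a cost independent of feature-map size and batch size, and then runs one convolution. Only forward convolution MACs for three linear branches are counted. BN, scaling, pooling, the backward pass and activation memory are all ignored, so treat the ratios as indicative only.

```python
def conv_macs(c_in, c_out, k, h, w, batch=1):
    """Multiply-accumulates of a stride-1, same-padded k x k convolution on a batch of h x w maps."""
    return batch * c_in * c_out * k * k * h * w


def multi_branch_macs(c, t, k, h, w, batch):
    """Forward MACs when each linear branch runs on the feature map (origin kxk, 1x1 -> kxk, 1x1 -> avg)."""
    origin = conv_macs(c, c, k, h, w, batch)
    seq = conv_macs(c, t, 1, h, w, batch) + conv_macs(t, c, k, h, w, batch)
    avg = conv_macs(c, c, 1, h, w, batch)  # the pooling itself is ignored here
    return origin + seq + avg


def squeezed_macs(c, t, k, h, w, batch):
    """Forward MACs when the branch weights are merged first and one kxk conv runs on the feature map."""
    merge = t * c * c * k * k + c * c * k * k  # 'ti,othw->oihw' plus the uniform avg kernel; no h, w or batch term
    return merge + conv_macs(c, c, k, h, w, batch)


for size in (20, 40, 80):  # P5, P4, P3 feature-map sizes for a 640x640 input
    m = multi_branch_macs(64, 64, 3, size, size, batch=16)
    s = squeezed_macs(64, 64, 3, size, size, batch=16)
    print(f"{size}x{size}: multi-branch {m / 1e9:.2f} GMACs, squeezed {s / 1e9:.2f} GMACs, ratio {m / s:.2f}")
```

The merge cost is paid once per step, whereas every branch in the multi-branch version scales with batch size and resolution. This is the intuition behind OREPA's lower training cost.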

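In summary, OREPA makes the training side of structural re-parameterization more efficient while keeping the efficient inference, and it can deliver consistent performance gains across a variety of computer-vision tasks.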

2. Adding C2f_REPVGGOREPA to the YOLOv8 network

2.1 C2f_REPVGGOREPA code implementation

Key step 1: paste the code below into /ultralytics/ultralytics/nn/modules/block.py and add "C2f_REPVGGOREPA" to the __all__ of that file.

import torch, math
import torch.nn as nn
import torch.nn.init as init
import torch.nn.functional as F
import numpy as np

# Conv, autopad, Bottleneck, C3 and C2f are used below and are expected to be available in block.py
# (Bottleneck, C3 and C2f are defined there; Conv comes from .conv). If autopad is not imported
# in your version of block.py, add: from .conv import autopad


class SEAttention(nn.Module):
    def __init__(self, channel=512, reduction=16):
        super().__init__()
        self.avg_pool = nn.AdaptiveAvgPool2d(1)
        self.fc = nn.Sequential(
            nn.Linear(channel, channel // reduction, bias=False),
            nn.ReLU(inplace=True),
            nn.Linear(channel // reduction, channel, bias=False),
            nn.Sigmoid()
        )

    def init_weights(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                init.kaiming_normal_(m.weight, mode='fan_out')
                if m.bias is not None:
                    init.constant_(m.bias, 0)
            elif isinstance(m, nn.BatchNorm2d):
                init.constant_(m.weight, 1)
                init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):
                init.normal_(m.weight, std=0.001)
                if m.bias is not None:
                    init.constant_(m.bias, 0)

    def forward(self, x):
        b, c, _, _ = x.size()
        y = self.avg_pool(x).view(b, c)
        y = self.fc(y).view(b, c, 1, 1)
        return x * y.expand_as(x)


def transI_fusebn(kernel, bn):
    # Fold a BatchNorm layer into the preceding convolution kernel; returns (kernel, bias)
    gamma = bn.weight
    std = (bn.running_var + bn.eps).sqrt()
    return kernel * ((gamma / std).reshape(-1, 1, 1, 1)), bn.bias - bn.running_mean * gamma / std


def transVI_multiscale(kernel, target_kernel_size):
    # Zero-pad a smaller kernel (e.g. 1x1) to target_kernel_size x target_kernel_size
    H_pixels_to_pad = (target_kernel_size - kernel.size(2)) // 2
    W_pixels_to_pad = (target_kernel_size - kernel.size(3)) // 2
    return F.pad(kernel, [W_pixels_to_pad, W_pixels_to_pad, H_pixels_to_pad, H_pixels_to_pad])


class OREPA(nn.Module):
    def __init__(self, in_channels, out_channels, kernel_size=3, stride=1, padding=None, groups=1, dilation=1, act=True,
                 internal_channels_1x1_3x3=None, deploy=False, single_init=False, weight_only=False,
                 init_hyper_para=1.0, init_hyper_gamma=1.0):
        super(OREPA, self).__init__()
        self.deploy = deploy
        self.nonlinear = Conv.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()
        self.weight_only = weight_only

        self.kernel_size = kernel_size
        self.in_channels = in_channels
        self.out_channels = out_channels
        self.groups = groups

        self.stride = stride
        padding = autopad(kernel_size, padding, dilation)
        self.padding = padding
        self.dilation = dilation

        if deploy:
            self.orepa_reparam = nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=kernel_size,
                                           stride=stride, padding=padding, dilation=dilation, groups=groups, bias=True)
        else:
            self.branch_counter = 0

            self.weight_orepa_origin = nn.Parameter(
                torch.Tensor(out_channels, int(in_channels / self.groups), kernel_size, kernel_size))
            init.kaiming_uniform_(self.weight_orepa_origin, a=math.sqrt(0.0))
            self.branch_counter += 1

            self.weight_orepa_avg_conv = nn.Parameter(torch.Tensor(out_channels, int(in_channels / self.groups), 1, 1))
            self.weight_orepa_pfir_conv = nn.Parameter(torch.Tensor(out_channels, int(in_channels / self.groups), 1, 1))
            init.kaiming_uniform_(self.weight_orepa_avg_conv, a=0.0)
            init.kaiming_uniform_(self.weight_orepa_pfir_conv, a=0.0)
            self.register_buffer('weight_orepa_avg_avg',
                                 torch.ones(kernel_size, kernel_size).mul(1.0 / kernel_size / kernel_size))
            self.branch_counter += 1
            self.branch_counter += 1

            self.weight_orepa_1x1 = nn.Parameter(torch.Tensor(out_channels, int(in_channels / self.groups), 1, 1))
            init.kaiming_uniform_(self.weight_orepa_1x1, a=0.0)
            self.branch_counter += 1

            if internal_channels_1x1_3x3 is None:
                internal_channels_1x1_3x3 = in_channels if groups <= 4 else 2 * in_channels

            if internal_channels_1x1_3x3 == in_channels:
                self.weight_orepa_1x1_kxk_idconv1 = nn.Parameter(
                    torch.zeros(in_channels, int(in_channels / self.groups), 1, 1))
                id_value = np.zeros((in_channels, int(in_channels / self.groups), 1, 1))
                for i in range(in_channels):
                    id_value[i, i % int(in_channels / self.groups), 0, 0] = 1
                id_tensor = torch.from_numpy(id_value).type_as(self.weight_orepa_1x1_kxk_idconv1)
                self.register_buffer('id_tensor', id_tensor)
            else:
                self.weight_orepa_1x1_kxk_idconv1 = nn.Parameter(
                    torch.zeros(internal_channels_1x1_3x3, int(in_channels / self.groups), 1, 1))
                id_value = np.zeros((internal_channels_1x1_3x3, int(in_channels / self.groups), 1, 1))
                for i in range(internal_channels_1x1_3x3):
                    id_value[i, i % int(in_channels / self.groups), 0, 0] = 1
                id_tensor = torch.from_numpy(id_value).type_as(self.weight_orepa_1x1_kxk_idconv1)
                self.register_buffer('id_tensor', id_tensor)
                # init.kaiming_uniform_(self.weight_orepa_1x1_kxk_conv1, a=math.sqrt(0.0))
            self.weight_orepa_1x1_kxk_conv2 = nn.Parameter(
                torch.Tensor(out_channels, int(internal_channels_1x1_3x3 / self.groups), kernel_size, kernel_size))
            init.kaiming_uniform_(self.weight_orepa_1x1_kxk_conv2, a=math.sqrt(0.0))
            self.branch_counter += 1

            expand_ratio = 8
            self.weight_orepa_gconv_dw = nn.Parameter(torch.Tensor(in_channels * expand_ratio, 1, kernel_size, kernel_size))
            self.weight_orepa_gconv_pw = nn.Parameter(
                torch.Tensor(out_channels, int(in_channels * expand_ratio / self.groups), 1, 1))
            init.kaiming_uniform_(self.weight_orepa_gconv_dw, a=math.sqrt(0.0))
            init.kaiming_uniform_(self.weight_orepa_gconv_pw, a=math.sqrt(0.0))
            self.branch_counter += 1

            # Per-branch, per-output-channel linear scaling factors (replace the per-branch BN)
            self.vector = nn.Parameter(torch.Tensor(self.branch_counter, self.out_channels))
            if weight_only is False:
                self.bn = nn.BatchNorm2d(self.out_channels)

            self.fre_init()

            init.constant_(self.vector[0, :], 0.25 * math.sqrt(init_hyper_gamma))  # origin
            init.constant_(self.vector[1, :], 0.25 * math.sqrt(init_hyper_gamma))  # avg
            init.constant_(self.vector[2, :], 0.0 * math.sqrt(init_hyper_gamma))  # prior
            init.constant_(self.vector[3, :], 0.5 * math.sqrt(init_hyper_gamma))  # 1x1_kxk
            init.constant_(self.vector[4, :], 1.0 * math.sqrt(init_hyper_gamma))  # 1x1
            init.constant_(self.vector[5, :], 0.5 * math.sqrt(init_hyper_gamma))  # dws_conv

            self.weight_orepa_1x1.data = self.weight_orepa_1x1.mul(init_hyper_para)
            self.weight_orepa_origin.data = self.weight_orepa_origin.mul(init_hyper_para)
            self.weight_orepa_1x1_kxk_conv2.data = self.weight_orepa_1x1_kxk_conv2.mul(init_hyper_para)
            self.weight_orepa_avg_conv.data = self.weight_orepa_avg_conv.mul(init_hyper_para)
            self.weight_orepa_pfir_conv.data = self.weight_orepa_pfir_conv.mul(init_hyper_para)

            self.weight_orepa_gconv_dw.data = self.weight_orepa_gconv_dw.mul(math.sqrt(init_hyper_para))
            self.weight_orepa_gconv_pw.data = self.weight_orepa_gconv_pw.mul(math.sqrt(init_hyper_para))

            if single_init:
                # Initialize the vector.weight of origin as 1 and others as 0. This is not the default setting.
                self.single_init()

    def fre_init(self):
        # Frequency prior (DCT-like) for the pfir branch; the loops assume kernel_size == 3
        prior_tensor = torch.Tensor(self.out_channels, self.kernel_size, self.kernel_size)
        half_fg = self.out_channels / 2
        for i in range(self.out_channels):
            for h in range(3):
                for w in range(3):
                    if i < half_fg:
                        prior_tensor[i, h, w] = math.cos(math.pi * (h + 0.5) * (i + 1) / 3)
                    else:
                        prior_tensor[i, h, w] = math.cos(math.pi * (w + 0.5) * (i + 1 - half_fg) / 3)

        self.register_buffer('weight_orepa_prior', prior_tensor)

    def weight_gen(self):
        # Squeeze all linear branches into a single kxk kernel
        weight_orepa_origin = torch.einsum('oihw,o->oihw', self.weight_orepa_origin, self.vector[0, :])

        weight_orepa_avg = torch.einsum('oihw,o->oihw',
                                        torch.einsum('oi,hw->oihw', self.weight_orepa_avg_conv.squeeze(3).squeeze(2),
                                                     self.weight_orepa_avg_avg),
                                        self.vector[1, :])

        weight_orepa_pfir = torch.einsum('oihw,o->oihw',
                                         torch.einsum('oi,ohw->oihw', self.weight_orepa_pfir_conv.squeeze(3).squeeze(2),
                                                      self.weight_orepa_prior),
                                         self.vector[2, :])

        weight_orepa_1x1_kxk_conv1 = None
        if hasattr(self, 'weight_orepa_1x1_kxk_idconv1'):
            weight_orepa_1x1_kxk_conv1 = (self.weight_orepa_1x1_kxk_idconv1 + self.id_tensor).squeeze(3).squeeze(2)
        elif hasattr(self, 'weight_orepa_1x1_kxk_conv1'):
            weight_orepa_1x1_kxk_conv1 = self.weight_orepa_1x1_kxk_conv1.squeeze(3).squeeze(2)
        else:
            raise NotImplementedError
        weight_orepa_1x1_kxk_conv2 = self.weight_orepa_1x1_kxk_conv2

        if self.groups > 1:
            g = self.groups
            t, ig = weight_orepa_1x1_kxk_conv1.size()
            o, tg, h, w = weight_orepa_1x1_kxk_conv2.size()
            weight_orepa_1x1_kxk_conv1 = weight_orepa_1x1_kxk_conv1.view(g, int(t / g), ig)
            weight_orepa_1x1_kxk_conv2 = weight_orepa_1x1_kxk_conv2.view(g, int(o / g), tg, h, w)
            weight_orepa_1x1_kxk = torch.einsum('gti,gothw->goihw', weight_orepa_1x1_kxk_conv1,
                                                weight_orepa_1x1_kxk_conv2).reshape(o, ig, h, w)
        else:
            weight_orepa_1x1_kxk = torch.einsum('ti,othw->oihw', weight_orepa_1x1_kxk_conv1, weight_orepa_1x1_kxk_conv2)
        weight_orepa_1x1_kxk = torch.einsum('oihw,o->oihw', weight_orepa_1x1_kxk, self.vector[3, :])

        weight_orepa_1x1 = 0
        if hasattr(self, 'weight_orepa_1x1'):
            weight_orepa_1x1 = transVI_multiscale(self.weight_orepa_1x1, self.kernel_size)
            weight_orepa_1x1 = torch.einsum('oihw,o->oihw', weight_orepa_1x1, self.vector[4, :])

        weight_orepa_gconv = self.dwsc2full(self.weight_orepa_gconv_dw, self.weight_orepa_gconv_pw,
                                            self.in_channels, self.groups)
        weight_orepa_gconv = torch.einsum('oihw,o->oihw', weight_orepa_gconv, self.vector[5, :])

        weight = (weight_orepa_origin + weight_orepa_avg + weight_orepa_1x1 + weight_orepa_1x1_kxk
                  + weight_orepa_pfir + weight_orepa_gconv)

        return weight

    def dwsc2full(self, weight_dw, weight_pw, groups, groups_conv=1):
        # Merge a depthwise kxk conv followed by a pointwise conv into one full kxk kernel
        t, ig, h, w = weight_dw.size()
        o, _, _, _ = weight_pw.size()
        tg = int(t / groups)
        i = int(ig * groups)
        ogc = int(o / groups_conv)
        groups_gc = int(groups / groups_conv)
        weight_dw = weight_dw.view(groups_conv, groups_gc, tg, ig, h, w)
        weight_pw = weight_pw.squeeze().view(ogc, groups_conv, groups_gc, tg)

        weight_dsc = torch.einsum('cgtihw,ocgt->cogihw', weight_dw, weight_pw)
        return weight_dsc.reshape(o, int(i / groups_conv), h, w)

    def forward(self, inputs=None):
        if hasattr(self, 'orepa_reparam'):
            return self.nonlinear(self.orepa_reparam(inputs))

        weight = self.weight_gen()

        if self.weight_only is True:
            return weight

        out = F.conv2d(inputs, weight, bias=None, stride=self.stride, padding=self.padding,
                       dilation=self.dilation, groups=self.groups)
        return self.nonlinear(self.bn(out))

    def get_equivalent_kernel_bias(self):
        return transI_fusebn(self.weight_gen(), self.bn)

    def switch_to_deploy(self):
        if hasattr(self, 'orepa_reparam'):  # already converted
            return
        kernel, bias = self.get_equivalent_kernel_bias()
        self.orepa_reparam = nn.Conv2d(in_channels=self.in_channels, out_channels=self.out_channels,
                                       kernel_size=self.kernel_size, stride=self.stride,
                                       padding=self.padding, dilation=self.dilation, groups=self.groups, bias=True)
        self.orepa_reparam.weight.data = kernel
        self.orepa_reparam.bias.data = bias
        for para in self.parameters():
            para.detach_()
        self.__delattr__('weight_orepa_origin')
        self.__delattr__('weight_orepa_1x1')
        self.__delattr__('weight_orepa_1x1_kxk_conv2')
        if hasattr(self, 'weight_orepa_1x1_kxk_idconv1'):
            self.__delattr__('id_tensor')
            self.__delattr__('weight_orepa_1x1_kxk_idconv1')
        elif hasattr(self, 'weight_orepa_1x1_kxk_conv1'):
            self.__delattr__('weight_orepa_1x1_kxk_conv1')
        else:
            raise NotImplementedError
        self.__delattr__('weight_orepa_avg_avg')
        self.__delattr__('weight_orepa_avg_conv')
        self.__delattr__('weight_orepa_pfir_conv')
        self.__delattr__('weight_orepa_prior')
        self.__delattr__('weight_orepa_gconv_dw')
        self.__delattr__('weight_orepa_gconv_pw')

        self.__delattr__('bn')
        self.__delattr__('vector')

    def init_gamma(self, gamma_value):
        init.constant_(self.vector, gamma_value)

    def single_init(self):
        self.init_gamma(0.0)
        init.constant_(self.vector[0, :], 1.0)


class OREPA_LargeConv(nn.Module):
    def __init__(self, in_channels, out_channels, kernel_size=1, stride=1, padding=None, groups=1, dilation=1, act=True,
                 deploy=False):
        super(OREPA_LargeConv, self).__init__()
        assert kernel_size % 2 == 1 and kernel_size > 3

        padding = autopad(kernel_size, padding, dilation)
        self.stride = stride
        self.padding = padding
        self.layers = int((kernel_size - 1) / 2)
        self.groups = groups
        self.dilation = dilation
        self.kernel_size = kernel_size
        self.in_channels = in_channels
        self.out_channels = out_channels

        internal_channels = out_channels

        self.nonlinear = Conv.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

        if deploy:
            self.or_large_reparam = nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=kernel_size,
                                              stride=stride, padding=padding, dilation=dilation, groups=groups, bias=True)
        else:
            # A large kernel is built by chaining (kernel_size - 1) / 2 stacked 3x3 OREPA kernels
            for i in range(self.layers):
                if i == 0:
                    self.__setattr__('weight' + str(i), OREPA(in_channels, internal_channels, kernel_size=3, stride=1,
                                                              padding=1, groups=groups, weight_only=True))
                elif i == self.layers - 1:
                    self.__setattr__('weight' + str(i), OREPA(internal_channels, out_channels, kernel_size=3,
                                                              stride=self.stride, padding=1, weight_only=True))
                else:
                    self.__setattr__('weight' + str(i), OREPA(internal_channels, internal_channels, kernel_size=3,
                                                              stride=1, padding=1, weight_only=True))

            self.bn = nn.BatchNorm2d(out_channels)
            # self.unfold = torch.nn.Unfold(kernel_size=3, dilation=1, padding=2, stride=1)

    def weight_gen(self):
        weight = getattr(self, 'weight' + str(0)).weight_gen().transpose(0, 1)
        for i in range(self.layers - 1):
            weight2 = getattr(self, 'weight' + str(i + 1)).weight_gen()
            weight = F.conv2d(weight, weight2, groups=self.groups, padding=2)

        return weight.transpose(0, 1)

    def forward(self, inputs):
        if hasattr(self, 'or_large_reparam'):
            return self.nonlinear(self.or_large_reparam(inputs))

        weight = self.weight_gen()
        out = F.conv2d(inputs, weight, stride=self.stride, padding=self.padding, dilation=self.dilation,
                       groups=self.groups)
        return self.nonlinear(self.bn(out))

    def get_equivalent_kernel_bias(self):
        return transI_fusebn(self.weight_gen(), self.bn)

    def switch_to_deploy(self):
        if hasattr(self, 'or_large_reparam'):
            return
        kernel, bias = self.get_equivalent_kernel_bias()
        self.or_large_reparam = nn.Conv2d(in_channels=self.in_channels, out_channels=self.out_channels,
                                          kernel_size=self.kernel_size, stride=self.stride,
                                          padding=self.padding, dilation=self.dilation, groups=self.groups, bias=True)
        self.or_large_reparam.weight.data = kernel
        self.or_large_reparam.bias.data = bias
        for para in self.parameters():
            para.detach_()
        for i in range(self.layers):
            self.__delattr__('weight' + str(i))
        self.__delattr__('bn')


class ConvBN(nn.Module):
    def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1,
                 deploy=False, nonlinear=None):
        super().__init__()
        if nonlinear is None:
            self.nonlinear = nn.Identity()
        else:
            self.nonlinear = nonlinear
        if deploy:
            self.conv = nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=kernel_size,
                                  stride=stride, padding=padding, dilation=dilation, groups=groups, bias=True)
        else:
            self.conv = nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=kernel_size,
                                  stride=stride, padding=padding, dilation=dilation, groups=groups, bias=False)
            self.bn = nn.BatchNorm2d(num_features=out_channels)

    def forward(self, x):
        if hasattr(self, 'bn'):
            return self.nonlinear(self.bn(self.conv(x)))
        else:
            return self.nonlinear(self.conv(x))

    def switch_to_deploy(self):
        kernel, bias = transI_fusebn(self.conv.weight, self.bn)
        conv = nn.Conv2d(in_channels=self.conv.in_channels, out_channels=self.conv.out_channels,
                         kernel_size=self.conv.kernel_size, stride=self.conv.stride, padding=self.conv.padding,
                         dilation=self.conv.dilation, groups=self.conv.groups, bias=True)
        conv.weight.data = kernel
        conv.bias.data = bias
        for para in self.parameters():
            para.detach_()
        self.__delattr__('conv')
        self.__delattr__('bn')
        self.conv = conv


class OREPA_3x3_RepVGG(nn.Module):
    def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=None, groups=1, dilation=1, act=True,
                 internal_channels_1x1_3x3=None, deploy=False):
        super(OREPA_3x3_RepVGG, self).__init__()
        self.deploy = deploy

        self.nonlinear = Conv.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

        self.kernel_size = kernel_size
        self.in_channels = in_channels
        self.out_channels = out_channels
        self.groups = groups
        padding = autopad(kernel_size, padding, dilation)
        assert padding == kernel_size // 2

        self.stride = stride
        self.padding = padding
        self.dilation = dilation

        self.branch_counter = 0

        self.weight_rbr_origin = nn.Parameter(
            torch.Tensor(out_channels, int(in_channels / self.groups), kernel_size, kernel_size))
        init.kaiming_uniform_(self.weight_rbr_origin, a=math.sqrt(1.0))
        self.branch_counter += 1

        if groups < out_channels:
            self.weight_rbr_avg_conv = nn.Parameter(torch.Tensor(out_channels, int(in_channels / self.groups), 1, 1))
            self.weight_rbr_pfir_conv = nn.Parameter(torch.Tensor(out_channels, int(in_channels / self.groups), 1, 1))
            init.kaiming_uniform_(self.weight_rbr_avg_conv, a=1.0)
            init.kaiming_uniform_(self.weight_rbr_pfir_conv, a=1.0)
            self.register_buffer('weight_rbr_avg_avg',
                                 torch.ones(kernel_size, kernel_size).mul(1.0 / kernel_size / kernel_size))
            self.branch_counter += 1
        else:
            raise NotImplementedError
        self.branch_counter += 1

        if internal_channels_1x1_3x3 is None:
            internal_channels_1x1_3x3 = in_channels if groups < out_channels else 2 * in_channels  # For mobilenet, it is better to have 2X internal channels

        if internal_channels_1x1_3x3 == in_channels:
            self.weight_rbr_1x1_kxk_idconv1 = nn.Parameter(torch.zeros(in_channels, int(in_channels / self.groups), 1, 1))
            id_value = np.zeros((in_channels, int(in_channels / self.groups), 1, 1))
            for i in range(in_channels):
                id_value[i, i % int(in_channels / self.groups), 0, 0] = 1
            id_tensor = torch.from_numpy(id_value).type_as(self.weight_rbr_1x1_kxk_idconv1)
            self.register_buffer('id_tensor', id_tensor)
        else:
            self.weight_rbr_1x1_kxk_conv1 = nn.Parameter(
                torch.Tensor(internal_channels_1x1_3x3, int(in_channels / self.groups), 1, 1))
            init.kaiming_uniform_(self.weight_rbr_1x1_kxk_conv1, a=math.sqrt(1.0))
        self.weight_rbr_1x1_kxk_conv2 = nn.Parameter(
            torch.Tensor(out_channels, int(internal_channels_1x1_3x3 / self.groups), kernel_size, kernel_size))
        init.kaiming_uniform_(self.weight_rbr_1x1_kxk_conv2, a=math.sqrt(1.0))
        self.branch_counter += 1

        expand_ratio = 8
        self.weight_rbr_gconv_dw = nn.Parameter(torch.Tensor(in_channels * expand_ratio, 1, kernel_size, kernel_size))
        self.weight_rbr_gconv_pw = nn.Parameter(torch.Tensor(out_channels, in_channels * expand_ratio, 1, 1))
        init.kaiming_uniform_(self.weight_rbr_gconv_dw, a=math.sqrt(1.0))
        init.kaiming_uniform_(self.weight_rbr_gconv_pw, a=math.sqrt(1.0))
        self.branch_counter += 1

        if out_channels == in_channels and stride == 1:
            self.branch_counter += 1

        self.vector = nn.Parameter(torch.Tensor(self.branch_counter, self.out_channels))
        self.bn = nn.BatchNorm2d(out_channels)

        self.fre_init()

        init.constant_(self.vector[0, :], 0.25)  # origin
        init.constant_(self.vector[1, :], 0.25)  # avg
        init.constant_(self.vector[2, :], 0.0)  # prior
        init.constant_(self.vector[3, :], 0.5)  # 1x1_kxk
        init.constant_(self.vector[4, :], 0.5)  # dws_conv

    def fre_init(self):
        # Frequency prior for the pfir branch; the loops assume kernel_size == 3
        prior_tensor = torch.Tensor(self.out_channels, self.kernel_size, self.kernel_size)
        half_fg = self.out_channels / 2
        for i in range(self.out_channels):
            for h in range(3):
                for w in range(3):
                    if i < half_fg:
                        prior_tensor[i, h, w] = math.cos(math.pi * (h + 0.5) * (i + 1) / 3)
                    else:
                        prior_tensor[i, h, w] = math.cos(math.pi * (w + 0.5) * (i + 1 - half_fg) / 3)

        self.register_buffer('weight_rbr_prior', prior_tensor)

    def weight_gen(self):
        weight_rbr_origin = torch.einsum('oihw,o->oihw', self.weight_rbr_origin, self.vector[0, :])

        weight_rbr_avg = torch.einsum('oihw,o->oihw', torch.einsum('oihw,hw->oihw', self.weight_rbr_avg_conv,
                                                                   self.weight_rbr_avg_avg), self.vector[1, :])

        weight_rbr_pfir = torch.einsum('oihw,o->oihw', torch.einsum('oihw,ohw->oihw', self.weight_rbr_pfir_conv,
                                                                    self.weight_rbr_prior), self.vector[2, :])

        weight_rbr_1x1_kxk_conv1 = None
        if hasattr(self, 'weight_rbr_1x1_kxk_idconv1'):
            weight_rbr_1x1_kxk_conv1 = (self.weight_rbr_1x1_kxk_idconv1 + self.id_tensor).squeeze()
        elif hasattr(self, 'weight_rbr_1x1_kxk_conv1'):
            weight_rbr_1x1_kxk_conv1 = self.weight_rbr_1x1_kxk_conv1.squeeze()
        else:
            raise NotImplementedError
        weight_rbr_1x1_kxk_conv2 = self.weight_rbr_1x1_kxk_conv2

        if self.groups > 1:
            g = self.groups
            t, ig = weight_rbr_1x1_kxk_conv1.size()
            o, tg, h, w = weight_rbr_1x1_kxk_conv2.size()
            weight_rbr_1x1_kxk_conv1 = weight_rbr_1x1_kxk_conv1.view(g, int(t / g), ig)
            weight_rbr_1x1_kxk_conv2 = weight_rbr_1x1_kxk_conv2.view(g, int(o / g), tg, h, w)
            weight_rbr_1x1_kxk = torch.einsum('gti,gothw->goihw', weight_rbr_1x1_kxk_conv1,
                                              weight_rbr_1x1_kxk_conv2).view(o, ig, h, w)
        else:
            weight_rbr_1x1_kxk = torch.einsum('ti,othw->oihw', weight_rbr_1x1_kxk_conv1, weight_rbr_1x1_kxk_conv2)

        weight_rbr_1x1_kxk = torch.einsum('oihw,o->oihw', weight_rbr_1x1_kxk, self.vector[3, :])

        weight_rbr_gconv = self.dwsc2full(self.weight_rbr_gconv_dw, self.weight_rbr_gconv_pw, self.in_channels)
        weight_rbr_gconv = torch.einsum('oihw,o->oihw', weight_rbr_gconv, self.vector[4, :])

        weight = weight_rbr_origin + weight_rbr_avg + weight_rbr_1x1_kxk + weight_rbr_pfir + weight_rbr_gconv

        return weight

    def dwsc2full(self, weight_dw, weight_pw, groups):
        t, ig, h, w = weight_dw.size()
        o, _, _, _ = weight_pw.size()
        tg = int(t / groups)
        i = int(ig * groups)
        weight_dw = weight_dw.view(groups, tg, ig, h, w)
        weight_pw = weight_pw.squeeze().view(o, groups, tg)

        weight_dsc = torch.einsum('gtihw,ogt->ogihw', weight_dw, weight_pw)
        return weight_dsc.view(o, i, h, w)

    def forward(self, inputs):
        weight = self.weight_gen()
        out = F.conv2d(inputs, weight, bias=None, stride=self.stride, padding=self.padding,
                       dilation=self.dilation, groups=self.groups)

        return self.nonlinear(self.bn(out))


class RepVGGBlock_OREPA(nn.Module):

    def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=None, groups=1, dilation=1, act=True,
                 deploy=False, use_se=False):
        super(RepVGGBlock_OREPA, self).__init__()
        self.deploy = deploy
        self.groups = groups
        self.in_channels = in_channels
        self.out_channels = out_channels

        padding = autopad(kernel_size, padding, dilation)
        self.padding = padding
        self.dilation = dilation

        assert kernel_size == 3
        assert padding == 1

        self.nonlinearity = Conv.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

        if use_se:
            self.se = SEAttention(out_channels, reduction=out_channels // 16)
        else:
            self.se = nn.Identity()

        if deploy:
            self.rbr_reparam = nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=kernel_size,
                                         stride=stride, padding=padding, dilation=dilation, groups=groups, bias=True)
        else:
            self.rbr_identity = nn.BatchNorm2d(num_features=in_channels) if out_channels == in_channels and stride == 1 else None
            # act=False keeps this branch linear so that switch_to_deploy() can fold it exactly
            self.rbr_dense = OREPA_3x3_RepVGG(in_channels=in_channels, out_channels=out_channels, kernel_size=kernel_size,
                                              stride=stride, padding=padding, groups=groups, dilation=1, act=False)
            self.rbr_1x1 = ConvBN(in_channels=in_channels, out_channels=out_channels, kernel_size=1, stride=stride,
                                  groups=groups, dilation=1)

    def forward(self, inputs):
        if hasattr(self, 'rbr_reparam'):
            return self.nonlinearity(self.se(self.rbr_reparam(inputs)))

        if self.rbr_identity is None:
            id_out = 0
        else:
            id_out = self.rbr_identity(inputs)

        out1 = self.rbr_dense(inputs)
        out2 = self.rbr_1x1(inputs)
        out3 = id_out
        out = out1 + out2 + out3

        return self.nonlinearity(self.se(out))

    # Optional. This improves the accuracy and facilitates quantization.
    # 1. Cancel the original weight decay on rbr_dense.conv.weight and rbr_1x1.conv.weight.
    # 2. Use like this.
    #     loss = criterion(....)
    #     for every RepVGGBlock blk:
    #         loss += weight_decay_coefficient * 0.5 * blk.get_custom_L2()
    #     optimizer.zero_grad()
    #     loss.backward()

    # Not used for OREPA
    def get_custom_L2(self):
        K3 = self.rbr_dense.weight_gen()
        K1 = self.rbr_1x1.conv.weight
        t3 = (self.rbr_dense.bn.weight / ((self.rbr_dense.bn.running_var + self.rbr_dense.bn.eps).sqrt())).reshape(-1, 1, 1, 1).detach()
        t1 = (self.rbr_1x1.bn.weight / ((self.rbr_1x1.bn.running_var + self.rbr_1x1.bn.eps).sqrt())).reshape(-1, 1, 1, 1).detach()

        l2_loss_circle = (K3 ** 2).sum() - (K3[:, :, 1:2, 1:2] ** 2).sum()  # The L2 loss of the "circle" of weights in 3x3 kernel. Use regular L2 on them.
        eq_kernel = K3[:, :, 1:2, 1:2] * t3 + K1 * t1  # The equivalent resultant central point of 3x3 kernel.
        l2_loss_eq_kernel = (eq_kernel ** 2 / (t3 ** 2 + t1 ** 2)).sum()  # Normalize for an L2 coefficient comparable to regular L2.
        return l2_loss_eq_kernel + l2_loss_circle

    def get_equivalent_kernel_bias(self):
        kernel3x3, bias3x3 = self._fuse_bn_tensor(self.rbr_dense)
        kernel1x1, bias1x1 = self._fuse_bn_tensor(self.rbr_1x1)
        kernelid, biasid = self._fuse_bn_tensor(self.rbr_identity)
        return kernel3x3 + self._pad_1x1_to_3x3_tensor(kernel1x1) + kernelid, bias3x3 + bias1x1 + biasid

    def _pad_1x1_to_3x3_tensor(self, kernel1x1):
        if kernel1x1 is None:
            return 0
        else:
            return torch.nn.functional.pad(kernel1x1, [1, 1, 1, 1])

    def _fuse_bn_tensor(self, branch):
        if branch is None:
            return 0, 0
        if not isinstance(branch, nn.BatchNorm2d):
            if isinstance(branch, OREPA_3x3_RepVGG):
                kernel = branch.weight_gen()
            elif isinstance(branch, ConvBN):
                kernel = branch.conv.weight
            else:
                raise NotImplementedError
            running_mean = branch.bn.running_mean
            running_var = branch.bn.running_var
            gamma = branch.bn.weight
            beta = branch.bn.bias
            eps = branch.bn.eps
        else:
            if not hasattr(self, 'id_tensor'):
                input_dim = self.in_channels // self.groups
                kernel_value = np.zeros((self.in_channels, input_dim, 3, 3), dtype=np.float32)
                for i in range(self.in_channels):
                    kernel_value[i, i % input_dim, 1, 1] = 1
                self.id_tensor = torch.from_numpy(kernel_value).to(branch.weight.device)
            kernel = self.id_tensor
            running_mean = branch.running_mean
            running_var = branch.running_var
            gamma = branch.weight
            beta = branch.bias
            eps = branch.eps
        std = (running_var + eps).sqrt()
        t = (gamma / std).reshape(-1, 1, 1, 1)
        return kernel * t, beta - running_mean * gamma / std

    def switch_to_deploy(self):
        if hasattr(self, 'rbr_reparam'):
            return
        kernel, bias = self.get_equivalent_kernel_bias()
        self.rbr_reparam = nn.Conv2d(in_channels=self.rbr_dense.in_channels, out_channels=self.rbr_dense.out_channels,
                                     kernel_size=self.rbr_dense.kernel_size, stride=self.rbr_dense.stride,
                                     padding=self.rbr_dense.padding, dilation=self.rbr_dense.dilation,
                                     groups=self.rbr_dense.groups, bias=True)
        self.rbr_reparam.weight.data = kernel
        self.rbr_reparam.bias.data = bias
        for para in self.parameters():
            para.detach_()
        self.__delattr__('rbr_dense')
        self.__delattr__('rbr_1x1')
        if hasattr(self, 'rbr_identity'):
            self.__delattr__('rbr_identity')


class Bottleneck_REPVGGOREPA(Bottleneck):
    """Bottleneck whose convolutions are RepVGGBlock_OREPA blocks."""

    def __init__(self, c1, c2, shortcut=True, g=1, k=(3, 3), e=0.5):  # ch_in, ch_out, shortcut, groups, kernels, expand
        super().__init__(c1, c2, shortcut, g, k, e)
        c_ = int(c2 * e)  # hidden channels
        if k[0] == 1:
            self.cv1 = Conv(c1, c_, 1)
        else:
            self.cv1 = RepVGGBlock_OREPA(c1, c_, 3)

        self.cv2 = RepVGGBlock_OREPA(c_, c2, 3, groups=g)


class C3_REPVGGOREPA(C3):
    def __init__(self, c1, c2, n=1, shortcut=False, g=1, e=0.5):
        super().__init__(c1, c2, n, shortcut, g, e)
        c_ = int(c2 * e)  # hidden channels
        self.m = nn.Sequential(*(Bottleneck_REPVGGOREPA(c_, c_, shortcut, g, k=(1, 3), e=1.0) for _ in range(n)))


class C2f_REPVGGOREPA(C2f):
    def __init__(self, c1, c2, n=1, shortcut=False, g=1, e=0.5):
        super().__init__(c1, c2, n, shortcut, g, e)
        self.m = nn.ModuleList(Bottleneck_REPVGGOREPA(self.c, self.c, shortcut, g, k=(3, 3), e=1.0) for _ in range(n))

2.2 Code walkthrough of the C2f_REPVGGOREPA module

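This code defines a custom neural-network module class named C2f_REPVGGOREPA, which inherits from the C2f class. A detailed walkthrough follows:

1. Class definition and inheritance

class C2f_REPVGGOREPA(C2f):

- C2f_REPVGGOREPA inherits from C2f, so it has all of C2f's attributes and methods and can override or extend them.

- C2f is the parent class: the CSP bottleneck block with two convolutions that YOLOv8 uses throughout its backbone and neck. C2f_REPVGGOREPA does not override the forward pass, so C2f's forward is used unchanged.

2. Initialization method

def __init__(self, c1, c2, n=1, shortcut=False, g=1, e=0.5):
    super().__init__(c1, c2, n, shortcut, g, e)

- __init__ is the constructor, called automatically when a C2f_REPVGGOREPA instance is created.

- c1 and c2 are the input and output channel counts, n is the number of bottleneck blocks, shortcut controls whether the bottlenecks use residual connections, g is the number of groups for grouped convolution, and e is the expansion ratio that sets the hidden channel count self.c = int(c2 * e).

- super().__init__(c1, c2, n, shortcut, g, e) calls the constructor of the parent class C2f, so C2f_REPVGGOREPA reuses its initialization logic (cv1, cv2 and a default list of standard Bottleneck blocks, which the next step replaces).

3. Module list

self.m = nn.ModuleList(Bottleneck_REPVGGOREPA(self.c, self.c, shortcut, g, k=(3, 3), e=1.0) for _ in range(n))

- self.m is an nn.ModuleList that stores a sequence of sub-modules.

- Bottleneck_REPVGGOREPA is a custom bottleneck whose convolutions are RepVGGBlock_OREPA blocks, combining RepVGG and OREPA. Each block has self.c input and output channels. shortcut and g are passed through unchanged. k=(3, 3) makes the first convolution a 3×3 RepVGGBlock_OREPA as well; with k[0] == 1 it would be a plain 1×1 Conv. e=1.0 keeps the bottleneck's hidden channel count equal to self.c.

- for _ in range(n) creates n Bottleneck_REPVGGOREPA blocks and adds them to self.m, where n is the constructor argument.

4. Summary

The C2f_REPVGGOREPA class is a custom module derived from C2f. It replaces C2f's standard bottlenecks with Bottleneck_REPVGGOREPA blocks, held in an nn.ModuleList. Stacking n of these blocks builds a deeper structure while reusing, and extending, the functionality of C2f.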
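The pure-Python sketch below makes the channel bookkeeping concrete. It assumes the ultralytics C2f layout. cv1 is a 1×1 Conv from c1 to 2 * self.c, and the inherited forward splits its output into two halves with chunk(2, 1). The forward then feeds the last tensor through each block in self.m in turn and concatenates all the pieces. Finally, cv2 maps the (2 + n) * self.c channels back to c2. The function name `c2f_channels` is hypothetical.

```python
def c2f_channels(c1, c2, n=1, e=0.5):
    """Channel bookkeeping for C2f / C2f_REPVGGOREPA (assumed ultralytics C2f layout)."""
    c = int(c2 * e)  # self.c: hidden channels per half
    return {
        "cv1": (c1, 2 * c),          # 1x1 Conv; output is split into two halves of c channels
        "m": [(c, c)] * n,           # n Bottleneck_REPVGGOREPA blocks, each c -> c (e=1.0 inside)
        "cv2": ((2 + n) * c, c2),    # concat of both halves + n block outputs, 1x1 Conv back to c2
    }


# Layer 2 of yolov8_C2f_REPVGGOREPA.yaml at scale n: yaml args [128, True] with 3 repeats
# become c1 = 32 (output of layer 1), c2 = 32 and n = 1 after depth/width scaling
print(c2f_channels(32, 32, n=1))  # {'cv1': (32, 32), 'm': [(16, 16)], 'cv2': (48, 32)}
```

Because every RepVGGBlock_OREPA inside the bottlenecks has equal input and output channels and stride 1, each one gets the BN identity branch in addition to the dense and 1×1 branches.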

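2.3 Modify the __init__.py file

Key step 2: edit the __init__.py file in the modules folder. First import the module,

then declare it in the __all__ below.

2.4 Add the yaml file

Key step 3: create a new file yolov8_C2f_REPVGGOREPA.yaml under /ultralytics/ultralytics/cfg/models/v8 and paste in the following content.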

- OD [object detection]

# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters
nc: 80 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs
  s: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs
  m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs
  l: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs
  x: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs

# YOLOv8.0n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
  - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
  - [-1, 3, C2f_REPVGGOREPA, [128, True]]
  - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
  - [-1, 6, C2f_REPVGGOREPA, [256, True]]
  - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16
  - [-1, 6, C2f_REPVGGOREPA, [512, True]]
  - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32
  - [-1, 3, C2f_REPVGGOREPA, [1024, True]]
  - [-1, 1, SPPF, [1024, 5]] # 9

# YOLOv8.0n head
head:
  - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
  - [[-1, 6], 1, Concat, [1]] # cat backbone P4
  - [-1, 3, C2f_REPVGGOREPA, [512]] # 12

  - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
  - [[-1, 4], 1, Concat, [1]] # cat backbone P3
  - [-1, 3, C2f_REPVGGOREPA, [256]] # 15 (P3/8-small)

  - [-1, 1, Conv, [256, 3, 2]]
  - [[-1, 12], 1, Concat, [1]] # cat head P4
  - [-1, 3, C2f_REPVGGOREPA, [512]] # 18 (P4/16-medium)

  - [-1, 1, Conv, [512, 3, 2]]
  - [[-1, 9], 1, Concat, [1]] # cat head P5
  - [-1, 3, C2f_REPVGGOREPA, [1024]] # 21 (P5/32-large)

  - [[15, 18, 21], 1, Detect, [nc]] # Detect(P3, P4, P5)

- Seg [instance segmentation]

# Ultralytics YOLO 🚀, AGPL-3.0 license

# YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect# Parameters

nc: 80 # number of classes

scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'# [depth, width, max_channels]n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPss: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPsm: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPsl: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPsx: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs# YOLOv8.0n backbone

backbone:
  # [from, repeats, module, args]
  - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
  - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
  - [-1, 3, C2f_REPVGGOREPA, [128, True]]
  - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
  - [-1, 6, C2f_REPVGGOREPA, [256, True]]
  - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16
  - [-1, 6, C2f_REPVGGOREPA, [512, True]]
  - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32
  - [-1, 3, C2f_REPVGGOREPA, [1024, True]]
  - [-1, 1, SPPF, [1024, 5]] # 9

# YOLOv8.0n head

head:
  - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
  - [[-1, 6], 1, Concat, [1]] # cat backbone P4
  - [-1, 3, C2f_REPVGGOREPA, [512]] # 12
  - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
  - [[-1, 4], 1, Concat, [1]] # cat backbone P3
  - [-1, 3, C2f_REPVGGOREPA, [256]] # 15 (P3/8-small)
  - [-1, 1, Conv, [256, 3, 2]]
  - [[-1, 12], 1, Concat, [1]] # cat head P4
  - [-1, 3, C2f_REPVGGOREPA, [512]] # 18 (P4/16-medium)
  - [-1, 1, Conv, [512, 3, 2]]
  - [[-1, 9], 1, Concat, [1]] # cat head P5
  - [-1, 3, C2f_REPVGGOREPA, [1024]] # 21 (P5/32-large)
  - [[15, 18, 21], 1, Segment, [nc, 32, 256]] # Segment(P3, P4, P5)

Tip: this article only adds a module on top of YOLOv8, so to build the yolov8n/s/m/l/x variants you only need to set the corresponding depth_multiple and width_multiple. If this is unclear, see the article "YOLOv8 yaml file explained".

# YOLOv8n
depth_multiple: 0.33 # model depth multiple
width_multiple: 0.25 # layer channel multiple
max_channels: 1024 # max_channels

# YOLOv8s
depth_multiple: 0.33 # model depth multiple
width_multiple: 0.50 # layer channel multiple
max_channels: 1024 # max_channels

# YOLOv8m
depth_multiple: 0.67 # model depth multiple
width_multiple: 0.75 # layer channel multiple
max_channels: 768 # max_channels

# YOLOv8l
depth_multiple: 1.0 # model depth multiple
width_multiple: 1.0 # layer channel multiple
max_channels: 512 # max_channels

# YOLOv8x
depth_multiple: 1.00 # model depth multiple
width_multiple: 1.25 # layer channel multiple
max_channels: 512 # max_channels

2.5 Register the module

Key step 4: register the module in the parse_model function of tasks.py (ultralytics/nn/tasks.py)

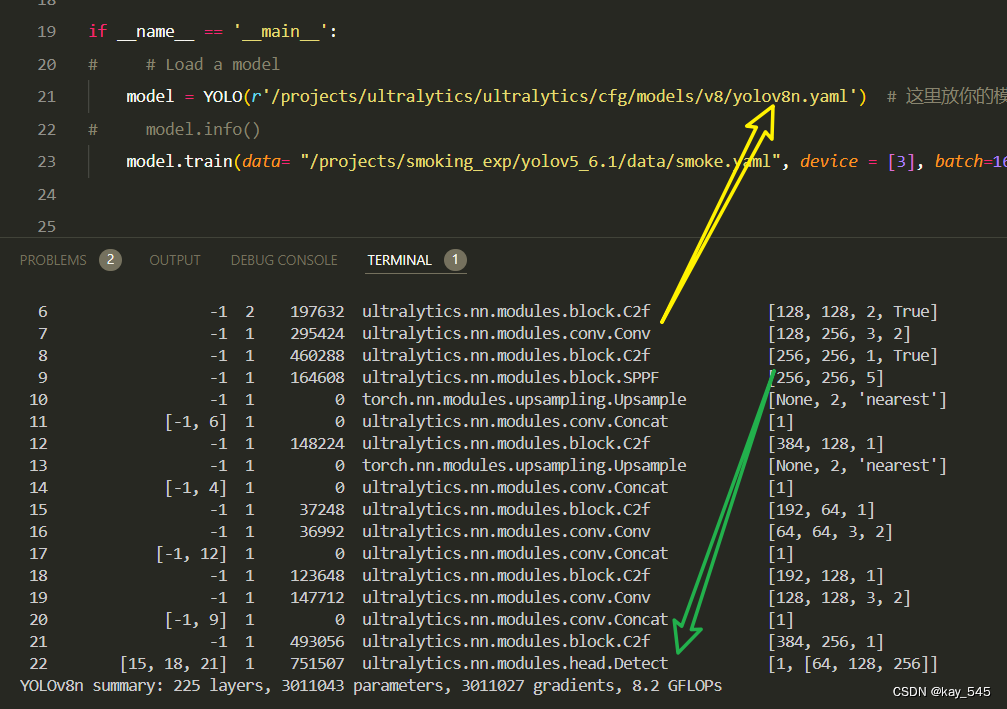
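Why registration matters: parse_model only knows how to compute a module's input/output channels and whether to pass it the repeat count if the module name is in its lists. The sketch below is a simplified, hypothetical re-implementation of that bookkeeping, based on our reading of parse_model and not the Ultralytics source. It assumes the screenshot adds C2f_REPVGGOREPA to the same module sets that C2f belongs to, and it hard-codes the n-scale values (depth 0.33, width 0.25, max_channels 1024). The names resolve, LAYERS, CHANNEL_MODULES and REPEAT_MODULES are illustrative only.

```python
import math


def make_divisible(x, divisor=8):
    """Round x up to the nearest multiple of divisor."""
    return math.ceil(x / divisor) * divisor


# The detection yaml of this article as Python data: [from, repeats, module, args].
LAYERS = [
    [-1, 1, "Conv", [64, 3, 2]],                   # 0-P1/2
    [-1, 1, "Conv", [128, 3, 2]],                  # 1-P2/4
    [-1, 3, "C2f_REPVGGOREPA", [128, True]],       # 2
    [-1, 1, "Conv", [256, 3, 2]],                  # 3-P3/8
    [-1, 6, "C2f_REPVGGOREPA", [256, True]],       # 4
    [-1, 1, "Conv", [512, 3, 2]],                  # 5-P4/16
    [-1, 6, "C2f_REPVGGOREPA", [512, True]],       # 6
    [-1, 1, "Conv", [1024, 3, 2]],                 # 7-P5/32
    [-1, 3, "C2f_REPVGGOREPA", [1024, True]],      # 8
    [-1, 1, "SPPF", [1024, 5]],                    # 9
    [-1, 1, "nn.Upsample", [None, 2, "nearest"]],  # 10
    [[-1, 6], 1, "Concat", [1]],                   # 11
    [-1, 3, "C2f_REPVGGOREPA", [512]],             # 12
    [-1, 1, "nn.Upsample", [None, 2, "nearest"]],  # 13
    [[-1, 4], 1, "Concat", [1]],                   # 14
    [-1, 3, "C2f_REPVGGOREPA", [256]],             # 15
    [-1, 1, "Conv", [256, 3, 2]],                  # 16
    [[-1, 12], 1, "Concat", [1]],                  # 17
    [-1, 3, "C2f_REPVGGOREPA", [512]],             # 18
    [-1, 1, "Conv", [512, 3, 2]],                  # 19
    [[-1, 9], 1, "Concat", [1]],                   # 20
    [-1, 3, "C2f_REPVGGOREPA", [1024]],            # 21
    [[15, 18, 21], 1, "Detect", ["nc"]],           # 22
]

CHANNEL_MODULES = {"Conv", "SPPF", "C2f_REPVGGOREPA"}  # first yaml arg is the output width c2
REPEAT_MODULES = {"C2f_REPVGGOREPA"}  # modules that receive the repeat count as an argument


def resolve(layers, nc=80, depth=0.33, width=0.25, max_channels=1024, in_ch=3):
    """Return the resolved constructor args of each layer (simplified parse_model sketch)."""
    ch, resolved = [in_ch], []
    for i, (f, n, m, args) in enumerate(layers):
        n = max(round(n * depth), 1) if n > 1 else n  # scale the repeat count
        if m in CHANNEL_MODULES:
            c1 = ch[f]
            c2 = make_divisible(min(args[0], max_channels) * width, 8)
            args = [c1, c2, *args[1:]]
            if m in REPEAT_MODULES:
                args.insert(2, n)  # repeat count goes right after (c1, c2)
        elif m == "Concat":
            c2 = sum(ch[x] for x in f)  # widths of all concatenated inputs add up
        elif m == "Detect":
            args, c2 = [nc, [ch[x] for x in f]], None
        else:  # nn.Upsample leaves the channel count unchanged
            c2 = ch[f]
        resolved.append(args)
        if i == 0:
            ch = []  # from here on, ch[k] is the output width of layer k
        ch.append(c2)
    return resolved
```

With these assumptions, resolve(LAYERS) yields [32, 32, 1, True] for layer 2, [384, 128, 1] for layer 12 and [80, [64, 128, 256]] for the Detect layer, the same arguments that appear in the model table printed in section 2.6.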

2.6 Run the program

In train.py, set the model argument to the path of yolov8_C2f_REPVGGOREPA.yaml.

Using an absolute path is recommended so that the file is always found.
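A related detail about which scale gets built. To our understanding of recent Ultralytics versions, when a yaml contains a `scales:` block the scale letter is inferred from the file name with a regex similar to the one below, and if no letter follows `yolov8` the first entry (n) is used. That would be consistent with the n-sized widths in the table below for yolov8_C2f_REPVGGOREPA.yaml. The helpers guess_scale, pick_scale and stage_channels are hypothetical, written only to illustrate the idea and the max_channels cap.

```python
import math
import re
from pathlib import Path

# The scales block of the yaml above: scale -> [depth, width, max_channels]
SCALES = {
    "n": [0.33, 0.25, 1024],
    "s": [0.33, 0.50, 1024],
    "m": [0.67, 0.75, 768],
    "l": [1.00, 1.00, 512],
    "x": [1.00, 1.25, 512],
}


def guess_scale(cfg_path):
    """Return the letter right after 'yolov<digits>' in the file stem, or '' if there is none."""
    match = re.search(r"yolov\d+([nslmx])", Path(cfg_path).stem)
    return match.group(1) if match else ""


def pick_scale(cfg_path, scales=SCALES):
    """Return (scale, [depth, width, max_channels]), falling back to the first scale."""
    scale = guess_scale(cfg_path) or next(iter(scales))
    return scale, scales[scale]


def stage_channels(scale, base=(128, 256, 512, 1024), scales=SCALES):
    """Output widths of the four backbone C2f_REPVGGOREPA stages: clamp, scale, round up to 8."""
    _, width, max_channels = scales[scale]
    return [math.ceil(min(c, max_channels) * width / 8) * 8 for c in base]
```

For example, pick_scale("yolov8_C2f_REPVGGOREPA.yaml") returns ("n", [0.33, 0.25, 1024]), while a hypothetical file named yolov8s_C2f_REPVGGOREPA.yaml would map to "s". stage_channels("l") gives [128, 256, 512, 512], showing how max_channels caps the deepest stage.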

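from pathlib import Path
import warnings

from ultralytics import YOLO

warnings.filterwarnings('ignore')

if __name__ == '__main__':
    # Load the model: replace this path with the path to yolov8_C2f_REPVGGOREPA.yaml
    model = YOLO("ultralytics/cfg/v8/yolov8.yaml")
    # Train the model; the run is named after the yaml file
    results = model.train(data=r"path/to/your/dataset.yaml", epochs=100, batch=16, imgsz=640, workers=4, name=Path(model.cfg).stem)

🚀 Run the program. If output like the following appears, the module has been added successfully 🚀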

                   from  n    params  module                                        arguments
  0                  -1  1       464  ultralytics.nn.modules.conv.Conv              [3, 16, 3, 2]
  1                  -1  1      4672  ultralytics.nn.modules.conv.Conv              [16, 32, 3, 2]
  2                  -1  1     20736  ultralytics.nn.modules.block.C2f_REPVGGOREPA  [32, 32, 1, True]
  3                  -1  1     18560  ultralytics.nn.modules.conv.Conv              [32, 64, 3, 2]
  4                  -1  2    146176  ultralytics.nn.modules.block.C2f_REPVGGOREPA  [64, 64, 2, True]
  5                  -1  1     73984  ultralytics.nn.modules.conv.Conv              [64, 128, 3, 2]
  6                  -1  2    562688  ultralytics.nn.modules.block.C2f_REPVGGOREPA  [128, 128, 2, True]
  7                  -1  1    295424  ultralytics.nn.modules.conv.Conv              [128, 256, 3, 2]
  8                  -1  1   1169408  ultralytics.nn.modules.block.C2f_REPVGGOREPA  [256, 256, 1, True]
  9                  -1  1    164608  ultralytics.nn.modules.block.SPPF             [256, 256, 5]
 10                  -1  1         0  torch.nn.modules.upsampling.Upsample          [None, 2, 'nearest']
 11             [-1, 6]  1         0  ultralytics.nn.modules.conv.Concat            [1]
 12                  -1  1    330752  ultralytics.nn.modules.block.C2f_REPVGGOREPA  [384, 128, 1]
 13                  -1  1         0  torch.nn.modules.upsampling.Upsample          [None, 2, 'nearest']
 14             [-1, 4]  1         0  ultralytics.nn.modules.conv.Concat            [1]
 15                  -1  1     85504  ultralytics.nn.modules.block.C2f_REPVGGOREPA  [192, 64, 1]
 16                  -1  1     36992  ultralytics.nn.modules.conv.Conv              [64, 64, 3, 2]
 17            [-1, 12]  1         0  ultralytics.nn.modules.conv.Concat            [1]
 18                  -1  1    306176  ultralytics.nn.modules.block.C2f_REPVGGOREPA  [192, 128, 1]
 19                  -1  1    147712  ultralytics.nn.modules.conv.Conv              [128, 128, 3, 2]
 20             [-1, 9]  1         0  ultralytics.nn.modules.conv.Concat            [1]
 21                  -1  1   1202176  ultralytics.nn.modules.block.C2f_REPVGGOREPA  [384, 256, 1]
 22        [15, 18, 21]  1    897664  ultralytics.nn.modules.head.Detect            [80, [64, 128, 256]]

YOLOv8_C2f_REPVGGOREPA summary: 345 layers, 5463696 parameters, 5463680 gradients, 6.8 GFLOPs

3. Full code

https://pan.baidu.com/s/1PPV4wsq2R89EesnA780fGg?pwd=p27f

Extraction code: p27f

4. GFLOPs

For how GFLOPs are calculated, see the author's article "Baimian Algorithm Engineer | Convolution Basics: Convolution".

GFLOPs of the unmodified YOLOv8n

GFLOPs after the modification
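As a rough illustration of where such numbers come from, the sketch below computes the parameters and FLOPs of a single Conv2d(bias=False) + BatchNorm layer, the pattern used by the Conv rows of the model table in section 2.6. It counts FLOPs as 2 × multiply-accumulates, which we believe is the convention behind the Ultralytics summary, and it ignores the small BN and activation costs. The helper names conv_out_size and conv_cost are hypothetical.

```python
def conv_out_size(size, k, s, p=None):
    """Output side length of a square conv; default padding k // 2 ('same'-style autopad)."""
    p = k // 2 if p is None else p
    return (size + 2 * p - k) // s + 1


def conv_cost(c_in, c_out, k, s, in_size, groups=1):
    """Return (params, FLOPs, out_size) for Conv2d(bias=False) + BatchNorm2d on a square input."""
    out = conv_out_size(in_size, k, s)
    weight = c_out * (c_in // groups) * k * k  # conv weights; no bias because BN follows
    params = weight + 2 * c_out                # BN adds a learnable scale and shift per channel
    macs = weight * out * out                  # one multiply-accumulate per weight per output pixel
    return params, 2 * macs, out               # FLOPs counted as 2 x MACs


# First two layers of the model at imgsz=640
p0, f0, s0 = conv_cost(3, 16, 3, 2, 640)
p1, f1, s1 = conv_cost(16, 32, 3, 2, s0)
```

Here p0 and p1 come out as 464 and 4672, matching the params column for layers 0 and 1, and f0 is 88,473,600 FLOPs (about 0.088 GFLOPs) for the 320 × 320 output of layer 0.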

5. Going further

The module can be combined with other attention mechanisms or loss functions to further improve detection performance.

6. Summary

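The core idea of OREPA is to make conventional structural re-parameterization cheaper to train through online convolutional re-parameterization. During training, OREPA first removes the nonlinear normalization layers from the complex training-time block and replaces them with linear scaling layers, which simplifies the structure while keeping the optimization directions of the branches diverse. Because the resulting block is entirely linear, OREPA can then squeeze it into a single convolution kernel during training, which substantially reduces computation and memory overhead. Training cost drops considerably while the model keeps its representational power, and the inference-time model is both efficient and accurate. The approach is especially suited to settings with limited compute, such as real-time inference.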
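To make "linear scaling + block squeezing" concrete, here is a toy 1-D, single-channel sketch in plain Python. It is not the OREPA implementation, which works on 4-D PyTorch kernels with per-channel scaling vectors and its own set of branches; the branch types (a 3-tap conv, a 1-tap conv, identity, 3-tap average) and all numbers are illustrative assumptions. Because every branch is a linear convolution followed by a linear scale, the summed branch outputs equal one convolution with the summed, zero-padded, scaled kernels.

```python
def conv1d(x, kernel):
    """'Same'-size 1-D cross-correlation with zero padding; kernel length must be odd."""
    pad = len(kernel) // 2
    xp = [0.0] * pad + list(x) + [0.0] * pad
    return [sum(w * xp[i + j] for j, w in enumerate(kernel)) for i in range(len(x))]


def pad_kernel(kernel, size):
    """Zero-pad an odd-length kernel to `size` taps, keeping it centred."""
    p = (size - len(kernel)) // 2
    return [0.0] * p + list(kernel) + [0.0] * p


def multi_branch(x, branches):
    """Training-time block: sum of conv branches, each followed by a linear scale (no BN)."""
    outputs = [[scale * v for v in conv1d(x, k)] for k, scale in branches]
    return [sum(vals) for vals in zip(*outputs)]


def squeeze(branches, size=3):
    """Block squeezing: fold every scaled, padded branch kernel into one kernel."""
    merged = [0.0] * size
    for k, scale in branches:
        merged = [m + scale * w for m, w in zip(merged, pad_kernel(k, size))]
    return merged


# Illustrative branches as (kernel, linear scale) pairs; values are made up.
BRANCHES = [
    ([1.0, 0.5, -1.0], 0.8),       # 3-tap conv
    ([2.0], 1.5),                  # 1-tap ("1x1") conv
    ([1.0], 0.3),                  # identity is a 1-tap kernel of weight 1
    ([1 / 3, 1 / 3, 1 / 3], 0.5),  # zero-padded average pooling is a fixed linear kernel
]

x = [1.0, 2.0, 3.0, 4.0]
y_train = multi_branch(x, BRANCHES)          # four branches evaluated separately
y_squeezed = conv1d(x, squeeze(BRANCHES))    # one convolution with the merged kernel
```

y_train and y_squeezed agree up to floating-point rounding, which is the property that lets the block be computed as a single convolution during training instead of as several branches.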
