本文主要是介绍TensorFlow实战:Chapter-6(CNN-4-经典卷积神经网络(ResNet)),希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

- ResNet

- ResNet简介

- 相关内容

- 论文分析

- 问题引出

- 解决办法

- 实现residual mapping

- 实验

- 实验结果

- ResNet在TensorFlow上的实现

ResNet

ResNet简介

ResNet(Residual Neural Network)由微软研究院的何凯明大神等4人提出,ResNet通过使用Residual Unit成功训练152层神经网络,在ILSCRC2015年比赛中获得3.75%的top-5错误率,获得冠军。ResNet的参数量少,且新增的Residual Unit单元可以极快地加速神经网络的训练,同时模型的准备率也有非常大的提升。本节重点分析KaiMing He大神的《Deep Residual Learning for Image Recognition》论文,以及如何用TensorFlow实现ResNet.

相关内容

在ResNet之前,瑞士教授Schmidhuber提出了Highway Network,原理和ResNet很像,Schmidhuber教授有着一个更出名的发明–LSTM网络。

Highway Network解决的问题?

通常认为神经网络的深度对其性能非常重要,而在增加网络深度的同时随之而来的是网络训练难度增大,Highway Network的目标就是解决极深的神经网络难以训练的问题。Highway Network的原理?

Highway Network相当于修改了每一层激活函数,此前的激活函数是对输入信号做了非线性变换y=H(x,W),Highway Network则允许保留一定比例的原始输入x,即y=H(x,W1)T(x,W2)+xC(x,W3),这里T为变换系数,C为保留系数。在这样的设定下,前面一层的信息,有一定比例的可以直接传输到下一层(不经过矩阵乘法和激活函数变换),如同网络传输中的一条高速公路,因此得名Highway Network。Highway Network主要通过gating units学习如何控制网络中的信息流,即学习原始信息应保留的比例。这个可学习的gatting机制,正是借鉴Schmidhuber教授早年的的LSTM网络中的gatting。这正是Highway Network的引入,使得几百层乃至上千次的网络可以训练了。ResNet网络和Highway Network有啥关系?

ResNet和Highway Network非常相似,都是针对网络随着深度的变化而引发的问题,而Highway Network的解决思路给ResNet提供了解决办法.(下面详解ResNet的问题引出)

论文分析

问题引出

首先抛出一个问题:一个网络性能的提升是否能够可以通过简单的堆叠网络的层数?

这个问题很难回答,因为在网络训练的过程中可能存在梯度消失/爆炸,现在通过规范初始化和中间层归一化技术,在配合以BP为基础的SGD基本上可以训练浅层网络。

但是当网络深度增加时,就会暴露出另一个问题:

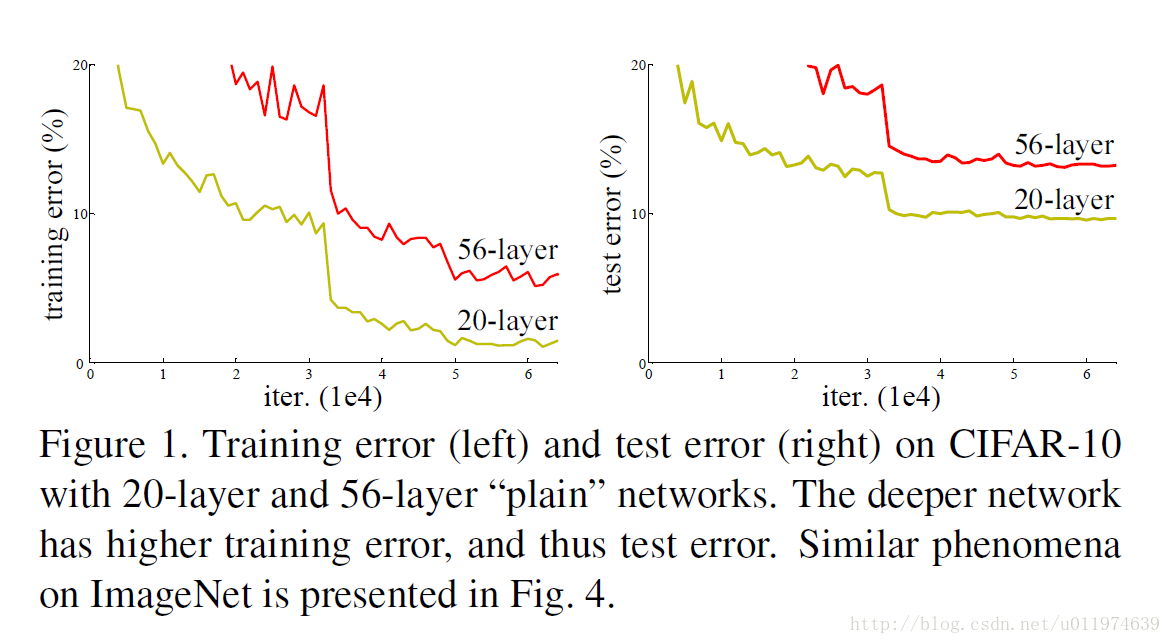

随着网络的深度增加,网络的错误率却在上升。参考下图

我们把这个问题用一个名词degradation表示,首先我们可以判断的是,引起degradation不是过拟合。因为从图上来看,随着网络层数的增加,网络在测试集上的的错误率在上升,但同时在训练集上的错误率也在上升,如果是过拟合,网络在训练集上的错误率不该有是上升的。所有引起degradation的不是因为网络的过拟合。

那么问题出在哪?

回答上面的问题,我们要分析degradation出现的原因:

degradation问题说明了不是所有的系统都是容易优化的,我们可以这么想,针对一个浅层的网络,如果这个网络达到了一定得性能后,我们在该网络的基础上叠加新的网络层(简称深层网络),那么深层网络应该比浅层网络的性能不差,因为如果浅层网络已经是性能最好的话,那么多叠加的网络层学习到后面都为恒等映射(identity mapping)即可,但是实际情况却是训练的误差也在上升,那么就是网络本身有问题了。

解决办法

针对网络本身的问题,文章提出了一个新的网络结构–deep residual learning framework(深度残差学习框架),下面就来详细讲解一下这个新的结构相比以前的网络有啥提升的.

我们将原先堆叠的网络层从一个直接的映射(desired underlying mapping)用一个新的映射代替了,这个新的映射 们称之为residual mapping,即如果我们假设我们期望的映射为H(x),设原本网络的非线性映射为F(x)=H(x)-x, 那么期望的映射就可以写成H(x) = F(x) + x,现在F(x) + x的结构就是本文的resudial mapping,resudial mapping与原本的F(x)的区别很明显,就在于多了一个x。

我们可以考虑极端情况,如果我们需要学习一个恒等映射(即H(x)= x),我们认为通过堆叠网络使得原本的非线性映射F(x)优化为0的难度要比F(x)优化到1要简单.

为了证明这个新的映射好使,文章剩下的部分就是做相关证明工作了。

要证明新的映射好,那么先要设计并实现新的网络架构。

实现residual mapping

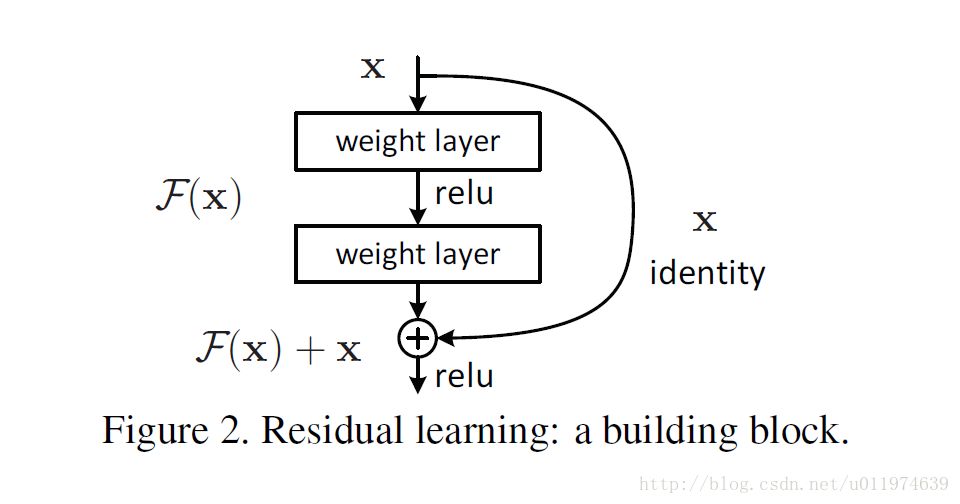

将residual mapping的每层用下图表示:

我们现在要优化的是一个残差结构,设定网络块为:

y = F(x,W) + x # y and y are the input and output vectors

这种结构的实现是在原来网络的基础上添加一个通道(控制里面的前馈),这里新添加的通道需要跳过一个或者多个网络层,可以看到新的网络块不需要添加新的参数,而且新的网络结构依据可以通过SGD来训练,且这种新的网络实现起来也很方便,我们可以等同的比较原本的网络和新的网络结合的性能。

实验

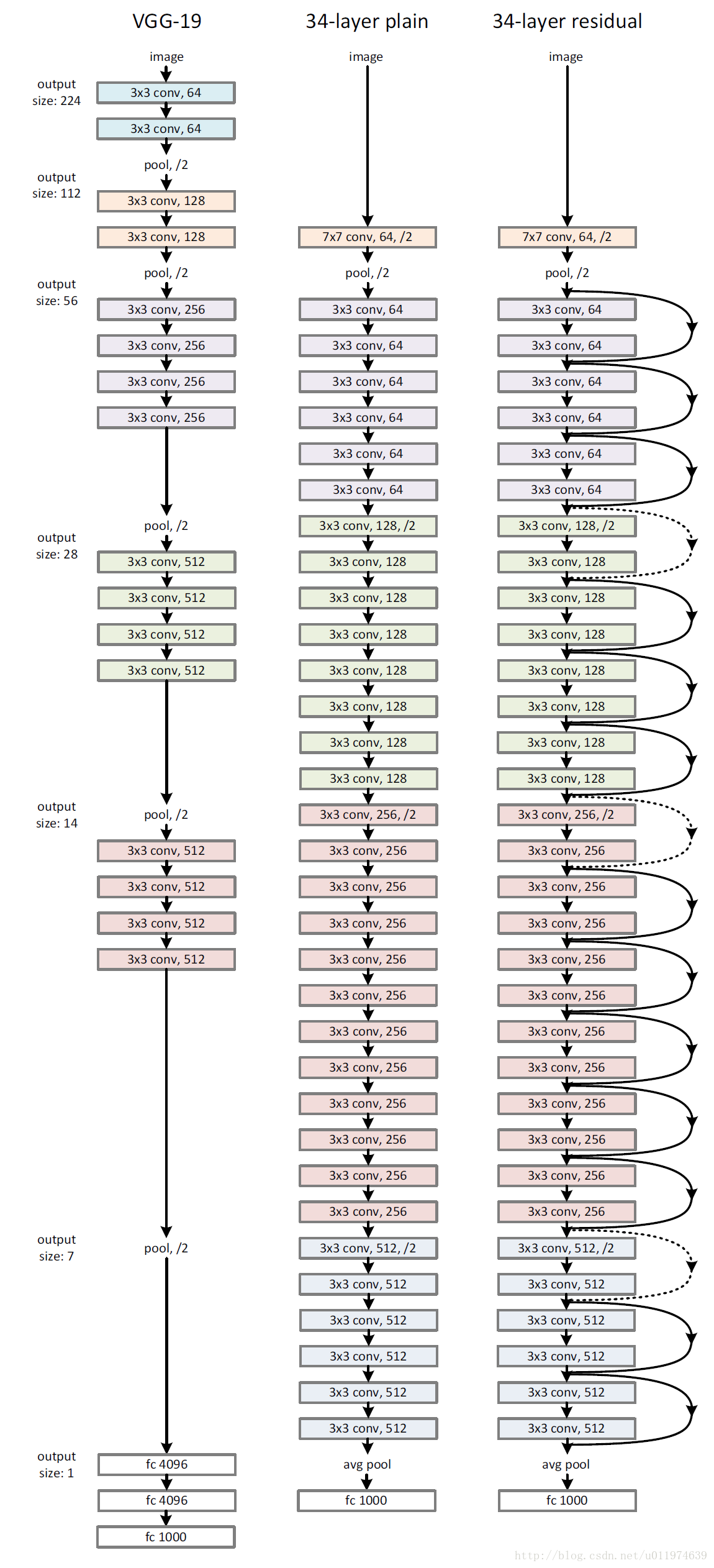

下面我们测试了三个网络:

左边:VGG-19 Model(19.6billion FLOPs);

中间:普通的网络:使用多个3*3的小卷积核(以VGG网络的思想设计),遵循着两个设计原则:

- 对于相同的输出特征图尺寸,层与滤波器的个数是相同的

- 如果输出特征图的尺寸减半,那么滤波器的个数加倍,保持时间复杂度

网络共34层,以全局平均池化层和1000个分类的softmax层结束。

普通的网络需要(3.6billion FLOPs)右边:residual model:这是建立在普通网络的基础上的,可以看到网络的旁边多了很多前馈线,我们把这些前馈线也称之为shortcut或skip connections. 这些前馈线代表的是恒等映射。

前馈线应用会遇到两种情况:- 输入和输出的维度一致,那可以直接连接

- 如果输出的维度增加了,有两种办法

- 恒等前馈线不足的维度添加padding,padding的值为0

- 借鉴Inception Net的思想,经过1*1卷积变换维度

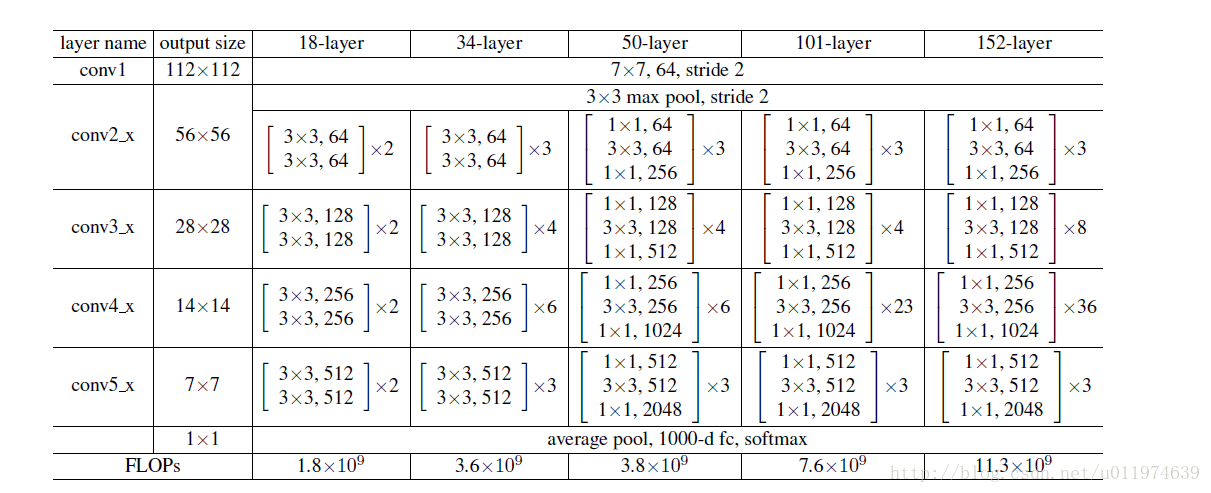

同时配置了不同层数的ResNet,如图:

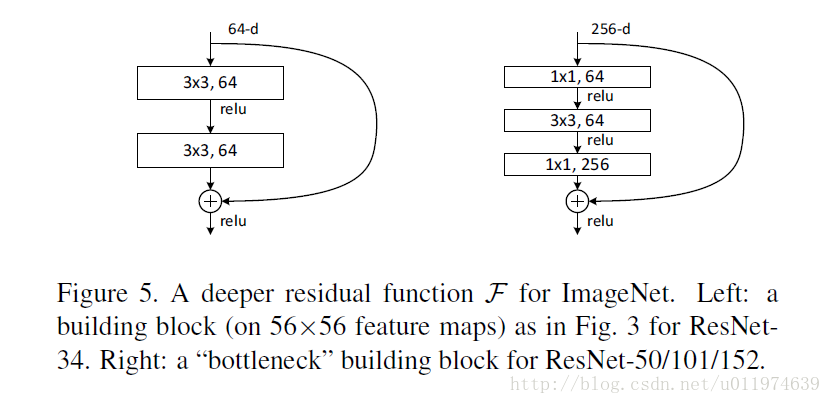

对于两层到三层的ResNet残差学习模块,设计如下:

设计好网络,下面该做实验,分析结果了

实验结果

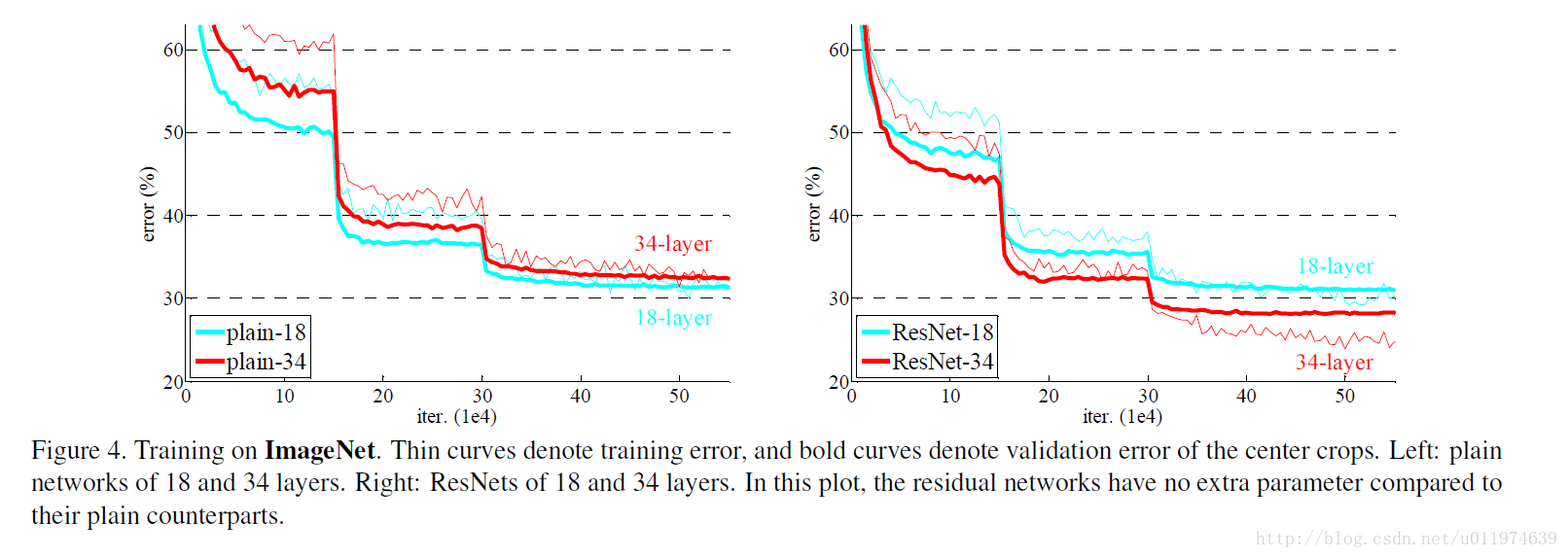

下图是ResNets与普通网络的对比,注意ResNets相对于普通网络是没有额外的参数的:

左边是普通网络随着迭代次数的增加,plain-18和plain-34随着迭代次数的增加,训练误差和验证误差的变化,加粗的是验证误差,细线为训练误差,可以看到无论是训练误差还是验证误差,随着迭代次数的增加,plain-34都比plain-18要大,这就是我们一开始说的degradation问题。

右边是residual net(使用的是零填充),可以看到随着迭代次数的增加 训练集和验证集的误差都继续下降了,说明residual net结构产生的效果确实比较好。

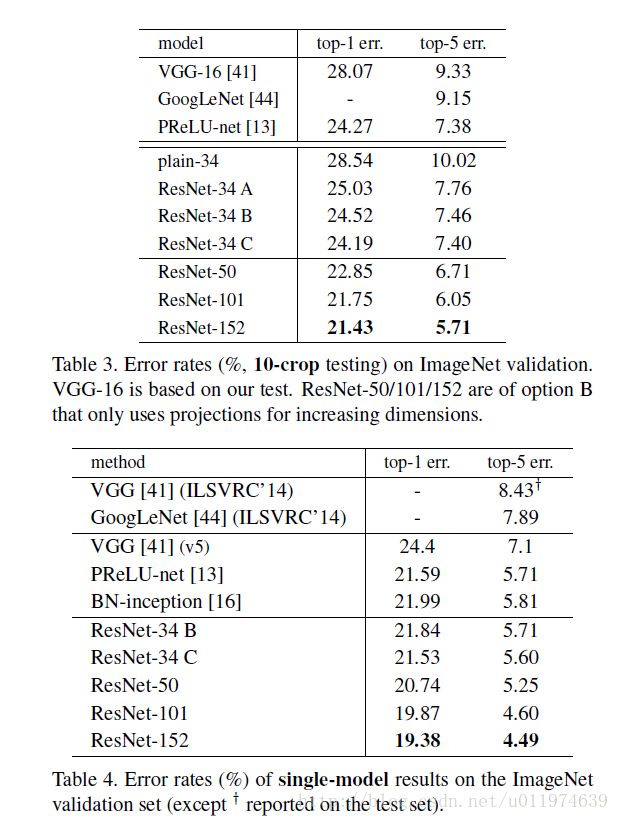

评估residual net在ImageNet数据集上的表现:

resNet-A是使用zero-padding,resNet-B是在等维使用恒等映射,否则使用shortcuts projection,resNet-C的所有shortcuts都是projection

可以看到residual net随着层数的增加,性能继续提升。

到这里论文分析就算结束了,下面分析ResNet在TensorFlow上的开源实现。

ResNet在TensorFlow上的实现

代码如下:

# coding:utf8# %%# Copyright 2016 The TensorFlow Authors. All Rights Reserved.## Licensed under the Apache License, Version 2.0 (the "License");# you may not use this file except in compliance with the License.# You may obtain a copy of the License at## http://www.apache.org/licenses/LICENSE-2.0## Unless required by applicable law or agreed to in writing, software# distributed under the License is distributed on an "AS IS" BASIS,# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.# See the License for the specific language governing permissions and# limitations under the License.# =============================================================================="""Typical use:from tensorflow.contrib.slim.nets import resnet_v2ResNet-101 for image classification into 1000 classes:# inputs has shape [batch, 224, 224, 3]with slim.arg_scope(resnet_v2.resnet_arg_scope(is_training)):net, end_points = resnet_v2.resnet_v2_101(inputs, 1000)ResNet-101 for semantic segmentation into 21 classes:# inputs has shape [batch, 513, 513, 3]with slim.arg_scope(resnet_v2.resnet_arg_scope(is_training)):net, end_points = resnet_v2.resnet_v2_101(inputs,21,global_pool=False,output_stride=16)"""import collectionsimport tensorflow as tfslim = tf.contrib.slimclass Block(collections.namedtuple('Block', ['scope', 'unit_fn', 'args'])):'''使用collections.namedtuple设计ResNet基本的Block模块组的named tuple只包含数据结构,包含具体方法需要传入三个参数[scope,unit_fn,args]以Block('block1',bottleneck,[(256,64,1)]x2 + [(256,64,2)])为例scope = 'block1' 这个Block的名称就是block1unit_fn = bottleneck, 就是ResNet的残差学习单元args = [(256,64,1)]x2 + [(256,64,2)]args是一个列表,每个元素都对应一个bottleneck残差学习单元前面两个元素都是(256,64,1),后一个元素是(256,64,2)每个元素都是一个三元的tuple,代表(depth,depth_bottleneck,stride)例如(256,64,2)代表构建的bottleneck残差学习单元(每个残差学习单元里面有三个卷积层)中,第三层输出通道数depth为256,前两层输出通道数depth_bottleneck为64,且中间层的步长stride为2.这个残差学习单元的结构为[(1x1/s1,64),(3x3/s2,64),(1x1/s1,256)]整个block1中有三个bottleneck残差学习单元,结构为[(1x1/s1,64),(3x3/s2,64),(1x1/s1,256)][(1x1/s1,64),(3x3/s2,64),(1x1/s1,256)][(1x1/s1,64),(3x3/s2,64),(1x1/s1,256)]'''"""A named tuple describing a ResNet block.Its parts are:scope: The scope of the `Block`.unit_fn: The ResNet unit function which takes as input a `Tensor` andreturns another `Tensor` with the output of the ResNet unit.args: A list of length equal to the number of units in the `Block`. The listcontains one (depth, depth_bottleneck, stride) tuple for each unit in theblock to serve as argument to unit_fn."""def subsample(inputs, factor, scope=None):'''降采样方法,如果factor=1,则不做修改返回inputs,不为1,则使用slim.max_pool2d最大池化实现,:param inputs::param factor: 采样因子:param scope::return:'''"""Subsamples the input along the spatial dimensions.Args:inputs: A `Tensor` of size [batch, height_in, width_in, channels].factor: The subsampling factor.scope: Optional variable_scope.Returns:output: A `Tensor` of size [batch, height_out, width_out, channels] with theinput, either intact (if factor == 1) or subsampled (if factor > 1)."""if factor == 1:return inputselse:return slim.max_pool2d(inputs, [1, 1], stride=factor, scope=scope)def conv2d_same(inputs, num_outputs, kernel_size, stride, scope=None):'''如果步长为1,直接使用slim.conv2d,使用conv2d的padding='SAME'如果步长大于1,需要显式的填充0(size已经扩大了),在使用conv2d取padding='VALID'(或者先直接SAME,再调用上面的subsample下采样):param inputs: [batch, height_in, width_in, channels].:param num_outputs: An integer, the number of output filters.:param kernel_size: An int with the kernel_size of the filters.:param stride: An integer, the output stride.:param scope::return:'''"""Strided 2-D convolution with 'SAME' padding.When stride > 1, then we do explicit zero-padding, followed by conv2d with'VALID' padding.Note thatnet = conv2d_same(inputs, num_outputs, 3, stride=stride)is equivalent tonet = slim.conv2d(inputs, num_outputs, 3, stride=1, padding='SAME')net = subsample(net, factor=stride)whereasnet = slim.conv2d(inputs, num_outputs, 3, stride=stride, padding='SAME')is different when the input's height or width is even, which is why we add thecurrent function. For more details, see ResnetUtilsTest.testConv2DSameEven().Args:inputs: A 4-D tensor of size [batch, height_in, width_in, channels].num_outputs: An integer, the number of output filters.kernel_size: An int with the kernel_size of the filters.stride: An integer, the output stride.rate: An integer, rate for atrous convolution.scope: Scope.Returns:output: A 4-D tensor of size [batch, height_out, width_out, channels] withthe convolution output."""if stride == 1:return slim.conv2d(inputs, num_outputs, kernel_size, stride=1,padding='SAME', scope=scope)else:# kernel_size_effective = kernel_size + (kernel_size - 1) * (rate - 1)pad_total = kernel_size - 1pad_beg = pad_total // 2pad_end = pad_total - pad_beginputs = tf.pad(inputs,[[0, 0], [pad_beg, pad_end], [pad_beg, pad_end], [0, 0]])return slim.conv2d(inputs, num_outputs, kernel_size, stride=stride,padding='VALID', scope=scope)@slim.add_arg_scopedef stack_blocks_dense(net, blocks,outputs_collections=None):'''定义堆叠Blocks函数,:param net: 为输入 [batch, height, width, channels]:param blocks: blocks为之前定义好的Blocks的class的列表,:param outputs_collections: 用来收集各个end_points和collections:return:使用两层循环,逐个Block,逐个Residual unit堆叠先使用variable_scope将残差单元命名改为block/unit_%d的形式在第二层,我们拿到每个Blocks中的Residual Unit的args,并展开再使用unit_fn残差学习单元生成函数顺序地创建并连接所有的残差学习单元最后,我们使用slim.utils.collect_named_outputs函数将输出net添加到collection'''"""Stacks ResNet `Blocks` and controls output feature density.First, this function creates scopes for the ResNet in the form of'block_name/unit_1', 'block_name/unit_2', etc.Args:net: A `Tensor` of size [batch, height, width, channels].blocks: A list of length equal to the number of ResNet `Blocks`. Eachelement is a ResNet `Block` object describing the units in the `Block`.outputs_collections: Collection to add the ResNet block outputs.Returns:net: Output tensor"""for block in blocks:with tf.variable_scope(block.scope, 'block', [net]) as sc:for i, unit in enumerate(block.args):with tf.variable_scope('unit_%d' % (i + 1), values=[net]):unit_depth, unit_depth_bottleneck, unit_stride = unitnet = block.unit_fn(net,depth=unit_depth,depth_bottleneck=unit_depth_bottleneck,stride=unit_stride)net = slim.utils.collect_named_outputs(outputs_collections, sc.name, net)return netdef resnet_arg_scope(is_training=True,weight_decay=0.0001,batch_norm_decay=0.997,batch_norm_epsilon=1e-5,batch_norm_scale=True):'''这里创建ResNet通过的arg_scope,用来定义某些函数的参数默认值先设置好BN的各项参数,然后通过slim.arg_scope将slim.conv2d的几个默认参数设置好::param is_training::param weight_decay: 权重衰减率:param batch_norm_decay: BN衰减率默认为0.997:param batch_norm_epsilon::param batch_norm_scale::return:'''"""Defines the default ResNet arg scope.TODO(gpapan): The batch-normalization related default values above areappropriate for use in conjunction with the reference ResNet modelsreleased at https://github.com/KaimingHe/deep-residual-networks. Whentraining ResNets from scratch, they might need to be tuned.Args:is_training: Whether or not we are training the parameters in the batchnormalization layers of the model.weight_decay: The weight decay to use for regularizing the model.batch_norm_decay: The moving average decay when estimating layer activationstatistics in batch normalization.batch_norm_epsilon: Small constant to prevent division by zero whennormalizing activations by their variance in batch normalization.batch_norm_scale: If True, uses an explicit `gamma` multiplier to scale theactivations in the batch normalization layer.Returns:An `arg_scope` to use for the resnet models."""batch_norm_params = {'is_training': is_training,'decay': batch_norm_decay,'epsilon': batch_norm_epsilon,'scale': batch_norm_scale,'updates_collections': tf.GraphKeys.UPDATE_OPS,}'''通过slim.arg_scope将slim.conv2d默认参数权重设置为L2正则权重初始化/激活函数设置/BN设置'''with slim.arg_scope([slim.conv2d],weights_regularizer=slim.l2_regularizer(weight_decay),weights_initializer=slim.variance_scaling_initializer(),activation_fn=tf.nn.relu,normalizer_fn=slim.batch_norm,normalizer_params=batch_norm_params):with slim.arg_scope([slim.batch_norm], **batch_norm_params):# The following implies padding='SAME' for pool1, which makes feature# alignment easier for dense prediction tasks. This is also used in# https://github.com/facebook/fb.resnet.torch. However the accompanying# code of 'Deep Residual Learning for Image Recognition' uses# padding='VALID' for pool1. You can switch to that choice by setting# slim.arg_scope([slim.max_pool2d], padding='VALID').with slim.arg_scope([slim.max_pool2d], padding='SAME') as arg_sc:return arg_sc@slim.add_arg_scopedef bottleneck(inputs, depth, depth_bottleneck, stride,outputs_collections=None, scope=None):'''bottleneck残差学习单元,这是ResNet V2论文中提到的Full Preactivation Residual Unit的一个变种, 它和V1中的残差学习单元的主要区别有两点:1. 在每一层前都用了Batch Normalization2. 对输入进行preactivation,而不是在卷积进行激活函数处理:param inputs::param depth::param depth_bottleneck::param stride::param outputs_collections::param scope::return:'''"""Bottleneck residual unit variant with BN before convolutions.This is the full preactivation residual unit variant proposed in [2]. SeeFig. 1(b) of [2] for its definition. Note that we use here the bottleneckvariant which has an extra bottleneck layer.When putting together two consecutive ResNet blocks that use this unit, oneshould use stride = 2 in the last unit of the first block.Args:inputs: A tensor of size [batch, height, width, channels].depth: The depth of the ResNet unit output.depth_bottleneck: The depth of the bottleneck layers.stride: The ResNet unit's stride. Determines the amount of downsampling ofthe units output compared to its input.rate: An integer, rate for atrous convolution.outputs_collections: Collection to add the ResNet unit output.scope: Optional variable_scope.Returns:The ResNet unit's output."""with tf.variable_scope(scope, 'bottleneck_v2', [inputs]) as sc:# 获取输入的最后一个维度,即输出通道数depth_in = slim.utils.last_dimension(inputs.get_shape(), min_rank=4)#先做BN操作,在使用ReLU做preactivationpreact = slim.batch_norm(inputs, activation_fn=tf.nn.relu, scope='preact')# 定义shortcut,如果残差单元的输入通道数depth_in和输出通道数depth一致,那么使用subsample#按步长为stride对inputs进行空间上的降采样(确保空间尺寸和残差一致,因为残差中间那层的卷积步长为stride)# 如果输入/输出通道数不一样,我们用步长stride的1*1卷积改变其通道数,使得与输出通道数一致if depth == depth_in:shortcut = subsample(inputs, stride, 'shortcut')else:shortcut = slim.conv2d(preact, depth, [1, 1], stride=stride,normalizer_fn=None, activation_fn=None,scope='shortcut')# 然后定义residual,这里residual有3层,先是一个1*1尺寸/步长为1/输出通道数为depth_bottleneck的卷积# 然后是一个3*3尺寸 -->最后还是一个1*1# 最终得到的residual,注意最后一层没有正则化也没有激活函数# 最后将residual和shortcut相加,得到最后的output,再添加到collectionresidual = slim.conv2d(preact, depth_bottleneck, [1, 1], stride=1,scope='conv1')residual = conv2d_same(residual, depth_bottleneck, 3, stride,scope='conv2')residual = slim.conv2d(residual, depth, [1, 1], stride=1,normalizer_fn=None, activation_fn=None,scope='conv3')output = shortcut + residualreturn slim.utils.collect_named_outputs(outputs_collections,sc.name,output)def resnet_v2(inputs,blocks,num_classes=None,global_pool=True,include_root_block=True,reuse=None,scope=None):''':param inputs::param blocks::param num_classes::param global_pool::param include_root_block::param reuse::param scope::return:'''"""Generator for v2 (preactivation) ResNet models.This function generates a family of ResNet v2 models. See the resnet_v2_*()methods for specific model instantiations, obtained by selecting differentblock instantiations that produce ResNets of various depths.Args:inputs: A tensor of size [batch, height_in, width_in, channels].blocks: A list of length equal to the number of ResNet blocks. Each elementis a resnet_utils.Block object describing the units in the block.num_classes: Number of predicted classes for classification tasks. If Nonewe return the features before the logit layer.include_root_block: If True, include the initial convolution followed bymax-pooling, if False excludes it. If excluded, `inputs` should be theresults of an activation-less convolution.reuse: whether or not the network and its variables should be reused. To beable to reuse 'scope' must be given.scope: Optional variable_scope.Returns:net: A rank-4 tensor of size [batch, height_out, width_out, channels_out].If global_pool is False, then height_out and width_out are reduced by afactor of output_stride compared to the respective height_in and width_in,else both height_out and width_out equal one. If num_classes is None, thennet is the output of the last ResNet block, potentially after globalaverage pooling. If num_classes is not None, net contains the pre-softmaxactivations.end_points: A dictionary from components of the network to the correspondingactivation.Raises:ValueError: If the target output_stride is not valid."""with tf.variable_scope(scope, 'resnet_v2', [inputs], reuse=reuse) as sc:end_points_collection = sc.original_name_scope + '_end_points'with slim.arg_scope([slim.conv2d, bottleneck,stack_blocks_dense],outputs_collections=end_points_collection):net = inputsif include_root_block:# We do not include batch normalization or activation functions in conv1# because the first ResNet unit will perform these. Cf. Appendix of [2].with slim.arg_scope([slim.conv2d],activation_fn=None, normalizer_fn=None):net = conv2d_same(net, 64, 7, stride=2, scope='conv1')net = slim.max_pool2d(net, [3, 3], stride=2, scope='pool1')net = stack_blocks_dense(net, blocks)# This is needed because the pre-activation variant does not have batch# normalization or activation functions in the residual unit output. See# Appendix of [2].net = slim.batch_norm(net, activation_fn=tf.nn.relu, scope='postnorm')if global_pool:# Global average pooling.net = tf.reduce_mean(net, [1, 2], name='pool5', keep_dims=True)if num_classes is not None:net = slim.conv2d(net, num_classes, [1, 1], activation_fn=None,normalizer_fn=None, scope='logits')# Convert end_points_collection into a dictionary of end_points.end_points = slim.utils.convert_collection_to_dict(end_points_collection)if num_classes is not None:end_points['predictions'] = slim.softmax(net, scope='predictions')return net, end_pointsdef resnet_v2_50(inputs,num_classes=None,global_pool=True,reuse=None,scope='resnet_v2_50'):"""ResNet-50 model of [1]. See resnet_v2() for arg and return description."""blocks = [Block('block1', bottleneck, [(256, 64, 1)] * 2 + [(256, 64, 2)]),Block('block2', bottleneck, [(512, 128, 1)] * 3 + [(512, 128, 2)]),Block('block3', bottleneck, [(1024, 256, 1)] * 5 + [(1024, 256, 2)]),Block('block4', bottleneck, [(2048, 512, 1)] * 3)]return resnet_v2(inputs, blocks, num_classes, global_pool,include_root_block=True, reuse=reuse, scope=scope)def resnet_v2_101(inputs,num_classes=None,global_pool=True,reuse=None,scope='resnet_v2_101'):"""ResNet-101 model of [1]. See resnet_v2() for arg and return description."""blocks = [Block('block1', bottleneck, [(256, 64, 1)] * 2 + [(256, 64, 2)]),Block('block2', bottleneck, [(512, 128, 1)] * 3 + [(512, 128, 2)]),Block('block3', bottleneck, [(1024, 256, 1)] * 22 + [(1024, 256, 2)]),Block('block4', bottleneck, [(2048, 512, 1)] * 3)]return resnet_v2(inputs, blocks, num_classes, global_pool,include_root_block=True, reuse=reuse, scope=scope)def resnet_v2_152(inputs,num_classes=None,global_pool=True,reuse=None,scope='resnet_v2_152'):"""ResNet-152 model of [1]. See resnet_v2() for arg and return description."""blocks = [Block('block1', bottleneck, [(256, 64, 1)] * 2 + [(256, 64, 2)]),Block('block2', bottleneck, [(512, 128, 1)] * 7 + [(512, 128, 2)]),Block('block3', bottleneck, [(1024, 256, 1)] * 35 + [(1024, 256, 2)]),Block('block4', bottleneck, [(2048, 512, 1)] * 3)]return resnet_v2(inputs, blocks, num_classes, global_pool,include_root_block=True, reuse=reuse, scope=scope)def resnet_v2_200(inputs,num_classes=None,global_pool=True,reuse=None,scope='resnet_v2_200'):"""ResNet-200 model of [2]. See resnet_v2() for arg and return description."""blocks = [Block('block1', bottleneck, [(256, 64, 1)] * 2 + [(256, 64, 2)]),Block('block2', bottleneck, [(512, 128, 1)] * 23 + [(512, 128, 2)]),Block('block3', bottleneck, [(1024, 256, 1)] * 35 + [(1024, 256, 2)]),Block('block4', bottleneck, [(2048, 512, 1)] * 3)]return resnet_v2(inputs, blocks, num_classes, global_pool,include_root_block=True, reuse=reuse, scope=scope)from datetime import datetimeimport mathimport timedef time_tensorflow_run(session, target, info_string):num_steps_burn_in = 10total_duration = 0.0total_duration_squared = 0.0for i in range(num_batches + num_steps_burn_in):start_time = time.time()_ = session.run(target)duration = time.time() - start_timeif i >= num_steps_burn_in:if not i % 10:print ('%s: step %d, duration = %.3f' %(datetime.now(), i - num_steps_burn_in, duration))total_duration += durationtotal_duration_squared += duration * durationmn = total_duration / num_batchesvr = total_duration_squared / num_batches - mn * mnsd = math.sqrt(vr)print ('%s: %s across %d steps, %.3f +/- %.3f sec / batch' %(datetime.now(), info_string, num_batches, mn, sd))batch_size = 32height, width = 224, 224inputs = tf.random_uniform((batch_size, height, width, 3))with slim.arg_scope(resnet_arg_scope(is_training=False)):net, end_points = resnet_v2_152(inputs, 1000)init = tf.global_variables_initializer()sess = tf.Session()sess.run(init)num_batches = 100time_tensorflow_run(sess, net, "Forward")

输出:

2017-08-05 21:21:23.997012: step 0, duration = 0.232

2017-08-05 21:21:26.310152: step 10, duration = 0.230

2017-08-05 21:21:28.625971: step 20, duration = 0.232

2017-08-05 21:21:30.948839: step 30, duration = 0.231

2017-08-05 21:21:33.273177: step 40, duration = 0.232

2017-08-05 21:21:35.608182: step 50, duration = 0.233

2017-08-05 21:21:37.941335: step 60, duration = 0.232

2017-08-05 21:21:40.276842: step 70, duration = 0.231

2017-08-05 21:21:42.609510: step 80, duration = 0.233

2017-08-05 21:21:44.934983: step 90, duration = 0.231

2017-08-05 21:21:47.031013: Forward across 100 steps, 0.233 +/- 0.002 sec / batch层数极深,但是训练的速度还是可以的,ResNet是一个实用的卷积神经网络机构~

这篇关于TensorFlow实战:Chapter-6(CNN-4-经典卷积神经网络(ResNet))的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!