This article introduces Vision Transformer with Sparse Scan Prior.

Abstract

https://arxiv.org/pdf/2405.13335v1

In recent years, Transformers have achieved remarkable progress in computer vision tasks. However, their global modeling often comes with substantial computational overhead, in stark contrast to the human eye’s efficient information processing. Inspired by the human eye’s sparse scanning mechanism, we propose a Sparse Scan Self-Attention mechanism ($\mathrm{S}^{3}\mathrm{A}$). This mechanism predefines a series of Anchors of Interest for each token and employs local attention to efficiently model the spatial information around these anchors, avoiding redundant global modeling and excessive focus on local information. This approach mirrors the human eye’s functionality and significantly reduces the computational load of vision models. Building on $\mathrm{S}^{3}\mathrm{A}$, we introduce the Sparse Scan Vision Transformer (SSViT). Extensive experiments demonstrate the outstanding performance of SSViT across a variety of tasks. Specifically, on ImageNet classification, without additional supervision or training data, SSViT achieves top-1 accuracies of **84.4%/85.7%** with 4.4G/18.2G FLOPs. SSViT also excels in downstream tasks such as object detection, instance segmentation, and semantic segmentation. Its robustness is further validated across diverse datasets. Code will be available at https://github.com/qhfan/SSViT.

1 Introduction

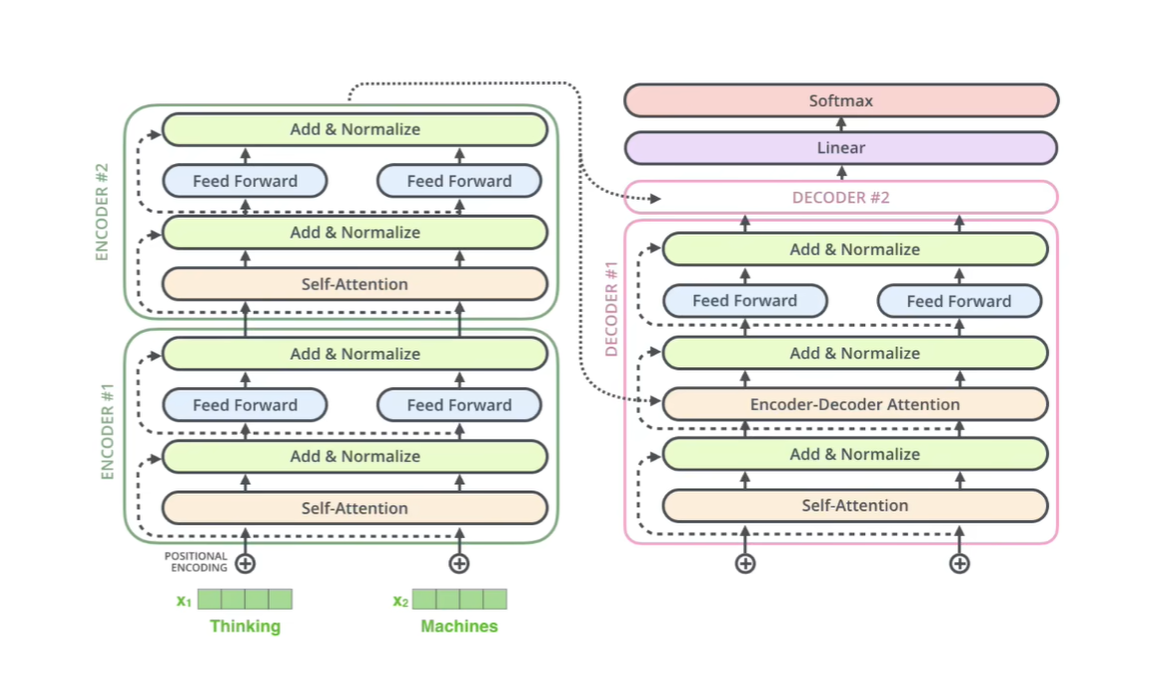

Since its inception, the Vision Transformer (ViT) [12] has attracted considerable attention from the research community, primarily owing to its exceptional capability in modeling long-range dependencies. However, the self-attention mechanism [61], as the core of ViT, imposes significant computational overhead, thus constraining its broader applicability. Several strategies have been proposed to alleviate this limitation of self-attention. For instance, methods such as Swin-Transformer [40, 11] group tokens for attention, reducing computational costs and enabling the model to focus more on local information. Techniques like PVT [63, 64, 18, 16, 29] down-sample tokens to shrink the size of the $QK$ matrix, thus lowering computational demands while retaining global information. Meanwhile, approaches such as UniFormer [35, 47] forgo attention operations in the early stages of visual modeling, opting instead for lightweight convolution. Furthermore, some models [50] enhance computational efficiency by pruning redundant tokens.
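
To make the cost argument above concrete, the following minimal sketch counts how many query-key pairs each strategy would score on a single feature map. It is a back-of-the-envelope illustration only, not code from the paper or from any of the cited models: the function name `attention_pairs`, the 56×56 map, the window size of 7, and the down-sampling factor of 4 are all assumptions chosen for the example, and heads, channels, and projection costs are ignored.

```python
def attention_pairs(height, width, window=7, reduction=4):
    """Count query-key pairs scored by three attention layouts.

    All parameters are illustrative assumptions, not values taken from
    the paper: `window` is the side of a square local window and
    `reduction` is the per-axis down-sampling factor applied to keys.
    """
    n = height * width  # number of tokens, one per spatial position

    # Global attention: every token attends to every token.
    global_pairs = n * n

    # Window (grouped) attention: each token attends only to the
    # window * window tokens that share its window.
    window_pairs = n * window * window

    # Down-sampled attention: queries keep full resolution, while keys
    # are pooled to a (height / reduction) x (width / reduction) grid.
    reduced_keys = (height // reduction) * (width // reduction)
    downsampled_pairs = n * reduced_keys

    return {
        "global": global_pairs,
        "window": window_pairs,
        "downsampled": downsampled_pairs,
    }


if __name__ == "__main__":
    for name, pairs in attention_pairs(56, 56).items():
        print(f"{name:>12}: {pairs:,} query-key pairs")
```

Under these assumed settings, window attention and key down-sampling both cut the pair count by more than an order of magnitude relative to global attention. The first does so by restricting each token to its local neighbourhood, the second by coarsening what each token sees globally.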

Despite these advancements, the majority of methods primarily focus on reducing the token count in self-attention operations to boost ViT efficiency, often neglecting the manner in which human eyes process visual information. The human visual system operates in a notably less intricate yet highly efficient manner compared to ViT models. Unlike the fine-grained local spatial information modeling in models like Swin [40], NAT [20], LVT [69], or the indistinct global information modeling seen in models like PVT [63], PVTv2 [64], CMT [18], human vision employs a sparse scanning
