This article describes how to use Ceph RBD as backend storage for K8s, covering both static and dynamic provisioning.

1. Using Ceph RBD with K8s

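Ceph RBD (RADOS Block Device) is a core component of a Ceph storage cluster that provides block-level storage access. RBD lets users create virtual block devices and map them onto client systems, where they are used just like local disks.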

First, install the ceph-common tools on every K8s node. The kubelet uses the `rbd` command they provide to map images:

yum -y install epel-release ceph-common
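To confirm that a node is ready, a check along these lines can be run on each node after the install. It is a minimal sketch that assumes the in-tree volume plugin, which calls the `rbd` CLI from ceph-common and relies on the `rbd` kernel module; the function name `check_rbd_client` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: verify that this node can map RBD images.
check_rbd_client() {
  # The in-tree rbd volume plugin shells out to the rbd CLI from ceph-common.
  if ! command -v rbd >/dev/null 2>&1; then
    echo "rbd CLI not found: install ceph-common" >&2
    return 1
  fi
  # Mapped images are exposed through the kernel rbd module (/dev/rbdN).
  if ! modinfo rbd >/dev/null 2>&1; then
    echo "kernel module rbd is not available" >&2
    return 1
  fi
  # Print the installed client version.
  rbd --version
}
```

Run `check_rbd_client` on each node; a non-zero exit status and the message on stderr point at the missing piece.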

2. Static provisioning

Static provisioning requires creating the RBD image in advance and handing it to K8s.

2.1 Create the RBD image and an authorized user in Ceph

An RBD image must live in a storage pool. Create a pool named rbd-static-pool (the trailing 8 is its number of placement groups):

ceph osd pool create rbd-static-pool 8

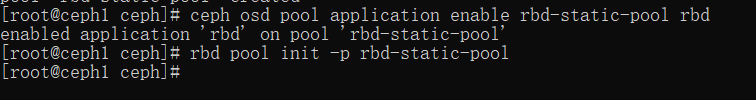
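Eight placement groups are enough for a small test pool. For sizing a real cluster, older Ceph documentation gives a rule of thumb of roughly (number of OSDs × 100) / replica count PGs in total across pools, rounded up to a power of two; recent Ceph releases also ship the `pg_autoscaler` manager module, which can adjust `pg_num` automatically. A sketch of that arithmetic, with a hypothetical function name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: rule-of-thumb total PG count,
# (OSDs * 100) / replica size, rounded up to the next power of two.
suggested_pg_num() {
  local osds=$1 size=$2 target pg=1
  target=$(( (osds * 100 + size - 1) / size ))  # ceiling division
  while (( pg < target )); do
    pg=$(( pg * 2 ))
  done
  echo "$pg"
}
```

For example, `suggested_pg_num 3 3` (3 OSDs, 3 replicas) gives 100 before rounding and prints 128.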

Set the pool's application to RBD and initialize the pool for RBD use:

```bash
# Set the application type
ceph osd pool application enable rbd-static-pool rbd

# Initialize the pool
rbd pool init -p rbd-static-pool
```

Create the RBD image:

```bash
# Create a 5G image
rbd create rbd-static-volume -s 5G -p rbd-static-pool

# Disable features; the format is {pool name}/{image name}
rbd feature disable rbd-static-pool/rbd-static-volume object-map fast-diff deep-flatten
```
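These features are disabled because the in-tree plugin maps the image through the kernel RBD client, and kernels that do not support object-map, fast-diff or deep-flatten refuse to map such an image, reporting a feature set mismatch. To see which of those three features an image still has, something like the following can parse the `features:` line of `rbd info`. It assumes the plain-text output format, and the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `rbd info` output on stdin and print which of
# object-map, fast-diff and deep-flatten are still enabled on the image.
features_to_disable() {
  local line features f out=()
  # Match the "features: ..." line but not "op_features: ...".
  line=$(grep -E '^[[:space:]]*features:' | head -n 1)
  # Normalize to ",a,b,c," so each feature can be matched exactly.
  features=",$(echo "${line#*features:}" | tr -d ' \t'),"
  for f in object-map fast-diff deep-flatten; do
    if [[ $features == *",$f,"* ]]; then
      out+=("$f")
    fi
  done
  echo "${out[*]}"
}
```

Usage: `rbd info rbd-static-pool/rbd-static-volume | features_to_disable` prints nothing once the `rbd feature disable` command above has run.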

Create the user rbd-static-user and grant it access to the rbd-static-pool pool:

ceph auth get-or-create client.rbd-static-user mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=rbd-static-pool' -o ceph.client.rbd-static-user.keyring

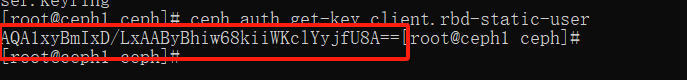
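The same capabilities are granted again to the dynamic-provisioning user in section 3.1, so it can help to build the OSD cap string from the pool name. A minimal sketch; `rbd_osd_caps` and `create_rbd_client` are hypothetical names, and the cap string is copied verbatim from the command above:

```bash
#!/usr/bin/env bash
# Hypothetical helper: the OSD caps used in this article for an RBD client
# restricted to a single pool.
rbd_osd_caps() {
  printf 'allow class-read object_prefix rbd_children, allow rwx pool=%s' "$1"
}

# Hypothetical wrapper: create (or fetch) a client restricted to one pool and
# write its keyring to ceph.client.<user>.keyring.
create_rbd_client() {
  local user=$1 pool=$2
  ceph auth get-or-create "client.$user" mon 'allow r' \
    osd "$(rbd_osd_caps "$pool")" -o "ceph.client.$user.keyring"
}
```

For example, `create_rbd_client rbd-static-user rbd-static-pool` reproduces the command above, and `ceph auth get client.rbd-static-user` shows the caps that were granted. Newer Ceph releases also offer `profile rbd` caps for the same purpose.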

View the key of the rbd-static-user user:

ceph auth get-key client.rbd-static-user

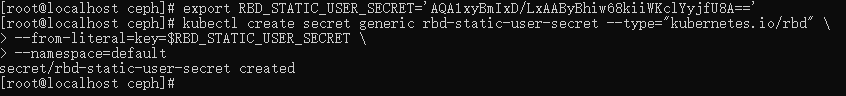

2.2 Create a Secret in K8s holding the key above

```bash
export RBD_STATIC_USER_SECRET='AQA1xyBmIxD/LxAAByBhiw68kiiWKclYyjfU8A=='
kubectl create secret generic rbd-static-user-secret --type="kubernetes.io/rbd" \
  --from-literal=key="$RBD_STATIC_USER_SECRET" \
  --namespace=default
```
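The value returned by `ceph auth get-key` is already base64 text, and Kubernetes stores Secret `data` base64-encoded, so `--from-literal` ends up encoding the key a second time. When writing the Secret as YAML by hand instead, `data.key` must hold that doubly encoded value (or the raw key can go under `stringData`). A sketch that computes the stored value and compares it with what kubectl wrote; both function names are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: the value that ends up in the Secret's .data.key
# for a given cephx key (the key text itself, base64-encoded once more).
secret_data_value() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Hypothetical check: compare with what kubectl stored (needs cluster access).
check_secret() {
  local name=$1 key=$2 stored
  stored=$(kubectl get secret "$name" -o jsonpath='{.data.key}')
  [ "$stored" = "$(secret_data_value "$key")" ]
}
```

Usage: `check_secret rbd-static-user-secret "$RBD_STATIC_USER_SECRET" && echo match`.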

2.3 Use RBD storage directly in a pod

vi rbd-test-pod.yml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: rbd-test-pod
spec:
  containers:
    - name: nginx
      image: nginx
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - name: data-volume
          mountPath: /usr/share/nginx/html/
  volumes:
    - name: data-volume
      rbd:
        monitors: ["11.0.1.140:6789"]
        pool: rbd-static-pool
        image: rbd-static-volume
        user: rbd-static-user
        secretRef:
          name: rbd-static-user-secret
        fsType: ext4
```

kubectl apply -f rbd-test-pod.yml

Check the pod:

kubectl get pods

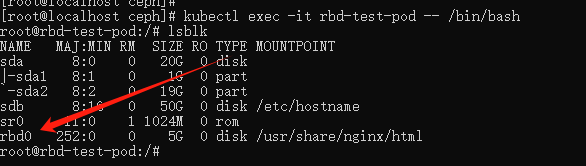

You can exec into the pod and run lsblk to check its disks:

kubectl exec -it rbd-test-pod -- /bin/bash

lsblk
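Inside the container, the RBD image should appear as an `rbdN` block device mounted at the nginx HTML directory. A sketch for filtering it out of `lsblk` output; it assumes `lsblk -l -n -o NAME,MOUNTPOINT` (list format, no header), and the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "device mountpoint" for mounted rbd devices,
# reading `lsblk -l -n -o NAME,MOUNTPOINT` output on stdin.
rbd_mounts() {
  awk '$1 ~ /^rbd[0-9]+$/ && NF >= 2 { print $1, $2 }'
}
```

Usage, without an interactive shell: `kubectl exec rbd-test-pod -- lsblk -l -n -o NAME,MOUNTPOINT | rbd_mounts`.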

2.4 Create a PV backed by RBD

vi rbd-test-pv.yml

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: rbd-test-pv
spec:
  accessModes: ["ReadWriteOnce"]
  capacity:
    storage: 2Gi
  persistentVolumeReclaimPolicy: Retain
  rbd:
    monitors: ["11.0.1.140:6789"]
    pool: rbd-static-pool
    image: rbd-static-volume
    user: rbd-static-user
    secretRef:
      name: rbd-static-user-secret
    fsType: ext4
```

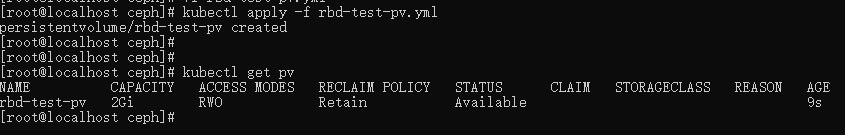

kubectl apply -f rbd-test-pv.yml
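Note that the PV declares `storage: 2Gi` while the image was created with `-s 5G`. For a statically provisioned PV the capacity is only used to match claims; the filesystem created on the image still spans the whole image. The units also differ: `rbd -s 5G` means 5 GiB (rbd sizes are binary), whereas in Kubernetes `5G` is 5×10^9 bytes and `5Gi` is 5×2^30 bytes. A small converter for integer Kubernetes quantities makes such comparisons explicit; it handles only the suffixes listed and is a hypothetical helper, not part of kubectl:

```bash
#!/usr/bin/env bash
# Hypothetical helper: convert an integer Kubernetes quantity such as 2Gi or
# 5G to bytes. Only the suffixes below are handled (no decimals, no m/P/E).
quantity_to_bytes() {
  local q=$1 num unit
  num=${q%%[!0-9]*}   # leading digits
  unit=${q#"$num"}    # remaining suffix
  if [ -z "$num" ]; then
    echo "unsupported quantity: $q" >&2
    return 1
  fi
  case $unit in
    "") echo "$num" ;;
    Ki) echo $(( num * 1024 )) ;;
    Mi) echo $(( num * 1024 ** 2 )) ;;
    Gi) echo $(( num * 1024 ** 3 )) ;;
    Ti) echo $(( num * 1024 ** 4 )) ;;
    k)  echo $(( num * 1000 )) ;;
    M)  echo $(( num * 1000 ** 2 )) ;;
    G)  echo $(( num * 1000 ** 3 )) ;;
    T)  echo $(( num * 1000 ** 4 )) ;;
    *)  echo "unsupported quantity: $q" >&2; return 1 ;;
  esac
}
```

For example, `quantity_to_bytes 2Gi` prints 2147483648, while the image's 5 GiB, written `5Gi` in Kubernetes notation, is 5368709120 bytes.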

Create a PVC that binds to the PV:

vi rbd-test-pvc.yml

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-test-pvc
spec:
  accessModes: ["ReadWriteOnce"]
  resources:
    requests:
      storage: 2Gi
```

kubectl apply -f rbd-test-pvc.yml

Check the PVC and PV:

kubectl get pvc

kubectl get pv
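A PVC binds to an existing PV only if, among other things, the storage class names match, the PV's capacity covers the request, and the PV offers every requested access mode. The sketch below encodes just those three checks and is a simplification (the real PV controller also considers label selectors, volumeMode and pre-bound claimRefs); the function name is hypothetical and sizes are passed in bytes. One practical consequence: if the cluster has a default StorageClass, a PVC without `storageClassName` is assigned that class and will not bind to this class-less PV, while `storageClassName: ""` in the PVC explicitly requests a PV with no class.

```bash
#!/usr/bin/env bash
# Hypothetical, simplified binding predicate.
# Args: pv_class pvc_class pv_bytes request_bytes "pv modes" "pvc modes"
pv_can_bind() {
  local pv_class=$1 pvc_class=$2 pv_bytes=$3 req_bytes=$4
  local pv_modes=$5 pvc_modes=$6 m
  # Storage class names must be identical ("" means no class).
  [[ $pv_class == "$pvc_class" ]] || return 1
  # The PV must be at least as large as the request.
  (( pv_bytes >= req_bytes )) || return 1
  # Every requested access mode must be offered by the PV.
  for m in $pvc_modes; do
    [[ " $pv_modes " == *" $m "* ]] || return 1
  done
  return 0
}
```

Usage: `pv_can_bind "" "" 2147483648 2147483648 ReadWriteOnce ReadWriteOnce && echo "rbd-test-pv can satisfy rbd-test-pvc"`.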

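Test mounting the PVC in a pod. Because rbd-test-pod from section 2.3 uses the same RBD image, delete it first (`kubectl delete pod rbd-test-pod`) so the ext4 filesystem is not mounted read-write by two pods at once: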

vi rbd-test-pod1.yml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: rbd-test-pod1
spec:
  containers:
    - name: nginx
      image: nginx
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - name: data-volume
          mountPath: /usr/share/nginx/html/
  volumes:
    - name: data-volume
      persistentVolumeClaim:
        claimName: rbd-test-pvc
        readOnly: false
```

kubectl apply -f rbd-test-pod1.yml

Check the pod:

kubectl get pods

3. Dynamic provisioning

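With dynamic provisioning, kube-controller-manager would have to run the rbd command to create images, but the official kube-controller-manager image does not include the rbd command. So before starting, deploy the rbd-provisioner plugin, an external provisioner that runs as its own Deployment: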

vi external-storage-rbd-provisioner.yml

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rbd-provisioner
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-provisioner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["kube-dns"]
    verbs: ["list", "get"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-provisioner
subjects:
  - kind: ServiceAccount
    name: rbd-provisioner
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: rbd-provisioner
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: rbd-provisioner
  namespace: kube-system
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: rbd-provisioner
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: rbd-provisioner
subjects:
  - kind: ServiceAccount
    name: rbd-provisioner
    namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rbd-provisioner
  namespace: kube-system
spec:
  selector:
    matchLabels:
      app: rbd-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: rbd-provisioner
    spec:
      containers:
        - name: rbd-provisioner
          image: "registry.cn-chengdu.aliyuncs.com/ives/rbd-provisioner:v2.0.0-k8s1.11"
          env:
            - name: PROVISIONER_NAME
              value: ceph.com/rbd
      serviceAccount: rbd-provisioner
```

kubectl apply -f external-storage-rbd-provisioner.yml

Check the pod:

kubectl get pods -n kube-system

3.1 Create the pool and an authorized user in Ceph

Because K8s creates the RBD images itself, only the pool needs to be created here:

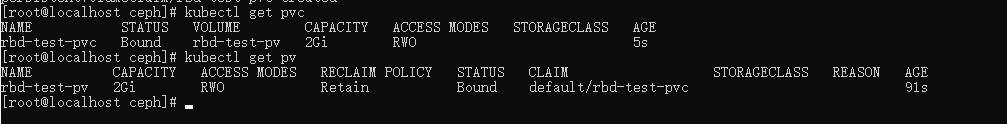

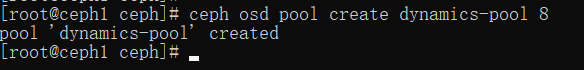

ceph osd pool create dynamics-pool 8

Create the user that K8s will use for access:

ceph auth get-or-create client.dynamics-user mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=dynamics-pool' -o ceph.client.dynamics-user.keyring

View the key of dynamics-user:

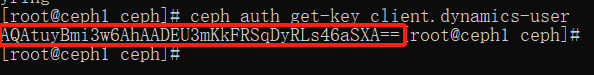

ceph auth get-key client.dynamics-user

Dynamic provisioning also needs the key of the admin user, which the provisioner uses to create images:

ceph auth get-key client.admin

3.2 Create the Secrets in K8s

```bash
# Admin key, used by the provisioner to create images
export ADMIN_USER_SECRET='AQDdUB9mcTI3LRAAOBpt3e7AH5v9fiMtHKQpqA=='
kubectl create secret generic ceph-admin-secret --type="kubernetes.io/rbd" \
  --from-literal=key="$ADMIN_USER_SECRET" \
  --namespace=kube-system

# dynamics-user key, used when the images are mapped on the nodes
export DYNAMICS_USER_SECRET='AQAtuyBmi3w6AhAADEU3mKkFRSqDyRLs46aSXA=='
kubectl create secret generic ceph-dynamics-user-secret --type="kubernetes.io/rbd" \
  --from-literal=key="$DYNAMICS_USER_SECRET" \
  --namespace=default
```
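The admin Secret is referenced through `adminSecretNamespace`, so it can stay in kube-system. The user Secret is different: the Kubernetes documentation for the in-tree rbd provisioner states that the `userSecretName` Secret must exist in the same namespace as the PVCs, and we assume ceph.com/rbd behaves the same way, so claims in other namespaces would need their own copy. A sketch that creates it in several namespaces; the function name and the `KUBECTL` override (set it to `echo` to preview the commands) are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: create ceph-dynamics-user-secret in each namespace
# given after the key. KUBECTL=echo prints the commands instead of running them.
create_user_secret() {
  local key=$1 ns
  shift
  for ns in "$@"; do
    ${KUBECTL:-kubectl} create secret generic ceph-dynamics-user-secret \
      --type="kubernetes.io/rbd" \
      --from-literal=key="$key" \
      --namespace="$ns"
  done
}
```

Usage, with a hypothetical second namespace: `KUBECTL=echo create_user_secret "$DYNAMICS_USER_SECRET" default other-ns` to preview, then the same call without `KUBECTL=echo` to apply.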

3.3 Create the StorageClass

vi ceph-sc.yml

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: dynamic-ceph-rdb
provisioner: ceph.com/rbd
parameters:
  monitors: 11.0.1.140:6789
  adminId: admin
  adminSecretName: ceph-admin-secret
  adminSecretNamespace: kube-system
  pool: dynamics-pool
  userId: dynamics-user
  userSecretName: ceph-dynamics-user-secret
  fsType: ext4
  imageFormat: "2"
  imageFeatures: "layering"
```

kubectl apply -f ceph-sc.yml

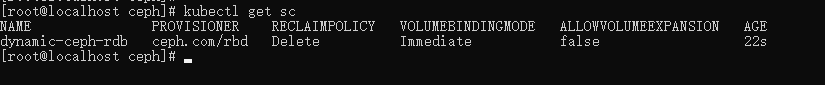

Check the StorageClass:

kubectl get sc
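The `provisioner` field of the StorageClass must equal the `PROVISIONER_NAME` environment variable of the rbd-provisioner Deployment (`ceph.com/rbd` here); if they differ, no provisioner picks up the claims and PVCs stay Pending. A sketch that compares the two through kubectl's JSONPath output; the function name and the `KUBECTL` override (used only to stub kubectl in tests) are assumptions:

```bash
#!/usr/bin/env bash
# Hypothetical helper: check that a StorageClass's provisioner matches the
# PROVISIONER_NAME of the rbd-provisioner Deployment in kube-system.
check_provisioner_name() {
  local sc=$1 want got
  want=$(${KUBECTL:-kubectl} -n kube-system get deploy rbd-provisioner \
    -o jsonpath='{.spec.template.spec.containers[0].env[?(@.name=="PROVISIONER_NAME")].value}')
  got=$(${KUBECTL:-kubectl} get sc "$sc" -o jsonpath='{.provisioner}')
  if [ "$want" != "$got" ]; then
    echo "mismatch: deployment uses '$want', StorageClass $sc uses '$got'" >&2
    return 1
  fi
  echo "ok: $got"
}
```

Usage: `check_provisioner_name dynamic-ceph-rdb`.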

3.4 Test creating a PVC

vi test-pvc.yml

```yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: rbd-pvc1
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: dynamic-ceph-rdb
  resources:
    requests:
      storage: 2Gi
```

kubectl apply -f test-pvc.yml

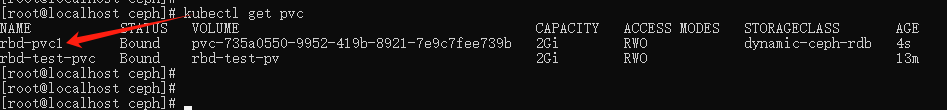

Check the PVC:

kubectl get pvc
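Provisioning is asynchronous, so the PVC may show `Pending` for a moment before it becomes `Bound`. A polling sketch follows; the function name is hypothetical, and the `KUBECTL` and `SLEEP` overrides exist only for testing. On recent kubectl versions, `kubectl wait --for=jsonpath='{.status.phase}'=Bound pvc/rbd-pvc1 --timeout=60s` should achieve the same. If the claim stays Pending, `kubectl describe pvc rbd-pvc1` and the provisioner logs (`kubectl -n kube-system logs deploy/rbd-provisioner`) are the first places to look.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll a PVC until its phase is Bound.
# Args: PVC name, number of attempts (default 30). SLEEP sets the delay.
wait_pvc_bound() {
  local pvc=$1 tries=${2:-30} phase i
  for (( i = 0; i < tries; i++ )); do
    phase=$(${KUBECTL:-kubectl} get pvc "$pvc" -o jsonpath='{.status.phase}')
    if [ "$phase" = "Bound" ]; then
      echo "$pvc is Bound"
      return 0
    fi
    sleep "${SLEEP:-2}"
  done
  echo "$pvc still '$phase' after $tries attempts" >&2
  return 1
}
```

Usage: `wait_pvc_bound rbd-pvc1`.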

Create a pod that uses the PVC above:

vi rbd-test-pod2.yml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: rbd-test-pod2
spec:
  containers:
    - name: nginx
      image: nginx
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - name: data-volume
          mountPath: /usr/share/nginx/html/
  volumes:
    - name: data-volume
      persistentVolumeClaim:
        claimName: rbd-pvc1
        readOnly: false
```

kubectl apply -f rbd-test-pod2.yml

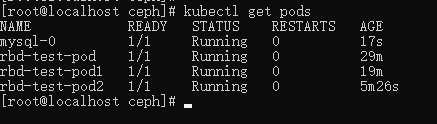

Check the pod:

kubectl get pods
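To find the RBD image behind a dynamically provisioned claim, follow the PVC to its PV and read the PV's `rbd` source. The ceph.com/rbd provisioner is expected to create the images in dynamics-pool with names starting with `kubernetes-dynamic-pvc-`, which `rbd ls -p dynamics-pool` on the Ceph side should list. A sketch; the function name is hypothetical and the `KUBECTL` override exists only for testing:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "<pool>/<image>" for the RBD image behind a PVC.
pvc_rbd_image() {
  local pvc=$1 pv
  # The bound PV's name is recorded in the PVC spec.
  pv=$(${KUBECTL:-kubectl} get pvc "$pvc" -o jsonpath='{.spec.volumeName}')
  [ -n "$pv" ] || { echo "$pvc is not bound yet" >&2; return 1; }
  # Text outside the braces ("/") is printed literally by JSONPath.
  ${KUBECTL:-kubectl} get pv "$pv" -o jsonpath='{.spec.rbd.pool}/{.spec.rbd.image}'
}
```

Usage, on a host that also has Ceph client credentials: `rbd info "$(pvc_rbd_image rbd-pvc1)"`, which should list only the `layering` feature requested by `imageFeatures`.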

3.5 Test dynamically creating PVs and PVCs with volumeClaimTemplates

vi mysql.yml

```yaml
# Headless Service
apiVersion: v1
kind: Service
metadata:
  name: mysql-hl
  labels:
    app: mysql-hl
spec:
  clusterIP: None
  ports:
    - name: mysql-port
      port: 3306
  selector:
    app: mysql
---
# NodePort Service
apiVersion: v1
kind: Service
metadata:
  name: mysql-np
  labels:
    app: mysql-np
spec:
  type: NodePort
  ports:
    - name: master-port
      port: 3306
      nodePort: 31306
      targetPort: 3306
  selector:
    app: mysql
  externalTrafficPolicy: Cluster
---
# Services and StatefulSet share one namespace (the current one, default here)
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql
spec:
  serviceName: "mysql-hl"
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - name: mysql
          image: mysql:8.0.20
          ports:
            - containerPort: 3306
              name: master-port
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: "root"
            - name: TZ
              value: "Asia/Shanghai"
          volumeMounts:
            - name: mysql-data
              mountPath: /var/lib/mysql
  volumeClaimTemplates:
    - metadata:
        name: mysql-data
      spec:
        accessModes: ["ReadWriteOnce"]
        storageClassName: dynamic-ceph-rdb
        resources:
          requests:
            storage: 2Gi
```

kubectl apply -f mysql.yml

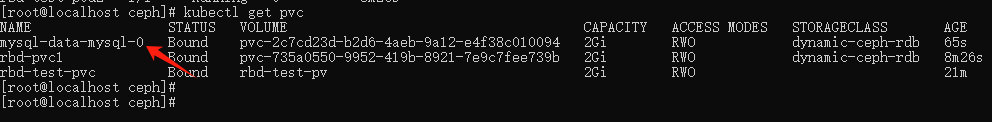

Check the pod:

kubectl get pods

Check the PVC:

kubectl get pvc

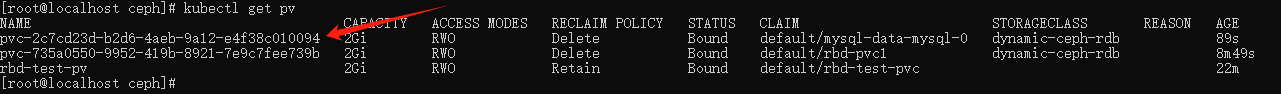
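PVCs created from `volumeClaimTemplates` are named `<template name>-<StatefulSet name>-<ordinal>`, so the single replica here gets `mysql-data-mysql-0`. By default these PVCs are not deleted together with the StatefulSet, so the data survives a re-create. A sketch that derives the names; the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: list the PVC names a StatefulSet creates from one
# volumeClaimTemplate. Args: template name, StatefulSet name, replica count.
sts_pvc_names() {
  local tpl=$1 sts=$2 replicas=$3 i
  for (( i = 0; i < replicas; i++ )); do
    echo "$tpl-$sts-$i"
  done
}
```

Usage: `for pvc in $(sts_pvc_names mysql-data mysql 1); do kubectl get pvc "$pvc"; done`.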

Check the PV:

kubectl get pv
