This article shows how a Java web crawler can fetch data using `Socket` and `HttpURLConnection`.

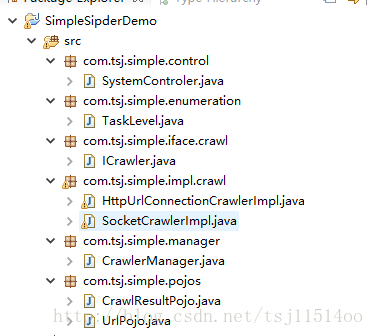

Structure:

Common methods

- POJO class for a URL task

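```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.MalformedURLException;
import java.net.URL;
import java.net.URLConnection;

import com.tsj.simple.enumeration.TaskLevel;

/**
 * POJO class for a URL task.
 * @author tsj-pc
 */
public class UrlPojo {

    private String url;
    // Task priority; defaults to MIDDLE
    private TaskLevel taskLevel = TaskLevel.MIDDLE;

    public UrlPojo(String url) {
        this.url = url;
    }

    public UrlPojo(String url, TaskLevel taskLevel) {
        this.url = url;
        this.taskLevel = taskLevel;
    }

    public String getUrl() { return url; }
    public void setUrl(String url) { this.url = url; }
    public TaskLevel getTaskLevel() { return taskLevel; }
    public void setTaskLevel(TaskLevel taskLevel) { this.taskLevel = taskLevel; }

    /**
     * Opens (but does not yet connect) an HttpURLConnection for this URL.
     * Returns null if the URL is malformed or is not an http/https URL.
     */
    public HttpURLConnection getConnection() {
        try {
            URLConnection connection = new URL(this.url).openConnection();
            if (connection instanceof HttpURLConnection) {
                return (HttpURLConnection) connection;
            }
            System.err.println("not an HTTP connection: " + this.url);
        } catch (IOException e) { // also covers MalformedURLException
            e.printStackTrace();
        }
        return null;
    }

    /** Returns the host part of the URL, or null if the URL is malformed. */
    public String getHost() {
        // Assumed body: the source listing breaks off at "URL url =".
        try {
            URL url = new URL(this.url);
            return url.getHost();
        } catch (MalformedURLException e) {
            e.printStackTrace();
        }
        return null;
    }

    @Override
    public String toString() {
        return "UrlPojo [taskLevel=" + taskLevel + ", url=" + url + "]";
    }
}
```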
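The excerpt stops before the fetching code itself. As a rough sketch of the two approaches the title names, using only the JDK, the class below fetches a page once through `HttpURLConnection` and once through a raw `Socket`. The class and method names (`SimpleFetcher`, `fetchWithHttpUrlConnection`, `fetchWithSocket`), the 5-second timeouts and the UTF-8 decoding are assumptions for illustration, not the author's code. The Socket variant speaks plain HTTP/1.0 only (no HTTPS, redirects, or chunked bodies).

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.HttpURLConnection;
import java.net.Socket;
import java.net.URL;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper, not from the original article: two ways to fetch a page. */
public class SimpleFetcher {

    /** Fetch via HttpURLConnection; assumes an http:// or https:// URL and a UTF-8 body. */
    public static String fetchWithHttpUrlConnection(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
        conn.setRequestMethod("GET");
        conn.setConnectTimeout(5000); // milliseconds (assumed value)
        conn.setReadTimeout(5000);
        try (InputStream in = conn.getInputStream()) {
            // The connection has already parsed the status line and headers; this is the body only.
            return new String(readAll(in), StandardCharsets.UTF_8);
        } finally {
            conn.disconnect();
        }
    }

    /** Fetch via a raw Socket by writing an HTTP/1.0 request by hand; returns headers + body. */
    public static String fetchWithSocket(String host, int port, String path) throws IOException {
        try (Socket socket = new Socket(host, port)) {
            socket.setSoTimeout(5000); // read timeout in milliseconds (assumed value)
            OutputStream out = socket.getOutputStream();
            String request = "GET " + path + " HTTP/1.0\r\n"
                    + "Host: " + host + "\r\n"
                    + "Connection: close\r\n"
                    + "\r\n"; // blank line ends the request headers
            out.write(request.getBytes(StandardCharsets.US_ASCII));
            out.flush();
            // With "Connection: close" the server closes the socket when it is done,
            // so reading until end-of-stream yields the complete raw response.
            return new String(readAll(socket.getInputStream()), StandardCharsets.UTF_8);
        }
    }

    /** Reads a stream to its end into a byte array. */
    private static byte[] readAll(InputStream in) throws IOException {
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        byte[] chunk = new byte[4096];
        int n;
        while ((n = in.read(chunk)) != -1) {
            buf.write(chunk, 0, n);
        }
        return buf.toByteArray();
    }
}
```

With the task class above, a call could look like `SimpleFetcher.fetchWithHttpUrlConnection(new UrlPojo("http://example.com/").getUrl())`. The trade-off shows in the return values: `HttpURLConnection` parses the status line and headers and hands back only the body, while the `Socket` version returns the raw response, so a crawler built on it has to split headers from body itself.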