本文主要是介绍(超详细)7-YOLOV5改进-添加 CoTAttention注意力机制,希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

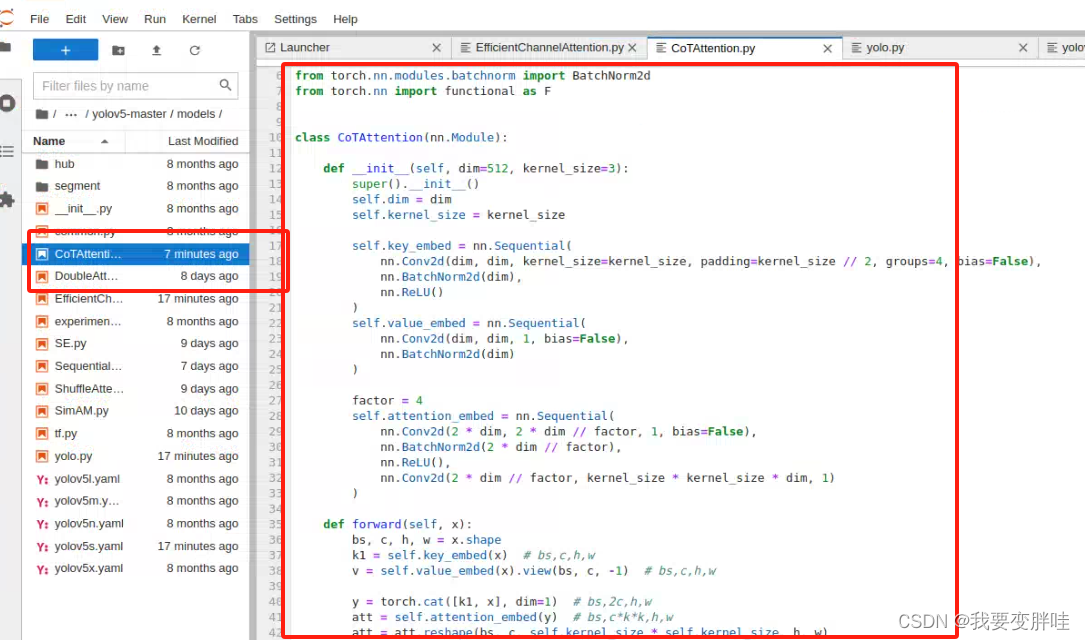

1、在yolov5/models下面新建一个CoTAttention.py文件,在里面放入下面的代码

代码如下:

import numpy as np

import torch

from torch import flatten, nn

from torch.nn import init

from torch.nn.modules.activation import ReLU

from torch.nn.modules.batchnorm import BatchNorm2d

from torch.nn import functional as Fclass CoTAttention(nn.Module):def __init__(self, dim=512, kernel_size=3):super().__init__()self.dim = dimself.kernel_size = kernel_sizeself.key_embed = nn.Sequential(nn.Conv2d(dim, dim, kernel_size=kernel_size, padding=kernel_size // 2, groups=4, bias=False),nn.BatchNorm2d(dim),nn.ReLU())self.value_embed = nn.Sequential(nn.Conv2d(dim, dim, 1, bias=False),nn.BatchNorm2d(dim))factor = 4self.attention_embed = nn.Sequential(nn.Conv2d(2 * dim, 2 * dim // factor, 1, bias=False),nn.BatchNorm2d(2 * dim // factor),nn.ReLU(),nn.Conv2d(2 * dim // factor, kernel_size * kernel_size * dim, 1))def forward(self, x):bs, c, h, w = x.shapek1 = self.key_embed(x) # bs,c,h,wv = self.value_embed(x).view(bs, c, -1) # bs,c,h,wy = torch.cat([k1, x], dim=1) # bs,2c,h,watt = self.attention_embed(y) # bs,c*k*k,h,watt = att.reshape(bs, c, self.kernel_size * self.kernel_size, h, w)att = att.mean(2, keepdim=False).view(bs, c, -1) # bs,c,h*wk2 = F.softmax(att, dim=-1) * vk2 = k2.view(bs, c, h, w)return k1 + k2

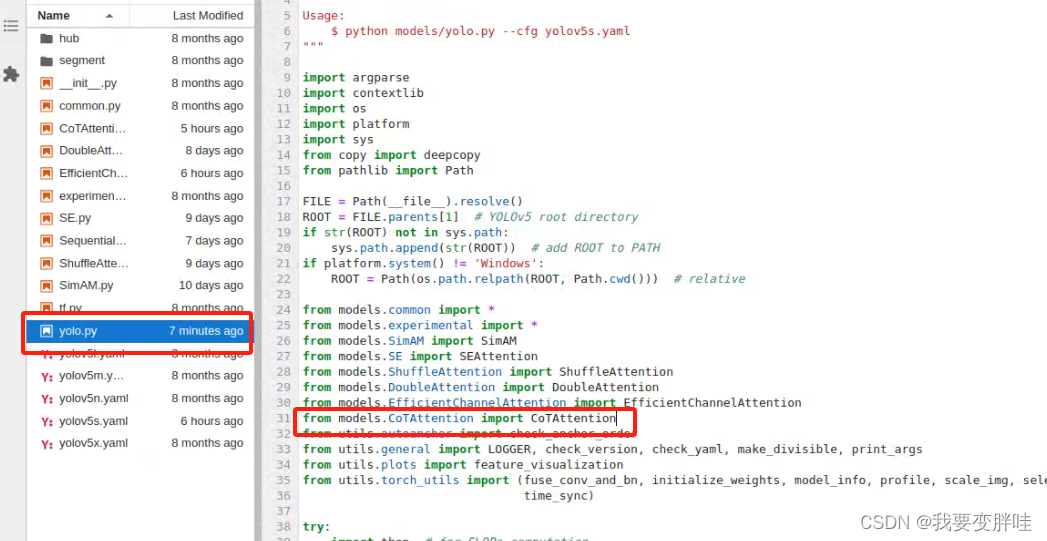

2、找到yolo.py文件,进行更改内容

在29行加一个from models.CoTAttention import CoTAttention, 保存即可

3、找到自己想要更改的yaml文件,我选择的yolov5s.yaml文件(你可以根据自己需求进行选择),将刚刚写好的模块CoTAttention加入到yolov5s.yaml里面,并更改一些内容。更改如下

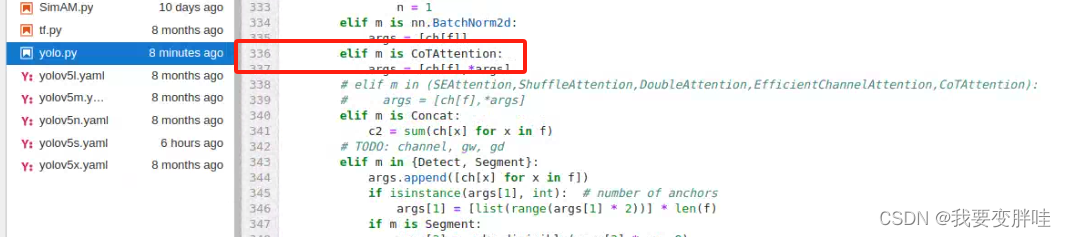

4、在yolo.py里面加入两行代码(335-337)

保存即可!

运行

这篇关于(超详细)7-YOLOV5改进-添加 CoTAttention注意力机制的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!