本文主要是介绍小白 Python爬取豆瓣短评 :以《山海情》为例,希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

Python爬取豆瓣短评:以 《山海情》为例

纯小白一枚,尝试爬取豆瓣短评数据,用的是最简单的方法,别的方法还在学习中,把代码发出来,算是一个记录吧。

完整代码如下:

# 加载包

import requests

import pandas

from lxml import etree# 获取cookie值(略去cookie值则仅能爬取前几页数据)模拟登陆,cookie会随时间有变化

# 爬虫前添加请求头部 Mac

header = {"Cookie": 'gr_user_id=ec05fc26-5e3d-44f5-bc48-a0d2ff8e38c0; _vwo_uuid_v2=D2C7616C9FA22F09A30A43EBAC7B13818|7ebe5b59bdb4d6b1628909ccd51ba25f; bid="vYoSaWwjLcQ"; _ga=GA1.2.38368504.1556943753; ll="118348"; __yadk_uid=Ukxr7Xv6X9agJCEekF6ixuTDUOjhxJni; douban-fav-remind=1; __utmv=30149280.15023; douban-profile-remind=1; __gads=ID=78ef049bfc8f79dc-227bf73dd5c40036:T=1605834617:RT=1605834617:S=ALNI_MasYgFyDWZyx-7MP72of2z7lJKR9A; __utmc=30149280; __utmc=223695111; viewed="33386709_27091667_6862150_11741419_1174911_1265708_1174921_1174438_25981074_34889907"; __utmz=30149280.1611894754.75.64.utmcsr=baidu|utmccn=(organic)|utmcmd=organic; __utmz=223695111.1611894754.13.11.utmcsr=baidu|utmccn=(organic)|utmcmd=organic; dbcl2="150238169:mzx8V6OHHN0"; ck=xMfg; push_noty_num=0; push_doumail_num=0; _pk_ref.100001.4cf6=%5B%22%22%2C%22%22%2C1611932280%2C%22https%3A%2F%2Fwww.baidu.com%2Flink%3Furl%3DL6MugSLgjK2LzG4aFw1UKfJGTYtIXyDFgdAYOkxfSm86YLP7-4UDBhmYrAp1iv-8JCD6FB2gsd4MBkJZNdgXxK%26wd%3D%26eqid%3D976bcfb7000553520000000660138fde%22%5D; _pk_id.100001.4cf6=da0b3a84fc58cff1.1556943753.17.1611932280.1611925194.; ap_v=0,6.0; __utma=30149280.38368504.1556943753.1611925038.1611932280.79; __utma=223695111.1148274009.1556943753.1611925038.1611932280.17',"User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.141 Safari/537.36"}# 初始化用于保存短评的列表

comments = []

ids = []

times = []# 通过观察的url翻页的规律,使用for循环得到多个链接,保存到urls列表中

for i in range(0,24):urls = f"https://movie.douban.com/subject/35033654/comments?start={i*20}&limit=20&status=P&sort=new_score"# requests用get方法获取目标地址的html代码文本htmltext = requests.get(urls, headers=header).text# etree用html方法将html文本转化为html对象htmlelement = etree.HTML(htmltext)# comments 短评file = htmlelement.xpath('//*[@id="comments"]/div/div[2]/p/span/text()')comments = comments + file# ids 用户file = htmlelement.xpath('//*[@id="comments"]/div/div[2]/h3/span[2]/a/text()')ids = ids + fileprint(ids)# time 发布时间file = htmlelement.xpath('//*[@id="comments"]/div/div[2]/h3/span[2]/span[3]/text()')times = times + file# 将定位的内容转换为dataframe对象,数据框具备同样结构

df1 = pandas.DataFrame(comments)

df2 = pandas.DataFrame(ids)

df3 = pandas.DataFrame(times)# 合并dataframe

df = pandas.concat([df2,df1,df3],axis=1)# 将Datafrme对象转换为excel

df.to_excel('comments_douban1.xlsx')print("爬虫完成!")几点说明:

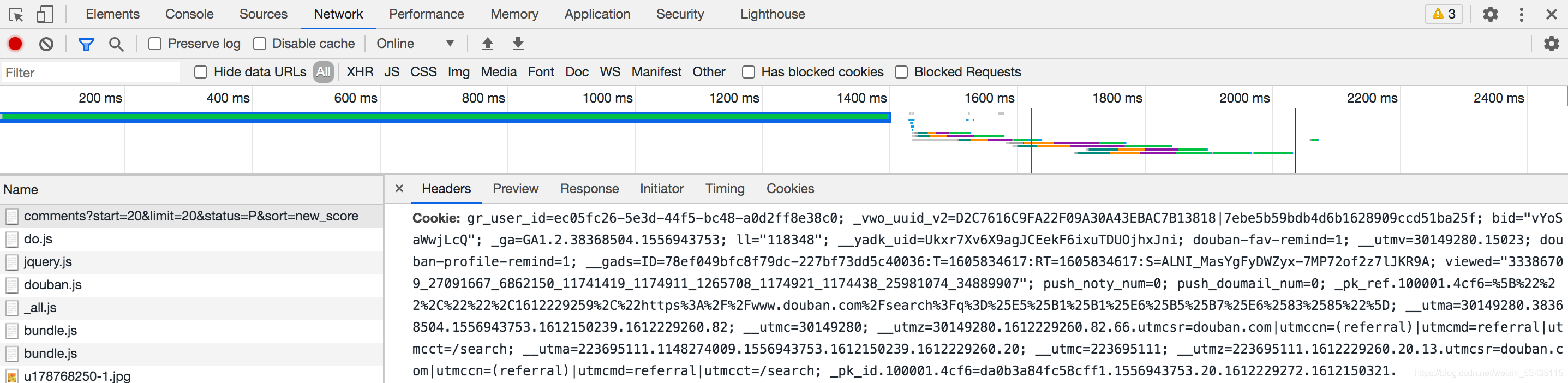

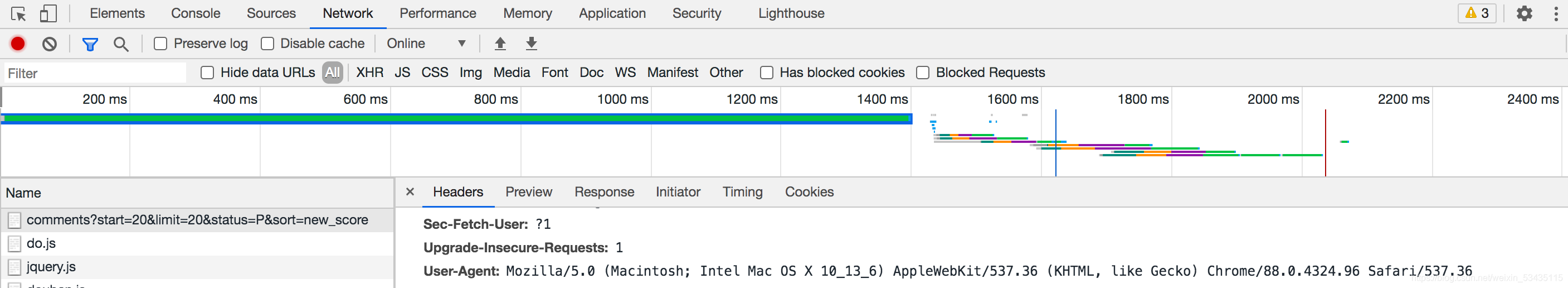

1、cookie和User-Agent获取方法

对应网页下,右键-检查→network

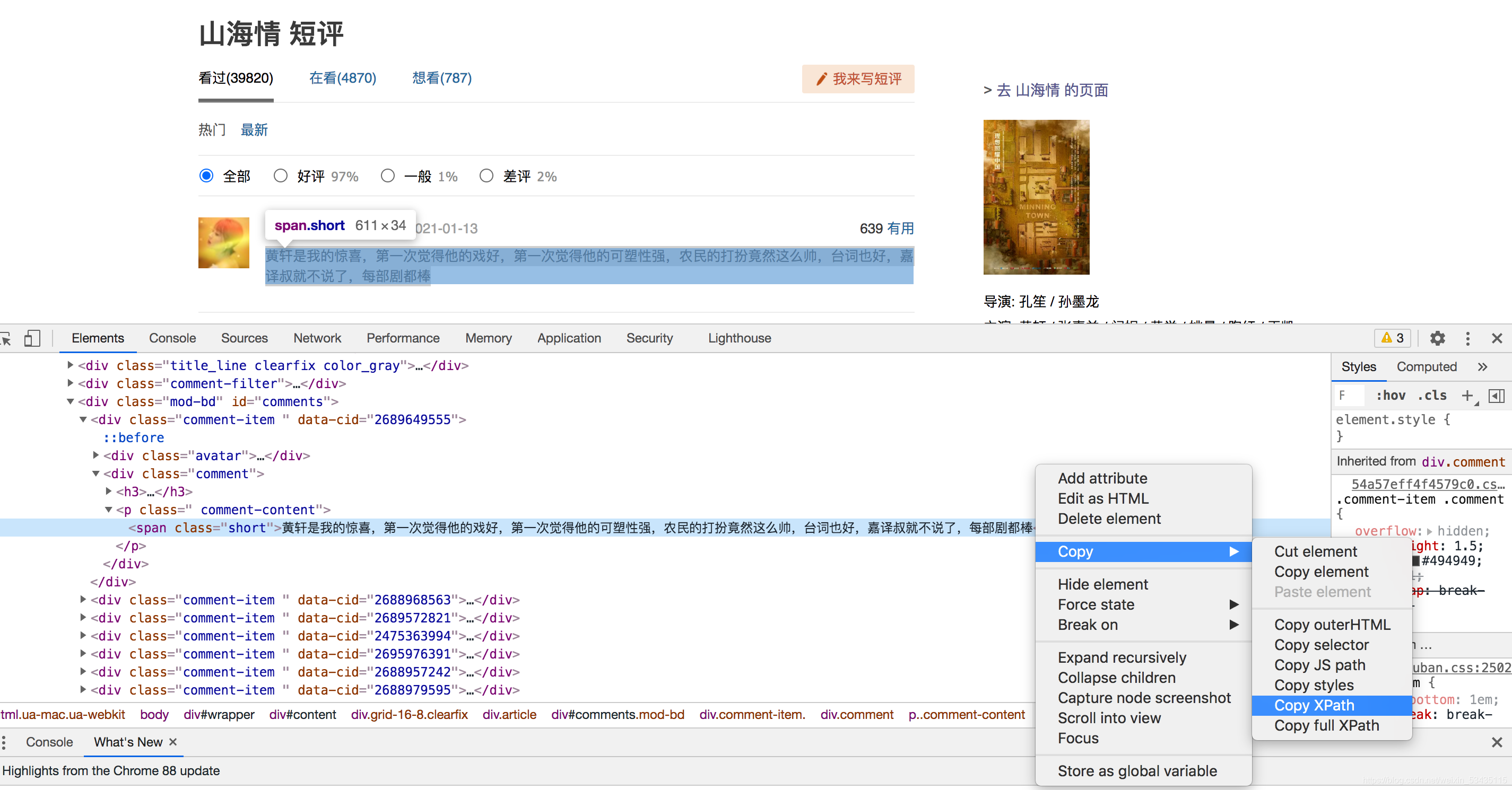

2、Xpath

定位到要分析内容的xpath位置,光标放置于对象上,右键→检查→copy Xpath,多复制几条寻找规律

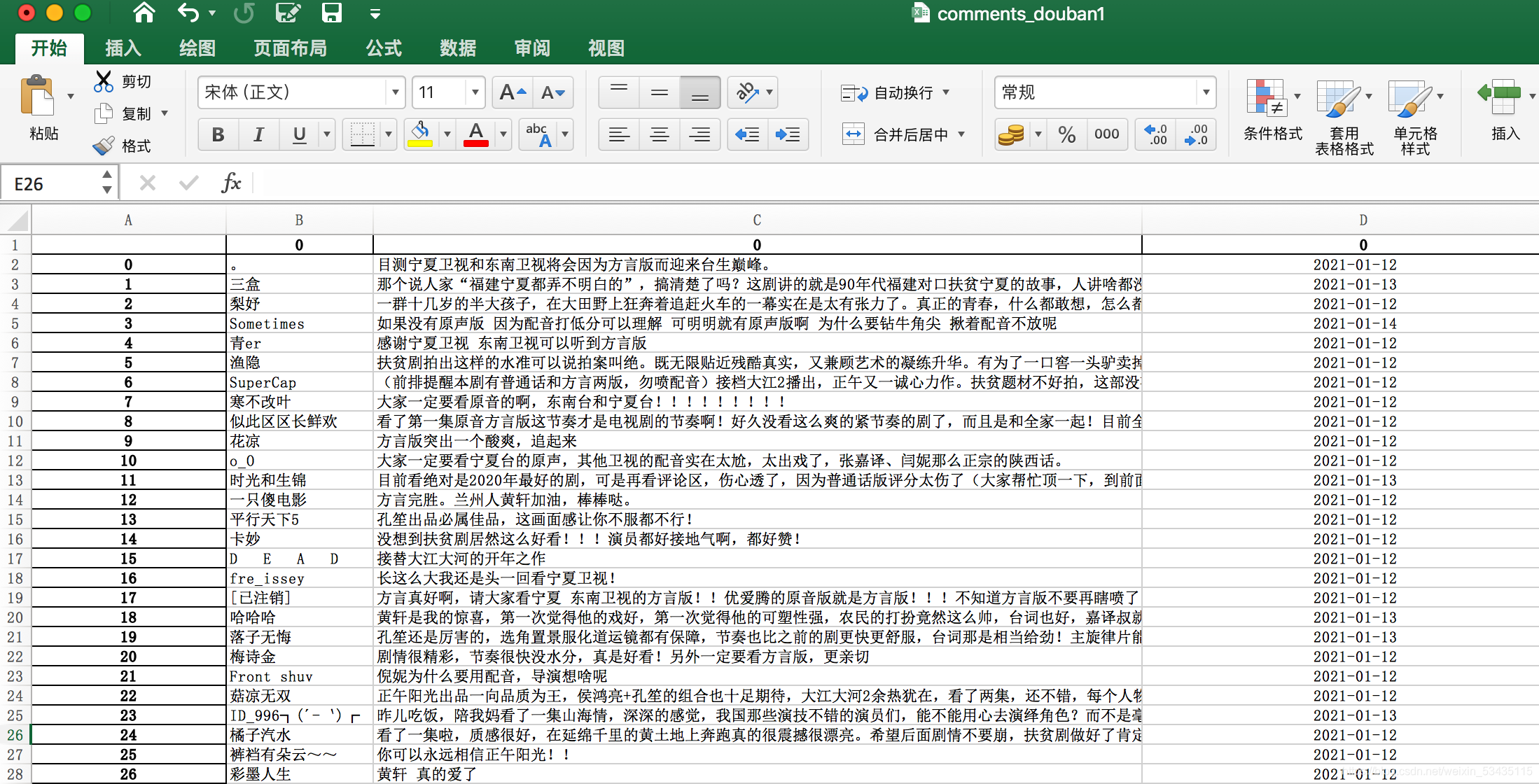

爬虫结果

这篇关于小白 Python爬取豆瓣短评 :以《山海情》为例的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!