This article walks through a hands-on PyTorch project on the Kaggle dataset Skin Cancer MNIST: HAM10000.

Contents

- Dataset overview
- Description
- Overview
- Original Data Source
- From Authors
- First attempt (CNN)
- Handling imbalanced classes with imblearn
- Second attempt (CNN)
- my_dataset.py
- train_CNN.py
- Third attempt (pretrained ResNet18)
- train_ResNet18.py

Dataset overview

The dataset for this exercise is Skin Cancer MNIST: HAM10000 from Kaggle. The official description is as follows:

Description

Overview

Another more interesting than digit classification dataset to use to get biology and medicine students more excited about machine learning and image processing.

Original Data Source

- Original Challenge: https://challenge2018.isic-archive.com

- https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/DBW86T

[1] Noel Codella, Veronica Rotemberg, Philipp Tschandl, M. Emre Celebi, Stephen Dusza, David Gutman, Brian Helba, Aadi Kalloo, Konstantinos Liopyris, Michael Marchetti, Harald Kittler, Allan Halpern: “Skin Lesion Analysis Toward Melanoma Detection 2018: A Challenge Hosted by the International Skin Imaging Collaboration (ISIC)”, 2018; https://arxiv.org/abs/1902.03368

[2] Tschandl, P., Rosendahl, C. & Kittler, H. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci. Data 5, 180161 doi:10.1038/sdata.2018.161 (2018).

From Authors

Training of neural networks for automated diagnosis of pigmented skin lesions is hampered by the small size and lack of diversity of available dataset of dermatoscopic images. We tackle this problem by releasing the HAM10000 (“Human Against Machine with 10000 training images”) dataset. We collected dermatoscopic images from different populations, acquired and stored by different modalities. The final dataset consists of 10015 dermatoscopic images which can serve as a training set for academic machine learning purposes. Cases include a representative collection of all important diagnostic categories in the realm of pigmented lesions: Actinic keratoses and intraepithelial carcinoma / Bowen’s disease (akiec), basal cell carcinoma (bcc), benign keratosis-like lesions (solar lentigines / seborrheic keratoses and lichen-planus like keratoses, bkl), dermatofibroma (df), melanoma (mel), melanocytic nevi (nv) and vascular lesions (angiomas, angiokeratomas, pyogenic granulomas and hemorrhage, vasc).

More than 50% of lesions are confirmed through histopathology (histo), the ground truth for the rest of the cases is either follow-up examination (followup), expert consensus (consensus), or confirmation by in-vivo confocal microscopy (confocal). The dataset includes lesions with multiple images, which can be tracked by the lesion_id-column within the HAM10000_metadata file.

The test set is not public, but the evaluation server remains running (see the challenge website). Any publications written using the HAM10000 data should be evaluated on the official test set hosted there, so that methods can be fairly compared.

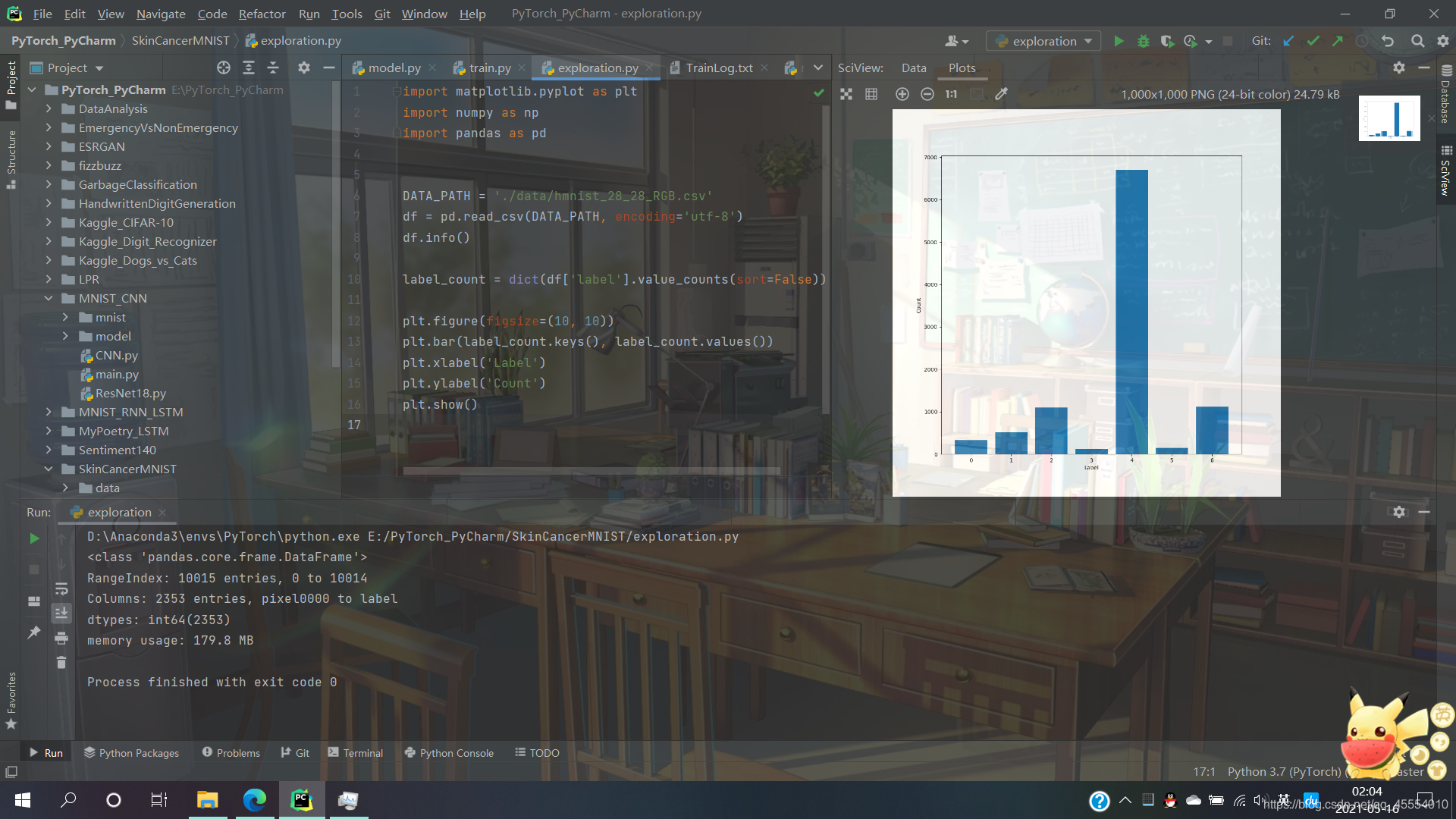

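For training I used hmnist_28_28_RGB.csv. It has 10016 rows (including the header row) and 2353 columns. The first 2352 columns hold each image's pixel values across all three color channels (28×28×3 = 2352). The last column, label, is the image's class. In this file, label takes values in [0, 1, 2, 3, 4, 5, 6].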
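The sketch below shows how one row of this CSV maps back to an image. It assumes the 2352 pixel columns are stored in height, width, channel order, which is the same assumption my_dataset.py makes later. The tiny DataFrame and its `pixelNNNN` column names are made up for illustration. With the real file you would start from `pd.read_csv` instead.

```python
import numpy as np
import pandas as pd

# Hypothetical stand-in for hmnist_28_28_RGB.csv: 4 random rows with the same layout
# (2352 pixel columns followed by a 'label' column); column names are illustrative only.
n_pixels = 28 * 28 * 3  # 2352
rng = np.random.default_rng(0)
df = pd.DataFrame(rng.integers(0, 256, size=(4, n_pixels)),
                  columns=[f'pixel{i:04d}' for i in range(n_pixels)])
df['label'] = [0, 4, 4, 6]

labels = df['label'].to_numpy()
pixels = df.drop(columns=['label']).to_numpy(dtype=np.uint8)
# Assumes R, G, B of each pixel are adjacent, i.e. rows are flattened (H, W, C) images
images = pixels.reshape(-1, 28, 28, 3)
print(df.shape, images.shape)  # (4, 2353) (4, 28, 28, 3)
```

If the assumed channel order were wrong, the images would still have the right shape but scrambled colors. Plotting a few of them is a cheap way to check.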

First attempt (CNN)

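After looking at the dataset, my first idea was to reuse the model I had previously trained on MNIST, since MNIST images are also 28×28. The biggest difference between the two datasets is the color format. MNIST images are grayscale, whereas HAM10000 images are three-channel RGB color images (Kaggle also provides a grayscale version of HAM10000). The tasks also differ. MNIST is a 10-class problem (each image belongs to one of [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]), while HAM10000 is a 7-class problem.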

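Based on this, the earlier MNIST model needs only two changes. First, change in_channels of the first convolutional layer from 1 to 3. Second, change the last nn.Linear from (128, 10) to (128, 7).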

Note: the CNN originally trained on MNIST is shown below. On MNIST it reached a generalization accuracy of 0.99875.

```
CNN(
  (conv_unit1): Sequential(
    (0): Conv2d(1, 16, kernel_size=(3, 3), stride=(1, 1))
    (1): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU(inplace=True)
  )
  (conv_unit2): Sequential(
    (0): Conv2d(16, 32, kernel_size=(3, 3), stride=(1, 1))
    (1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU(inplace=True)
    (3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (conv_unit3): Sequential(
    (0): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1))
    (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU(inplace=True)
  )
  (conv_unit4): Sequential(
    (0): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1))
    (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU(inplace=True)
    (3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (fc_unit): Sequential(
    (0): Linear(in_features=2048, out_features=1024, bias=True)
    (1): ReLU(inplace=True)
    (2): Dropout(p=0.5, inplace=False)
    (3): Linear(in_features=1024, out_features=128, bias=True)
    (4): ReLU(inplace=True)
    (5): Dropout(p=0.5, inplace=False)
    (6): Linear(in_features=128, out_features=10, bias=True)
  )
)
```
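Only the input channels and the final layer need to change because the input is still 28×28. The flattened feature size that feeds fc_unit therefore stays 128 * 4 * 4 = 2048. The sketch below checks that arithmetic with the standard output-size formula out = floor((in + 2·padding − kernel) / stride) + 1. It assumes dilation 1, which holds for every layer in this model. The helper name `conv_out` is hypothetical.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a Conv2d/MaxPool2d layer (dilation=1, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


size = 28
size = conv_out(size, 3)            # conv_unit1 conv: 28 -> 26
size = conv_out(size, 3)            # conv_unit2 conv: 26 -> 24
size = conv_out(size, 2, stride=2)  # conv_unit2 pool: 24 -> 12
size = conv_out(size, 3)            # conv_unit3 conv: 12 -> 10
size = conv_out(size, 3)            # conv_unit4 conv: 10 -> 8
size = conv_out(size, 2, stride=2)  # conv_unit4 pool: 8 -> 4
flat = 128 * size * size            # 128 channels after conv_unit4
print(size, flat)  # 4 2048
```

The same intermediate sizes (26, 24, 12, 10, 8, 4) appear in the torchsummary output of the second attempt.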

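The modified model definition is shown below. It is saved as model.py, because train_CNN.py later imports it with `from model import CNN`: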

```python
import torch.nn as nn


class CNN(nn.Module):
    def __init__(self):
        super(CNN, self).__init__()
        self.conv_unit1 = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=16, kernel_size=3),  # RGB input (MNIST used 1 channel)
            nn.BatchNorm2d(16),
            nn.ReLU(inplace=True),
        )
        self.conv_unit2 = nn.Sequential(
            nn.Conv2d(in_channels=16, out_channels=32, kernel_size=3),
            nn.BatchNorm2d(32),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
        )
        self.conv_unit3 = nn.Sequential(
            nn.Conv2d(in_channels=32, out_channels=64, kernel_size=3),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
        )
        self.conv_unit4 = nn.Sequential(
            nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3),
            nn.BatchNorm2d(128),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
        )
        self.fc_unit = nn.Sequential(
            nn.Linear(128 * 4 * 4, 1024),  # 128 channels x 4 x 4 after conv_unit4
            nn.ReLU(inplace=True),
            nn.Dropout(p=0.5),
            nn.Linear(1024, 128),
            nn.ReLU(inplace=True),
            nn.Dropout(p=0.5),
            nn.Linear(128, 7),  # 7 classes (MNIST used 10)
        )

    def forward(self, x):
        x = self.conv_unit1(x)
        x = self.conv_unit2(x)
        x = self.conv_unit3(x)
        x = self.conv_unit4(x)
        x = x.view(x.size(0), -1)  # flatten to (batch, 2048)
        x = self.fc_unit(x)
        return x
```

In practice, however, this model did not train well. Severe overfitting set in shortly after training started: training accuracy rose above 0.95 while test accuracy stayed below 0.75.

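Looking at the dataset again, I found an issue I had overlooked: the classes in HAM10000 are not equally represented. Label 4 has by far the most samples, while labels 3 and 5 have the fewest. I suspected this imbalance was the main cause of the overfitting. When class proportions are skewed, the model tends to favor the majority class.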
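A quick way to quantify the imbalance before training is to count labels per class. The toy label list below is invented for illustration; on the real data you would use `data['label']`. The last two lines show why accuracy alone can mislead on skewed data. A model that always predicts the majority class already scores the majority share.

```python
import pandas as pd

# Toy stand-in for data['label']; the real column has 10015 entries
labels = pd.Series([4, 4, 4, 4, 4, 4, 2, 2, 6, 3])

counts = labels.value_counts().sort_index()  # samples per class
print(counts.to_dict())

imbalance_ratio = counts.max() / counts.min()  # largest class vs. smallest class
print(imbalance_ratio)  # 6.0

# Accuracy of a trivial model that always outputs the majority class
majority_baseline = counts.max() / len(labels)
print(majority_baseline)  # 0.6
```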

Handling imbalanced classes with imblearn

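For imbalanced data, the simplest remedy is to generate additional minority-class samples. The most basic way to do that is to resample at random, with replacement, from the existing minority-class samples. imblearn.over_sampling.RandomOverSampler() does exactly this. It randomly duplicates minority-class samples until every class has the same number of samples (a 1:1 ratio).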

```python
from imblearn.over_sampling import RandomOverSampler
...
ros = RandomOverSampler()
data = pd.read_csv(DATA_PATH, encoding='utf-8')
labels = data['label']
images = data.drop(columns=['label'])
images, labels = ros.fit_resample(images, labels)
print('The size of dataset: {}'.format(images.shape[0]))
```

```
The size of dataset: 46935
```
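RandomOverSampler's default strategy brings every class up to the size of the largest one. The 46935 rows above therefore split into 46935 / 7 = 6705 per class, which implies the majority class (label 4) has 6705 images. Below is a minimal NumPy re-implementation of that idea for illustration only. It is not imblearn's actual code, and the function name `random_oversample` is hypothetical.

```python
import numpy as np


def random_oversample(X, y, seed=0):
    """Keep every row and add random repeats of minority-class rows
    until each class has as many rows as the largest class."""
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(y, return_counts=True)
    target = counts.max()
    idx = []
    for cls, cnt in zip(classes, counts):
        cls_idx = np.flatnonzero(y == cls)
        extra = rng.choice(cls_idx, size=target - cnt, replace=True)  # empty for the majority class
        idx.append(np.concatenate([cls_idx, extra]))
    idx = np.concatenate(idx)
    return X[idx], y[idx]


X = np.arange(10).reshape(10, 1)           # toy features, one unique value per row
y = np.array([4, 4, 4, 4, 4, 4, 2, 2, 6, 3])
X_res, y_res = random_oversample(X, y)
print(np.unique(y_res, return_counts=True)[1])  # [6 6 6 6]
print(len(y_res))  # 24 = 4 classes * 6

per_class = 46935 // 7  # per-class size implied by the log above
print(per_class)  # 6705
```

Every added row is an exact copy of an existing one. This matters when the resampled data is split afterwards.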

Second attempt (CNN)

my_dataset.py

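```python
import numpy as np
from torch.utils.data import Dataset


class my_dataset(Dataset):
    """Wraps a DataFrame of flattened 28x28x3 images plus a tensor of labels."""

    def __init__(self, df, labels, transform):
        # Each row holds 2352 pixel values; reshape them into (H, W, C) uint8 images,
        # the layout transforms.ToTensor() expects for NumPy arrays
        self.df = df.values.reshape(-1, 28, 28, 3).astype(np.uint8)
        self.labels = labels
        self.transform = transform

    def __len__(self):
        return len(self.df)

    def __getitem__(self, index):
        return self.transform(self.df[index]), self.labels[index]
```

train_CNN.py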

```python
import os

import numpy as np
import pandas as pd
import torch
import torch.nn as nn
import torch.optim as optim
import tqdm
from imblearn.over_sampling import RandomOverSampler
from sklearn.model_selection import train_test_split
from torch.utils.data import DataLoader
from torchsummary import summary
from torchvision import transforms

from model import CNN
from my_dataset import my_dataset

if not os.path.exists('./model/'):
    os.mkdir('./model/')

BATCH_SIZE = 128
DATA_PATH = './data/hmnist_28_28_RGB.csv'
EPOCH = 100
LEARNING_RATE = 1e-3

GPU_IS_OK = torch.cuda.is_available()
device = torch.device('cuda' if GPU_IS_OK else 'cpu')
print('SkinCancerMNIST-HAM10000 is being trained using the {}.'.format('GPU' if GPU_IS_OK else 'CPU'))

ros = RandomOverSampler()
transform = transforms.Compose([
    transforms.ToTensor(),  # HWC uint8 array -> CHW float tensor in [0, 1]
    transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5]),  # -> [-1, 1] per channel
])

print('Loading the dataset...')
data = pd.read_csv(DATA_PATH, encoding='utf-8')
labels = data['label']
images = data.drop(columns=['label'])
# Randomly duplicate minority-class rows until all 7 classes have equal counts
images, labels = ros.fit_resample(images, labels)
print('The size of dataset: {}'.format(images.shape[0]))

X_train, X_eval, y_train, y_eval = train_test_split(images, labels, train_size=0.8, random_state=21)
y_train = torch.from_numpy(np.array(y_train)).type(torch.LongTensor)
y_eval = torch.from_numpy(np.array(y_eval)).type(torch.LongTensor)
train_data = my_dataset(df=X_train, labels=y_train, transform=transform)
eval_data = my_dataset(df=X_eval, labels=y_eval, transform=transform)
data_loader_train = DataLoader(train_data, batch_size=BATCH_SIZE, shuffle=True)
data_loader_eval = DataLoader(eval_data, batch_size=BATCH_SIZE, shuffle=False)
print('Over.')

my_model = CNN().to(device)
print('The model structure and parameters are as follows:')
summary(my_model, input_size=(3, 28, 28))

criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(my_model.parameters(), lr=LEARNING_RATE, betas=(0.9, 0.999), eps=1e-8)

print('Training...')
for epoch in range(1, EPOCH + 1):
    # train
    train_loss, train_correct, train_total, train_step = 0.0, 0, 0, 0
    my_model.train()
    for image, label in tqdm.tqdm(data_loader_train):
        image, label = image.to(device), label.to(device)
        output = my_model(image)
        loss = criterion(output, label)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        train_loss += loss.item()
        _, pred = output.max(1)
        train_correct += (pred == label).sum().item()
        train_total += label.size(0)  # actual batch size; the last batch can be smaller than BATCH_SIZE
        train_step += 1
    train_loss /= train_step
    train_acc = train_correct / train_total

    # eval: no parameter updates, so skip gradient tracking
    eval_loss, eval_correct, eval_total, eval_step = 0.0, 0, 0, 0
    my_model.eval()
    with torch.no_grad():
        for image, label in data_loader_eval:
            image, label = image.to(device), label.to(device)
            output = my_model(image)
            loss = criterion(output, label)
            eval_loss += loss.item()
            _, pred = output.max(1)
            eval_correct += (pred == label).sum().item()
            eval_total += label.size(0)
            eval_step += 1
    eval_loss /= eval_step
    eval_acc = eval_correct / eval_total

    # print the loss and accuracy
    print('[{:3d}/{:3d}] Train Loss: {:11.9f} | Train Accuracy: {:6.4f} | Eval Loss: {:11.9f} | Eval Accuracy: {:6.4f}'
          .format(epoch, EPOCH, train_loss, train_acc, eval_loss, eval_acc))
    # save a checkpoint after every epoch
    torch.save(my_model.state_dict(), './model/cnn_epoch{}.pth'.format(epoch))

print('Training completed.')
```

The training output is as follows:

SkinCancerMNIST-HAM10000 is being trained using the GPU.

Loading the dataset...

The size of dataset: 46935

Over.

The model structure and parameters are as follows:

```
----------------------------------------------------------------
        Layer (type)               Output Shape         Param #
================================================================
            Conv2d-1           [-1, 16, 26, 26]             448
       BatchNorm2d-2           [-1, 16, 26, 26]              32
              ReLU-3           [-1, 16, 26, 26]               0
            Conv2d-4           [-1, 32, 24, 24]           4,640
       BatchNorm2d-5           [-1, 32, 24, 24]              64
              ReLU-6           [-1, 32, 24, 24]               0
         MaxPool2d-7           [-1, 32, 12, 12]               0
            Conv2d-8           [-1, 64, 10, 10]          18,496
       BatchNorm2d-9           [-1, 64, 10, 10]             128
             ReLU-10           [-1, 64, 10, 10]               0
           Conv2d-11            [-1, 128, 8, 8]          73,856
      BatchNorm2d-12            [-1, 128, 8, 8]             256
             ReLU-13            [-1, 128, 8, 8]               0
        MaxPool2d-14            [-1, 128, 4, 4]               0
           Linear-15                 [-1, 1024]       2,098,176
             ReLU-16                 [-1, 1024]               0
          Dropout-17                 [-1, 1024]               0
           Linear-18                  [-1, 128]         131,200
             ReLU-19                  [-1, 128]               0
          Dropout-20                  [-1, 128]               0
           Linear-21                    [-1, 7]             903
================================================================
Total params: 2,328,199
Trainable params: 2,328,199
Non-trainable params: 0
----------------------------------------------------------------
Input size (MB): 0.01
Forward/backward pass size (MB): 1.08
Params size (MB): 8.88
Estimated Total Size (MB): 9.97
----------------------------------------------------------------
```

Training...

100%|██████████| 294/294 [00:08<00:00, 33.63it/s]

[ 1/100] Train Loss: 1.097523572 | Train Accuracy: 0.5776 | Eval Loss: 0.755483223 | Eval Accuracy: 0.7227

100%|██████████| 294/294 [00:08<00:00, 33.96it/s]

[ 2/100] Train Loss: 0.609664759 | Train Accuracy: 0.7711 | Eval Loss: 0.454173865 | Eval Accuracy: 0.8177

100%|██████████| 294/294 [00:08<00:00, 34.66it/s]

[ 3/100] Train Loss: 0.412133431 | Train Accuracy: 0.8409 | Eval Loss: 0.323135038 | Eval Accuracy: 0.8657

100%|██████████| 294/294 [00:08<00:00, 34.49it/s]

[ 4/100] Train Loss: 0.328652176 | Train Accuracy: 0.8741 | Eval Loss: 0.259220612 | Eval Accuracy: 0.8865

100%|██████████| 294/294 [00:08<00:00, 34.93it/s]

[ 5/100] Train Loss: 0.266764857 | Train Accuracy: 0.8963 | Eval Loss: 0.190583932 | Eval Accuracy: 0.9202

100%|██████████| 294/294 [00:08<00:00, 34.90it/s]

[ 6/100] Train Loss: 0.222102846 | Train Accuracy: 0.9144 | Eval Loss: 0.168924466 | Eval Accuracy: 0.9260

100%|██████████| 294/294 [00:08<00:00, 34.82it/s]

[ 7/100] Train Loss: 0.187587072 | Train Accuracy: 0.9275 | Eval Loss: 0.159032213 | Eval Accuracy: 0.9310

100%|██████████| 294/294 [00:08<00:00, 34.72it/s]

[ 8/100] Train Loss: 0.158214238 | Train Accuracy: 0.9394 | Eval Loss: 0.137018257 | Eval Accuracy: 0.9411

100%|██████████| 294/294 [00:08<00:00, 34.86it/s]

[ 9/100] Train Loss: 0.150014194 | Train Accuracy: 0.9425 | Eval Loss: 0.107922806 | Eval Accuracy: 0.9505

100%|██████████| 294/294 [00:08<00:00, 33.43it/s]

[ 10/100] Train Loss: 0.131977622 | Train Accuracy: 0.9490 | Eval Loss: 0.104936043 | Eval Accuracy: 0.9538

100%|██████████| 294/294 [00:09<00:00, 32.28it/s]

[ 11/100] Train Loss: 0.114044261 | Train Accuracy: 0.9567 | Eval Loss: 0.097652382 | Eval Accuracy: 0.9596

100%|██████████| 294/294 [00:08<00:00, 33.37it/s]

[ 12/100] Train Loss: 0.093815261 | Train Accuracy: 0.9652 | Eval Loss: 0.103575373 | Eval Accuracy: 0.9539

100%|██████████| 294/294 [00:09<00:00, 32.32it/s]

[ 13/100] Train Loss: 0.086030702 | Train Accuracy: 0.9660 | Eval Loss: 0.104587730 | Eval Accuracy: 0.9559

100%|██████████| 294/294 [00:08<00:00, 32.70it/s]

[ 14/100] Train Loss: 0.086641784 | Train Accuracy: 0.9678 | Eval Loss: 0.105972225 | Eval Accuracy: 0.9604

100%|██████████| 294/294 [00:08<00:00, 34.83it/s]

[ 15/100] Train Loss: 0.066245359 | Train Accuracy: 0.9749 | Eval Loss: 0.090617250 | Eval Accuracy: 0.9644

100%|██████████| 294/294 [00:08<00:00, 34.07it/s]

[ 16/100] Train Loss: 0.058916593 | Train Accuracy: 0.9772 | Eval Loss: 0.101415676 | Eval Accuracy: 0.9639

100%|██████████| 294/294 [00:08<00:00, 33.75it/s]

[ 17/100] Train Loss: 0.064560697 | Train Accuracy: 0.9761 | Eval Loss: 0.094144044 | Eval Accuracy: 0.9644

100%|██████████| 294/294 [00:09<00:00, 31.71it/s]

[ 18/100] Train Loss: 0.051406341 | Train Accuracy: 0.9801 | Eval Loss: 0.094940048 | Eval Accuracy: 0.9689

100%|██████████| 294/294 [00:08<00:00, 34.40it/s]

[ 19/100] Train Loss: 0.053998672 | Train Accuracy: 0.9800 | Eval Loss: 0.079542600 | Eval Accuracy: 0.9718

100%|██████████| 294/294 [00:08<00:00, 33.62it/s]

[ 20/100] Train Loss: 0.039902703 | Train Accuracy: 0.9846 | Eval Loss: 0.104098257 | Eval Accuracy: 0.9623

100%|██████████| 294/294 [00:08<00:00, 34.40it/s]

[ 21/100] Train Loss: 0.048985874 | Train Accuracy: 0.9815 | Eval Loss: 0.096893505 | Eval Accuracy: 0.9678

100%|██████████| 294/294 [00:09<00:00, 30.48it/s]

[ 22/100] Train Loss: 0.040609885 | Train Accuracy: 0.9839 | Eval Loss: 0.091206420 | Eval Accuracy: 0.9707

100%|██████████| 294/294 [00:08<00:00, 32.78it/s]

[ 23/100] Train Loss: 0.037967500 | Train Accuracy: 0.9856 | Eval Loss: 0.093303132 | Eval Accuracy: 0.9688

100%|██████████| 294/294 [00:08<00:00, 33.69it/s]

[ 24/100] Train Loss: 0.042246320 | Train Accuracy: 0.9838 | Eval Loss: 0.077334349 | Eval Accuracy: 0.9705

100%|██████████| 294/294 [00:08<00:00, 34.19it/s]

[ 25/100] Train Loss: 0.027424354 | Train Accuracy: 0.9889 | Eval Loss: 0.088666342 | Eval Accuracy: 0.9719

100%|██████████| 294/294 [00:08<00:00, 33.58it/s]

[ 26/100] Train Loss: 0.031883474 | Train Accuracy: 0.9874 | Eval Loss: 0.087377901 | Eval Accuracy: 0.9731

100%|██████████| 294/294 [00:08<00:00, 34.63it/s]

[ 27/100] Train Loss: 0.025327368 | Train Accuracy: 0.9888 | Eval Loss: 0.105942547 | Eval Accuracy: 0.9707

100%|██████████| 294/294 [00:08<00:00, 34.66it/s]

[ 28/100] Train Loss: 0.028607841 | Train Accuracy: 0.9889 | Eval Loss: 0.100801623 | Eval Accuracy: 0.9684

100%|██████████| 294/294 [00:08<00:00, 33.74it/s]

[ 29/100] Train Loss: 0.041041512 | Train Accuracy: 0.9847 | Eval Loss: 0.085675368 | Eval Accuracy: 0.9736

100%|██████████| 294/294 [00:08<00:00, 34.72it/s]

[ 30/100] Train Loss: 0.035213987 | Train Accuracy: 0.9874 | Eval Loss: 0.090149027 | Eval Accuracy: 0.9726

100%|██████████| 294/294 [00:08<00:00, 34.06it/s]

[ 31/100] Train Loss: 0.020502711 | Train Accuracy: 0.9913 | Eval Loss: 0.091211458 | Eval Accuracy: 0.9746

100%|██████████| 294/294 [00:08<00:00, 32.83it/s]

[ 32/100] Train Loss: 0.019850730 | Train Accuracy: 0.9913 | Eval Loss: 0.105814460 | Eval Accuracy: 0.9719

100%|██████████| 294/294 [00:08<00:00, 34.28it/s]

[ 33/100] Train Loss: 0.033362939 | Train Accuracy: 0.9871 | Eval Loss: 0.117483189 | Eval Accuracy: 0.9702

100%|██████████| 294/294 [00:08<00:00, 34.21it/s]

[ 34/100] Train Loss: 0.023846829 | Train Accuracy: 0.9901 | Eval Loss: 0.111141844 | Eval Accuracy: 0.9719

100%|██████████| 294/294 [00:09<00:00, 31.31it/s]

[ 35/100] Train Loss: 0.023068270 | Train Accuracy: 0.9909 | Eval Loss: 0.118383290 | Eval Accuracy: 0.9683

100%|██████████| 294/294 [00:08<00:00, 34.54it/s]

[ 36/100] Train Loss: 0.018579563 | Train Accuracy: 0.9922 | Eval Loss: 0.087729960 | Eval Accuracy: 0.9750

100%|██████████| 294/294 [00:08<00:00, 34.39it/s]

[ 37/100] Train Loss: 0.021288767 | Train Accuracy: 0.9908 | Eval Loss: 0.109204472 | Eval Accuracy: 0.9714

100%|██████████| 294/294 [00:08<00:00, 33.08it/s]

[ 38/100] Train Loss: 0.015802225 | Train Accuracy: 0.9925 | Eval Loss: 0.117359866 | Eval Accuracy: 0.9723

100%|██████████| 294/294 [00:08<00:00, 33.09it/s]

[ 39/100] Train Loss: 0.018237389 | Train Accuracy: 0.9921 | Eval Loss: 0.104059537 | Eval Accuracy: 0.9731

100%|██████████| 294/294 [00:08<00:00, 33.07it/s]

[ 40/100] Train Loss: 0.025785094 | Train Accuracy: 0.9897 | Eval Loss: 0.077784421 | Eval Accuracy: 0.9748

100%|██████████| 294/294 [00:09<00:00, 31.94it/s]

[ 41/100] Train Loss: 0.013348644 | Train Accuracy: 0.9936 | Eval Loss: 0.094893168 | Eval Accuracy: 0.9747

100%|██████████| 294/294 [00:08<00:00, 34.41it/s]

[ 42/100] Train Loss: 0.025633474 | Train Accuracy: 0.9899 | Eval Loss: 0.125781385 | Eval Accuracy: 0.9703

100%|██████████| 294/294 [00:08<00:00, 34.63it/s]

[ 43/100] Train Loss: 0.014528002 | Train Accuracy: 0.9933 | Eval Loss: 0.105200119 | Eval Accuracy: 0.9748

100%|██████████| 294/294 [00:08<00:00, 34.39it/s]

[ 44/100] Train Loss: 0.017383436 | Train Accuracy: 0.9923 | Eval Loss: 0.099666189 | Eval Accuracy: 0.9758

100%|██████████| 294/294 [00:08<00:00, 34.60it/s]

[ 45/100] Train Loss: 0.013317063 | Train Accuracy: 0.9933 | Eval Loss: 0.119774037 | Eval Accuracy: 0.9671

100%|██████████| 294/294 [00:08<00:00, 34.57it/s]

[ 46/100] Train Loss: 0.017028496 | Train Accuracy: 0.9925 | Eval Loss: 0.088944853 | Eval Accuracy: 0.9780

100%|██████████| 294/294 [00:08<00:00, 34.55it/s]

[ 47/100] Train Loss: 0.023138134 | Train Accuracy: 0.9903 | Eval Loss: 0.092055711 | Eval Accuracy: 0.9753

100%|██████████| 294/294 [00:08<00:00, 34.60it/s]

[ 48/100] Train Loss: 0.014356152 | Train Accuracy: 0.9934 | Eval Loss: 0.143944562 | Eval Accuracy: 0.9688

100%|██████████| 294/294 [00:08<00:00, 34.14it/s]

[ 49/100] Train Loss: 0.016849747 | Train Accuracy: 0.9929 | Eval Loss: 0.103618778 | Eval Accuracy: 0.9726

100%|██████████| 294/294 [00:09<00:00, 29.67it/s]

[ 50/100] Train Loss: 0.021860482 | Train Accuracy: 0.9917 | Eval Loss: 0.074164134 | Eval Accuracy: 0.9779

100%|██████████| 294/294 [00:08<00:00, 33.86it/s]

[ 51/100] Train Loss: 0.010340259 | Train Accuracy: 0.9947 | Eval Loss: 0.090575095 | Eval Accuracy: 0.9783

100%|██████████| 294/294 [00:08<00:00, 34.29it/s]

[ 52/100] Train Loss: 0.011096111 | Train Accuracy: 0.9942 | Eval Loss: 0.187082287 | Eval Accuracy: 0.9708

100%|██████████| 294/294 [00:08<00:00, 34.25it/s]

[ 53/100] Train Loss: 0.018192557 | Train Accuracy: 0.9926 | Eval Loss: 0.096717112 | Eval Accuracy: 0.9745

100%|██████████| 294/294 [00:08<00:00, 34.42it/s]

[ 54/100] Train Loss: 0.014754963 | Train Accuracy: 0.9933 | Eval Loss: 0.090190653 | Eval Accuracy: 0.9764

100%|██████████| 294/294 [00:08<00:00, 33.46it/s]

[ 55/100] Train Loss: 0.014567046 | Train Accuracy: 0.9931 | Eval Loss: 0.123246608 | Eval Accuracy: 0.9743

100%|██████████| 294/294 [00:08<00:00, 33.60it/s]

[ 56/100] Train Loss: 0.016266681 | Train Accuracy: 0.9925 | Eval Loss: 0.099786264 | Eval Accuracy: 0.9768

100%|██████████| 294/294 [00:08<00:00, 33.46it/s]

[ 57/100] Train Loss: 0.013739238 | Train Accuracy: 0.9937 | Eval Loss: 0.139589401 | Eval Accuracy: 0.9717

100%|██████████| 294/294 [00:08<00:00, 33.97it/s]

[ 58/100] Train Loss: 0.017675589 | Train Accuracy: 0.9925 | Eval Loss: 0.087442964 | Eval Accuracy: 0.9765

100%|██████████| 294/294 [00:08<00:00, 34.06it/s]

[ 59/100] Train Loss: 0.007961982 | Train Accuracy: 0.9953 | Eval Loss: 0.096205003 | Eval Accuracy: 0.9787

100%|██████████| 294/294 [00:08<00:00, 33.77it/s]

[ 60/100] Train Loss: 0.017740263 | Train Accuracy: 0.9924 | Eval Loss: 0.105093248 | Eval Accuracy: 0.9766

100%|██████████| 294/294 [00:08<00:00, 33.24it/s]

[ 61/100] Train Loss: 0.011278499 | Train Accuracy: 0.9945 | Eval Loss: 0.112517525 | Eval Accuracy: 0.9760

100%|██████████| 294/294 [00:08<00:00, 33.57it/s]

[ 62/100] Train Loss: 0.011112630 | Train Accuracy: 0.9947 | Eval Loss: 0.102678308 | Eval Accuracy: 0.9769

100%|██████████| 294/294 [00:08<00:00, 33.78it/s]

[ 63/100] Train Loss: 0.015779533 | Train Accuracy: 0.9925 | Eval Loss: 0.095426129 | Eval Accuracy: 0.9745

100%|██████████| 294/294 [00:08<00:00, 33.39it/s]

[ 64/100] Train Loss: 0.016451213 | Train Accuracy: 0.9929 | Eval Loss: 0.105537525 | Eval Accuracy: 0.9766

100%|██████████| 294/294 [00:08<00:00, 33.30it/s]

[ 65/100] Train Loss: 0.011046330 | Train Accuracy: 0.9945 | Eval Loss: 0.120727181 | Eval Accuracy: 0.9730

100%|██████████| 294/294 [00:08<00:00, 33.17it/s]

[ 66/100] Train Loss: 0.011098871 | Train Accuracy: 0.9951 | Eval Loss: 0.121291299 | Eval Accuracy: 0.9739

100%|██████████| 294/294 [00:08<00:00, 33.73it/s]

[ 67/100] Train Loss: 0.009465052 | Train Accuracy: 0.9945 | Eval Loss: 0.102491436 | Eval Accuracy: 0.9764

100%|██████████| 294/294 [00:08<00:00, 33.48it/s]

[ 68/100] Train Loss: 0.006815458 | Train Accuracy: 0.9957 | Eval Loss: 0.110054041 | Eval Accuracy: 0.9756

100%|██████████| 294/294 [00:08<00:00, 32.85it/s]

[ 69/100] Train Loss: 0.006442149 | Train Accuracy: 0.9959 | Eval Loss: 0.111032489 | Eval Accuracy: 0.9747

100%|██████████| 294/294 [00:08<00:00, 33.48it/s]

[ 70/100] Train Loss: 0.012377970 | Train Accuracy: 0.9942 | Eval Loss: 0.130313925 | Eval Accuracy: 0.9713

100%|██████████| 294/294 [00:08<00:00, 33.69it/s]

[ 71/100] Train Loss: 0.013220965 | Train Accuracy: 0.9939 | Eval Loss: 0.136519495 | Eval Accuracy: 0.9721

100%|██████████| 294/294 [00:08<00:00, 33.77it/s]

[ 72/100] Train Loss: 0.014186614 | Train Accuracy: 0.9932 | Eval Loss: 0.100331317 | Eval Accuracy: 0.9760

100%|██████████| 294/294 [00:08<00:00, 33.65it/s]

[ 73/100] Train Loss: 0.007506685 | Train Accuracy: 0.9953 | Eval Loss: 0.138681288 | Eval Accuracy: 0.9746

100%|██████████| 294/294 [00:08<00:00, 33.83it/s]

[ 74/100] Train Loss: 0.013810731 | Train Accuracy: 0.9940 | Eval Loss: 0.125158271 | Eval Accuracy: 0.9715

100%|██████████| 294/294 [00:08<00:00, 33.32it/s]

[ 75/100] Train Loss: 0.015979569 | Train Accuracy: 0.9933 | Eval Loss: 0.135145771 | Eval Accuracy: 0.9712

100%|██████████| 294/294 [00:08<00:00, 33.42it/s]

[ 76/100] Train Loss: 0.008549465 | Train Accuracy: 0.9950 | Eval Loss: 0.083702183 | Eval Accuracy: 0.9791

100%|██████████| 294/294 [00:08<00:00, 33.33it/s]

[ 77/100] Train Loss: 0.007848934 | Train Accuracy: 0.9953 | Eval Loss: 0.116475816 | Eval Accuracy: 0.9760

100%|██████████| 294/294 [00:08<00:00, 33.26it/s]

[ 78/100] Train Loss: 0.014357760 | Train Accuracy: 0.9933 | Eval Loss: 0.112450972 | Eval Accuracy: 0.9745

100%|██████████| 294/294 [00:08<00:00, 33.33it/s]

[ 79/100] Train Loss: 0.010890201 | Train Accuracy: 0.9945 | Eval Loss: 0.107104631 | Eval Accuracy: 0.9762

100%|██████████| 294/294 [00:08<00:00, 33.15it/s]

[ 80/100] Train Loss: 0.009215189 | Train Accuracy: 0.9950 | Eval Loss: 0.098404882 | Eval Accuracy: 0.9766

100%|██████████| 294/294 [00:08<00:00, 33.28it/s]

[ 81/100] Train Loss: 0.006612052 | Train Accuracy: 0.9957 | Eval Loss: 0.111409775 | Eval Accuracy: 0.9762

100%|██████████| 294/294 [00:09<00:00, 32.56it/s]

[ 82/100] Train Loss: 0.007581905 | Train Accuracy: 0.9955 | Eval Loss: 0.110171462 | Eval Accuracy: 0.9760

100%|██████████| 294/294 [00:08<00:00, 33.61it/s]

[ 83/100] Train Loss: 0.008717973 | Train Accuracy: 0.9956 | Eval Loss: 0.109199356 | Eval Accuracy: 0.9758

100%|██████████| 294/294 [00:08<00:00, 33.70it/s]

[ 84/100] Train Loss: 0.014628284 | Train Accuracy: 0.9940 | Eval Loss: 0.159773995 | Eval Accuracy: 0.9683

100%|██████████| 294/294 [00:08<00:00, 33.90it/s]

[ 85/100] Train Loss: 0.012513291 | Train Accuracy: 0.9943 | Eval Loss: 0.111567242 | Eval Accuracy: 0.9767

100%|██████████| 294/294 [00:08<00:00, 33.74it/s]

[ 86/100] Train Loss: 0.008524043 | Train Accuracy: 0.9956 | Eval Loss: 0.108253531 | Eval Accuracy: 0.9766

100%|██████████| 294/294 [00:08<00:00, 33.63it/s]

[ 87/100] Train Loss: 0.010917671 | Train Accuracy: 0.9945 | Eval Loss: 0.101949107 | Eval Accuracy: 0.9756

100%|██████████| 294/294 [00:08<00:00, 33.59it/s]

[ 88/100] Train Loss: 0.009442122 | Train Accuracy: 0.9951 | Eval Loss: 0.107041496 | Eval Accuracy: 0.9775

100%|██████████| 294/294 [00:08<00:00, 33.12it/s]

[ 89/100] Train Loss: 0.007147174 | Train Accuracy: 0.9957 | Eval Loss: 0.123801872 | Eval Accuracy: 0.9780

100%|██████████| 294/294 [00:08<00:00, 33.02it/s]

[ 90/100] Train Loss: 0.006955593 | Train Accuracy: 0.9953 | Eval Loss: 0.132401853 | Eval Accuracy: 0.9771

100%|██████████| 294/294 [00:09<00:00, 32.51it/s]

[ 91/100] Train Loss: 0.011964139 | Train Accuracy: 0.9944 | Eval Loss: 0.099037318 | Eval Accuracy: 0.9772

100%|██████████| 294/294 [00:08<00:00, 33.07it/s]

[ 92/100] Train Loss: 0.007620785 | Train Accuracy: 0.9957 | Eval Loss: 0.149788247 | Eval Accuracy: 0.9732

100%|██████████| 294/294 [00:08<00:00, 33.12it/s]

[ 93/100] Train Loss: 0.009657309 | Train Accuracy: 0.9951 | Eval Loss: 0.108477229 | Eval Accuracy: 0.9762

100%|██████████| 294/294 [00:08<00:00, 33.46it/s]

[ 94/100] Train Loss: 0.015646753 | Train Accuracy: 0.9935 | Eval Loss: 0.123420767 | Eval Accuracy: 0.9747

100%|██████████| 294/294 [00:08<00:00, 33.19it/s]

[ 95/100] Train Loss: 0.009221227 | Train Accuracy: 0.9953 | Eval Loss: 0.104509765 | Eval Accuracy: 0.9768

100%|██████████| 294/294 [00:08<00:00, 33.92it/s]

[ 96/100] Train Loss: 0.006059382 | Train Accuracy: 0.9960 | Eval Loss: 0.116007676 | Eval Accuracy: 0.9760

100%|██████████| 294/294 [00:08<00:00, 33.39it/s]

[ 97/100] Train Loss: 0.005086137 | Train Accuracy: 0.9963 | Eval Loss: 0.119936604 | Eval Accuracy: 0.9760

100%|██████████| 294/294 [00:09<00:00, 31.08it/s]

[ 98/100] Train Loss: 0.007669268 | Train Accuracy: 0.9957 | Eval Loss: 0.131530369 | Eval Accuracy: 0.9760

100%|██████████| 294/294 [00:09<00:00, 30.89it/s]

[ 99/100] Train Loss: 0.010287939 | Train Accuracy: 0.9946 | Eval Loss: 0.111930982 | Eval Accuracy: 0.9774

100%|██████████| 294/294 [00:09<00:00, 32.55it/s]

[100/100] Train Loss: 0.007963784 | Train Accuracy: 0.9956 | Eval Loss: 0.145335207 | Eval Accuracy: 0.9720

Training completed.
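A note on how per-epoch accuracy is averaged. Dividing num_correct by BATCH_SIZE instead of by the actual batch size makes a short final batch look partly wrong even when every prediction in it is correct. The resulting per-batch average then sits slightly below the true sample-level accuracy. The back-of-the-envelope sketch below assumes the 80/20 split yields exactly 37548 training and 9387 eval rows. The 294 iterations in the progress bar are consistent with this. The helper `sample_level_acc` is hypothetical.

```python
import math

N_TOTAL = 46935   # dataset size after oversampling, from the log
BATCH_SIZE = 128
n_train = N_TOTAL * 8 // 10   # 37548, assuming an exact 80/20 split
n_eval = N_TOTAL - n_train    # 9387

train_batches = math.ceil(n_train / BATCH_SIZE)                 # 294, as in the tqdm bar
eval_batches = math.ceil(n_eval / BATCH_SIZE)                   # 74
last_train_batch = n_train - (train_batches - 1) * BATCH_SIZE   # 44 samples in the final batch


def sample_level_acc(reported, n_samples, n_batches, batch_size=BATCH_SIZE):
    """Convert mean(num_correct / batch_size) over n_batches into num_correct / n_samples."""
    total_correct = reported * n_batches * batch_size
    return total_correct / n_samples


print(train_batches, eval_batches, last_train_batch)  # 294 74 44
print(round(sample_level_acc(0.9791, n_eval, eval_batches), 4))
```

The conversion is exact under the stated split sizes, because the averaged value equals total_correct / (n_batches × BATCH_SIZE).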

The overfitting problem improved considerably, although some mild overfitting remains. Eval (validation) accuracy peaked at 0.9791 in epoch 76.
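One caveat applies when reading this number. In train_CNN.py, `fit_resample` runs before `train_test_split`, so duplicated copies of the same minority-class image can land in both the training and the eval split. That may make eval accuracy optimistic. The self-contained NumPy sketch below uses toy data to count shared rows under both orderings: oversample then split, and split then oversample only the training part. The helpers `oversample_idx` and `split` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(21)

# Toy data: 20 rows, each with a unique id so duplicates are easy to spot; 16 vs. 4 labels
X = np.arange(20).reshape(20, 1)
y = np.array([0] * 16 + [1] * 4)


def oversample_idx(labels, rng):
    """Row indices that keep every row and add random repeats of minority rows
    until each class matches the majority count."""
    classes, counts = np.unique(labels, return_counts=True)
    parts = []
    for cls, n in zip(classes, counts):
        cls_idx = np.flatnonzero(labels == cls)
        parts.append(np.concatenate([cls_idx, rng.choice(cls_idx, counts.max() - n)]))
    return np.concatenate(parts)


def split(indices, rng, train_frac=0.8):
    """Shuffle indices and cut them into a train part and an eval part."""
    perm = rng.permutation(indices)
    cut = int(train_frac * len(perm))
    return perm[:cut], perm[cut:]


# Order used in train_CNN.py: oversample the whole dataset, then split
tr, ev = split(oversample_idx(y, rng), rng)
shared_before = len(set(X[tr, 0]) & set(X[ev, 0]))

# Alternative order: split the original rows, then oversample the training rows only
tr, ev = split(np.arange(len(y)), rng)
tr = tr[oversample_idx(y[tr], rng)]  # map positions in y[tr] back to original row indices
shared_after = len(set(X[tr, 0]) & set(X[ev, 0]))

print('rows in both train and eval (oversample -> split):', shared_before)
print('rows in both train and eval (split -> oversample):', shared_after)  # always 0
```

In the real script, the second order would mean calling `train_test_split` on the original `images`/`labels` first and then `ros.fit_resample(X_train, y_train)`. The eval split keeps its natural class distribution.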

Third attempt (pretrained ResNet18)

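For the third attempt I used transfer learning with the pretrained ResNet18 from torchvision.models. HAM10000 images differ considerably from the images the model was pretrained on. So I set the pretrained parameter to True but did not freeze the earlier layers, and fine-tuned the whole model instead.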

train_ResNet18.py

The code is largely the same as train_CNN.py. Only the model definition differs, and LEARNING_RATE is lowered from 1e-3 to 1e-4.

```python
import os

import numpy as np
import pandas as pd
import torch
import torch.nn as nn
import torch.optim as optim
import tqdm
from imblearn.over_sampling import RandomOverSampler
from sklearn.model_selection import train_test_split
from torch.utils.data import DataLoader
from torchsummary import summary
from torchvision import transforms
from torchvision.models import resnet18

from my_dataset import my_dataset

if not os.path.exists('./model/'):
    os.mkdir('./model/')

BATCH_SIZE = 128
DATA_PATH = './data/hmnist_28_28_RGB.csv'
EPOCH = 100
LEARNING_RATE = 1e-4  # lower than the 1e-3 used for the CNN, since pretrained weights are fine-tuned

GPU_IS_OK = torch.cuda.is_available()
device = torch.device('cuda' if GPU_IS_OK else 'cpu')
print('SkinCancerMNIST-HAM10000 is being trained using the {}.'.format('GPU' if GPU_IS_OK else 'CPU'))

ros = RandomOverSampler()
transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5]),
])

print('Loading the dataset...')
data = pd.read_csv(DATA_PATH, encoding='utf-8')
labels = data['label']
images = data.drop(columns=['label'])
images, labels = ros.fit_resample(images, labels)
print('The size of dataset: {}'.format(images.shape[0]))

X_train, X_eval, y_train, y_eval = train_test_split(images, labels, train_size=0.8, random_state=21)
y_train = torch.from_numpy(np.array(y_train)).type(torch.LongTensor)
y_eval = torch.from_numpy(np.array(y_eval)).type(torch.LongTensor)
train_data = my_dataset(df=X_train, labels=y_train, transform=transform)
eval_data = my_dataset(df=X_eval, labels=y_eval, transform=transform)
data_loader_train = DataLoader(train_data, batch_size=BATCH_SIZE, shuffle=True)
data_loader_eval = DataLoader(eval_data, batch_size=BATCH_SIZE, shuffle=False)
print('Over.')

# ImageNet-pretrained ResNet18 (torchvision >= 0.13 prefers weights=ResNet18_Weights.DEFAULT
# and warns about pretrained=True)
ResNet18 = resnet18(pretrained=True)
# Replace the 1000-class ImageNet head with a 7-class head; no layers are frozen
ResNet18.fc = nn.Linear(ResNet18.fc.in_features, 7)
ResNet18 = ResNet18.to(device)
print('The model structure and parameters are as follows:')
summary(ResNet18, input_size=(3, 28, 28))

criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(ResNet18.parameters(), lr=LEARNING_RATE, betas=(0.9, 0.999), eps=1e-8)

print('Training...')
for epoch in range(1, EPOCH + 1):
    # train
    train_loss, train_correct, train_total, train_step = 0.0, 0, 0, 0
    ResNet18.train()
    for image, label in tqdm.tqdm(data_loader_train):
        image, label = image.to(device), label.to(device)
        output = ResNet18(image)
        loss = criterion(output, label)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        train_loss += loss.item()
        _, pred = output.max(1)
        train_correct += (pred == label).sum().item()
        train_total += label.size(0)  # actual batch size; the last batch can be smaller
        train_step += 1
    train_loss /= train_step
    train_acc = train_correct / train_total

    # eval: no parameter updates, so skip gradient tracking
    eval_loss, eval_correct, eval_total, eval_step = 0.0, 0, 0, 0
    ResNet18.eval()
    with torch.no_grad():
        for image, label in data_loader_eval:
            image, label = image.to(device), label.to(device)
            output = ResNet18(image)
            loss = criterion(output, label)
            eval_loss += loss.item()
            _, pred = output.max(1)
            eval_correct += (pred == label).sum().item()
            eval_total += label.size(0)
            eval_step += 1
    eval_loss /= eval_step
    eval_acc = eval_correct / eval_total

    # print the loss and accuracy
    print('[{:3d}/{:3d}] Train Loss: {:11.9f} | Train Accuracy: {:6.4f} | Eval Loss: {:11.9f} | Eval Accuracy: {:6.4f}'
          .format(epoch, EPOCH, train_loss, train_acc, eval_loss, eval_acc))
    # save a checkpoint after every epoch
    torch.save(ResNet18.state_dict(), './model/ResNet18_epoch{}.pth'.format(epoch))

print('Training completed.')
```

The training output is as follows:

SkinCancerMNIST-HAM10000 is being trained using the GPU.

Loading the dataset...

The size of dataset: 46935

Over.

The model structure and parameters are as follows:

```
----------------------------------------------------------------
        Layer (type)               Output Shape         Param #
================================================================
            Conv2d-1           [-1, 64, 14, 14]           9,408
       BatchNorm2d-2           [-1, 64, 14, 14]             128
              ReLU-3           [-1, 64, 14, 14]               0
         MaxPool2d-4             [-1, 64, 7, 7]               0
            Conv2d-5             [-1, 64, 7, 7]          36,864
       BatchNorm2d-6             [-1, 64, 7, 7]             128
              ReLU-7             [-1, 64, 7, 7]               0
            Conv2d-8             [-1, 64, 7, 7]          36,864
       BatchNorm2d-9             [-1, 64, 7, 7]             128
             ReLU-10             [-1, 64, 7, 7]               0
       BasicBlock-11             [-1, 64, 7, 7]               0
           Conv2d-12             [-1, 64, 7, 7]          36,864
      BatchNorm2d-13             [-1, 64, 7, 7]             128
             ReLU-14             [-1, 64, 7, 7]               0
           Conv2d-15             [-1, 64, 7, 7]          36,864
      BatchNorm2d-16             [-1, 64, 7, 7]             128
             ReLU-17             [-1, 64, 7, 7]               0
       BasicBlock-18             [-1, 64, 7, 7]               0
           Conv2d-19            [-1, 128, 4, 4]          73,728
      BatchNorm2d-20            [-1, 128, 4, 4]             256
             ReLU-21            [-1, 128, 4, 4]               0
           Conv2d-22            [-1, 128, 4, 4]         147,456
      BatchNorm2d-23            [-1, 128, 4, 4]             256
           Conv2d-24            [-1, 128, 4, 4]           8,192
      BatchNorm2d-25            [-1, 128, 4, 4]             256
             ReLU-26            [-1, 128, 4, 4]               0
       BasicBlock-27            [-1, 128, 4, 4]               0
           Conv2d-28            [-1, 128, 4, 4]         147,456
      BatchNorm2d-29            [-1, 128, 4, 4]             256
             ReLU-30            [-1, 128, 4, 4]               0
           Conv2d-31            [-1, 128, 4, 4]         147,456
      BatchNorm2d-32            [-1, 128, 4, 4]             256
             ReLU-33            [-1, 128, 4, 4]               0
       BasicBlock-34            [-1, 128, 4, 4]               0
           Conv2d-35            [-1, 256, 2, 2]         294,912
      BatchNorm2d-36            [-1, 256, 2, 2]             512
             ReLU-37            [-1, 256, 2, 2]               0
           Conv2d-38            [-1, 256, 2, 2]         589,824
      BatchNorm2d-39            [-1, 256, 2, 2]             512
           Conv2d-40            [-1, 256, 2, 2]          32,768
      BatchNorm2d-41            [-1, 256, 2, 2]             512
             ReLU-42            [-1, 256, 2, 2]               0
       BasicBlock-43            [-1, 256, 2, 2]               0
           Conv2d-44            [-1, 256, 2, 2]         589,824
      BatchNorm2d-45            [-1, 256, 2, 2]             512
             ReLU-46            [-1, 256, 2, 2]               0
           Conv2d-47            [-1, 256, 2, 2]         589,824
      BatchNorm2d-48            [-1, 256, 2, 2]             512
             ReLU-49            [-1, 256, 2, 2]               0
       BasicBlock-50            [-1, 256, 2, 2]               0
           Conv2d-51            [-1, 512, 1, 1]       1,179,648
      BatchNorm2d-52            [-1, 512, 1, 1]           1,024
             ReLU-53            [-1, 512, 1, 1]               0
           Conv2d-54            [-1, 512, 1, 1]       2,359,296
      BatchNorm2d-55            [-1, 512, 1, 1]           1,024
           Conv2d-56            [-1, 512, 1, 1]         131,072
      BatchNorm2d-57            [-1, 512, 1, 1]           1,024
             ReLU-58            [-1, 512, 1, 1]               0
       BasicBlock-59            [-1, 512, 1, 1]               0
           Conv2d-60            [-1, 512, 1, 1]       2,359,296
      BatchNorm2d-61            [-1, 512, 1, 1]           1,024
             ReLU-62            [-1, 512, 1, 1]               0
           Conv2d-63            [-1, 512, 1, 1]       2,359,296
      BatchNorm2d-64            [-1, 512, 1, 1]           1,024
             ReLU-65            [-1, 512, 1, 1]               0
       BasicBlock-66            [-1, 512, 1, 1]               0
AdaptiveAvgPool2d-67            [-1, 512, 1, 1]               0
           Linear-68                    [-1, 7]           3,591
================================================================
Total params: 11,180,103
Trainable params: 11,180,103
Non-trainable params: 0
----------------------------------------------------------------
Input size (MB): 0.01
Forward/backward pass size (MB): 1.09
Params size (MB): 42.65
Estimated Total Size (MB): 43.75
----------------------------------------------------------------
```

Training...

100%|██████████| 294/294 [00:27<00:00, 10.86it/s]

[ 1/100] Train Loss: 0.483066778 | Train Accuracy: 0.8372 | Eval Loss: 0.149059397 | Eval Accuracy: 0.9429

100%|██████████| 294/294 [00:26<00:00, 11.00it/s]

[ 2/100] Train Loss: 0.092949655 | Train Accuracy: 0.9690 | Eval Loss: 0.088184921 | Eval Accuracy: 0.9625

100%|██████████| 294/294 [00:26<00:00, 10.96it/s]

[ 3/100] Train Loss: 0.048947430 | Train Accuracy: 0.9825 | Eval Loss: 0.075887994 | Eval Accuracy: 0.9664

100%|██████████| 294/294 [00:26<00:00, 10.99it/s]

[ 4/100] Train Loss: 0.047908589 | Train Accuracy: 0.9830 | Eval Loss: 0.078713355 | Eval Accuracy: 0.9673

100%|██████████| 294/294 [00:26<00:00, 10.92it/s]

[ 5/100] Train Loss: 0.036838357 | Train Accuracy: 0.9863 | Eval Loss: 0.074998591 | Eval Accuracy: 0.9707

100%|██████████| 294/294 [00:26<00:00, 10.91it/s]

[ 6/100] Train Loss: 0.035881876 | Train Accuracy: 0.9859 | Eval Loss: 0.069636245 | Eval Accuracy: 0.9722

100%|██████████| 294/294 [00:26<00:00, 10.93it/s]

[ 7/100] Train Loss: 0.015354439 | Train Accuracy: 0.9928 | Eval Loss: 0.062996378 | Eval Accuracy: 0.9760

100%|██████████| 294/294 [00:26<00:00, 10.93it/s]

[ 8/100] Train Loss: 0.013381565 | Train Accuracy: 0.9936 | Eval Loss: 0.071176288 | Eval Accuracy: 0.9750

100%|██████████| 294/294 [00:26<00:00, 10.93it/s]

[ 9/100] Train Loss: 0.025142998 | Train Accuracy: 0.9893 | Eval Loss: 0.072122657 | Eval Accuracy: 0.9716

100%|██████████| 294/294 [00:26<00:00, 10.95it/s]

[ 10/100] Train Loss: 0.034815519 | Train Accuracy: 0.9857 | Eval Loss: 0.073852423 | Eval Accuracy: 0.9726

100%|██████████| 294/294 [00:26<00:00, 10.94it/s]

[ 11/100] Train Loss: 0.019632874 | Train Accuracy: 0.9914 | Eval Loss: 0.083632919 | Eval Accuracy: 0.9722

100%|██████████| 294/294 [00:26<00:00, 10.93it/s]

[ 12/100] Train Loss: 0.016087983 | Train Accuracy: 0.9928 | Eval Loss: 0.055468219 | Eval Accuracy: 0.9781

100%|██████████| 294/294 [00:26<00:00, 10.96it/s]

[ 13/100] Train Loss: 0.008893993 | Train Accuracy: 0.9952 | Eval Loss: 0.074550826 | Eval Accuracy: 0.9755

100%|██████████| 294/294 [00:26<00:00, 10.93it/s]

[ 14/100] Train Loss: 0.018936129 | Train Accuracy: 0.9919 | Eval Loss: 0.068807984 | Eval Accuracy: 0.9756

100%|██████████| 294/294 [00:26<00:00, 10.90it/s]

[ 15/100] Train Loss: 0.024428692 | Train Accuracy: 0.9901 | Eval Loss: 0.053713250 | Eval Accuracy: 0.9804

100%|██████████| 294/294 [00:27<00:00, 10.78it/s]

[ 16/100] Train Loss: 0.008889921 | Train Accuracy: 0.9952 | Eval Loss: 0.052808468 | Eval Accuracy: 0.9793

100%|██████████| 294/294 [00:27<00:00, 10.88it/s]

[ 17/100] Train Loss: 0.005512334 | Train Accuracy: 0.9960 | Eval Loss: 0.063315019 | Eval Accuracy: 0.9779

100%|██████████| 294/294 [00:27<00:00, 10.87it/s]

[ 18/100] Train Loss: 0.014360317 | Train Accuracy: 0.9934 | Eval Loss: 0.075652671 | Eval Accuracy: 0.9750

100%|██████████| 294/294 [00:27<00:00, 10.64it/s]

[ 19/100] Train Loss: 0.015307088 | Train Accuracy: 0.9928 | Eval Loss: 0.069055489 | Eval Accuracy: 0.9762

100%|██████████| 294/294 [00:27<00:00, 10.86it/s]

[ 20/100] Train Loss: 0.013735209 | Train Accuracy: 0.9932 | Eval Loss: 0.068658131 | Eval Accuracy: 0.9756

100%|██████████| 294/294 [00:27<00:00, 10.79it/s]

[ 21/100] Train Loss: 0.022175656 | Train Accuracy: 0.9909 | Eval Loss: 0.058698054 | Eval Accuracy: 0.9775

100%|██████████| 294/294 [00:27<00:00, 10.87it/s]

[ 22/100] Train Loss: 0.009645415 | Train Accuracy: 0.9945 | Eval Loss: 0.055439501 | Eval Accuracy: 0.9795

100%|██████████| 294/294 [00:27<00:00, 10.78it/s]

[ 23/100] Train Loss: 0.006096516 | Train Accuracy: 0.9959 | Eval Loss: 0.069358642 | Eval Accuracy: 0.9757

100%|██████████| 294/294 [00:27<00:00, 10.72it/s]

[ 24/100] Train Loss: 0.007846275 | Train Accuracy: 0.9952 | Eval Loss: 0.073728980 | Eval Accuracy: 0.9760

100%|██████████| 294/294 [00:27<00:00, 10.88it/s]

[ 25/100] Train Loss: 0.011561618 | Train Accuracy: 0.9943 | Eval Loss: 0.111599398 | Eval Accuracy: 0.9689

100%|██████████| 294/294 [00:27<00:00, 10.88it/s]

[ 26/100] Train Loss: 0.020321033 | Train Accuracy: 0.9910 | Eval Loss: 0.065664590 | Eval Accuracy: 0.9772

100%|██████████| 294/294 [00:27<00:00, 10.87it/s]

[ 27/100] Train Loss: 0.005860286 | Train Accuracy: 0.9960 | Eval Loss: 0.061213112 | Eval Accuracy: 0.9794

100%|██████████| 294/294 [00:26<00:00, 10.91it/s]

[ 28/100] Train Loss: 0.003614324 | Train Accuracy: 0.9967 | Eval Loss: 0.064343554 | Eval Accuracy: 0.9773

100%|██████████| 294/294 [00:27<00:00, 10.87it/s]

[ 29/100] Train Loss: 0.004452085 | Train Accuracy: 0.9968 | Eval Loss: 0.063765692 | Eval Accuracy: 0.9792

100%|██████████| 294/294 [00:27<00:00, 10.89it/s]

[ 30/100] Train Loss: 0.009551118 | Train Accuracy: 0.9954 | Eval Loss: 0.080716959 | Eval Accuracy: 0.9746

100%|██████████| 294/294 [00:26<00:00, 10.91it/s]

[ 31/100] Train Loss: 0.025784245 | Train Accuracy: 0.9899 | Eval Loss: 0.060676569 | Eval Accuracy: 0.9779

100%|██████████| 294/294 [00:27<00:00, 10.89it/s]

[ 32/100] Train Loss: 0.004817960 | Train Accuracy: 0.9966 | Eval Loss: 0.060224885 | Eval Accuracy: 0.9799

100%|██████████| 294/294 [00:26<00:00, 10.90it/s]

[ 33/100] Train Loss: 0.002682492 | Train Accuracy: 0.9969 | Eval Loss: 0.064649559 | Eval Accuracy: 0.9802

100%|██████████| 294/294 [00:27<00:00, 10.88it/s]

[ 34/100] Train Loss: 0.005738550 | Train Accuracy: 0.9960 | Eval Loss: 0.057705094 | Eval Accuracy: 0.9789

100%|██████████| 294/294 [00:26<00:00, 10.89it/s]

[ 35/100] Train Loss: 0.017126426 | Train Accuracy: 0.9925 | Eval Loss: 0.049599137 | Eval Accuracy: 0.9797

100%|██████████| 294/294 [00:26<00:00, 10.90it/s]

[ 36/100] Train Loss: 0.010584366 | Train Accuracy: 0.9943 | Eval Loss: 0.045030481 | Eval Accuracy: 0.9812

100%|██████████| 294/294 [00:27<00:00, 10.89it/s]

[ 37/100] Train Loss: 0.007059852 | Train Accuracy: 0.9954 | Eval Loss: 0.055950528 | Eval Accuracy: 0.9797

100%|██████████| 294/294 [00:26<00:00, 10.90it/s]

[ 38/100] Train Loss: 0.005999635 | Train Accuracy: 0.9962 | Eval Loss: 0.063413396 | Eval Accuracy: 0.9802

100%|██████████| 294/294 [00:26<00:00, 10.90it/s]

[ 39/100] Train Loss: 0.003029934 | Train Accuracy: 0.9969 | Eval Loss: 0.064003758 | Eval Accuracy: 0.9803

100%|██████████| 294/294 [00:26<00:00, 10.90it/s]

[ 40/100] Train Loss: 0.012572614 | Train Accuracy: 0.9943 | Eval Loss: 0.061227709 | Eval Accuracy: 0.9779

100%|██████████| 294/294 [00:27<00:00, 10.89it/s]

[ 41/100] Train Loss: 0.011098052 | Train Accuracy: 0.9946 | Eval Loss: 0.067416840 | Eval Accuracy: 0.9778

100%|██████████| 294/294 [00:26<00:00, 10.89it/s]

[ 42/100] Train Loss: 0.010188664 | Train Accuracy: 0.9946 | Eval Loss: 0.051657797 | Eval Accuracy: 0.9798

100%|██████████| 294/294 [00:26<00:00, 10.91it/s]

[ 43/100] Train Loss: 0.001425890 | Train Accuracy: 0.9974 | Eval Loss: 0.055644791 | Eval Accuracy: 0.9799

100%|██████████| 294/294 [00:26<00:00, 10.90it/s]

[ 44/100] Train Loss: 0.002125683 | Train Accuracy: 0.9972 | Eval Loss: 0.059153810 | Eval Accuracy: 0.9788

100%|██████████| 294/294 [00:26<00:00, 10.90it/s]

[ 45/100] Train Loss: 0.003516213 | Train Accuracy: 0.9967 | Eval Loss: 0.080817615 | Eval Accuracy: 0.9761

100%|██████████| 294/294 [00:26<00:00, 10.91it/s]

[ 46/100] Train Loss: 0.011380621 | Train Accuracy: 0.9942 | Eval Loss: 0.085672604 | Eval Accuracy: 0.9719

100%|██████████| 294/294 [00:26<00:00, 10.89it/s]

[ 47/100] Train Loss: 0.014756009 | Train Accuracy: 0.9935 | Eval Loss: 0.082796592 | Eval Accuracy: 0.9737

100%|██████████| 294/294 [00:26<00:00, 10.89it/s]

[ 48/100] Train Loss: 0.008038490 | Train Accuracy: 0.9953 | Eval Loss: 0.050740134 | Eval Accuracy: 0.9792

100%|██████████| 294/294 [00:26<00:00, 10.90it/s]

[ 49/100] Train Loss: 0.006135156 | Train Accuracy: 0.9958 | Eval Loss: 0.059173986 | Eval Accuracy: 0.9803

100%|██████████| 294/294 [00:27<00:00, 10.89it/s]

[ 50/100] Train Loss: 0.002710547 | Train Accuracy: 0.9969 | Eval Loss: 0.054478022 | Eval Accuracy: 0.9809

100%|██████████| 294/294 [00:26<00:00, 10.89it/s]

[ 51/100] Train Loss: 0.006189135 | Train Accuracy: 0.9959 | Eval Loss: 0.059071087 | Eval Accuracy: 0.9796

100%|██████████| 294/294 [00:26<00:00, 10.90it/s]

[ 52/100] Train Loss: 0.004576677 | Train Accuracy: 0.9964 | Eval Loss: 0.063089357 | Eval Accuracy: 0.9779

100%|██████████| 294/294 [00:27<00:00, 10.89it/s]

[ 53/100] Train Loss: 0.005469601 | Train Accuracy: 0.9960 | Eval Loss: 0.054615553 | Eval Accuracy: 0.9789

100%|██████████| 294/294 [00:26<00:00, 10.90it/s]

[ 54/100] Train Loss: 0.007072183 | Train Accuracy: 0.9956 | Eval Loss: 0.045009875 | Eval Accuracy: 0.9805

100%|██████████| 294/294 [00:26<00:00, 10.90it/s]

[ 55/100] Train Loss: 0.006000444 | Train Accuracy: 0.9959 | Eval Loss: 0.052580673 | Eval Accuracy: 0.9803

100%|██████████| 294/294 [00:26<00:00, 10.89it/s]

[ 56/100] Train Loss: 0.004500941 | Train Accuracy: 0.9966 | Eval Loss: 0.056490892 | Eval Accuracy: 0.9810

100%|██████████| 294/294 [00:26<00:00, 10.89it/s]

[ 57/100] Train Loss: 0.003007725 | Train Accuracy: 0.9968 | Eval Loss: 0.062376558 | Eval Accuracy: 0.9789

100%|██████████| 294/294 [00:27<00:00, 10.88it/s]

[ 58/100] Train Loss: 0.009814496 | Train Accuracy: 0.9950 | Eval Loss: 0.077214261 | Eval Accuracy: 0.9757

100%|██████████| 294/294 [00:26<00:00, 10.90it/s]

[ 59/100] Train Loss: 0.012665438 | Train Accuracy: 0.9941 | Eval Loss: 0.051051934 | Eval Accuracy: 0.9821

100%|██████████| 294/294 [00:26<00:00, 10.89it/s]

[ 60/100] Train Loss: 0.005280341 | Train Accuracy: 0.9963 | Eval Loss: 0.056703273 | Eval Accuracy: 0.9798

100%|██████████| 294/294 [00:27<00:00, 10.84it/s]

[ 61/100] Train Loss: 0.004021642 | Train Accuracy: 0.9969 | Eval Loss: 0.060731210 | Eval Accuracy: 0.9792

100%|██████████| 294/294 [00:27<00:00, 10.51it/s]

[ 62/100] Train Loss: 0.003342969 | Train Accuracy: 0.9967 | Eval Loss: 0.064883126 | Eval Accuracy: 0.9800

100%|██████████| 294/294 [00:27<00:00, 10.65it/s]

[ 63/100] Train Loss: 0.004120748 | Train Accuracy: 0.9967 | Eval Loss: 0.087833898 | Eval Accuracy: 0.9765

100%|██████████| 294/294 [00:27<00:00, 10.83it/s]

[ 64/100] Train Loss: 0.005887351 | Train Accuracy: 0.9961 | Eval Loss: 0.055562493 | Eval Accuracy: 0.9817

100%|██████████| 294/294 [00:27<00:00, 10.82it/s]

[ 65/100] Train Loss: 0.003840348 | Train Accuracy: 0.9968 | Eval Loss: 0.072857055 | Eval Accuracy: 0.9765

100%|██████████| 294/294 [00:27<00:00, 10.78it/s]

[ 66/100] Train Loss: 0.009082971 | Train Accuracy: 0.9951 | Eval Loss: 0.052342930 | Eval Accuracy: 0.9804

100%|██████████| 294/294 [00:27<00:00, 10.63it/s]

[ 67/100] Train Loss: 0.001961522 | Train Accuracy: 0.9973 | Eval Loss: 0.048264342 | Eval Accuracy: 0.9813

100%|██████████| 294/294 [00:27<00:00, 10.73it/s]

[ 68/100] Train Loss: 0.004715381 | Train Accuracy: 0.9965 | Eval Loss: 0.082956100 | Eval Accuracy: 0.9770

100%|██████████| 294/294 [00:28<00:00, 10.47it/s]

[ 69/100] Train Loss: 0.009326266 | Train Accuracy: 0.9950 | Eval Loss: 0.053404679 | Eval Accuracy: 0.9804

100%|██████████| 294/294 [00:27<00:00, 10.54it/s]

[ 70/100] Train Loss: 0.003306112 | Train Accuracy: 0.9966 | Eval Loss: 0.057575880 | Eval Accuracy: 0.9802

100%|██████████| 294/294 [00:27<00:00, 10.77it/s]

[ 71/100] Train Loss: 0.006009721 | Train Accuracy: 0.9959 | Eval Loss: 0.047661654 | Eval Accuracy: 0.9817

100%|██████████| 294/294 [00:27<00:00, 10.82it/s]

[ 72/100] Train Loss: 0.002376151 | Train Accuracy: 0.9969 | Eval Loss: 0.042407131 | Eval Accuracy: 0.9828

100%|██████████| 294/294 [00:27<00:00, 10.82it/s]

[ 73/100] Train Loss: 0.006238399 | Train Accuracy: 0.9959 | Eval Loss: 0.053447751 | Eval Accuracy: 0.9789

100%|██████████| 294/294 [00:27<00:00, 10.74it/s]

[ 74/100] Train Loss: 0.002654893 | Train Accuracy: 0.9970 | Eval Loss: 0.060148940 | Eval Accuracy: 0.9797

100%|██████████| 294/294 [00:27<00:00, 10.82it/s]

[ 75/100] Train Loss: 0.003384080 | Train Accuracy: 0.9968 | Eval Loss: 0.050685377 | Eval Accuracy: 0.9812

100%|██████████| 294/294 [00:27<00:00, 10.80it/s]

[ 76/100] Train Loss: 0.005460243 | Train Accuracy: 0.9960 | Eval Loss: 0.064673200 | Eval Accuracy: 0.9785

100%|██████████| 294/294 [00:27<00:00, 10.79it/s]

[ 77/100] Train Loss: 0.004674682 | Train Accuracy: 0.9965 | Eval Loss: 0.048508364 | Eval Accuracy: 0.9811

100%|██████████| 294/294 [00:27<00:00, 10.74it/s]

[ 78/100] Train Loss: 0.006081517 | Train Accuracy: 0.9959 | Eval Loss: 0.061809725 | Eval Accuracy: 0.9793

100%|██████████| 294/294 [00:27<00:00, 10.85it/s]

[ 79/100] Train Loss: 0.003128574 | Train Accuracy: 0.9969 | Eval Loss: 0.050054868 | Eval Accuracy: 0.9814

100%|██████████| 294/294 [00:27<00:00, 10.87it/s]

[ 80/100] Train Loss: 0.002726834 | Train Accuracy: 0.9968 | Eval Loss: 0.067210749 | Eval Accuracy: 0.9798

100%|██████████| 294/294 [00:27<00:00, 10.87it/s]

[ 81/100] Train Loss: 0.003748428 | Train Accuracy: 0.9969 | Eval Loss: 0.046661229 | Eval Accuracy: 0.9826

100%|██████████| 294/294 [00:27<00:00, 10.87it/s]

[ 82/100] Train Loss: 0.001142720 | Train Accuracy: 0.9975 | Eval Loss: 0.049936761 | Eval Accuracy: 0.9825

100%|██████████| 294/294 [00:27<00:00, 10.88it/s]

[ 83/100] Train Loss: 0.003226624 | Train Accuracy: 0.9969 | Eval Loss: 0.074197290 | Eval Accuracy: 0.9760

100%|██████████| 294/294 [00:27<00:00, 10.87it/s]

[ 84/100] Train Loss: 0.013108548 | Train Accuracy: 0.9942 | Eval Loss: 0.044454515 | Eval Accuracy: 0.9811

100%|██████████| 294/294 [00:27<00:00, 10.88it/s]

[ 85/100] Train Loss: 0.002160195 | Train Accuracy: 0.9971 | Eval Loss: 0.048371719 | Eval Accuracy: 0.9807

100%|██████████| 294/294 [00:27<00:00, 10.87it/s]

[ 86/100] Train Loss: 0.002619434 | Train Accuracy: 0.9971 | Eval Loss: 0.050486882 | Eval Accuracy: 0.9813

100%|██████████| 294/294 [00:27<00:00, 10.85it/s]

[ 87/100] Train Loss: 0.006864140 | Train Accuracy: 0.9961 | Eval Loss: 0.056692976 | Eval Accuracy: 0.9792

100%|██████████| 294/294 [00:27<00:00, 10.87it/s]

[ 88/100] Train Loss: 0.004875265 | Train Accuracy: 0.9963 | Eval Loss: 0.041186074 | Eval Accuracy: 0.9832

100%|██████████| 294/294 [00:27<00:00, 10.88it/s]

[ 89/100] Train Loss: 0.000652768 | Train Accuracy: 0.9976 | Eval Loss: 0.047177161 | Eval Accuracy: 0.9815

100%|██████████| 294/294 [00:27<00:00, 10.87it/s]

[ 90/100] Train Loss: 0.000488987 | Train Accuracy: 0.9977 | Eval Loss: 0.045264324 | Eval Accuracy: 0.9824

100%|██████████| 294/294 [00:27<00:00, 10.89it/s]

[ 91/100] Train Loss: 0.000341539 | Train Accuracy: 0.9977 | Eval Loss: 0.034529524 | Eval Accuracy: 0.9848

100%|██████████| 294/294 [00:27<00:00, 10.88it/s]

[ 92/100] Train Loss: 0.002224435 | Train Accuracy: 0.9971 | Eval Loss: 0.057096185 | Eval Accuracy: 0.9810

100%|██████████| 294/294 [00:27<00:00, 10.89it/s]

[ 93/100] Train Loss: 0.009456459 | Train Accuracy: 0.9949 | Eval Loss: 0.057099932 | Eval Accuracy: 0.9805

100%|██████████| 294/294 [00:27<00:00, 10.88it/s]

[ 94/100] Train Loss: 0.004690705 | Train Accuracy: 0.9963 | Eval Loss: 0.054541877 | Eval Accuracy: 0.9821

100%|██████████| 294/294 [00:26<00:00, 10.89it/s]

[ 95/100] Train Loss: 0.003725891 | Train Accuracy: 0.9968 | Eval Loss: 0.056962815 | Eval Accuracy: 0.9809

100%|██████████| 294/294 [00:27<00:00, 10.89it/s]

[ 96/100] Train Loss: 0.003008096 | Train Accuracy: 0.9970 | Eval Loss: 0.046588485 | Eval Accuracy: 0.9816

100%|██████████| 294/294 [00:27<00:00, 10.89it/s]

[ 97/100] Train Loss: 0.004697346 | Train Accuracy: 0.9962 | Eval Loss: 0.058122337 | Eval Accuracy: 0.9794

100%|██████████| 294/294 [00:26<00:00, 10.89it/s]

[ 98/100] Train Loss: 0.002204622 | Train Accuracy: 0.9971 | Eval Loss: 0.043696313 | Eval Accuracy: 0.9834

100%|██████████| 294/294 [00:26<00:00, 10.89it/s]

[ 99/100] Train Loss: 0.001267484 | Train Accuracy: 0.9974 | Eval Loss: 0.045243341 | Eval Accuracy: 0.9830

100%|██████████| 294/294 [00:26<00:00, 10.89it/s]

[100/100] Train Loss: 0.011427559 | Train Accuracy: 0.9942 | Eval Loss: 0.051266292 | Eval Accuracy: 0.9795

Training completed.
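The parameter counts and output shapes in the torchsummary table printed at the start of the run can be cross-checked by hand. The following is a minimal sketch, assuming the standard torchvision ResNet18 layout (bias-free convolutions, two BasicBlocks per stage, a 1×1 downsample convolution on the shortcut when the shape changes) and a 3×28×28 input, which is what the `Conv2d-1` count (7·7·3·64 = 9,408) and the 0.01 MB input size imply. The helper names (`conv_params`, `bn_params`, `out_size`, `resnet18_summary`) are hypothetical and exist only for this illustration.

```python
# Minimal sketch: recompute the torchsummary figures for ResNet18 with a
# 7-class head. Assumes the torchvision BasicBlock layout and a 3x28x28 input.

def conv_params(k, c_in, c_out):
    # torchvision's ResNet convolutions use bias=False: only k*k*c_in*c_out weights
    return k * k * c_in * c_out


def bn_params(c):
    # BatchNorm2d learns one weight and one bias per channel;
    # running mean/var are buffers and are not counted as parameters
    return 2 * c


def out_size(n, k, s, p):
    # output size of a conv/pool layer: floor((n + 2p - k) / s) + 1
    return (n + 2 * p - k) // s + 1


def resnet18_summary(num_classes=7, in_ch=3, size=28):
    total = conv_params(7, in_ch, 64) + bn_params(64)  # conv1 + bn1
    size = out_size(size, 7, 2, 3)  # conv1: 7x7, stride 2, padding 3
    size = out_size(size, 3, 2, 1)  # maxpool: 3x3, stride 2, padding 1
    stage_shapes = []
    c_in = 64
    for c_out, stride in [(64, 1), (128, 2), (256, 2), (512, 2)]:
        for block in range(2):  # two BasicBlocks per stage
            s = stride if block == 0 else 1
            total += conv_params(3, c_in, c_out) + bn_params(c_out)
            total += conv_params(3, c_out, c_out) + bn_params(c_out)
            if s != 1 or c_in != c_out:  # 1x1 downsample on the shortcut
                total += conv_params(1, c_in, c_out) + bn_params(c_out)
            size = out_size(size, 3, s, 1)
            c_in = c_out
        stage_shapes.append((c_out, size, size))
    total += 512 * num_classes + num_classes  # Linear(512, num_classes) with bias
    return total, stage_shapes


total, shapes = resnet18_summary()
print(f"{total:,}")                  # 11,180,103
print(shapes)                        # [(64, 7, 7), (128, 4, 4), (256, 2, 2), (512, 1, 1)]
print(round(total * 4 / 2**20, 2))   # 42.65 (float32 parameters, in MB)
```

The backbone alone accounts for 11,176,512 parameters; replacing the 1000-class ImageNet head (513,000 parameters) with a 7-class `Linear` layer (3,591 parameters) gives the 11,180,103 reported. The tiny 28×28 input is also why the last stage is already down to a 1×1 feature map.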

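With the pretrained ResNet18 model, validation accuracy climbed above 0.97 very quickly, reaching 0.9707 by epoch 5. It peaked at 0.9848 in epoch 91, which is 0.0061 higher than the earlier CNN model. In terms of final accuracy ResNet18 is therefore only slightly better than the CNN, but in terms of learning speed it converges much faster than the custom CNN.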
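The epoch figures quoted here (the peak at epoch 91, the first epoch above 0.97) can be extracted from the printed log mechanically rather than by eye. This is a minimal sketch, assuming the log keeps exactly the `[epoch/total] ... | Eval Accuracy: x` line format shown above. The function names and the 0.97 default threshold are hypothetical, and only a few lines of the real log are used as sample input.

```python
import re

# Matches epoch lines in the training log, e.g.
# [ 91/100] Train Loss: ... | Eval Accuracy: 0.9848
EPOCH_RE = re.compile(r"\[\s*(\d+)/\d+\].*Eval Accuracy:\s*([0-9.]+)")


def eval_history(log_text):
    """Return (epoch, eval_accuracy) pairs; tqdm progress lines do not match."""
    return [(int(m.group(1)), float(m.group(2)))
            for m in EPOCH_RE.finditer(log_text)]


def summarize(log_text, threshold=0.97):
    history = eval_history(log_text)
    best_epoch, best_acc = max(history, key=lambda item: item[1])
    # first epoch whose eval accuracy is above the threshold (None if it never is)
    first_above = next((e for e, a in history if a > threshold), None)
    return best_epoch, best_acc, first_above


sample = """\
100%|██████████| 294/294 [00:26<00:00, 10.99it/s]
[ 4/100] Train Loss: 0.047908589 | Train Accuracy: 0.9830 | Eval Loss: 0.078713355 | Eval Accuracy: 0.9673
[ 5/100] Train Loss: 0.036838357 | Train Accuracy: 0.9863 | Eval Loss: 0.074998591 | Eval Accuracy: 0.9707
[ 91/100] Train Loss: 0.000341539 | Train Accuracy: 0.9977 | Eval Loss: 0.034529524 | Eval Accuracy: 0.9848
[100/100] Train Loss: 0.011427559 | Train Accuracy: 0.9942 | Eval Loss: 0.051266292 | Eval Accuracy: 0.9795
"""
print(summarize(sample))  # (91, 0.9848, 5)
```

Because the peak epoch is picked on the same evaluation split whose accuracy is being reported, the final-epoch value (0.9795) is the less optimistic of the two numbers.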
