本文主要是介绍机械臂手眼标定详解,希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

机械臂手眼标定详解

作者: Herman Ye @Auromix

测试环境: Ubuntu20.04 、ROS1 Noetic、Realsense D415

更新日期: 2023/12/11

注1: @Auromix 是一个机器人爱好者开源组织。

注2: 由于笔者水平有限,以下内容可能存在事实性错误。

注3: 本文中直接引用各包官方文档的图片等内容,版权归各官方所有。

准备阶段

为手眼标定准备以下部分:

机械臂

准备好能正常运行的真实机械臂。此处以Realman RM75为例。

相机

准备好需要标定的相机,此处以Realsense D415为例,固定相机的位置,并区分所需的标定方式(眼在手上/眼在手外)。

- eye in hand(眼在手上)

eye-in-hand to compute the static transform between the reference frames of a

robot’s hand effector and that of a tracking system, e.g. the optical

frame of an RGB camera used to track AR markers. In this case, the

camera is mounted on the end-effector, and you place the visual target

so that it is fixed relative to the base of the robot; for example,

you can place an AR marker on a table.

使用“眼在手上”来计算机器人手末端执行器的参考框架与跟踪系统框架之间的静态变换,例如用于跟踪AR标记的RGB相机的光学框架。

在这种情况下,相机安装在末端执行器上,你将视觉目标放置在相对于机器人基座固定的位置。

例如,你可以将标定板放置在桌子上。

- eye on base(眼在手外)

eye-on-base to compute the static transform from a robot’s base to a

tracking system, e.g. the optical frame of a camera standing on a

tripod next to the robot. In this case you can attach a marker, e.g.

an AR marker, to the end-effector of the robot.

使用“眼在手外”来计算机器人基座到跟踪系统的静态变换,例如相机安装在机器人旁边的三脚架上的光学框架。

在这种情况下,你可以将标定板附加到机器人的末端执行器上。

注意:

建议相机内参是经过校准的,如何校准相机内参及相关原理可参考我的ROS相机内参标定详细步骤指南

标定板

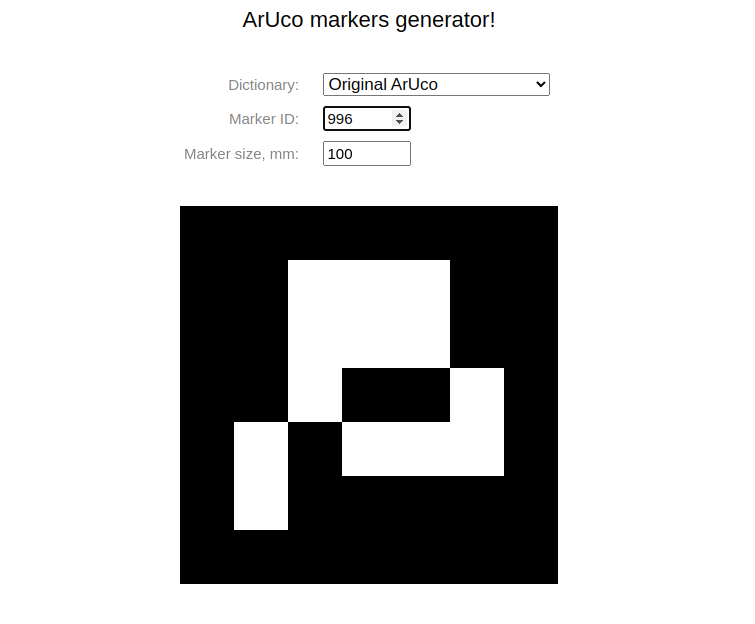

前往ArUco markers generator网站创建标定板,并打印在铝基板或者是A4纸上,需要注意打印时的缩放大小。

| Key | Value |

|---|---|

| Dictionary | Original ArUco |

| Marker ID | 996 |

| Marker size in mm | 100 |

aruco_ros

背景介绍

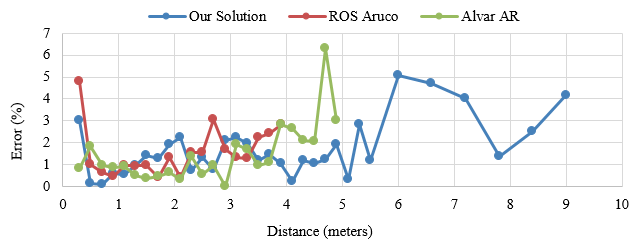

Aruco是一个用于检测和估计姿态的库,用于在增强现实环境中进行标记检测和姿态估计,广泛应用于高频率的AR标记识别与跟踪。

这些标记在几何上呈方形,具有黑色边框和内部网格,用于存储二进制代码中的数字标识符。

在Aruco中,标记的识别依赖于使用字典,该字典定义了一组规则,用于计算标记标识符、执行验证和应用纠错。在使用原始的Aruco字典时,标记的标识符是通过标记的第二列和第四列中的位以自然二进制代码存储的,而其余位则用于奇偶校验。

注意:Aruco的效果在距离标记物0.5m~1m较好

当启动单个节点时,该节点将开始追踪指定的标记,并在相机参考系中发布其姿势信息(发布它相对于相机的位置和方向),参考的命令如下。

roslaunch aruco_ros single.launch markerId:=26 markerSize:=0.08 eye:="right"

如果希望直接查看带有标记识别结果渲染的视频,可以运行:

rosrun image_view image_view image:=/aruco_single/result

Subscribed Topics

| Topic | Description | Default |

|---|---|---|

| topic_camera | Camera image topic | /camera/rgb/image_raw |

| topic_camera_info | Camera info_expects a Camera Info message | /camera/rgb/camera_info |

| topic_marker_register | Register markers in the node | /marker_register |

| topic_marker_remove | Remove markers registered in the node | /marker_remove |

Published Topics

| Topic | Description | Default |

|---|---|---|

| topic_visible | Publishes true when a marker is visible_false otherwise | /visible |

| topic_position | Publishes the camera world position relative to the registered markers as a Point message | /position |

| topic_rotation | Publishes the camera world rotation relative to the registered markers | /rotation |

| topic_pose | Publishes camera rotation and position as Pose message | /pose |

配置aruco_ros

- 下载

# Go to src directory under your workspace

cd ~/my_handeye_cali_ws/src

# Download repo

git clone https://github.com/pal-robotics/aruco_ros.git --branch noetic-devel

- 安装依赖

# Go to your workspace

cd ~/my_handeye_cali_ws

# Install dependencies

rosdep install -iyr --from-paths src

- 编译

# Go to your workspace

cd ~/my_handeye_cali_ws

# Build your workspace

catkin_make

easy_handeye

背景介绍

“easy handeye” 是一个用于手眼标定(Hand-Eye Calibration)的软件工具。

配置easy_handeye

- 下载

# Create src directory under your workspace

mkdir -p ~/my_handeye_cali_ws/src

# Go to src directory under your workspace

cd ~/my_handeye_cali_ws/src

# Download repo

git clone https://github.com/IFL-CAMP/easy_handeye

- 安装依赖

# Go to your workspace

cd ~/my_handeye_cali_ws

# Install dependencies

rosdep install -iyr --from-paths src

- 编译

# Go to your workspace

cd ~/my_handeye_cali_ws

# Build your workspace

catkin_make

- 配置工作空间环境

# Add to environment path

echo "source ~/my_handeye_cali_ws/devel/setup.bash" >> ~/.bashrc

source ~/.bashrc

- 创建校准配置启动文件

# Go to src directory under your workspace

cd ~/my_handeye_cali_ws/src

# Go to easy handeye launch directory

cd easy_handeye/easy_handeye/launch

# Create calibration file according to official example

touch my_cali.launch

# Edit the file

sudo nano my_cali.launch

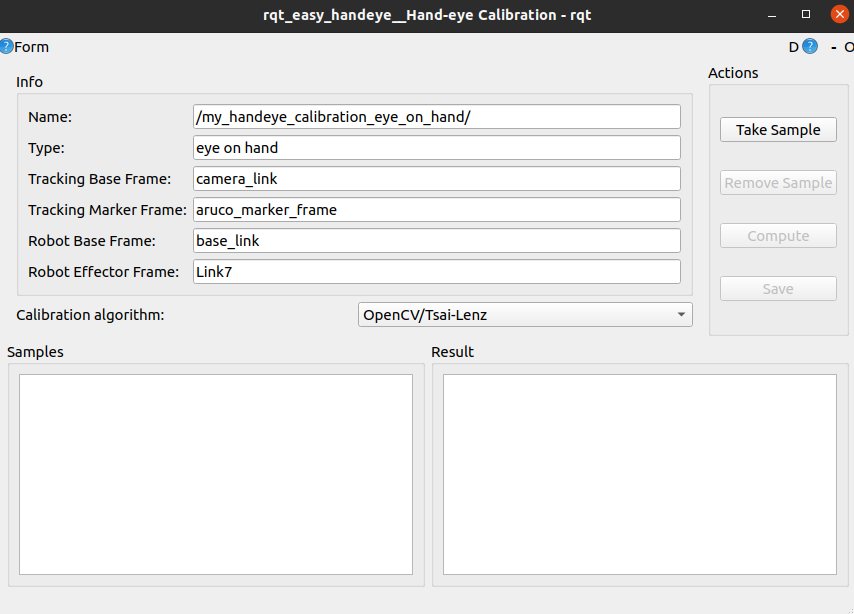

- 填写校准配置启动文件

根据标定类型(眼在手上/眼在手外),选择以下参考配置文件填入其中,并修改TODO项及其他所希望修改的内容。

眼在手上参考示例:

<!-- Description: This launch file is used to calibrate the handeye between tracking system and robot with easy_handeye and aruco_ros -->

<!-- Author: Herman Ye -->

<!-- License: MIT -->

<!-- Environment: Ubuntu 20.04, ROS Noetic, Realsense Camera D415, Realman RM_75 arm -->

<!-- Note: Please change the TODOs to your own settings with Ctrl + F to search TODO--><launch><!-- ARUCO ROS SETTINGS --><arg name="aruco_marker_id" default="996" /> <!-- TODO: Your marker id (Original ArUco) --><arg name="aruco_marker_size" default="0.1" /> <!-- TODO: Your marker side length in m --><arg name="aruco_camera_frame" default="camera_color_optical_frame" /> <!-- TODO: Your rgb camera optical frame --><arg name="aruco_ref_frame" default="camera_link" /> <!-- TODO: Frame in which the marker pose will be refered, empty means (parent frame of optical camera frame) --><arg name="aruco_camera_image_topic" default="/camera/color/image_raw" /> <!-- TODO: Your rgb image raw topic --><arg name="aruco_camera_info_topic" default="/camera/color/camera_info" /> <!-- TODO: Your rgb camera info topic --><arg name="aruco_corner_refinement" default="LINES" /> <!-- Improve the accuracy of corner detection, choose from NONE,LINES, HARRIS, LINES, SUBPIX --><arg name="aruco_marker_frame" default="aruco_marker_frame" /> <!-- Set marker frame name --><!-- EASY HANDEYE SETTINGS --><arg name="handeye_cali_eye_on_hand" default="true" /> <!-- TODO: True for eye on hand, false for eye on base --><arg name="handeye_cali_robot_base_frame" default="base_link" /> <!-- TODO: Your robot base frame --><arg name="handeye_cali_robot_effector_frame" default="Link7" /> <!-- TODO: Your end effector frame --><arg name="handeye_tracking_base_frame" default="camera_link" /> <!-- TODO: Your tracking system base frame --><arg name="handeye_tracking_marker_frame" default="$(arg aruco_marker_frame)" /> <!-- Your tracking marker frame --><arg name="handeye_cali_namespace_prefix" default="my_handeye_calibration" /> <!-- Your easy handeye namespace prefix --><arg name="handeye_cali_use_auto_calibration" default="false" /> <!-- Set false to use manual calibration --><!-- START CAMERA --><!-- TODO: Start your tracking system's ROS driver here, for example, realsense --><include file="$(find realsense2_camera)/launch/rs_camera.launch"> </include><!-- START ARM --><!-- TODO: Start your real arm bringup with moveit here --><include file="$(find rm_75_bringup)/launch/rm_robot.launch"></include><!-- START ARUCO --><!-- Start aruco_ros node to track the marker board --><node pkg="aruco_ros" type="single" name="aruco_single"><remap from="/camera_info" to="$(arg aruco_camera_info_topic)" /><remap from="/image" to="$(arg aruco_camera_image_topic)" /><param name="image_is_rectified" value="True" /><param name="marker_size" value="$(arg aruco_marker_size)" /><param name="marker_id" value="$(arg aruco_marker_id)" /><param name="reference_frame" value="$(arg aruco_ref_frame)" /><param name="camera_frame" value="$(arg aruco_camera_frame)" /><param name="marker_frame" value="$(arg aruco_marker_frame)" /><param name="corner_refinement" value="$(arg aruco_corner_refinement)" /></node><!-- START EASY HANDEYE --><!-- Start easy_handeye node to calibrate the handeye --><include file="$(find easy_handeye)/launch/calibrate.launch"><arg name="namespace_prefix" value="$(arg handeye_cali_namespace_prefix)" /><arg name="eye_on_hand" value="$(arg handeye_cali_eye_on_hand)" /><arg name="tracking_base_frame" value="$(arg handeye_tracking_base_frame)" /><arg name="tracking_marker_frame" value="$(arg handeye_tracking_marker_frame)" /><arg name="robot_base_frame" value="$(arg handeye_cali_robot_base_frame)" /><arg name="robot_effector_frame" value="$(arg handeye_cali_robot_effector_frame)" /><arg name="freehand_robot_movement" value="true" /><arg name="robot_velocity_scaling" value="0.5" /><arg name="robot_acceleration_scaling" value="0.2" /><arg name="translation_delta_meters" default="0.05" /><arg name="rotation_delta_degrees" default="25" /></include><!-- PUBLISH TF [OPTIONAL]--><!-- Publish TF between (link7 and camera_link) after calibration if you want --><!-- <node pkg="tf2_ros" type="static_transform_publisher" name="link1_to_link2_tf_pub" args="x y z qx qy qz qw parent_frame child_frame" /> --><!-- <node pkg="tf2_ros" type="static_transform_publisher" name="link7_to_camera_tf_pub" args="0.08968848705614951 -0.07181737581367453 0.04880358565028789 0.6862856787051447 -0.2077623108887272 0.6660337571805525 0.20553788865888437 Link7 camera_link" /> -->

</launch>

眼在手外官方参考案例

TODO@Herman Ye:这部分暂时没时间整理,后续如果再标眼在手外时,补上这部分

<launch><!-- (start your robot's MoveIt! stack, e.g. include its moveit_planning_execution.launch) --><!-- (start your tracking system' s ROS driver) --><include file="$(find easy_handeye)/launch/calibrate.launch"><arg name="eye_on_hand" value="false"/><arg name="namespace_prefix" value="my_eob_calib"/><!-- fill in the following parameters according to your robot's published tf frames --><arg name="robot_base_frame" value="/base_link"/><arg name="robot_effector_frame" value="/ee_link"/><!-- fill in the following parameters according to your tracking system's published tf frames --><arg name="tracking_base_frame" value="/optical_origin"/><arg name="tracking_marker_frame" value="/optical_target"/></include>

</launch>

了解校准技巧

- 使得每个pose之间的旋转变化足够大

- 使得每个pose之间的平移变化足够小

- 多采样 pose使得校准值收敛

- 使得目标物体尽可能地贴近相机(但应当在相机可行的工作空间内)

- 尽量使得标定图案不要正对着相机,而是要有一些偏转,避免它对称,由于其对称性,位姿估计可能不明确,并且输出可能变得不稳定

- 校准相机的内参(如果内参不够准确的话,但通常出厂时已经校准)

- 校准机械臂自身使得它足够准确

校准阶段

开始校准

当准备好时开始校准,首先启动刚才配置好的校准启动文件:

roslaunch easy_handeye my_cali.launch

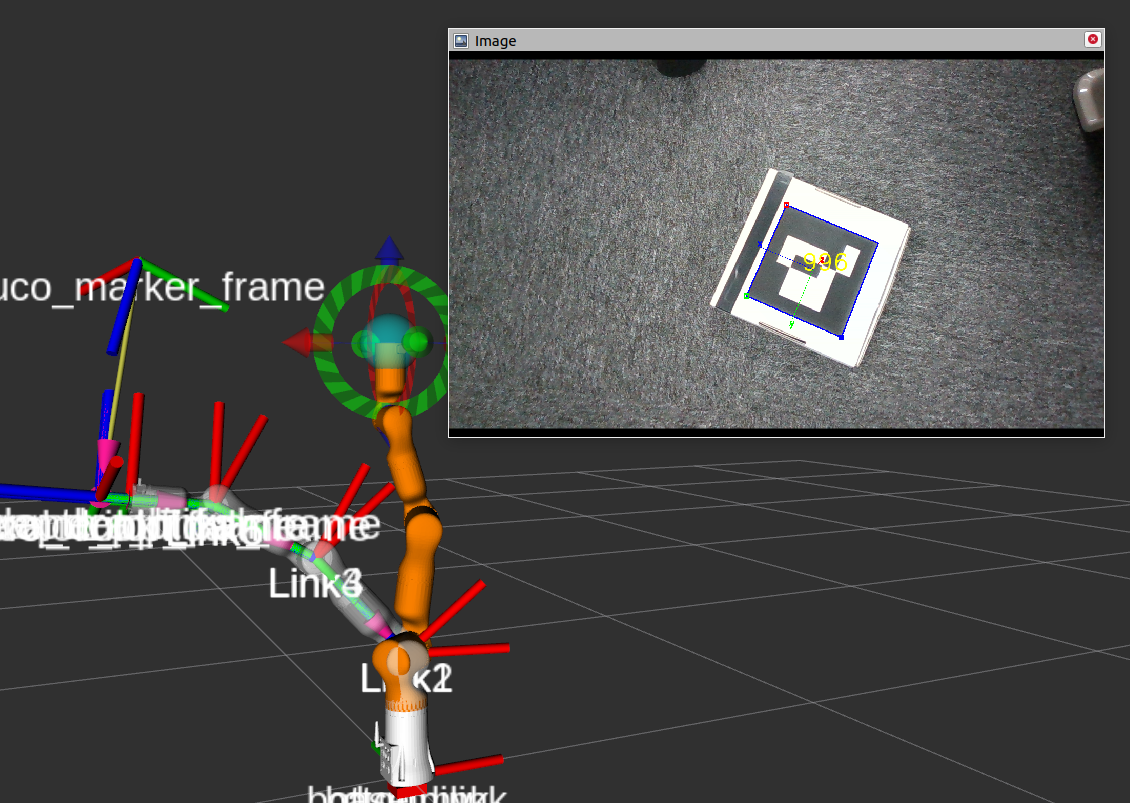

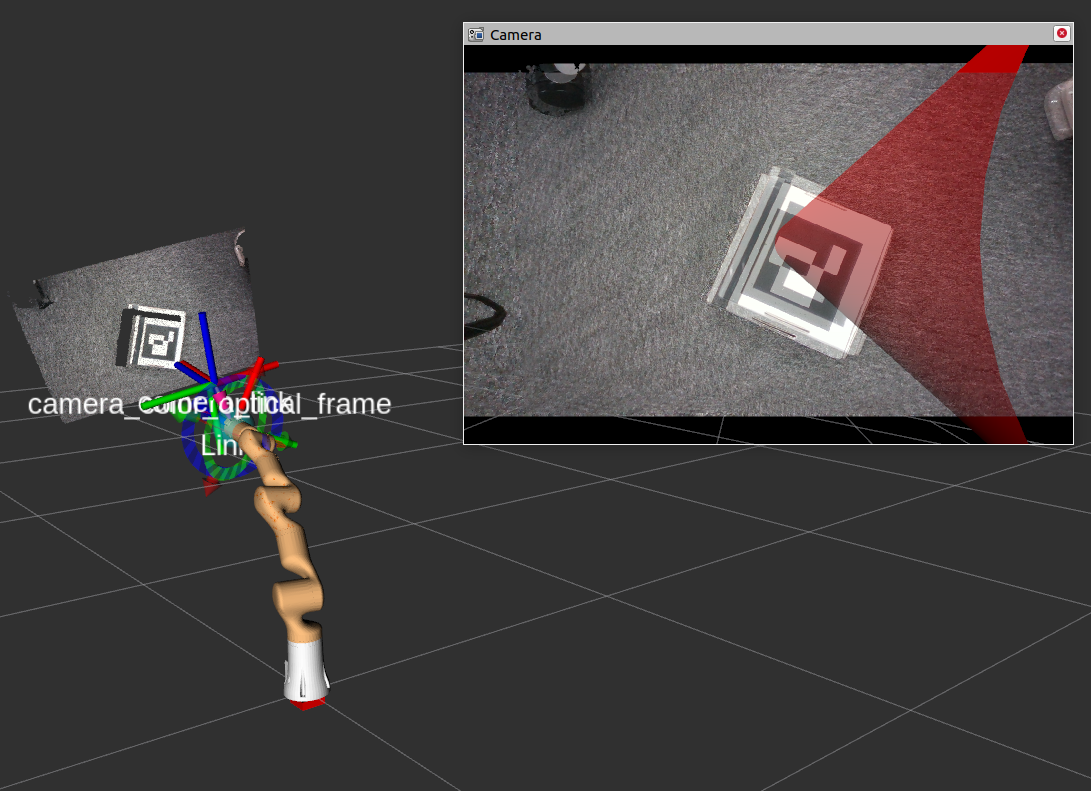

在RViz中添加Image,设置话题为/aruco_single/result来观察识别到标定板的结果

也可以添加TF组件来查看坐标变换的效果。

根据习得的标定技巧,调整机械臂末端位姿,不断采样(Take Sample)和计算(Compute),直至结果收敛

发布TF结果

最终结果将在右下角Result处,将其以静态TF发布即可。

<!-- PUBLISH TF [OPTIONAL]-->

<!-- Publish TF between (link7 and camera_link) after calibration if you want -->

<!-- <node pkg="tf2_ros" type="static_transform_publisher" name="link1_to_link2_tf_pub" args="x y z qx qy qz qw parent_frame child_frame" /> -->

<!-- <node pkg="tf2_ros" type="static_transform_publisher" name="link7_to_camera_tf_pub" args="0.08968848705614951 -0.07181737581367453 0.04880358565028789 0.6862856787051447 -0.2077623108887272 0.6660337571805525 0.20553788865888437 Link7 camera_link" /> -->

检验校准效果

通过采样多帧标定板在机器人基座坐标系下的位姿,求平均数、方差或范数等

TODO@Herman Ye:后续会写一个检验校准效果的简易benchmark放在gihub

参考链接

https://github.com/IFL-CAMP/easy_handeye

https://github.com/pal-robotics/aruco_ros

https://github.com/tentone/aruco

其他参考案例

ur5_kinect_handeyecalibration

<launch><arg name="namespace_prefix" default="ur5_kinect_handeyecalibration" /><arg name="robot_ip" doc="The IP address of the UR5 robot" /><arg name="marker_size" doc="Size of the ArUco marker used, in meters" /><arg name="marker_id" doc="The ID of the ArUco marker used" /><!-- start the Kinect --><include file="$(find freenect_launch)/launch/freenect.launch" ><arg name="depth_registration" value="true" /></include><!-- start ArUco --><node name="aruco_tracker" pkg="aruco_ros" type="single"><remap from="/camera_info" to="/camera/rgb" /><remap from="/image" to="/camera/rgb/image_rect_color" /><param name="image_is_rectified" value="true"/><param name="marker_size" value="$(arg marker_size)"/><param name="marker_id" value="$(arg marker_id)"/><param name="reference_frame" value="camera_link"/><param name="camera_frame" value="camera_rgb_optical_frame"/><param name="marker_frame" value="camera_marker" /></node><!-- start the robot --><include file="$(find ur_bringup)/launch/ur5_bringup.launch"><arg name="limited" value="true" /><arg name="robot_ip" value="192.168.0.21" /></include><include file="$(find ur5_moveit_config)/launch/ur5_moveit_planning_execution.launch"><arg name="limited" value="true" /></include><!-- start easy_handeye --><include file="$(find easy_handeye)/launch/calibrate.launch" ><arg name="namespace_prefix" value="$(arg namespace_prefix)" /><arg name="eye_on_hand" value="false" /><arg name="tracking_base_frame" value="camera_link" /><arg name="tracking_marker_frame" value="camera_marker" /><arg name="robot_base_frame" value="base_link" /><arg name="robot_effector_frame" value="wrist_3_link" /><arg name="freehand_robot_movement" value="false" /><arg name="robot_velocity_scaling" value="0.5" /><arg name="robot_acceleration_scaling" value="0.2" /></include></launch>

iiwa_kinect_xtion

<launch><arg name="namespace_prefix" default="iiwa_kinect_xtion" /><arg name="marker_size" doc="Size of the ArUco marker used, in meters" /><arg name="marker_id" doc="The ID of the ArUco marker used" /><!-- start camera driver --><!-- if you use OpenNI --><!-- start the Kinect_v1/Xtion with openNI --><include file="$(find openni_launch)/launch/openni.launch" ><arg name="depth_registration" value="true" /></include><!-- if you use libfreenect --><!-- start the Kinect_v1 with libfreenect --><!-- <include file="$(find freenect_launch)/launch/freenect.launch" ><arg name="depth_registration" value="true" /></include> --><!-- start the Kinect_v2 with libfreenect --><!-- <include file="$(find freenect2_launch)/launch/freenect2.launch" ><arg name="depth_registration" value="true" /></include> --><!-- start ArUco --><node name="aruco_tracker" pkg="aruco_ros" type="single"><remap from="/camera_info" to="/camera/rgb/camera_info"/><remap from="/image" to="/camera/rgb/image_raw"/><param name="image_is_rectified" value="true"/><param name="marker_size" value="$(arg marker_size)"/><param name="marker_id" value="$(arg marker_id)"/><param name="reference_frame" value="camera_link"/><param name="camera_frame" value="camera_rgb_optical_frame"/><param name="marker_frame" value="camera_marker"/></node><!-- start the robot --><include file="$(find iiwa_moveit)/launch/moveit_planning_execution.launch"><arg name="sim" value="false" /><arg name="rviz" value="false" /></include><!-- start easy_handeye --><include file="$(find easy_handeye)/launch/calibrate.launch" ><arg name="namespace_prefix" value="$(arg namespace_prefix)" /><arg name="eye_on_hand" value="false" /><arg name="start_rviz" value="true"/><arg name="move_group_namespace" value="/iiwa" /><arg name="move_group" value="manipulator" /><!-- need to copy iiwa_stack_config.rviz to launch/ folder --><arg name="rviz_config_file" value="$(find easy_handeye)/launch/iiwa_stack_config.rviz" /><arg name="tracking_base_frame" value="camera_link" /><arg name="tracking_marker_frame" value="camera_marker" /><arg name="robot_base_frame" value="iiwa_link_0" /><arg name="robot_effector_frame" value="iiwa_link_ee" /><arg name="freehand_robot_movement" value="false" /><arg name="robot_velocity_scaling" value="0.5" /><arg name="robot_acceleration_scaling" value="0.2" /></include></launch>

ur5e_realsense_handeyecalibration

<launch><arg name="namespace_prefix" default="ur5_kinect_handeyecalibration" /><arg name="eye_on_hand" default="false" /><arg name="camera_namespace" default="/camera/color" /> <arg name="robot_ip" doc="The IP address of the UR5 robot" default="101.101.101.12" /><arg name="marker_size" doc="Size of the ArUco marker used, in meters" default="0.016" /> <arg name="square_size" doc="The ID of the ArUco marker used" default="0.024" /> <arg name="square_number_x" default="7" /><arg name="square_number_y" default="9" /><!-- start the realsense --><include file="$(find realsense2_camera)/launch/rs_rgbd.launch" > <arg name="color_height" value="1080" /> <arg name="color_width" value="1920" /><arg name="color_fps" value="30" /></include> <!-- start ArUco --><node name="easy_aruco_node" pkg="easy_aruco" type="easy_aruco_node"> <param name="object_type" value="charuco_board" /> <param name="camera_namespace" value="$(arg camera_namespace)" /> <param name="dictionary" value="DICT_6X6_250" /> <param name="camera_frame" value="camera_color_optical_frame" /> <param name="reference_frame" value="camera_color_optical_frame" /> <param name="marker_size" value="$(arg marker_size)" /> <param name="square_size" value="$(arg square_size)" /><param name="square_number_x" value="$(arg square_number_x)" /> <param name="square_number_y" value="$(arg square_number_y)" /> </node><!-- start the robot (using https://github.com/UniversalRobots/Universal_Robots_ROS_Driver) --><include file="$(find ur_robot_driver)/launch/ur5e_bringup.launch"> <arg name="robot_ip" value="$(arg robot_ip)" />

<!-- <arg name="kinematics_config" value="path/to/ur5e_calibration.yaml" />--></include> <include file="$(find ur5e_moveit_config)/launch/ur5e_moveit_planning_execution.launch" /> <include file="$(find ur5e_moveit_config)/launch/moveit_rviz.launch" > <arg name="rviz_config" value="$(find ur5e_moveit_config)/launch/moveit.rviz" /></include> <!-- start easy_handeye --><include file="$(find easy_handeye)/launch/calibrate.launch" > <arg name="namespace_prefix" value="$(arg namespace_prefix)" /> <arg name="eye_on_hand" value="$(arg eye_on_hand)" /> <arg name="tracking_base_frame" value="camera_color_optical_frame" /> <arg name="tracking_marker_frame" value="board" /> <arg name="robot_base_frame" value="base_link" /> <arg name="robot_effector_frame" value="tool0" /><arg name="freehand_robot_movement" value="true" /> <arg name="robot_velocity_scaling" value="0.5" /> <arg name="robot_acceleration_scaling" value="0.2" /><arg name="translation_delta_meters" default="0.05" /> <arg name="rotation_delta_degrees" default="25" /></include> </launch>

panda realsense

<?xml version="1.0" ?>

<launch><arg name="namespace_prefix" default="panda_eob_calib"/><!-- (start your robot's MoveIt! stack, e.g. include its moveit_planning_execution.launch) --> <include file="$(find panda_moveit_config)/launch/franka_control.launch"><arg name="robot_ip" value="172.16.0.2"/> <!-- set your robot ip --><arg name="load_gripper" value="true"/></include> <!-- (start your tracking system's ROS driver) --><include file="$(find realsense2_camera)/launch/rs_camera.launch"> </include> <!-- fill in the following parameters according to your tracking system --><arg name="markerId" default="55"/> <!-- set your marker id --><arg name="markerSize" default="0.07"/> <!-- in m --><arg name="eye" default="left"/><arg name="marker_frame" default="aruco_marker_frame"/> <arg name="ref_frame" default=""/> <!-- leave empty and the pose will be published wrt param parent_name --><arg name="corner_refinement" default="LINES" /> <!-- NONE, HARRIS, LINES, SUBPIX --> <arg name="camera_frame" default="camera_color_frame" /><arg name="camera_image_topic" default="/camera/color/image_raw" /><arg name="camera_info_topic" default="/camera/color/camera_info" /> <node pkg="aruco_ros" type="single" name="aruco_single"><remap to="$(arg camera_info_topic)" from="/camera_info" /><remap to="$(arg camera_image_topic)" from="/image" /><param name="image_is_rectified" value="True"/><param name="marker_size" value="$(arg markerSize)"/><param name="marker_id" value="$(arg markerId)"/><param name="reference_frame" value="$(arg ref_frame)"/> <!-- frame in which the marker pose will be refered --><param name="camera_frame" value="$(arg camera_frame)"/><param name="marker_frame" value="$(arg marker_frame)" /><param name="corner_refinement" value="$(arg corner_refinement)" /></node><!-- (start hand-eye-calibration) --><include file="$(find easy_handeye)/launch/calibrate.launch"><arg name="eye_on_hand" value="false"/><arg name="namespace_prefix" value="$(arg namespace_prefix)"/><arg name="move_group" value="panda_manipulator" doc="the name of move_group for the automatic robot motion with MoveIt!" /><arg name="freehand_robot_movement" value="false"/><!-- fill in the following parameters according to your robot's published tf frames --><arg name="robot_base_frame" value="panda_link0"/><arg name="robot_effector_frame" value="panda_hand_tcp"/><!-- fill in the following parameters according to your tracking system's published tf frames --><arg name="tracking_base_frame" value="camera_link"/><arg name="tracking_marker_frame" value="aruco_marker_frame"/></include><!-- (publish tf after the calibration) --><!-- roslaunch easy_handeye publish.launch eye_on_hand:=false namespace_prefix:=$(arg namespace_prefix) --></launch>这篇关于机械臂手眼标定详解的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!