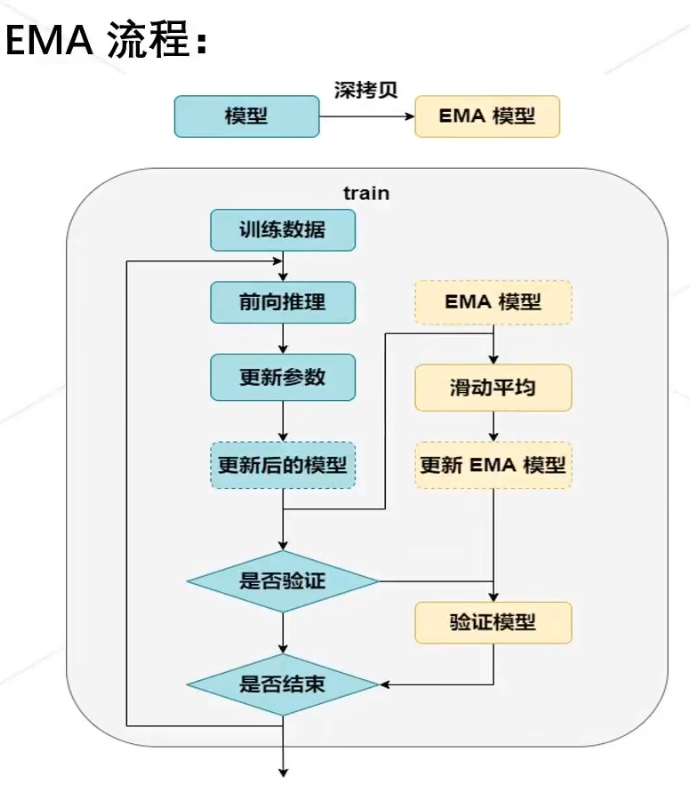

This post introduces EMA (an exponential moving average of the model weights) for training and fine-tuning.

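Put simply, EMA keeps an average of the weights from earlier in training. Strictly speaking, it is not a plain average over the last few epochs. It is an exponentially weighted average that is updated after every optimizer step: the most recent weights count most, and older weights fade out geometrically. This can mitigate catastrophic forgetting during fine-tuning. Driven by the new data, the raw model weights gradually drift away from the data distribution learned in earlier training and lose previously learned prior knowledge. The averaged weights change more slowly, so they tend to stay closer to that earlier solution.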
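The following is a minimal, framework-free sketch of the update rule used in `EMA.update()` below, applied to a single scalar instead of a tensor. The helper names `ema_sequence` and `initial_weight_share` are hypothetical and exist only for illustration. If you unroll the rule, the value used to seed the average (the weights copied by `register()`) still holds a share of `decay ** t` after `t` updates. This helps explain why the averaged weights move away from the starting point more slowly than the raw weights.

```python
def ema_sequence(values, decay):
    """Running EMA of a scalar sequence.

    The first value seeds the average (like register() copying the initial
    weights); each later value is folded in with the same rule as update():
    new = (1 - decay) * current + decay * old.
    """
    shadow = values[0]
    history = [shadow]
    for v in values[1:]:
        shadow = (1.0 - decay) * v + decay * shadow
        history.append(shadow)
    return history


def initial_weight_share(decay, steps):
    """Share of the average still held by the seed value after `steps` updates."""
    return decay ** steps


print(ema_sequence([0.0, 1.0, 1.0], 0.5))
print(round(initial_weight_share(0.999, 1000), 3))
```

For example, `ema_sequence([0.0, 1.0, 1.0], 0.5)` returns `[0.0, 0.5, 0.75]`. `initial_weight_share(0.999, 1000)` is about 0.368. With the decay of 0.999 used in this post, the starting weights therefore still make up roughly a third of the average after 1,000 updates.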

```python
class EMA:
    def __init__(self, model, decay):
        self.model = model
        self.decay = decay  # decay rate, e.g. 0.999
        self.shadow = {}    # EMA (averaged) weights
        self.backup = {}    # raw training weights, saved while the EMA weights are applied

    def register(self):
        # Initialize the shadow weights with a deep copy of the current weights.
        # Only parameters with requires_grad=True are tracked; buffers such as
        # BatchNorm running statistics are not averaged.
        for name, param in self.model.named_parameters():
            if param.requires_grad:
                self.shadow[name] = param.data.clone()

    def update(self):
        # Call after every optimizer.step():
        # shadow = (1 - decay) * param + decay * shadow
        for name, param in self.model.named_parameters():
            if param.requires_grad:
                assert name in self.shadow
                new_average = (1.0 - self.decay) * param.data + self.decay * self.shadow[name]
                self.shadow[name] = new_average.clone()

    def apply_shadow(self):
        # Before evaluation: keep the training weights aside and load the EMA weights
        for name, param in self.model.named_parameters():
            if param.requires_grad:
                assert name in self.shadow
                self.backup[name] = param.data
                param.data = self.shadow[name]

    def restore(self):
        # After evaluation: put the training weights back
        for name, param in self.model.named_parameters():
            if param.requires_grad:
                assert name in self.backup
                param.data = self.backup[name]
        self.backup = {}
```
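With a fixed decay such as 0.999, the average moves slowly from the very first step. Some implementations ramp the decay up at the start instead. For instance, TensorFlow's `tf.train.ExponentialMovingAverage` uses `min(decay, (1 + num_updates) / (10 + num_updates))` when a `num_updates` step count is passed. Below is a minimal sketch of that rule. It assumes you keep your own update counter, and the function name `warmup_decay` is hypothetical and not part of the class above.

```python
def warmup_decay(base_decay, num_updates):
    """Decay to use at update number `num_updates` (0-based) under the
    warm-up rule min(base_decay, (1 + n) / (10 + n))."""
    return min(base_decay, (1.0 + num_updates) / (10.0 + num_updates))


for n in (0, 100, 10_000):
    print(n, warmup_decay(0.999, n))
```

To use it, `update()` would compute `d = warmup_decay(self.decay, step)` from a counter it increments on each call (a hypothetical addition) and use `d` in place of `self.decay`. With a base decay of 0.999, the ramp reaches the cap after roughly 9,000 updates. For fine-tuning this involves a trade-off. With warm-up, the early average quickly follows the new weights. A constant high decay instead keeps the average close to the registered starting weights.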

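```python
# Initialization (`model` and `optimizer` are assumed to be defined elsewhere)
ema = EMA(model, 0.999)
ema.register()

# During training: after the optimizer updates the parameters, update the shadow weights
def train():
    # ... forward pass, loss.backward() ...
    optimizer.step()
    ema.update()

# Before evaluation, apply the shadow weights; after evaluation, restore the original parameters
def evaluate():
    ema.apply_shadow()
    # evaluate
    ema.restore()
```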
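If evaluation raises an exception between `apply_shadow()` and `restore()`, the model keeps the EMA weights, and training would then continue from them. One way to guard against this is a context manager that always calls `restore()`. The helper `ema_weights` below is a hypothetical sketch and not part of the original code.

```python
from contextlib import contextmanager


@contextmanager
def ema_weights(ema):
    """Temporarily swap in the EMA (shadow) weights.

    `ema` is assumed to expose apply_shadow() and restore(), like the EMA
    class above. restore() runs in `finally`, so the training weights come
    back even if the code inside the `with` block raises.
    """
    ema.apply_shadow()
    try:
        yield ema
    finally:
        ema.restore()
```

`evaluate()` could then be written as `with ema_weights(ema): ...`, with the evaluation loop inside the `with` block.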
