本文主要是介绍fifth work,希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

基于二进制部署kubernetes v1.27.x高可用环境

服务器划分

| 负载均衡服务器 | 部署服务器 | etcd | master | node | harbor |

|---|---|---|---|---|---|

| 10.0.0.200 | 10.0.0.200 | 10.0.0.203 | 10.0.0.201 | 10.0.0.205 | 10.0.0.207 |

| 10.0.0.204 | 10.0.0.202 | 10.0.0.206 |

创建负载均衡

10.0.0.200主机上安装Keepalived和haproxy

apt install -y keepalived haproxy

#Keepalived配置文件

root@k8s-deploy:~# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalivedglobal_defs {notification_email {acassen}notification_email_from Alexandre.Cassen@firewall.locsmtp_server 192.168.200.1smtp_connect_timeout 30router_id LVS_DEVEL

}vrrp_instance VI_1 {state MASTERinterface eth0garp_master_delay 10smtp_alertvirtual_router_id 55priority 100advert_int 1authentication {auth_type PASSauth_pass 123456}virtual_ipaddress {10.0.0.230 dev eth0 label eth0:010.0.0.231 dev eth0 label eth0:110.0.0.232 dev eth0 label eth0:2

}}

#重启Keepalived

systemctl restart keepalived.service

#设置开机启动

systemctl enable keepalived.service#设置 net.ipv4.ip_nonlocal_bind = 1 (服务器上不存在的ip,也可以绑定端口)

/etc/sysctl.conf 中添加 net.ipv4.ip_nonlocal_bind = 1

sysctl -p #使之生效

#编辑配置文件/etc/haproxy/haproxy.cfg 添加

listen k82-api-6443bind 10.0.0.230:6443mode tcpserver apiserver1 10.0.0.201:6443 check inter 3 fall 3 rise 3server apiserver2 10.0.0.202:6443 check inter 3 fall 3 rise 3

#重启haproxy并设置开机自动启动

systemctl restart haproxy.service

systemctl enable haproxy.service

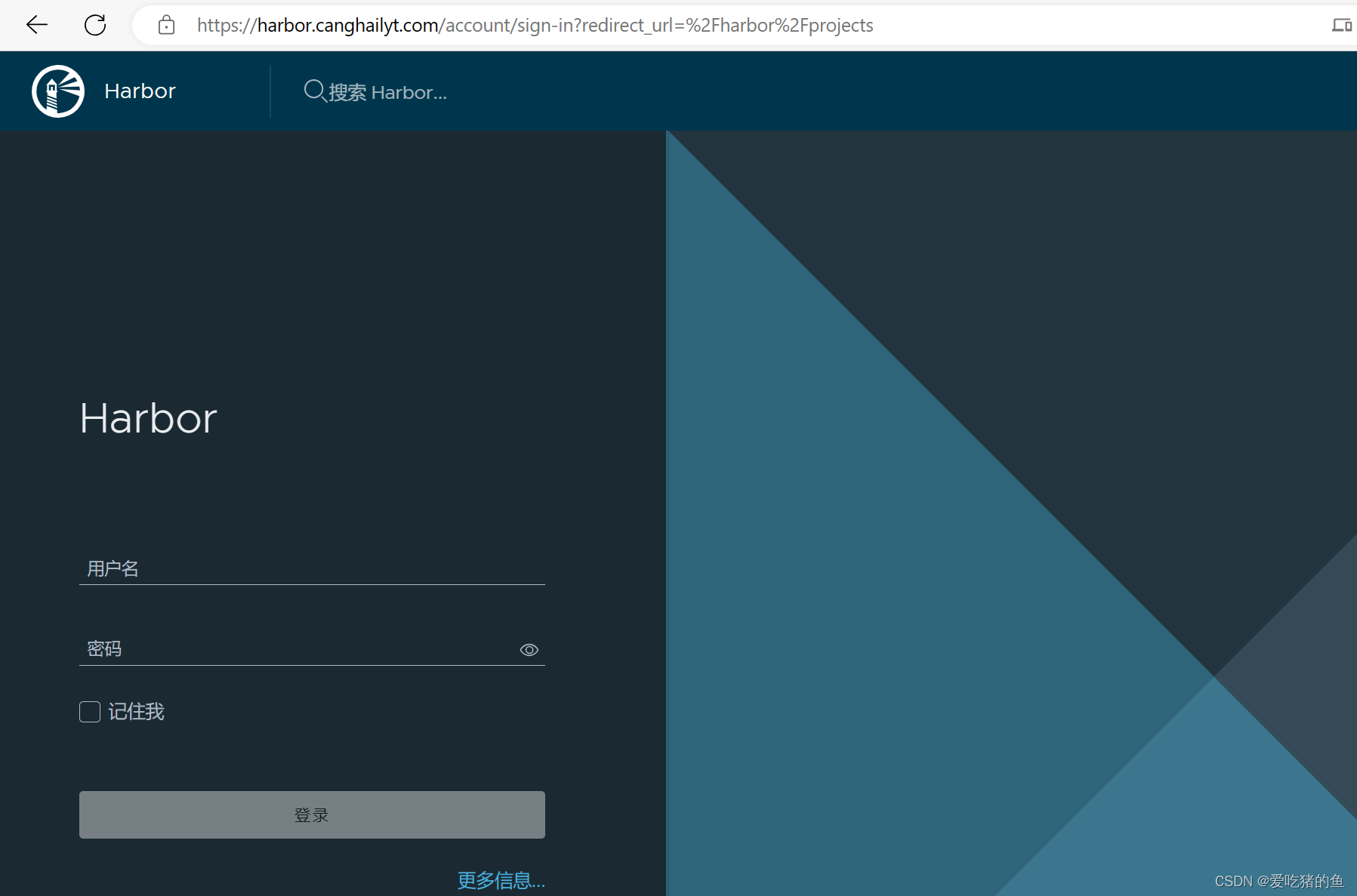

创建harbor服务器

在10.0.0.207安装harbor

#安装docker

tar xvf runtime-docker24.0.2-containerd1.6.21-binary-install.tar.gz

bash runtime-install.sh docker#安装harbor

tar xvf harbor-offline-installer-v2.8.2.tgz

mkdir /opt/apps/

cp -r harbor /opt/apps/

/opt/apps/harbor/

cd harbor

mkdir ssl #创建证书目录

cp harbor.yml.tmpl harbor.yml

#编辑配置文件vim harbor.yml 修改如下:

hostname: harbor.canghailyt.com

certificate: /opt/apps/harbor/ssl/harbor.canghailyt.com.pem

private_key: /opt/apps/harbor/ssl/harbor.canghailyt.com.key

harbor_admin_password: 12345678

#安装harbor

#将证书拷贝至/opt/apps/harbor/ssl目录

./install.sh --with-trivy

测试访问:

kubeasz部署高可用kubernetes

免秘钥登录配置

在部署节点服务器10.0.0.200上

#安装ansible

apt update && apt install -y ansible

#生成秘钥

ssh-keygen -t rsa-sha2-512 -b 4096

#安装sshpass命令用于同步公钥到各k8s服务器(会自动填充密码)

apt install sshpass#编辑秘钥同步脚本vim key_scp.sh#!/bin/bash

#目标主机列表

IP="

10.0.0.201

10.0.0.202

10.0.0.203

10.0.0.204

10.0.0.205

10.0.0.206

10.0.0.207

"

REMOTE_PORT="22"

REMOTE_USER="root"

REMOTE_PASS="123456"

for REMOTE_HOST in ${IP};do

REMOTE_CMD="echo ${REMOTE_HOST} is successfully!"

#添加目标远程主机的公钥

ssh-keyscan -p "${REMOTE_PORT}" "${REMOTE_HOST}" >> ~/.ssh/known_hosts

#通过sshpass配置免秘钥登录、并创建python3软连接

sshpass -p "${REMOTE_PASS}" ssh-copy-id "${REMOTE_USER}@${REMOTE_HOST}"

ssh ${REMOTE_HOST} ln -sv /usr/bin/python3 /usr/bin/python

echo ${REMOTE_HOST} 免秘钥配置完成!

done

#执行秘钥同步脚本

bash key_scp.sh

#测试登录

root@k8s-deploy:~# ssh root@10.0.0.203

Welcome to Ubuntu 22.04.2 LTS (GNU/Linux 5.15.0-60-generic x86_64)* Documentation: https://help.ubuntu.com* Management: https://landscape.canonical.com* Support: https://ubuntu.com/advantageThis system has been minimized by removing packages and content that are

not required on a system that users do not log into.To restore this content, you can run the 'unminimize' command.

Failed to connect to https://changelogs.ubuntu.com/meta-release-lts. Check your Internet connection or proxy settingsLast login: Wed Aug 9 11:32:51 2023 from 10.0.0.1

安装docker

在部署节点服务器10.0.0.200上

tar xvf runtime-docker24.0.2-containerd1.6.21-binary-install.tar.gz

bash runtime-install.sh docker

下载kubeasz项目及组件

在部署节点服务器10.0.0.200上

apt install -y git

#设置环境变量

export release=3.6.1

#下载工具脚本

wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown#下载kubeasz代码,二进制,默认容器等

#下载的哪些东西会拷贝到/etc/kubeasz这个目录

chmod a+x ezdown

./ezdown -D

生成并自定义hosts文件

在部署节点服务器10.0.0.200上

cd /etc/kubeasz

root@k8s-deploy:/etc/kubeasz# ./ezctl new k8s-cluster1

2023-08-09 17:37:20 DEBUG generate custom cluster files in /etc/kubeasz/clusters/k8s-cluster1

2023-08-09 17:37:20 DEBUG set versions

2023-08-09 17:37:20 DEBUG cluster k8s-cluster1: files successfully created.

2023-08-09 17:37:20 INFO next steps 1: to config '/etc/kubeasz/clusters/k8s-cluster1/hosts'

2023-08-09 17:37:20 INFO next steps 2: to config '/etc/kubeasz/clusters/k8s-cluster1/config.yml'

编辑ansible hosts文件

在部署节点服务器10.0.0.200上

指定etcd节点、master节点、node节点、VIP、运行时、网络组建类型、service IP与pod IP范围等配置信息。

cd /etc/kubeasz/clusters/k8s-cluster1

vim hosts

# 'etcd' cluster should have odd member(s) (1,3,5,...) etcd服务器地址

[etcd]

10.0.0.203

10.0.0.204# master node(s), set unique 'k8s_nodename' for each node

# CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',

# and must start and end with an alphanumeric character

#master服务器地址

[kube_master]

10.0.0.201 k8s_nodename='master1'

10.0.0.202 k8s_nodename='master2'# work node(s), set unique 'k8s_nodename' for each node

# CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',

# and must start and end with an alphanumeric character

#node节点服务器地址

[kube_node]

10.0.0.205 k8s_nodename='node1'

10.0.0.206 k8s_nodename='node2'# [optional] harbor server, a private docker registry

# 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one

#harbor服务器地址

[harbor]

#192.168.1.8 NEW_INSTALL=false# [optional] loadbalance for accessing k8s from outside

#负载均衡服务器地址

[ex_lb]

#192.168.1.6 LB_ROLE=backup EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443

#192.168.1.7 LB_ROLE=master EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443# [optional] ntp server for the cluster

#时间服务器地址

[chrony]

#192.168.1.1[all:vars]

# --------- Main Variables ---------------

# Secure port for apiservers

#apiserver的端口

SECURE_PORT="6443"# Cluster container-runtime supported: docker, containerd

# if k8s version >= 1.24, docker is not supported

#运行时

CONTAINER_RUNTIME="containerd"# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn

#使用什么网络组件

CLUSTER_NETWORK="calico"# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'

PROXY_MODE="ipvs"# K8S Service CIDR, not overlap with node(host) networking

#Service的地址范围

SERVICE_CIDR="10.10.0.0/16"# Cluster CIDR (Pod CIDR), not overlap with node(host) networking

#pod的地址范围

CLUSTER_CIDR="10.20.0.0/16"# NodePort Range

#pod的端口范围

NODE_PORT_RANGE="30000-62767"# Cluster DNS Domain

CLUSTER_DNS_DOMAIN="cluster.local"# -------- Additional Variables (don't change the default value right now) ---

# Binaries Directory

#二进制的路径

bin_dir="/usr/local/bin"# Deploy Directory (kubeasz workspace)

base_dir="/etc/kubeasz"# Directory for a specific cluster

cluster_dir="{{ base_dir }}/clusters/k8s-cluster1"# CA and other components cert/key Directory

ca_dir="/etc/kubernetes/ssl"# Default 'k8s_nodename' is empty

k8s_nodename=''# Default python interpreter

ansible_python_interpreter=/usr/bin/python3

编辑cluster config.yml文件

在部署节点服务器10.0.0.200上

cd /etc/kubeasz/clusters/k8s-cluster1

vim config.yml

############################

# prepare

############################

# 可选离线安装系统软件包 (offline|online)

#选择online就会在网上下载一些包,如果选择offline,就要保证/etc/kubeasz/down/目录中有需要的包

INSTALL_SOURCE: "online"# 可选进行系统安全加固 github.com/dev-sec/ansible-collection-hardening

#这个一般不修改

OS_HARDEN: false############################

# role:deploy

############################

# default: ca will expire in 100 years

# default: certs issued by the ca will expire in 50 years

#证书签发,一般不修改

CA_EXPIRY: "876000h"

CERT_EXPIRY: "438000h"# force to recreate CA and other certs, not suggested to set 'true'

CHANGE_CA: false# kubeconfig 配置参数

CLUSTER_NAME: "cluster1"

CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"# k8s version

K8S_VER: "1.27.2"# set unique 'k8s_nodename' for each node, if not set(default:'') ip add will be used

# CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',

# and must start and end with an alphanumeric character (e.g. 'example.com'),

# regex used for validation is '[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*'

#不修改;读的hosts文件里面的k8s_nodename='master1'

K8S_NODENAME: "{%- if k8s_nodename != '' -%} \{{ k8s_nodename|replace('_', '-')|lower }} \{%- else -%} \{{ inventory_hostname }} \{%- endif -%}"############################

# role:etcd

############################

# 设置不同的wal目录,可以避免磁盘io竞争,提高性能

#设置etcd的数据目录,一般用单独的数据盘

ETCD_DATA_DIR: "/var/lib/etcd"

ETCD_WAL_DIR: ""############################

# role:runtime [containerd,docker]

############################

# ------------------------------------------- containerd

# [.]启用容器仓库镜像

ENABLE_MIRROR_REGISTRY: true# [containerd]基础容器镜像

#设置从哪里下载镜像,建议使用公司内部的镜像仓库(ezdown -D 刚刚已经拉取这个镜像,然后自己传到公司镜像仓库)

#这个镜像必不可少,用来初始化容器的底层网络的

SANDBOX_IMAGE: "harbor.canghailyt.com/base/pause:3.9"# [containerd]容器持久化存储目录

#containerd的数据目录,一般要使用单独的数据盘

CONTAINERD_STORAGE_DIR: "/var/lib/containerd"# ------------------------------------------- docker

# [docker]容器存储目录

DOCKER_STORAGE_DIR: "/var/lib/docker"# [docker]开启Restful API

ENABLE_REMOTE_API: false# [docker]信任的HTTP仓库

INSECURE_REG:- "http://easzlab.io.local:5000"- "https://{{ HARBOR_REGISTRY }}"############################

# role:kube-master

############################

# k8s 集群 master 节点证书配置,可以添加多个ip和域名(比如增加公网ip和域名)

#负载均衡vip,一定要签发

MASTER_CERT_HOSTS:- "10.0.0.230"- "api.canghailyt.com"#- "www.test.com"# node 节点上 pod 网段掩码长度(决定每个节点最多能分配的pod ip地址)

# 如果flannel 使用 --kube-subnet-mgr 参数,那么它将读取该设置为每个节点分配pod网段

# https://github.com/coreos/flannel/issues/847

#每个node里面的pod的子网大小

NODE_CIDR_LEN: 24############################

# role:kube-node

############################

# Kubelet 根目录

#一般不修改

KUBELET_ROOT_DIR: "/var/lib/kubelet"# 每个node节点可以创建的最大pod 数(尽可能的大)

MAX_PODS: 200# 配置为kube组件(kubelet,kube-proxy,dockerd等)预留的资源量

# 数值设置详见templates/kubelet-config.yaml.j2

KUBE_RESERVED_ENABLED: "no"# k8s 官方不建议草率开启 system-reserved, 除非你基于长期监控,了解系统的资源占用状况;

# 并且随着系统运行时间,需要适当增加资源预留,数值设置详见templates/kubelet-config.yaml.j2

# 系统预留设置基于 4c/8g 虚机,最小化安装系统服务,如果使用高性能物理机可以适当增加预留

# 另外,集群安装时候apiserver等资源占用会短时较大,建议至少预留1g内存

SYS_RESERVED_ENABLED: "no"############################

# role:network [flannel,calico,cilium,kube-ovn,kube-router]

############################

# ------------------------------------------- flannel

# [flannel]设置flannel 后端"host-gw","vxlan"等

FLANNEL_BACKEND: "vxlan" #网络模型

DIRECT_ROUTING: false #是否启用直接路由;启用node节点就不能跨子网了,不启用就可以# [flannel]

flannel_ver: "v0.21.4"# ------------------------------------------- calico

# [calico] IPIP隧道模式可选项有: [Always, CrossSubnet, Never],跨子网可以配置为Always与CrossSubnet(公有云建议使用always比较省事,其他的话需要修改各自公有云的网络配置,具体可以参考各个公有云说明)

# 其次CrossSubnet为隧道+BGP路由混合模式可以提升网络性能,同子网配置为Never即可.

CALICO_IPV4POOL_IPIP: "Always"# [calico]设置 calico-node使用的host IP,bgp邻居通过该地址建立,可手工指定也可以自动发现

IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"# [calico]设置calico 网络 backend: bird, vxlan, none

CALICO_NETWORKING_BACKEND: "bird"# [calico]设置calico 是否使用route reflectors

# 如果集群规模超过50个节点,建议启用该特性

CALICO_RR_ENABLED: false# CALICO_RR_NODES 配置route reflectors的节点,如果未设置默认使用集群master节点

# CALICO_RR_NODES: ["192.168.1.1", "192.168.1.2"]

CALICO_RR_NODES: []# [calico]更新支持calico 版本: ["3.19", "3.23"]

calico_ver: "v3.24.6"# [calico]calico 主版本

calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"# ------------------------------------------- cilium

# [cilium]镜像版本

cilium_ver: "1.13.2"

cilium_connectivity_check: true

cilium_hubble_enabled: false

cilium_hubble_ui_enabled: false# ------------------------------------------- kube-ovn

# [kube-ovn]离线镜像tar包

kube_ovn_ver: "v1.11.5"# ------------------------------------------- kube-router

# [kube-router]公有云上存在限制,一般需要始终开启 ipinip;自有环境可以设置为 "subnet"

OVERLAY_TYPE: "full"# [kube-router]NetworkPolicy 支持开关

FIREWALL_ENABLE: true# [kube-router]kube-router 镜像版本

kube_router_ver: "v1.5.4"############################

# role:cluster-addon

############################

# coredns 自动安装

dns_install: "no" #是否帮你安装coredns,我们一般自己装

corednsVer: "1.9.3"

ENABLE_LOCAL_DNS_CACHE: false #是否启用本地dns缓存

dnsNodeCacheVer: "1.22.20"

# 设置 local dns cache 地址

LOCAL_DNS_CACHE: "169.254.20.10"# metric server 自动安装

#metric server 是做指标收集的

metricsserver_install: "no"#是否帮你安装metric server

metricsVer: "v0.6.3"# dashboard 自动安装

dashboard_install: "no"

dashboardVer: "v2.7.0"

dashboardMetricsScraperVer: "v1.0.8"# prometheus 自动安装

prom_install: "no"

prom_namespace: "monitor"

prom_chart_ver: "45.23.0"# kubeapps 自动安装,如果选择安装,默认同时安装local-storage(提供storageClass: "local-path")

kubeapps_install: "no"

kubeapps_install_namespace: "kubeapps"

kubeapps_working_namespace: "default"

kubeapps_storage_class: "local-path"

kubeapps_chart_ver: "12.4.3"# local-storage (local-path-provisioner) 自动安装

local_path_provisioner_install: "no"

local_path_provisioner_ver: "v0.0.24"

# 设置默认本地存储路径

local_path_provisioner_dir: "/opt/local-path-provisioner"# nfs-provisioner 自动安装

nfs_provisioner_install: "no"

nfs_provisioner_namespace: "kube-system"

nfs_provisioner_ver: "v4.0.2"

nfs_storage_class: "managed-nfs-storage"

nfs_server: "192.168.1.10"

nfs_path: "/data/nfs"# network-check 自动安装

network_check_enabled: false

network_check_schedule: "*/5 * * * *"############################

# role:harbor

############################

# harbor version,完整版本号

HARBOR_VER: "v2.6.4"

HARBOR_DOMAIN: "harbor.easzlab.io.local"

HARBOR_PATH: /var/data

HARBOR_TLS_PORT: 8443

HARBOR_REGISTRY: "{{ HARBOR_DOMAIN }}:{{ HARBOR_TLS_PORT }}"# if set 'false', you need to put certs named harbor.pem and harbor-key.pem in directory 'down'

HARBOR_SELF_SIGNED_CERT: false# install extra component

HARBOR_WITH_NOTARY: false

HARBOR_WITH_TRIVY: false

HARBOR_WITH_CHARTMUSEUM: false

环境初始化

在部署节点服务器10.0.0.200上

#执行这个命令5s内可以取消

#playbooks/01.prepare.yml 这个文件可以修改

root@k8s-deploy:/etc/kubeasz# ./ezctl setup k8s-cluster1 01

ansible-playbook -i clusters/k8s-cluster1/hosts -e @clusters/k8s-cluster1/config.yml playbooks/01.prepare.yml

^C

root@k8s-deploy:/etc/kubeasz#

#各节点环境初始化;如果出错可以重复执行的

./ezctl setup k8s-cluster1 01

#/etc/kubeasz/roles/prepare/templates 这个目录下的文件可以调整内核优化

部署etcd集群

在部署节点服务器10.0.0.200上

#/etc/kubeasz/roles/etcd/templates/etcd-csr.json.j2这是etcd服务器证书签发配置信息,可以修改。如果其中一个etcd服务器挂掉,新服务器使用原来的ip,则不需要从新去签发新服务器的。

root@k8s-deploy:/etc/kubeasz# ./ezctl setup k8s-cluster1 02

ansible-playbook -i clusters/k8s-cluster1/hosts -e @clusters/k8s-cluster1/config.yml playbooks/02.etcd.yml

^C

root@k8s-deploy:/etc/kubeasz#

#部署etcd集群

./ezctl setup k8s-cluster1 02#检查etcd是否部署成功;登录某个etcd服务器,执行如下命令,如果返回如下信息则部署成功

root@k8s-etcd1:~# export NODE_IPS="10.0.0.203 10.0.0.204"

root@k8s-etcd1:~# for ip in ${NODE_IPS};do ETCDCTL_API=3 /usr/local/bin/etcdctl --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health;done

https://10.0.0.203:2379 is healthy: successfully committed proposal: took = 13.32036ms

https://10.0.0.204:2379 is healthy: successfully committed proposal: took = 13.25035ms

部署容器运行时containerd

在部署节点服务器10.0.0.200上

#由于安装运行时,也需要pause:3.9这个镜像;在修改config.yml的时候我们已经上传到了我们自己镜像仓库,也修改了地址;这里验证一下

root@k8s-deploy:/etc/kubeasz# grep SANDBOX_IMAGE ./clusters/* -R

./clusters/k8s-cluster1/config.yml:SANDBOX_IMAGE: "harbor.canghailyt.com/base/pause:3.9"

#由于自己搭建的环境没有dns解析,所以我们需要在安装运行时的服务器上绑定镜像仓库地址的host域名解析;需要在部署容器运行时containerd的配置文件中添加,如下:

vim /etc/kubeasz/roles/containerd/tasks/main.yml- name: 添加域名解析shell: "echo '10.0.0.207 harbor.canghailyt.com' >> /etc/hosts"#修改containerd的service和配置文件;在/etc/kubeasz/roles/containerd/templates下的containerd.service.j2和config.toml.j2;如果你的containerd的有配置需要修改都需要提前在这里修改#在部署containerd的时候,我们希望同时部署上containerd的客户端nerdctl;具体操作如下

cd /etc/kubeasz

wget https://github.com/containerd/nerdctl/releases/download/v1.5.0/nerdctl-1.5.0-linux-amd64.tar.gz

#解压到/etc/kubeasz/bin/containerd-bin/;部署的时候也将nerdctl二进制文件分发到需要部署containerd的服务器中

tar xvf nerdctl-1.5.0-linux-amd64.tar.gz -C /etc/kubeasz/bin/containerd-bin/

#修改containerd的部署配置文件

vim /etc/kubeasz/roles/containerd/tasks/main.yml

- name: 准备containerd相关目录file: name={{ item }} state=directorywith_items:- "{{ bin_dir }}"- "/etc/containerd"- "/etc/nerdctl" #这里添加nerdctl的目录- name: 创建 nerdctl 配置文件template: src=nerdctl.toml.j2 dest=/etc/nerdctl/nerdctl.toml #分发nerdctl配置文件tags: upgrade

#自己创建nerdctl的配置文件

vim /etc/kubeasz/roles/containerd/templates/nerdctl.toml.j2

namespace = "k8s.io"

debug = false

debug_full = false

insecure_registry = true#修改containerd的部署配置文件;dest={{ bin_dir }}这里原来是dest={{ bin_dir }}/containerd-bin/

vim /etc/kubeasz/roles/containerd/tasks/main.yml- name: 下载 containerd 二进制文件copy: src={{ item }} dest={{ bin_dir }}/ mode=0755with_fileglob:- "{{ base_dir }}/bin/containerd-bin/*"tags: upgrade

#上面是在说将{{ base_dir }}/bin/containerd-bin/*的文件,拷贝到{{ bin_dir }}目录下,即,将/etc/kubease/bin/containerd-bin/*的文件,拷贝到/usr/local/bin目录下。要做如上修改,我们还需要修改其他文件

grep 'containerd-bin' -R ./roles/containerd/*

#注意不要修改/etc/kubeasz/roles/containerd/tasks/main.yml文件中,这是源目录with_fileglob:- "{{ base_dir }}/bin/containerd-bin/*"#部署运行时containerd

./ezctl setup k8s-cluster1 03#到其中一个节点去验证是否安装部署成功

部署master节点和node节点

在部署节点服务器10.0.0.200上

#部署master节点

./ezctl setup k8s-cluster1 04

#部署node节点

./ezctl setup k8s-cluster1 05

部署calico网络组件

在部署节点服务器10.0.0.200上

部署网络服务calico-当前版本部署的calico启动pod提示calico.pem找不到

推荐使用yaml文件,我们自己部署网络组件

#使用kubease部署calico,主要注意下面文件的镜像地址,可以先下载下来上传到我们自己镜像仓库;然后修改镜像地址

root@k8s-deploy:/etc/kubeasz# grep 'image:' roles/calico/templates/calico-v3.24.yaml.j2image: easzlab.io.local:5000/calico/cni:{{ calico_ver }}image: easzlab.io.local:5000/calico/node:{{ calico_ver }} image: easzlab.io.local:5000/calico/node:{{ calico_ver }}image: easzlab.io.local:5000/calico/kube-controllers:{{ calico_ver }}#自己使用yaml文件部署calico

#编辑calico的配置yaml文件

cat /etc/kubeasz/clusters/k8s-cluster1/hosts #这个文件中的pod子网范围要和calico3.26.1-ipip_ubuntu2204-k8s-1.27.x.yaml文件中子网范围对应

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking

CLUSTER_CIDR="10.20.0.0/16"#calico3.26.1-ipip_ubuntu2204-k8s-1.27.x.yaml pod子网范围设置#自定义Pod子网掩码- name: CALICO_IPV4POOL_CIDRvalue: "10.20.0.0/16"#calico3.26.1-ipip_ubuntu2204-k8s-1.27.x.yaml文件中,还需要注意修改镜像仓库地址,如果默认的下载慢,就提前下载好,上传自己镜像仓库,然后修改成自己的仓库地址#calico3.26.1-ipip_ubuntu2204-k8s-1.27.x.yaml文件中还需要指定网卡;因为这样安装默认是去用第一块网卡,所以需要去指定。IP_AUTODETECTION_METHOD

- name: IP_AUTODETECTION_METHODvalue: "interface=eth0" #指定使用eth0网卡#- name: IP_AUTODETECTION_METHOD# value: "interface=bond0" #使用bond0网卡#- name: IP_AUTODETECTION_METHOD# value: "skip-interface=eth1,ens33" #不使用指定的网卡#- name: IP_AUTODETECTION_METHOD# value: "interface=bond*,eth*" #使用正则模糊匹配网卡#自己使用yaml文件部署calico

kubectl apply -f calico3.26.1-ipip_ubuntu2204-k8s-1.27.x.yaml#拷贝calico证书到各个node服务器和各个mater

#各个node服务器创建calico证书目录

root@k8s-node1:~# mkdir -p /etc/calico/ssl

#在部署服务器查找calico证书

root@k8s-deploy:/usr/local/src/kubernetes/cluster/addons/dns/coredns# find / -name 'calico.pem'

/etc/kubeasz/clusters/k8s-cluster1/ssl/calico.pem

root@k8s-deploy:/usr/local/src/kubernetes/cluster/addons/dns/coredns# find / -name 'calico-key.pem'

/etc/kubeasz/clusters/k8s-cluster1/ssl/calico-key.pem#拷贝证书到node服务器

scp /etc/kubeasz/clusters/k8s-cluster1/ssl/calico.pem 10.0.0.205:/etc/calico/ssl/

calico.pem

scp /etc/kubeasz/clusters/k8s-cluster1/ssl/calico.pem 10.0.0.206:/etc/calico/ssl/

calico.pem

scp /etc/kubeasz/clusters/k8s-cluster1/ssl/calico-key.pem 10.0.0.206:/etc/calico/ssl/

calico-key.pem

scp /etc/kubeasz/clusters/k8s-cluster1/ssl/calico-key.pem 10.0.0.205:/etc/calico/ssl/

calico-key.pem #测试成功

root@k8s-deploy:/etc/kubeasz# kubectl get -A pod

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-6655b6c4b-qjcb8 1/1 Running 0 101s

kube-system calico-node-f9qwt 1/1 Running 0 103s

kube-system calico-node-lkmss 1/1 Running 0 103s

kube-system calico-node-n7bcw 1/1 Running 0 103s

kube-system calico-node-xh7cp 1/1 Running 0 103s#验证node节点路由效果

root@k8s-deploy:/etc/kubeasz# scp ./bin/calicoctl 10.0.0.205:/usr/local/bin/ #使用yaml文件自己安装的calico没有这个二进制执行文件,所以我们拷贝过去

calicoctl 100% 57MB 55.4MB/s 00:01

#查看路由效果

root@k8s-node1:~# calicoctl node status

Calico process is running.IPv4 BGP status

+--------------+-------------------+-------+----------+-------------+

| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |

+--------------+-------------------+-------+----------+-------------+

| 10.0.0.201 | node-to-node mesh | up | 06:26:31 | Established |

| 10.0.0.202 | node-to-node mesh | up | 06:26:31 | Established |

| 10.0.0.206 | node-to-node mesh | up | 06:26:29 | Established |

+--------------+-------------------+-------+----------+-------------+IPv6 BGP status

No IPv6 peers found.root@k8s-node1:~# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.0.0.2 0.0.0.0 UG 0 0 0 eth0

10.0.0.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

10.200.44.0 10.0.0.206 255.255.255.0 UG 0 0 0 tunl0

10.200.154.0 0.0.0.0 255.255.255.0 U 0 0 0 *

10.200.161.0 10.0.0.201 255.255.255.0 UG 0 0 0 tunl0

10.200.208.0 10.0.0.202 255.255.255.0 UG 0 0 0 tunl0

创建Pod验证网络通信正常

kubectl run net-test1 --image=alpine sleep 360000 #创建pod测试夸主机网络通信是否正常

kubectl run net-test2 --image=alpine sleep 360000

kubectl run net-test3 --image=alpine sleep 360000

#查看pod的信息

root@k8s-deploy:/etc/kubeasz# kubectl get -A pod -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default net-test1 1/1 Running 0 31m 10.20.44.1 node2 <none> <none>

default net-test2 1/1 Running 0 31m 10.20.154.2 node1 <none> <none>

default net-test3 1/1 Running 0 31m 10.20.44.2 node2 <none> <none>

kube-system calico-kube-controllers-6655b6c4b-sgctm 1/1 Running 0 36m 10.20.154.0 node1 <none> <none>

kube-system calico-node-5cppx 1/1 Running 0 36m 10.0.0.202 master2 <none> <none>

kube-system calico-node-chzn9 1/1 Running 0 36m 10.0.0.201 master1 <none> <none>

kube-system calico-node-gnxzw 1/1 Running 0 36m 10.0.0.205 node1 <none> <none>

kube-system calico-node-lz5fw 1/1 Running 0 36m 10.0.0.206 node2 <none> <none>

#进入net-test1,测试net-test2网络

root@k8s-deploy:/etc/kubeasz# kubectl exec -it net-test1 sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

/ # ping 10.20.154.2

PING 10.20.154.2 (10.20.154.2): 56 data bytes

64 bytes from 10.20.154.2: seq=0 ttl=62 time=3.627 ms

64 bytes from 10.20.154.2: seq=1 ttl=62 time=0.392 ms

使kubectl命令可以在其他节点执行

#将/root/.kube/config这个文件拷贝到那个主机,就可以执行了

root@k8s-deploy:/etc/kubeasz# scp /root/.kube/config 10.0.0.201:/root/.kube

config 100% 6198 8.7MB/s 00:00

root@k8s-deploy:/etc/kubeasz#

添加、删除master节点和node节点

#添加master

#k8s_nodename="master3" 可以加可以不加,不加就会默认是ip 10.0.0.208

./ezctl add-master k8s-cluster1 10.0.0.208 k8s_nodename="master3"

#删除master

./ezctl del-master k8s-cluster1 10.0.0.208#添加node

./ezctl add-node k8s-cluster1 10.0.0.209 k8s_nodename="node3"

#删除node

./ezctl del-node k8s-cluster1 10.0.0.209

#验证

root@k8s-deploy:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

master1 Ready,SchedulingDisabled master 3h57m v1.27.2

master2 Ready,SchedulingDisabled master 3h57m v1.27.2

master3 Ready,SchedulingDisabled master 23m v1.27.2

node1 Ready node 3h55m v1.27.2

node2 Ready node 3h55m v1.27.2

node3 Ready node 83s v1.27.2

升级集群

升级方式:滚动升级和新部署一套把数据迁移过去

root@k8s-deploy:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

master1 Ready,SchedulingDisabled master 3h57m v1.27.2

master2 Ready,SchedulingDisabled master 3h57m v1.27.2node1 Ready node 3h55m v1.27.2

node2 Ready node 3h55m v1.27.2

下载更新包

下载包需要去github的k8s项目里面去下载:下载如下包

kubernetes-client-linux-amd64.tar.gz

kubernetes-node-linux-amd64.tar.gz

kubernetes-server-linux-amd64.tar.gz

kubernetes.tar.gz

升级

#解压包

root@k8s-deploy:/usr/local/src# tar xvf kubernetes-server-linux-amd64.tar.gz

root@k8s-deploy:/usr/local/src# tar xvf kubernetes.tar.gz

root@k8s-deploy:/usr/local/src# tar xvf kubernetes-node-linux-amd64.tar.gz

root@k8s-deploy:/usr/local/src# tar xvf kubernetes-client-linux-amd64.tar.gz#拷贝二进制文件

root@k8s-deploy:/usr/local/src# cd kubernetes/server/bin

root@k8s-deploy:/usr/local/src/kubernetes/server/bin# cp kube-apiserver kube-controller-manager kube-scheduler kubelet kube-proxy kubectl /etc/kubeasz/bin/#验证是否拷贝成功

root@k8s-deploy:/usr/local/src/kubernetes/server/bin# /etc/kubeasz/bin/kube-apiserver --version

Kubernetes v1.27.4#升级

root@k8s-deploy:/usr/local/src/kubernetes/server/bin# cd /etc/kubeasz/

root@k8s-deploy:/etc/kubeasz# ./ezctl upgrade k8s-cluster1#验证是否升级成功

root@k8s-deploy:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

master1 Ready,SchedulingDisabled master 4h33m v1.27.4

master2 Ready,SchedulingDisabled master 4h33m v1.27.4

node1 Ready node 4h31m v1.27.4

node2 Ready node 4h31m v1.27.4

部署coredns

#coredns的yaml文件可以在刚刚下载的kuberneters源码目录去找;

root@k8s-deploy:/usr/local/src/kubernetes/cluster/addons/dns/coredns# pwd

/usr/local/src/kubernetes/cluster/addons/dns/coredns

root@k8s-deploy:/usr/local/src/kubernetes/cluster/addons/dns/coredns# ll

total 44

drwxr-xr-x 2 root root 4096 Jul 19 20:37 ./

drwxr-xr-x 5 root root 4096 Jul 19 20:37 ../

-rw-r--r-- 1 root root 1075 Jul 19 20:37 Makefile

-rw-r--r-- 1 root root 5066 Jul 19 20:37 coredns.yaml.base

-rw-r--r-- 1 root root 5116 Jul 19 20:37 coredns.yaml.in

-rw-r--r-- 1 root root 5118 Jul 19 20:37 coredns.yaml.sed

-rw-r--r-- 1 root root 344 Jul 19 20:37 transforms2salt.sed

-rw-r--r-- 1 root root 287 Jul 19 20:37 transforms2sed.sed#拷贝到kubease目录

root@k8s-deploy:/usr/local/src/kubernetes/cluster/addons/dns/coredns# cp coredns.yaml.base /etc/kubeasz/coredns.yaml

#编辑文件

vim /etc/kubeasz/coredns.yamlreadykubernetes cluster.local in-addr.arpa ip6.arpa {pods insecurefallthrough in-addr.arpa ip6.arpattl 30}prometheus :9153forward . /etc/resolv.conf { max_concurrent 1000 #最大链接数}cache 30 #表示域名解析结果在coredns缓存300秒loopreloadloadbalance}#上面段中__DNS__DOMAIN__表示的是部署k8s的时候services的域名后缀,

#即是/etc/kubeasz/clusters/k8s-cluster1/hosts文件中CLUSTER_DNS_DOMAIN="cluster.local";

#就是说只要遇到cluster.local的域名后缀解析就走services;

#forward . /etc/resolv.conf 表示不是cluster.local的域名后缀就走/etc/resolv.conf这个文件;

#当然也可以修改成其他,如,其他地址dns或者公司内部的dns地址readykubernetes cluster.local in-addr.arpa ip6.arpa {pods insecurefallthrough in-addr.arpa ip6.arpattl 30}prometheus :9153#forward . /etc/resolv.conf {forward . 223.6.6.6 {max_concurrent 1000}cache 300loopreloadloadbalance}myserve.com { #指定myserve.com这个域名走这个dns服务器172.16.16.16:53;如果有特定的域名,需要走特定的dns,就可以这么设置。forward . 172.16.16.16:53}#修改内存和cpu限制resources:limits:memory: __DNS__MEMORY__LIMIT__requests:cpu: 100mmemory: 70Mi

#改成如下resources:limits:cpus: 500m #表示50%的cpumemory: 512Mirequests:cpu: 100mmemory: 70Mi#将镜像的地址最好修改成国内的地址:containers:- name: corednsimage: registry.k8s.io/coredns/coredns:v1.10.1

#改成containers:- name: corednsimage: registry.cn-hangzhou.aliyuncs.com/zhangshijie/coredns:v1.10.1#clusterIP获取,首先创建一个pod,然后进入pod,查看/etc/resolv.conf文件。

spec:selector:k8s-app: kube-dnsclusterIP: __DNS__SERVER__

#改成

spec:selector:k8s-app: kube-dnsclusterIP: 10.10.0.2#部署coredns

kubectl apply -f coredns.yaml

部署dashboard

root@k8s-deploy:~/kubernetes-v1.27.x-files/dashboard-v2.7.0# pwd

/root/kubernetes-v1.27.x-files/dashboard-v2.7.0

#部署dashborad;注意可以更换dashboard-v2.7.0.yaml里面镜像地址,先下载到自己的仓库,然后改成自己的

kubectl apply -f dashboard-v2.7.0.yaml -f admin-user.yaml -f admin-secret.yaml #注意部署顺序#获取登录token

root@k8s-deploy:~/kubernetes-v1.27.x-files/dashboard-v2.7.0# kubectl get secret -A | grep 'admin'

kubernetes-dashboard dashboard-admin-user kubernetes.io/service-account-token 3 3m41s

root@k8s-deploy:~/kubernetes-v1.27.x-files/dashboard-v2.7.0# kubectl describe secret -n kubernetes-dashboard dashboard-admin-user

Name: dashboard-admin-user

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-userkubernetes.io/service-account.uid: a3d35298-da7d-43b4-9296-ff3ec451e55dType: kubernetes.io/service-account-tokenData

====

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IkhadlFaODVScDdlSlI0dDNWX1BfbUkxRzRISHI2SG40Y0toU0pYSXpSR00ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdXNlciIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJhZG1pbi11c2VyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiYTNkMzUyOTgtZGE3ZC00M2I0LTkyOTYtZmYzZWM0NTFlNTVkIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmFkbWluLXVzZXIifQ.8AxNsR1rrCLX4udcPF_IH796Xf6a-HsqyCqw7kFu_u8Yfq0WCDhyLzyn-Pvy42OCt3MyyJVIy-b8X_R6azySlmGCGCm6zW8OSS2lrdpv-hXFsBWl47A3UPLsKtXJs5eV6ilRh_ljKioIMlqjPXTFIO1Wm1_ta1WGUlhvVrpXQYwRHOBIua3RHiRWgU5huUqjGFZp_Kizax90ppP2riUCB3w_mMIWob31f5PcccHmnE1fNSy-_4wP2OGEK48zEWTKcby0hdScE-2P0jCP4mtR5mFGAHF73Jla15AaDrhHmhQu_GfutJnuuVe3iu9JjkIH3J5THaJiEJ-EHO6JDfkv8w

ca.crt: 1310 bytes

namespace: 20 bytes#获取登录Kubeconfig文件

#编辑部署主机/root/.kube/config文件

server: https://10.0.0.201:6443 #可以修改成负载均衡的vip地址,即:server: https://10.0.0.230:6443

#文件最后添加刚刚获取到的token;修改了这个文件,保存方式是:x!

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IkhadlFaODVScDdlSlI0dDNWX1BfbUkxRzRISHI2SG40Y0toU0pYSXpSR00ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdXNlciIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJhZG1pbi11c2VyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiYTNkMzUyOTgtZGE3ZC00M2I0LTkyOTYtZmYzZWM0NTFlNTVkIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmFkbWluLXVzZXIifQ.8AxNsR1rrCLX4udcPF_IH796Xf6a-HsqyCqw7kFu_u8Yfq0WCDhyLzyn-Pvy42OCt3MyyJVIy-b8X_R6azySlmGCGCm6zW8OSS2lrdpv-hXFsBWl47A3UPLsKtXJs5eV6ilRh_ljKioIMlqjPXTFIO1Wm1_ta1WGUlhvVrpXQYwRHOBIua3RHiRWgU5huUqjGFZp_Kizax90ppP2riUCB3w_mMIWob31f5PcccHmnE1fNSy-_4wP2OGEK48zEWTKcby0hdScE-2P0jCP4mtR5mFGAHF73Jla15AaDrhHmhQu_GfutJnuuVe3iu9JjkIH3J5THaJiEJ-EHO6JDfkv8w

#最后将文件拷贝到自己的电脑#设置token登录会话保持时间

vim dashboard-v2.7.0.yamlargs:- --auto-generate-certificates- --namespace=kubernetes-dashboard- --token-ttl=3600 #设置token登录会话保持时间

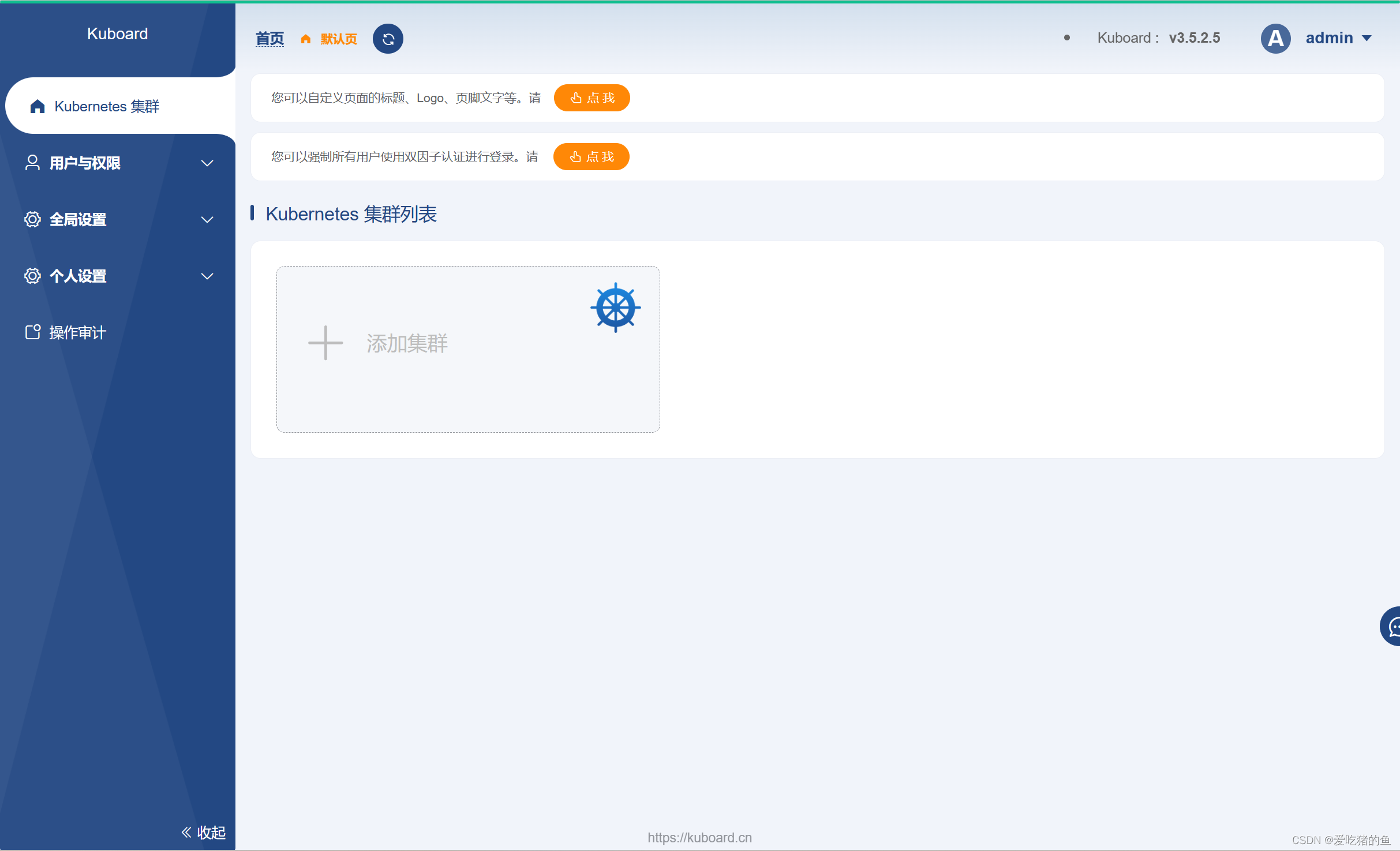

部署kuboard

官方地址:https://kuboard.cn/overview/#kuboard%E5%9C%A8%E7%BA%BF%E4%BD%93%E9%AA%8C

https://github.com/eip-work/kuboard-press #github

kuboard需要共享存储

#创建共享存储;在那个机器上创建自己定就好了;我选择在部署服务器10.0.0.200上创建

#安装nfs-server

apt install nfs-server

#创建目录,用于kuboard的数据存储使用

mkdir -p /data/k8sdata/kuboard

#编辑vim /etc/exports文件,将/data/k8sdata/kuboard木目录共享出去,权限为读写

vim /etc/exports

/data/k8sdata/kuboard *(rw,no_root_squash)

#重启nfs并设置开机启动

systemctl restart nfs-server.service

systemctl enable nfs-server.service#编辑kuboard-all-in-one.yaml文件

vim kuboard-all-in-one.yaml

---

apiVersion: v1

kind: Namespace

metadata:name: kuboard---

apiVersion: apps/v1

kind: Deployment

metadata:annotations: {}labels:k8s.kuboard.cn/name: kuboard-v3name: kuboard-v3namespace: kuboard

spec:replicas: 1revisionHistoryLimit: 10selector:matchLabels:k8s.kuboard.cn/name: kuboard-v3template:metadata:labels:k8s.kuboard.cn/name: kuboard-v3spec:#affinity:# nodeAffinity:# preferredDuringSchedulingIgnoredDuringExecution:# - preference:# matchExpressions:# - key: node-role.kubernetes.io/master# operator: Exists# weight: 100# - preference:# matchExpressions:# - key: node-role.kubernetes.io/control-plane# operator: Exists# weight: 100volumes:- name: kuboard-datanfs:server: 10.0.0.200 #共享存储地址path: /data/k8sdata/kuboard containers:- env:- name: "KUBOARD_ENDPOINT"value: "http://kuboard-v3:80"- name: "KUBOARD_AGENT_SERVER_TCP_PORT"value: "10081"image: swr.cn-east-2.myhuaweicloud.com/kuboard/kuboard:v3 volumeMounts:- name: kuboard-data mountPath: /data readOnly: falseimagePullPolicy: AlwayslivenessProbe:failureThreshold: 3httpGet:path: /port: 80scheme: HTTPinitialDelaySeconds: 30periodSeconds: 10successThreshold: 1timeoutSeconds: 1name: kuboardports:- containerPort: 80name: webprotocol: TCP- containerPort: 443name: httpsprotocol: TCP- containerPort: 10081name: peerprotocol: TCP- containerPort: 10081name: peer-uprotocol: UDPreadinessProbe:failureThreshold: 3httpGet:path: /port: 80scheme: HTTPinitialDelaySeconds: 30periodSeconds: 10successThreshold: 1timeoutSeconds: 1resources: {}#dnsPolicy: ClusterFirst#restartPolicy: Always#serviceAccount: kuboard-boostrap#serviceAccountName: kuboard-boostrap#tolerations:# - key: node-role.kubernetes.io/master# operator: Exists---

apiVersion: v1

kind: Service

metadata:annotations: {}labels:k8s.kuboard.cn/name: kuboard-v3name: kuboard-v3namespace: kuboard

spec:ports:- name: webnodePort: 30080port: 80protocol: TCPtargetPort: 80- name: tcpnodePort: 30081port: 10081protocol: TCPtargetPort: 10081- name: udpnodePort: 30081port: 10081protocol: UDPtargetPort: 10081selector:k8s.kuboard.cn/name: kuboard-v3sessionAffinity: Nonetype: NodePort#部署kuboard

kubectl apply -f kuboard-all-in-one.yaml

#查看,部署成功

root@k8s-deploy:~/kubernetes-v1.27.x-files/20230416-cases/3.kuboard# kubectl get -A pod

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-67c67b9b5f-99xrl 1/1 Running 3 (70m ago) 46h

kube-system calico-node-42hx2 1/1 Running 1 (74m ago) 46h

kube-system calico-node-gk8mn 1/1 Running 1 (70m ago) 46h

kube-system calico-node-qnq6r 1/1 Running 1 (74m ago) 46h

kube-system calico-node-z2579 1/1 Running 1 (70m ago) 46h

kube-system coredns-bbbfcc99-fq57k 1/1 Running 1 (74m ago) 23h

kubernetes-dashboard dashboard-metrics-scraper-7bd9c46f9c-pnjbl 1/1 Running 0 88m

kubernetes-dashboard kubernetes-dashboard-bfc6bfc95-2q4zn 1/1 Running 0 88m

kuboard kuboard-v3-68c48b4cb-9gdkp 1/1 Running 0 13m#查看kuboard的端口映射;http://10.0.0.205:30080/;默认登录账号密码:admin Kuboard123

root@k8s-deploy:~/kubernetes-v1.27.x-files/20230416-cases/3.kuboard# kubectl get svc -A | grep kuboard

kuboard kuboard-v3 NodePort 10.10.213.186 <none> 80:30080/TCP,10081:30081/TCP,10081:30081/UDP 21mkuboard界面操作管理

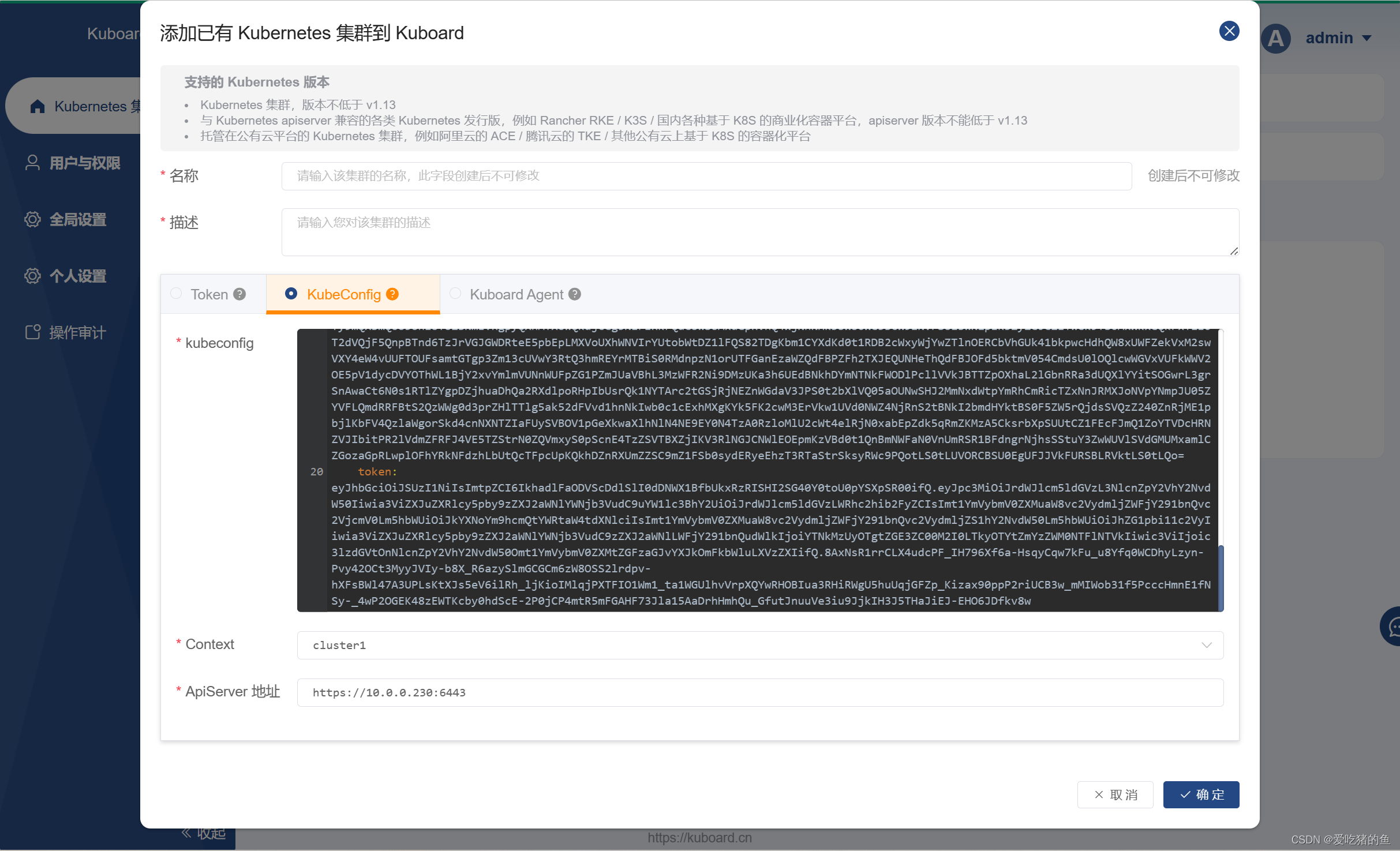

创建集群:点击添加集群

将/root/.kube/config文件内容复制进去

总结kubectl命令的使用

kubectl命令补全

#设置kubectl命令补全;在 bash 中设置当前 shell 的自动补全,要先安装 bash-completion 包

apt install -y bash-completion

kubectl completion bash > /root/kubectl-completion.sh

chmod a+x /root/kubectl-completion.sh

echo "source /root/kubectl-completion.sh" >> /etc/profile

source /etc/profile

## kubectl命令

kubectl命令使用:详情请看官方文档:https://kubernetes.io/zh-cn/docs/reference/kubectl/cheatsheet/```powershell

kubectl get #查看资源对象

kubectl describe #查看资源对象的详细信息

kubectl apply #创建资源对象

kubectl logs #查看日志

kubectl exec #进入容器

kubectl scale #进行容器的动态伸缩

kubectl explain #查看某些资源对象的某些字段的详细说明 (写yaml文件)

kubectl label #给node或pod打标签

kubectl cluster-info #查看集群状态

kubectl top node/pod #查看node或pod的指标数据

kubectl drain #驱逐node上的pod,用于node下线等场景

kubectl taint #给node标记污点,实现反亲pod与node反亲和性

kubectl api-resources/api-versions/version #api资源

kubectl config #客户端kube-config配置 kubectl config viewkubectl cp #复制文件

kubectl cp /tmp/foo_dir my-pod:/tmp/bar_dir # 将 /tmp/foo_dir 本地目录复制到远程当前命名空间中 Pod 中的 /tmp/bar_dir

kubectl cp /tmp/foo my-pod:/tmp/bar -c my-container # 将 /tmp/foo 本地文件复制到远程 Pod 中特定容器的 /tmp/bar 下

kubectl cp /tmp/foo my-namespace/my-pod:/tmp/bar # 将 /tmp/foo 本地文件复制到远程 “my-namespace” 命名空间内指定 Pod 中的 /tmp/bar

kubectl cp my-namespace/my-pod:/tmp/foo /tmp/bar # 将 /tmp/foo 从远程 Pod 复制到本地 /tmp/barkubectl cordon #警戒线,标记node不被调度;即不会在这个node上新建pod呢

root@k8s-deploy:~/kubernetes-v1.27.x-files/20230416-cases/3.kuboard# kubectl get node

NAME STATUS ROLES AGE VERSION

master1 Ready,SchedulingDisabled master 2d3h v1.27.4

master2 Ready,SchedulingDisabled master 2d3h v1.27.4

node1 Ready node 2d3h v1.27.4

node2 Ready node 2d3h v1.27.4

root@k8s-deploy:~/kubernetes-v1.27.x-files/20230416-cases/3.kuboard# kubectl cordon node1

node/node1 cordoned

root@k8s-deploy:~/kubernetes-v1.27.x-files/20230416-cases/3.kuboard# kubectl get node

NAME STATUS ROLES AGE VERSION

master1 Ready,SchedulingDisabled master 2d3h v1.27.4

master2 Ready,SchedulingDisabled master 2d3h v1.27.4

node1 Ready,SchedulingDisabled node 2d3h v1.27.4

node2 Ready node 2d3h v1.27.4kubectl uncordon #取消警戒标记为cordon的node

root@k8s-deploy:~/kubernetes-v1.27.x-files/20230416-cases/3.kuboard# kubectl get node

NAME STATUS ROLES AGE VERSION

master1 Ready,SchedulingDisabled master 2d3h v1.27.4

master2 Ready,SchedulingDisabled master 2d3h v1.27.4

node1 Ready,SchedulingDisabled node 2d3h v1.27.4

node2 Ready node 2d3h v1.27.4

root@k8s-deploy:~/kubernetes-v1.27.x-files/20230416-cases/3.kuboard# kubectl uncordon node1

node/node1 uncordoned

root@k8s-deploy:~/kubernetes-v1.27.x-files/20230416-cases/3.kuboard# kubectl get node

NAME STATUS ROLES AGE VERSION

master1 Ready,SchedulingDisabled master 2d3h v1.27.4

master2 Ready,SchedulingDisabled master 2d3h v1.27.4

node1 Ready node 2d3h v1.27.4

node2 Ready node 2d3h v1.27.4查看某node上有哪些pod

#查看某node上有哪些pod

kubectl describe nodes nodename

etcd的集群选举机制

etcd基于Raft算法进行集群角色选举,使用Raft的还有Consul、InfluxDB、kafka(KRaft)等

Raft算法是由斯坦福大学的Diego Ongaro(迭戈·翁加罗)和John Ousterhout(约翰·欧斯特霍特)于2013年提出的

Raft算法的设计目标是提供更好的可读性、易用性和可靠性,以便工程师能够更好地理解和实现它,它采用了Paxos算法中的一些核心思想,但通过简化和修改,使其更容易理解和实现,Raft算法的设计理念是使算法尽可能简单化,以便于人们理解和维护,同时也能够提供强大的一致性保证和高可用性

首次选举:

1、各etcd节点启动后默认为 follower角色、默认termID为0、如果发现集群 内没有leader,则会变成 candidate角色并进行选举 leader。

2、candidate(候选节点)向其它候选节点发送投票信息(RequestVote),默认 投票给自己。

3、各候选节点相互收到另外的投票信息(如A收到BC的,B收到AC的,C收到AB 的),然后对比日志是否比自己的更新,如果比自己的更新,

则将自己的选票 投给目的候选人,并回复一个包含自己最新日志信息的响应消息,如果C的日 志更新,

那么将会得到A、B、C的投票,则C全票当选,如果B挂了,得到A、 C的投票,则C超过半票当选。

4、C向其它节点发送自己leader心跳信息,以维护自己的身份(heartbeat- interval、默认100毫秒)。

5、其它节点将角色切换为Follower并向leader同步数据。

6、如果选举超时(election-timeout )、则重新选举,如果选出来两个leader, 则超过集群总数半票的生效。

后期选举:

当一个follower节点在规定时间内未收到leader的消息时,它将转换为 candidate状态,向其他节点发送投票请求(自己的term ID和日志更新记录),

并等待其他节点的响应,如果该candidate的(日志更新记录最新),则会获多 数投票,它将成为新的leader。

新的leader将自己的termID +1 并通告至其它节点。

如果旧的leader恢复了,发现已有新的leader,则加入到已有的leader中并 将自己的term ID更新为和leader一致,在同一个任期内所有节点的term ID是 一致的。

这篇关于fifth work的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!