This article covers deploying a CCE container cluster, giving it access to a Harbor image registry, and publishing a project: deploying the back-end programs and the front-end pages.

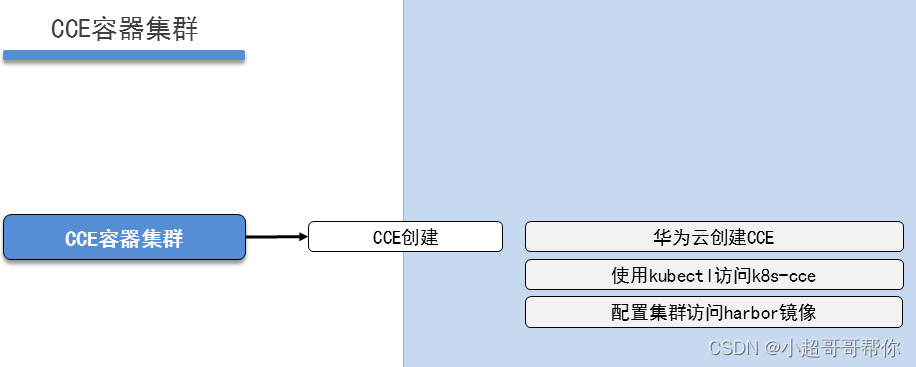

I. CCE Container Cluster: Creating CCE

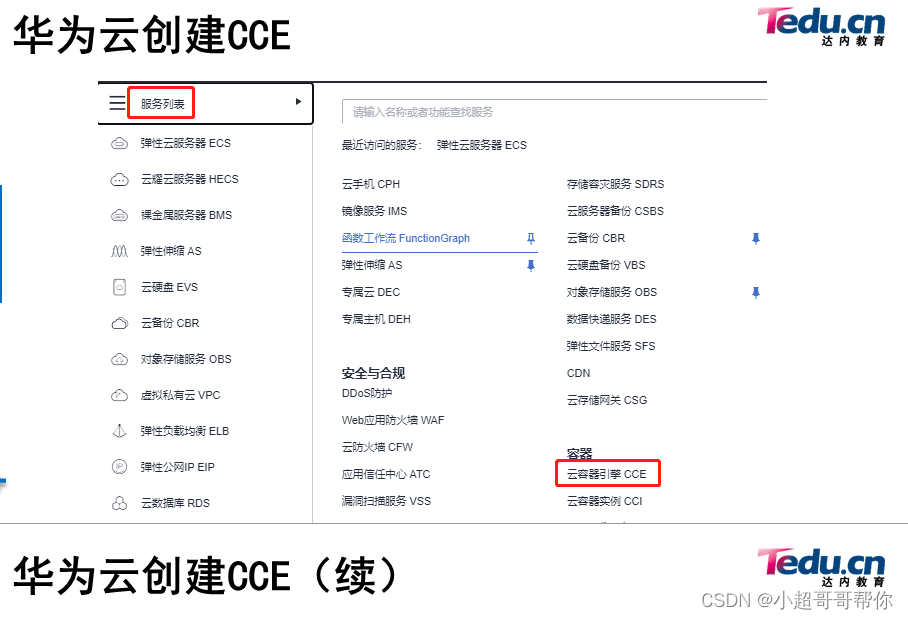

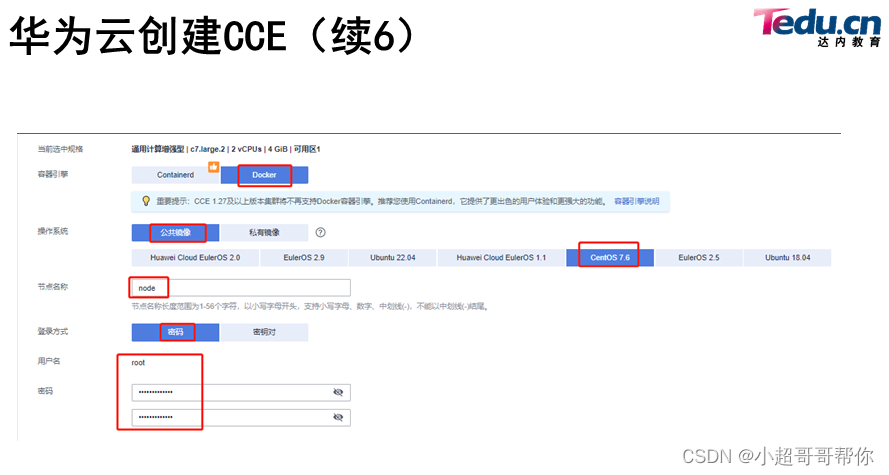

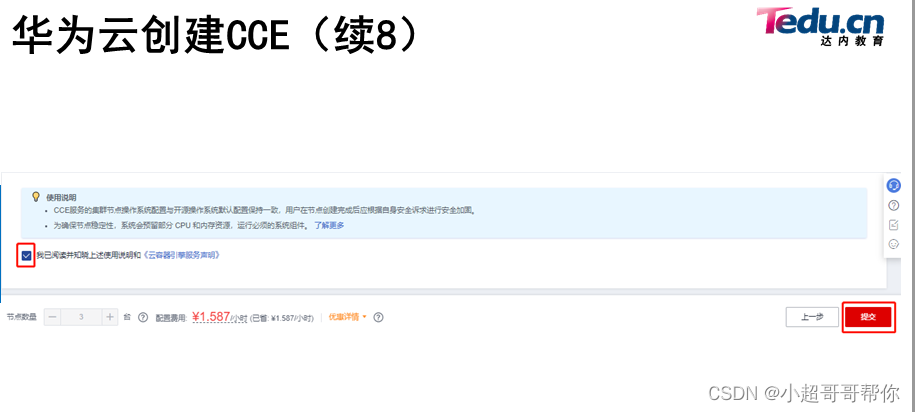

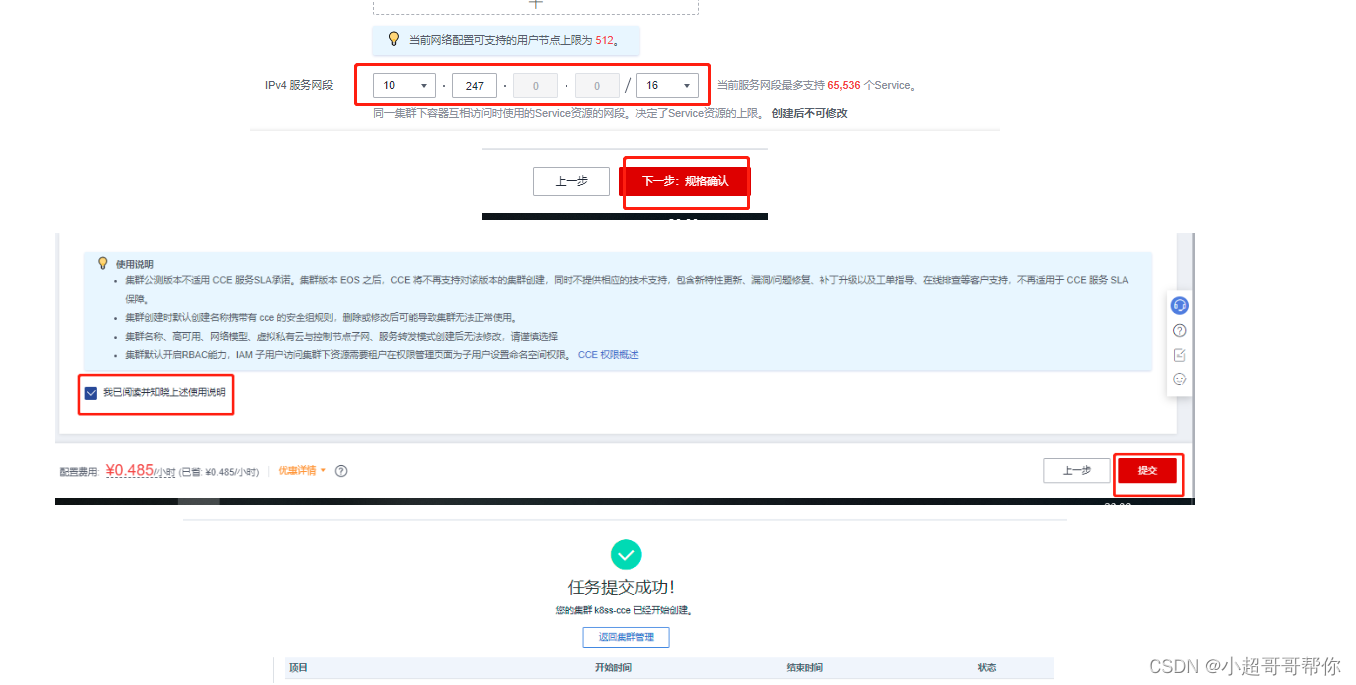

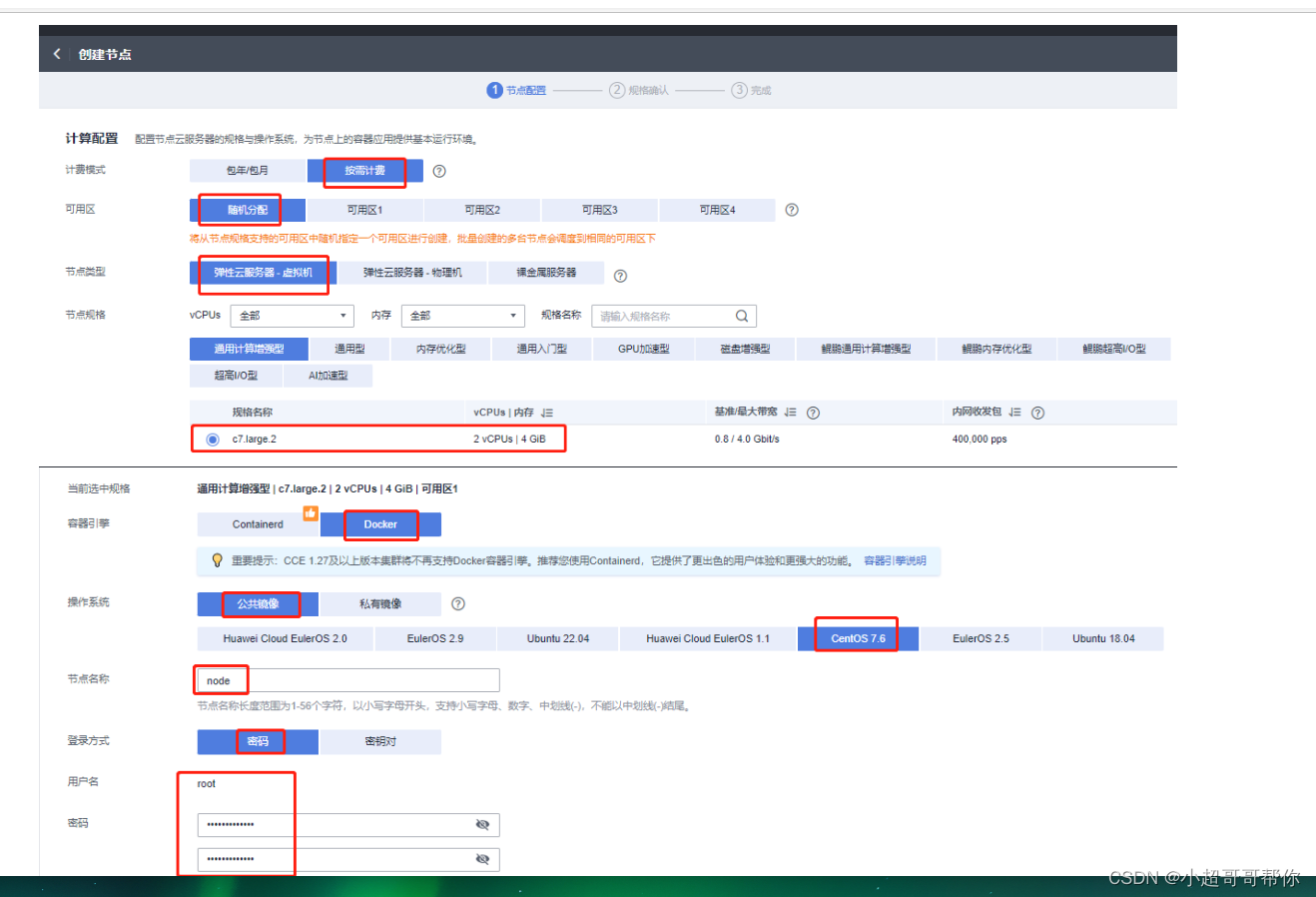

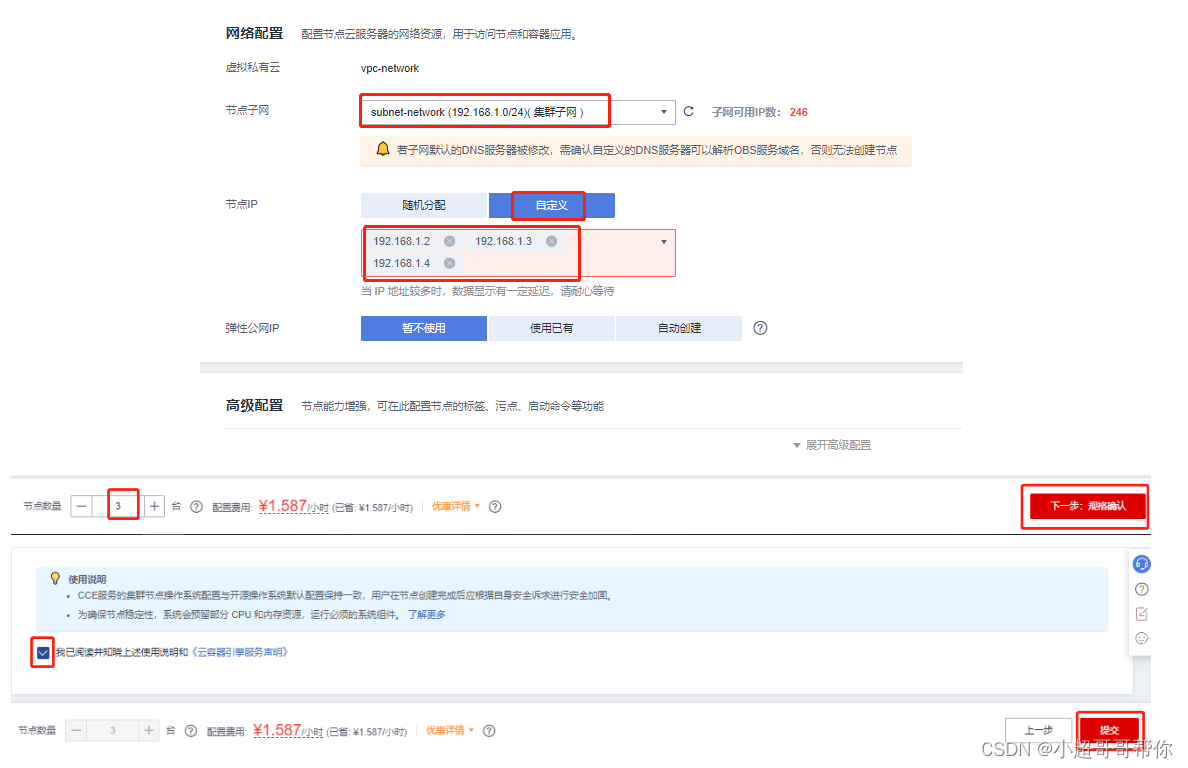

1. Creating CCE on Huawei Cloud

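The k8s cluster is now up, with three worker nodes. To manage the cluster through JumpServer, add the nodes as assets with the same configuration used for the earlier assets and manage them as the `operation` user. The master nodes of a cluster built with CCE cannot be logged into, so manage the cluster with kubectl from another machine, such as the jumpserver host.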


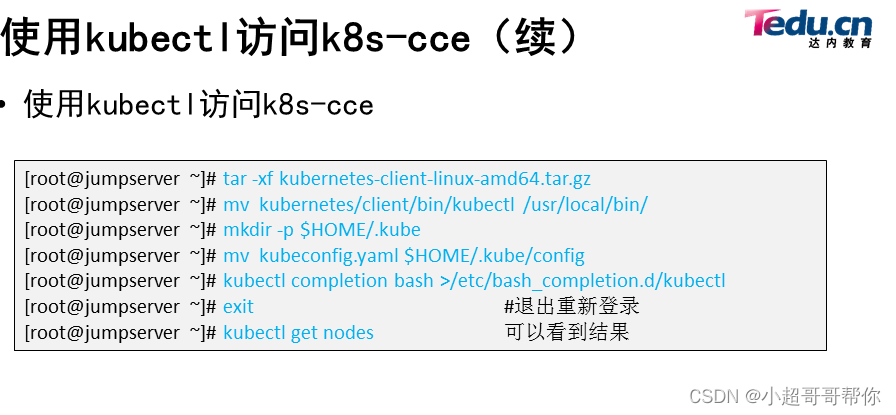

2. Accessing k8s-cce with kubectl

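Download kubectl and the kubectl configuration file, copy them to the jumpserver host and configure them there; kubectl can then access the k8s cluster.

1. Download kubectl from https://dl.k8s.io/v1.28.0-alpha.3/kubernetes-client-linux-amd64.tar.gz. Pick a kubectl that matches the cluster version: kubectl is only supported within one minor version of the cluster's API server, so for the v1.23 cluster below a v1.22 to v1.24 client is the safe choice (the link above is a v1.28 alpha build, which is outside that range).
2. Download the kubectl configuration file: choose a validity period, such as 5 days, then click Download.
3. Install and configure kubectl: upload kubernetes-client-linux-amd64.tar.gz and the kubeconfig.yaml configuration file to /root on the jumpserver host.

```shell
[root@jumpserver ~]# tar -xf kubernetes-client-linux-amd64.tar.gz
[root@jumpserver ~]# mv kubernetes/client/bin/kubectl /usr/local/bin/
```

4. Log in to the jumpserver host and configure kubectl. If you are logged in as root, you can run:

```shell
[root@jumpserver ~]# mkdir -p $HOME/.kube
[root@jumpserver ~]# mv kubeconfig.yaml $HOME/.kube/config
```

5. Set up tab completion for kubectl to save typing:

```shell
[root@jumpserver ~]# kubectl completion bash >/etc/bash_completion.d/kubectl
[root@jumpserver ~]# exit    #log out, then log in again
[root@jumpserver ~]# kubectl get nodes    #the nodes should now be listed
NAME          STATUS   ROLES    AGE   VERSION
192.168.1.2   Ready    <none>   10m   v1.23.8-r0-23.2.26
192.168.1.3   Ready    <none>   10m   v1.23.8-r0-23.2.26
192.168.1.4   Ready    <none>   10m   v1.23.8-r0-23.2.26
```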
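
A small post-install sanity check, sketched in shell under stated assumptions: `skew_ok` compares kubectl's minor version with the cluster's (kubectl is supported within one minor version of the API server), and `all_nodes_ready` reads `kubectl get nodes` output and fails if any node is not `Ready`. The helper names are hypothetical, and using the node VERSION column as a stand-in for the control-plane version is an assumption.

```shell
# Hypothetical helpers for a post-install sanity check (POSIX sh + awk).

# minor_of v1.23.8-r0-23.2.26 -> 23 (assumes a "vMAJOR.MINOR..." string)
minor_of() {
  local v="${1#v}"   # drop the leading "v"
  v="${v#*.}"        # drop "MAJOR."
  echo "${v%%.*}"    # keep MINOR
}

# skew_ok CLIENT SERVER: succeed if the two minor versions differ by at most one
skew_ok() {
  local d
  d=$(( $(minor_of "$1") - $(minor_of "$2") ))
  [ "$d" -ge -1 ] && [ "$d" -le 1 ]
}

# all_nodes_ready: read `kubectl get nodes` output on stdin; succeed only if
# at least one node is listed and every STATUS column is exactly "Ready"
all_nodes_ready() {
  awk 'NR > 1 { n++; if ($2 != "Ready") bad++ }
       END { ok = (n > 0 && bad == 0); exit (ok ? 0 : 1) }'
}
```

For example, `kubectl get nodes | all_nodes_ready && echo "all nodes Ready"`. Given the client version reported by `kubectl version`, `skew_ok v1.28.0-alpha.3 v1.23.8-r0-23.2.26` fails because the two are five minor versions apart.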

3. Configuring the cluster to access the Harbor registry

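From the jumpserver host, connect to the three k8s worker nodes, add a hostname-to-IP mapping for Harbor to the hosts file, and edit daemon.json so the nodes can pull images later. All three nodes need this; one is shown as an example.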

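Method 1: connect through the JumpServer assets configured earlier, then configure the node.

```shell
[root@jumpserver ~]# ssh k8s@192.168.1.252 -p2222
```

Press `p` to list the assets, then enter the ID of a node to log in to it.

```shell
[ks8@node-pkkea ~]$ sudo -s
[root@node-pkkea ks8]# vim /etc/hosts
192.168.1.30 harbor
[root@node-pkkea ks8]# vim /etc/docker/daemon.json
{
    "storage-driver": "overlay2",           #add the comma at the end of this line
    "insecure-registries":["harbor:443"]    #add this line
}
```

The `#` notes are annotations only: JSON does not allow comments, so do not type them into the file.

To add both entries quickly, append the hosts line with a heredoc. Do not append to daemon.json the same way: text written after its closing `}` makes the file invalid JSON, and Docker then fails to start. Insert the key before the final brace instead, for example with sed (this assumes the file's last line ends with that brace):

```shell
cat >>/etc/hosts <<EOF
192.168.1.30 harbor
EOF
sed -i '$ s|}[[:space:]]*$|, "insecure-registries":["harbor:443"]}|' /etc/docker/daemon.json
[root@node-pkkea ks8]# systemctl restart docker
```

Method 2: you can also connect to the nodes directly and configure them.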

```shell
[root@jumpserver ~]# kubectl get nodes    #get the node IP addresses
NAME          STATUS   ROLES    AGE   VERSION
192.168.1.2   Ready    <none>   40m   v1.23.8-r0-23.2.26
192.168.1.3   Ready    <none>   40m   v1.23.8-r0-23.2.26
192.168.1.4   Ready    <none>   40m   v1.23.8-r0-23.2.26
[root@jumpserver ~]# ssh 192.168.1.2
[root@node-pkkea ~]# vim /etc/hosts
192.168.1.30 harbor
[root@node-pkkea ~]# vim /etc/docker/daemon.json
{
    "storage-driver": "overlay2",           #add the comma at the end of this line
    "insecure-registries":["harbor:443"]    #add this line
}
[root@node-pkkea ~]# systemctl restart docker
```
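
The same two edits have to be repeated on all three nodes, and re-running `cat >>` adds duplicate lines. A minimal idempotent sketch (the helper name `harbor_node_setup` is hypothetical) that adds each entry only when it is missing and backs up daemon.json before changing it; it assumes daemon.json is a non-empty JSON object whose last line ends with the closing brace, as in the layout above.

```shell
# Hypothetical helper: idempotently point one node at Harbor.
# Assumes daemon.json is a non-empty JSON object whose last line ends with
# the closing "}". Run as root on each node.
harbor_node_setup() {
  local hosts_file="$1" daemon_file="$2" ip="$3" name="$4" registry="$5"

  # hosts: append "IP NAME" only if that exact line is not already present
  if ! grep -qxF "$ip $name" "$hosts_file"; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi

  # daemon.json: insert the key before the final "}" (never after it), once
  if ! grep -q '"insecure-registries"' "$daemon_file"; then
    cp "$daemon_file" "$daemon_file.bak"
    sed -i '$ s|}[[:space:]]*$|, "insecure-registries":["'"$registry"'"]}|' "$daemon_file"
  fi
}
```

On a node, as root: `harbor_node_setup /etc/hosts /etc/docker/daemon.json 192.168.1.30 harbor harbor:443 && systemctl restart docker`. Running it a second time changes nothing.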

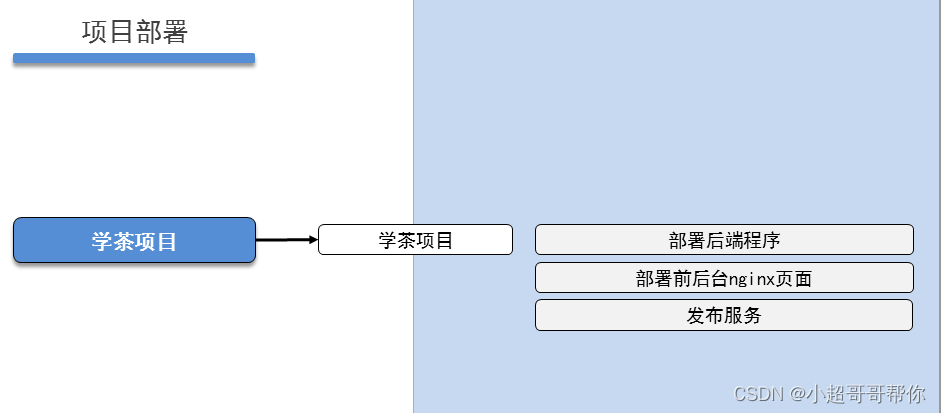

II. Project Deployment: the Xuecha (学茶) Project

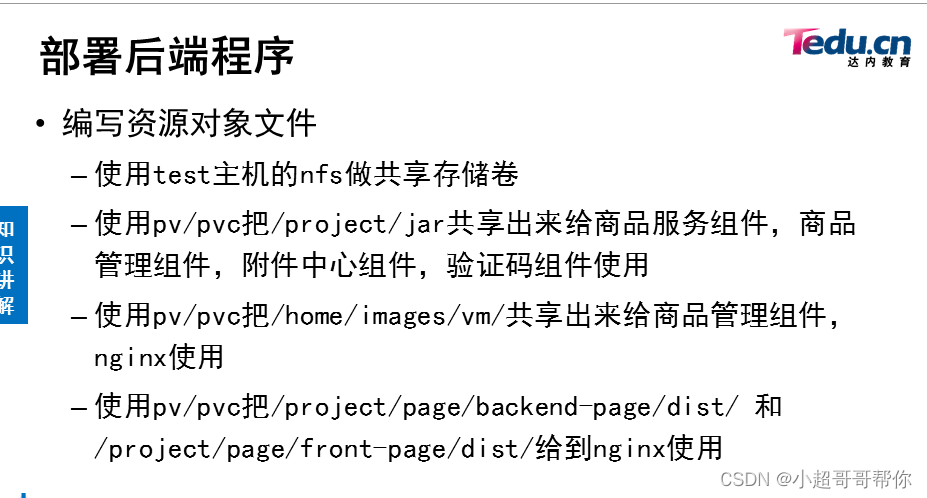

1. Deploying the back-end programs

Manage the k8s cluster from the jumpserver host and write the resource object (manifest) files there:

```shell
[root@jumpserver ~]# mkdir tea-yaml
[root@jumpserver ~]# cd tea-yaml/
```

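Set the timezone so that the time inside the pods' containers stays consistent with the host:

```shell
[root@jumpserver tea-yaml]# vim tz.yaml
```

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: timezone
data:
  timezone: "Asia/Shanghai"
```

```shell
[root@jumpserver tea-yaml]# kubectl apply -f tz.yaml
[root@jumpserver tea-yaml]# kubectl get configmaps
NAME               DATA   AGE
kube-root-ca.crt   1      31m
timezone           1      9s
```

Create the PV and PVC manifest for the jar packages that the programs start from: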

```shell
[root@jumpserver tea-yaml]# vim pv-pvc-jar.yaml
```

```yaml
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-jar
spec:
  volumeMode: Filesystem
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: 192.168.1.101
    path: /project/jar
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-jar
spec:
  #storageClassName: ""
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 2Gi
```

Create the manifest for the passport (verification code) service, which uses the jar PVC:

```shell
[root@jumpserver tea-yaml]# vim passport-jar.yaml
```

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: passport-jar
spec:
  selector:
    matchLabels:
      app: passport-jar
  replicas: 1
  template:
    metadata:
      labels:
        app: passport-jar
    spec:
      volumes:
        - name: passport-jar
          persistentVolumeClaim:
            claimName: pvc-jar
      containers:
        - name: passport-jar
          image: harbor:443/myimg/jar:base
          env:
            - name: TZ
              valueFrom:
                configMapKeyRef:
                  name: timezone
                  key: timezone
          command: ["/bin/bash"]
          # JVM options go before -jar; anything after the jar file is passed to the application
          args:
            - -c
            - |
              java -Dfile.encoding=utf-8 -Xmx128M -Xms128M -Xmn64m -XX:MaxMetaspaceSize=128M -XX:MetaspaceSize=128M -jar /project/jar/passport-provider-1.0-SNAPSHOT.jar --server.port=30094 --spring.profiles.active=vm
          ports:
            - protocol: TCP
              containerPort: 30094
          volumeMounts:
            - name: passport-jar
              mountPath: /project/jar
      restartPolicy: Always
```

Create the manifest for the Xuecha storefront service, which also uses the jar PVC:

```shell
[root@jumpserver tea-yaml]# vim teaserver-jar.yaml
```

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: teaserver-jar
spec:
  selector:
    matchLabels:
      app: teaserver-jar
  replicas: 1
  template:
    metadata:
      labels:
        app: teaserver-jar
    spec:
      volumes:
        - name: teaserver-jar
          persistentVolumeClaim:
            claimName: pvc-jar
      containers:
        - name: teaserver-jar
          image: harbor:443/myimg/jar:base
          env:
            - name: TZ
              valueFrom:
                configMapKeyRef:
                  name: timezone
                  key: timezone
          command: ["/bin/bash"]
          # JVM options go before -jar; anything after the jar file is passed to the application
          args:
            - -c
            - |
              java -Dfile.encoding=utf-8 -Xmx128M -Xms128M -Xmn64m -XX:MaxMetaspaceSize=128M -XX:MetaspaceSize=128M -jar /project/jar/tea-server-admin-1.0.0-SNAPSHOT.jar --server.port=30091 --spring.profiles.active=vm
          ports:
            - protocol: TCP
              containerPort: 30091
          volumeMounts:
            - name: teaserver-jar
              mountPath: /project/jar
      restartPolicy: Always
```

Create the PV and PVC manifest for the images used by the Xuecha admin (back-office) program:

```shell
[root@jumpserver tea-yaml]# vim pv-pvc-image.yaml
```

```yaml
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-image
spec:
  volumeMode: Filesystem
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: 192.168.1.101
    path: /home/images/vm/
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: image
spec:
  #storageClassName: ""
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 2Gi
```

Create the manifest for the Xuecha admin program, which uses both the jar PVC and the image PVC:

```shell
[root@jumpserver tea-yaml]# vim teaadmin-jar.yaml
```

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: teaadmin-jar
spec:
  selector:
    matchLabels:
      app: teaadmin-jar
  replicas: 1
  template:
    metadata:
      labels:
        app: teaadmin-jar
    spec:
      volumes:
        - name: teaadmin-jar
          persistentVolumeClaim:
            claimName: pvc-jar
        - name: image
          persistentVolumeClaim:
            claimName: image
      containers:
        - name: teaadmin-jar
          image: harbor:443/myimg/jar:base
          env:
            - name: TZ
              valueFrom:
                configMapKeyRef:
                  name: timezone
                  key: timezone
          command: ["/bin/bash"]
          # JVM options go before -jar; anything after the jar file is passed to the application
          args:
            - -c
            - |
              java -Dfile.encoding=utf-8 -Xmx128M -Xms128M -Xmn64m -XX:MaxMetaspaceSize=128M -XX:MetaspaceSize=128M -jar /project/jar/tea-admin-main-1.0.0-SNAPSHOT.jar --server.port=30092 --spring.profiles.active=vm
          ports:
            - protocol: TCP
              containerPort: 30092
          volumeMounts:
            - name: teaadmin-jar
              mountPath: /project/jar
            - name: image
              mountPath: /home/images/vm/
      restartPolicy: Always
```

Create the manifest for the Xuecha admin attachment center, which uses the jar PVC:

```shell
[root@jumpserver tea-yaml]# vim attach-jar.yaml
```

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: attache-jar
spec:
  selector:
    matchLabels:
      app: attache-jar
  replicas: 1
  template:
    metadata:
      labels:
        app: attache-jar
    spec:
      volumes:
        - name: attach-jar
          persistentVolumeClaim:
            claimName: pvc-jar
      containers:
        - name: attache-jar
          image: harbor:443/myimg/jar:base
          env:
            - name: TZ
              valueFrom:
                configMapKeyRef:
                  name: timezone
                  key: timezone
          command: ["/bin/bash"]
          # JVM options go before -jar; anything after the jar file is passed to the application
          args:
            - -c
            - |
              java -Dfile.encoding=utf-8 -Xmx128M -Xms128M -Xmn64m -XX:MaxMetaspaceSize=128M -XX:MetaspaceSize=128M -jar /project/jar/attach-server-main-1.0.0-SNAPSHOT.jar --server.port=30093 --spring.profiles.active=vm
          ports:
            - protocol: TCP
              containerPort: 30093
          volumeMounts:
            - name: attach-jar
              mountPath: /project/jar
      restartPolicy: Always
```

```shell
[root@jumpserver tea-yaml]# kubectl apply -f pv-pvc-jar.yaml
[root@jumpserver tea-yaml]# kubectl apply -f passport-jar.yaml
[root@jumpserver tea-yaml]# kubectl apply -f teaserver-jar.yaml
[root@jumpserver tea-yaml]# kubectl apply -f pv-pvc-image.yaml
[root@jumpserver tea-yaml]# kubectl apply -f teaadmin-jar.yaml
[root@jumpserver tea-yaml]# kubectl apply -f attach-jar.yaml
```

Create the corresponding Services for the back-end jars:

```shell
[root@jumpserver tea-yaml]# vim passport-service.yaml
```

```yaml
---
apiVersion: v1
kind: Service
metadata:
  name: passport-service
spec:
  ports:
    - protocol: TCP
      port: 30094
      targetPort: 30094
  selector:
    app: passport-jar
  type: ClusterIP
```

```shell
[root@jumpserver tea-yaml]# vim teaserver-service.yaml
```

```yaml
---
apiVersion: v1
kind: Service
metadata:
  name: teaserver-service
spec:
  ports:
    - protocol: TCP
      port: 30091
      targetPort: 30091
  selector:
    app: teaserver-jar
  type: ClusterIP
```

```shell
[root@jumpserver tea-yaml]# vim teaadmin-service.yaml
```

```yaml
---
apiVersion: v1
kind: Service
metadata:
  name: teaadmin-service
spec:
  ports:
    - protocol: TCP
      port: 30092
      targetPort: 30092
  selector:
    app: teaadmin-jar
  type: ClusterIP
```

Create the Services for the jars:

```shell
[root@jumpserver tea-yaml]# kubectl apply -f passport-service.yaml
[root@jumpserver tea-yaml]# kubectl apply -f teaserver-service.yaml
[root@jumpserver tea-yaml]# kubectl apply -f teaadmin-service.yaml
[root@jumpserver tea-yaml]# kubectl get service
NAME                TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)     AGE
kubernetes          ClusterIP   10.247.0.1       <none>        443/TCP     79m
passport-service    ClusterIP   10.247.184.212   <none>        30094/TCP   4m9s
teaadmin-service    ClusterIP   10.247.181.110   <none>        30092/TCP   55s
teaserver-service   ClusterIP   10.247.248.165   <none>        30091/TCP   2m19s
```
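
The four jar Deployments and three ClusterIP Services above differ only in names, jar file and port, so they can be generated instead of copied. This is a sketch with hypothetical helper names; the image, PVC name, mount path and JVM flags are taken from the manifests above, and the JVM options are placed before `-jar` because anything after the jar file is passed to the application rather than to the JVM. teaadmin-jar also mounts the `image` PVC, which this generator does not cover.

```shell
# Hypothetical generators; values copied from the manifests in this section.
# gen_jar_deployment NAME JAR PORT  -> Deployment YAML on stdout
gen_jar_deployment() {
  local name="$1" jar="$2" port="$3"
  cat <<EOF
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $name
spec:
  selector:
    matchLabels:
      app: $name
  replicas: 1
  template:
    metadata:
      labels:
        app: $name
    spec:
      volumes:
        - name: $name
          persistentVolumeClaim:
            claimName: pvc-jar
      containers:
        - name: $name
          image: harbor:443/myimg/jar:base
          env:
            - name: TZ
              valueFrom:
                configMapKeyRef:
                  name: timezone
                  key: timezone
          command: ["/bin/bash"]
          args:
            - -c
            - |
              java -Dfile.encoding=utf-8 -Xmx128M -Xms128M -Xmn64m -XX:MaxMetaspaceSize=128M -XX:MetaspaceSize=128M -jar /project/jar/$jar --server.port=$port --spring.profiles.active=vm
          ports:
            - protocol: TCP
              containerPort: $port
          volumeMounts:
            - name: $name
              mountPath: /project/jar
      restartPolicy: Always
EOF
}

# gen_clusterip_service NAME APP PORT -> ClusterIP Service YAML on stdout
gen_clusterip_service() {
  local name="$1" app="$2" port="$3"
  cat <<EOF
---
apiVersion: v1
kind: Service
metadata:
  name: $name
spec:
  ports:
    - protocol: TCP
      port: $port
      targetPort: $port
  selector:
    app: $app
  type: ClusterIP
EOF
}
```

The output can be piped straight into kubectl, for example `gen_jar_deployment passport-jar passport-provider-1.0-SNAPSHOT.jar 30094 | kubectl apply -f -` and `gen_clusterip_service passport-service passport-jar 30094 | kubectl apply -f -`.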

2. Deploying the front-end and back-end pages with nginx

Create the PVs and PVCs for the Xuecha admin pages and storefront pages:

```shell
[root@jumpserver tea-yaml]# vim pv-pvc-backendpage.yaml
```

```yaml
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pageadmin
spec:
  volumeMode: Filesystem
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: 192.168.1.101
    path: /project/page/backend-page/dist/
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pageadmin
spec:
  #storageClassName: ""
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 2Gi
```

```shell
[root@jumpserver tea-yaml]# vim pv-pvc-frontpage.yaml
```

```yaml
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pagefront
spec:
  volumeMode: Filesystem
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: 192.168.1.101
    path: /project/page/front-page/dist/
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pagefront
spec:
  #storageClassName: ""
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 2Gi
```
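
All four PV/PVC pairs in this guide follow one pattern. Below is a generator sketch (hypothetical helper name) that deliberately differs from the manifests above in two ways: it sets `storageClassName: ""` so that a default StorageClass, if the cluster has one, is not assigned to the claim, and it sets `volumeName` so each claim binds to its own PV. Without that pin, a claim may bind to any free PV with a matching size and access mode, and all four PVs here are 5Gi ReadWriteMany.

```shell
# Hypothetical generator: static NFS PV plus a PVC pinned to it.
# NFS server, sizes and access mode are copied from the manifests above.
# gen_nfs_pv_pvc PV_NAME PVC_NAME NFS_PATH -> two YAML documents on stdout
gen_nfs_pv_pvc() {
  local pv="$1" pvc="$2" path="$3"
  cat <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: $pv
spec:
  storageClassName: ""
  volumeMode: Filesystem
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: 192.168.1.101
    path: $path
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $pvc
spec:
  storageClassName: ""   # do not fall back to a default StorageClass
  volumeName: $pv        # bind to exactly this PV
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 2Gi
EOF
}
```

For example: `gen_nfs_pv_pvc pagefront pagefront /project/page/front-page/dist/ | kubectl apply -f -`.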

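```shell
[root@jumpserver tea-yaml]# kubectl apply -f pv-pvc-backendpage.yaml
[root@jumpserver tea-yaml]# kubectl apply -f pv-pvc-frontpage.yaml
```

Next, get the nginx configuration file in order to deploy the front-end and back-end pages (with their proxying to the back ends) and the image site on nginx. First make the jumpserver host able to pull from Harbor: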

```shell
[root@jumpserver tea-yaml]# vim /etc/hosts
...
192.168.1.30 harbor
[root@jumpserver tea-yaml]# vim /etc/docker/daemon.json    #keep only the following content
{
    "insecure-registries":["harbor:443"]
}
[root@jumpserver tea-yaml]# systemctl restart docker
[root@jumpserver tea-yaml]# docker run -itd --name nginx harbor:443/myimg/tea:nginx
[root@jumpserver tea-yaml]# docker cp nginx:/usr/local/nginx/conf/nginx.conf ./
```

```shell
[root@jumpserver tea-yaml]# vim nginx.conf
```

```nginx
...
# Allow request bodies (file uploads) of up to 30 MB
client_body_buffer_size 30m;
client_max_body_size 30m;
...
# Add the following inside the last closing brace of the file
server {    # image site
    listen 30080;
    server_name __;
    location / {
        root /home/images/vm/;
        index index.html index.htm;
    }
}
server {    # storefront pages
    listen 30091;
    server_name __;
    location / {
        root "/project/page/front-page/dist/";
        index index.html;
    }
    location /api/ {
        proxy_pass http://teaserver-service:30091/;
    }
    location /passport-api/ {
        proxy_pass http://passport-service:30094/;
    }
}
server {    # admin back-office pages
    listen 30092;
    server_name __;
    location / {
        root "/project/page/backend-page/dist/";
        index index.html;
    }
    location /api/ {
        proxy_pass http://teaadmin-service:30092/;
    }
    location /to_passport/ {
        proxy_pass http://passport-service:30094/;
    }
}
}    # this last brace already exists in the file; do not copy it
```

Create the nginx ConfigMap:

```shell
[root@jumpserver tea-yaml]# kubectl create configmap nginx --from-file=nginx.conf
```

Write the nginx Deployment manifest, which references the nginx ConfigMap:

```shell
[root@jumpserver tea-yaml]# vim nginx.yaml
```

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 1
  template:
    metadata:
      labels:
        app: nginx
    spec:
      volumes:
        - name: nginx
          configMap:
            name: nginx
        - name: pageadmin
          persistentVolumeClaim:
            claimName: pageadmin
        - name: pagefront
          persistentVolumeClaim:
            claimName: pagefront
        - name: image
          persistentVolumeClaim:
            claimName: image
      containers:
        - name: nginx
          image: harbor:443/myimg/tea:nginx
          env:
            - name: TZ
              valueFrom:
                configMapKeyRef:
                  name: timezone
                  key: timezone
          ports:
            - name: image-30080
              protocol: TCP
              containerPort: 30080
            - name: teaserver-30091
              protocol: TCP
              containerPort: 30091
            - name: teaadmin-30092
              protocol: TCP
              containerPort: 30092
          volumeMounts:
            - name: nginx
              mountPath: /usr/local/nginx/conf/nginx.conf
              subPath: nginx.conf
            - name: image
              mountPath: /home/images/vm/
            - name: pagefront
              mountPath: /project/page/front-page/dist/
            - name: pageadmin
              mountPath: /project/page/backend-page/dist/
      restartPolicy: Always
```

Create the nginx Service manifest:

```shell
[root@jumpserver tea-yaml]# vim nginx-service.yaml
```

```yaml
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  ports:
    - name: image-30080
      protocol: TCP
      port: 30080
      targetPort: 30080
      nodePort: 30080
    - name: teaserver-30091
      protocol: TCP
      port: 30091
      targetPort: 30091
      nodePort: 30091
    - name: teadmin-30092
      protocol: TCP
      port: 30092
      targetPort: 30092
      nodePort: 30092
  selector:
    app: nginx
  type: NodePort
```

```shell
[root@jumpserver tea-yaml]# kubectl apply -f nginx.yaml
[root@jumpserver tea-yaml]# kubectl apply -f nginx-service.yaml
```
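
nginx resolves the hostnames in static `proxy_pass` directives when it loads its configuration and refuses to start if one cannot be resolved, so the back-end Services must exist before the nginx pod starts. Below is a sketch (hypothetical helper names) that lists the upstream hosts in nginx.conf and reports any that have no matching Service in `kubectl get svc -o name` output. It assumes upstreams are referenced by bare Service names in the same namespace, as in this nginx.conf.

```shell
# Hypothetical helpers. upstream_hosts CONF lists the hostnames used in
# "proxy_pass http(s)://HOST..." directives (several per line are fine).
upstream_hosts() {
  grep -oE 'proxy_pass[[:space:]]+https?://[^:/;[:space:]]+' "$1" |
    sed 's|.*://||' | sort -u
}

# missing_services CONF: read `kubectl get svc -o name` output on stdin and
# print every upstream host in CONF that has no Service of the same name.
missing_services() {
  local have
  have=$(sed 's|^service/||')   # "service/passport-service" -> "passport-service"
  upstream_hosts "$1" | while read -r h; do
    printf '%s\n' "$have" | grep -qxF "$h" || echo "$h"
  done
}
```

`kubectl get svc -o name | missing_services nginx.conf` prints nothing when every upstream Service exists.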

3. Publishing the services

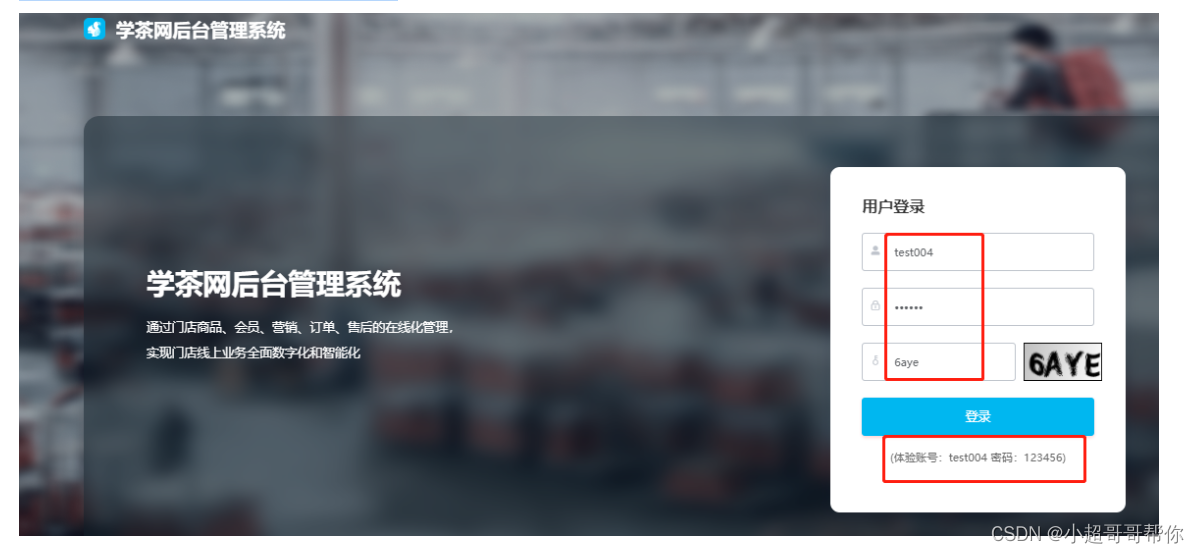

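Publish the NodePort Service through an ELB load balancer: create listeners for ports 30080, 30091 and 30092, using the same port on the listener (front end) and the backend side, and select the three CCE worker nodes as backend servers. The image site is then available at http://ELB_IP:30080/tea_attach/dog.jpg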
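
Once the ELB listeners are in place, a quick TCP-level check can be run from any host that can reach the ELB. This is a sketch with hypothetical helper names that relies on bash's `/dev/tcp` and coreutils `timeout`; it only shows that a port accepts connections, not that the page behind it is served correctly.

```shell
# Hypothetical helpers. port_open HOST PORT succeeds if a TCP connection can
# be opened within 3 seconds (bash /dev/tcp, invoked via `bash -c`).
port_open() {
  timeout 3 bash -c ': > "/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}

# check_elb IP [PORT...]: report each port; defaults to the three ports
# published above. Returns non-zero if any port is unreachable.
check_elb() {
  local ip="$1" port rc=0
  shift
  [ "$#" -gt 0 ] || set -- 30080 30091 30092
  for port in "$@"; do
    if port_open "$ip" "$port"; then
      echo "$ip:$port open"
    else
      echo "$ip:$port unreachable"
      rc=1
    fi
  done
  return "$rc"
}
```

`check_elb ELB_IP` checks 30080, 30091 and 30092; pass explicit ports after the IP to check others.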

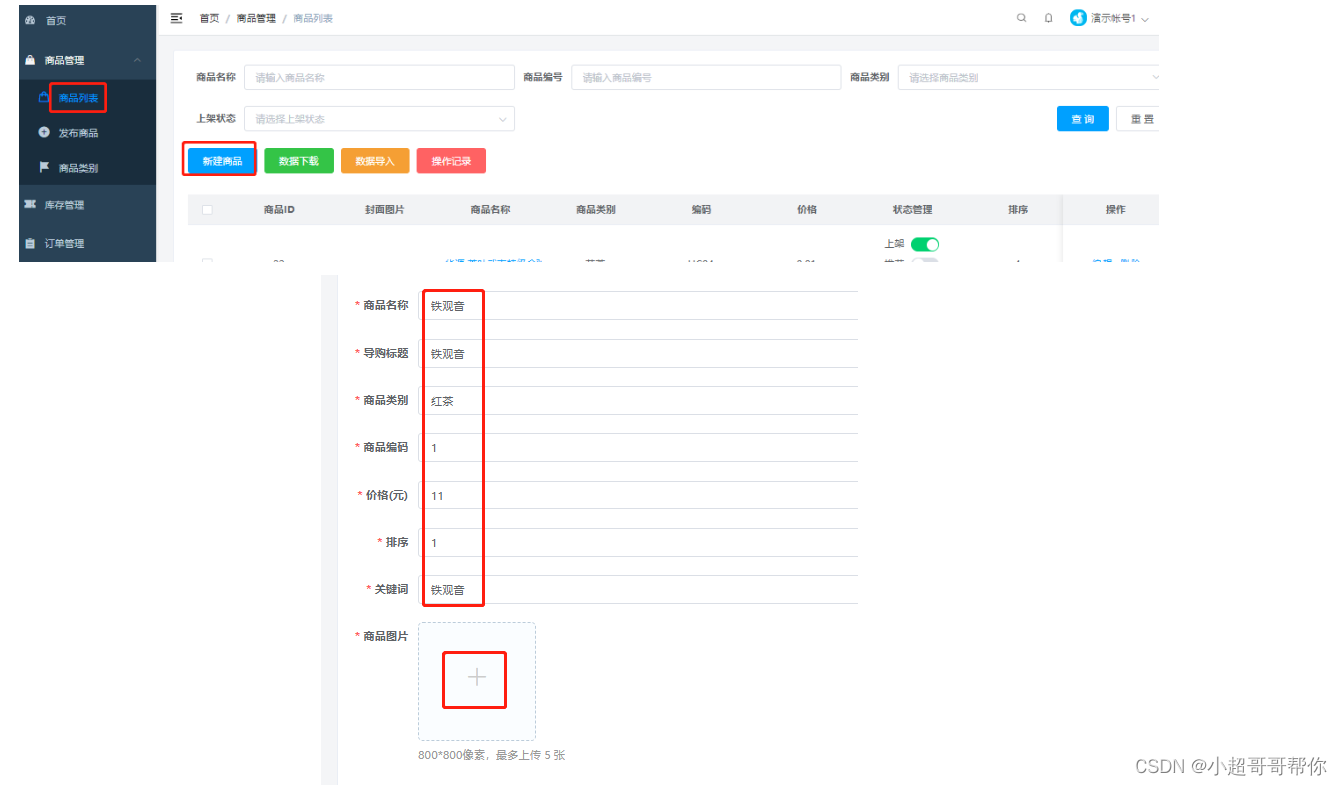

Xuecha mall admin page: http://ELB_IP:30092

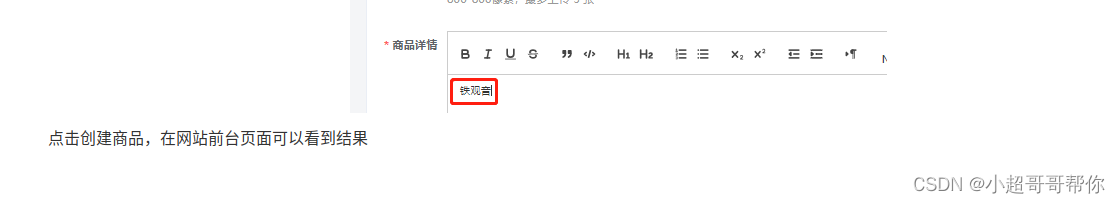

Publishing a new product
