This article introduces end-to-end autonomous driving decision-making based on the Udacity simulator.

1 End-to-end autonomous driving decision-making

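In end-to-end autonomous driving decision-making, the input is the vehicle's perception data, such as camera images, and the output is a control command for the vehicle, such as the front-wheel steering angle.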

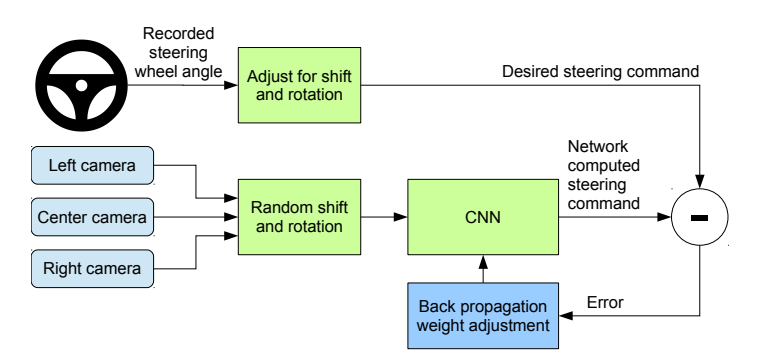

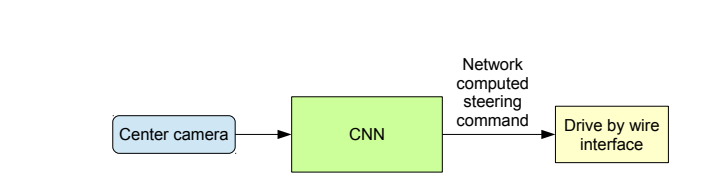

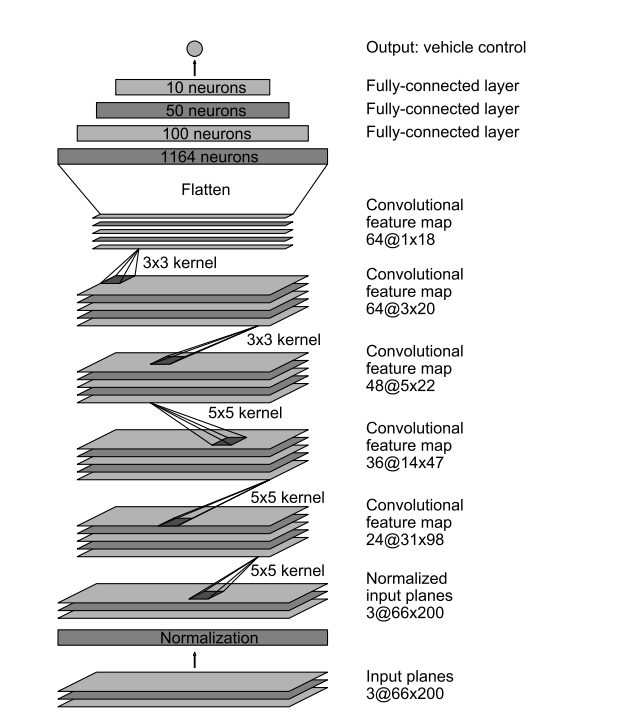

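The figures above show NVIDIA's end-to-end autonomous driving decision framework and its CNN. The network consists of one normalization layer, five convolutional layers, and three fully connected layers.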

2 Introduction to the Udacity simulator

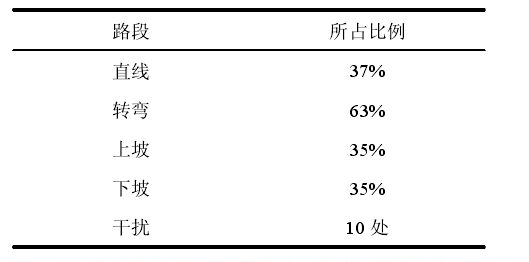

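Udacity self-driving-car-sim is an open-source car simulator from Udacity, used mainly for autonomous driving simulation experiments. The built-in vehicle records key data such as camera images of the track and the steering angle, and the 'driver' controls the steering, throttle, and brake. The simulator has two built-in tracks. The first is a simple circular road on a sunny day, flat throughout and free of obstacles. The second is a complex mountain road with an undulating surface, many sharp bends, and many disturbed sections, including strong disturbances from shadows, backlighting, and obstructed lines of sight.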

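The second track has about 40 bends, ranging from gentle curves to round bends, and about 10 strongly disturbed sections, such as turns on uneven road surfaces and downhill stretches where the road in the distance interferes with the line of sight.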

3 Training data collection

-

下载模拟器后点击TRAINING MODE(训练模式)

-

进入TRAINING MODE后点击右上角的RECORD选择训练数据保存的位置

-

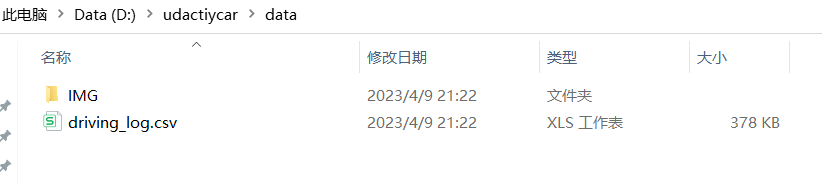

再次点击RECORD,当其变为RECORDING时,即可手动驾驶车辆,手动驾驶两到三圈,点击右上角的RECORDING,即可采集数据,采集的数据格式如下。

4 Data preprocessing

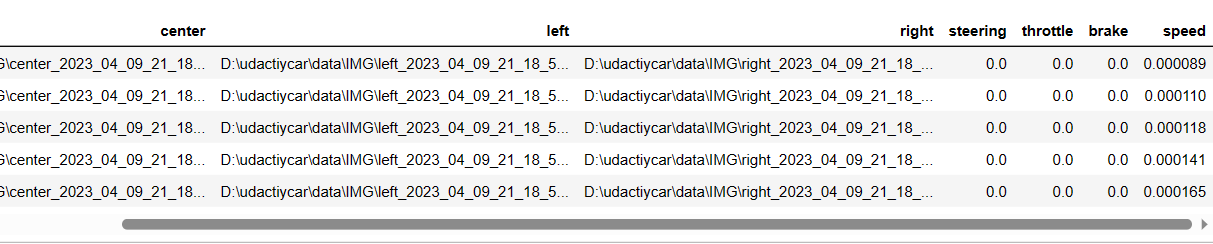

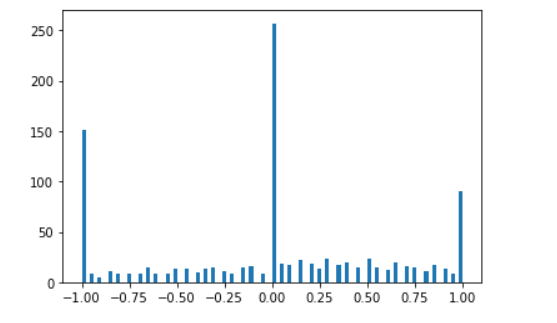

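Analysis of the raw data shows that, although the training track contains many bends, the steering-angle distribution (see the figure) is dominated by near-straight driving: samples with a steering angle close to 0 far outnumber all others. A model trained on this data may therefore be biased toward driving straight.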

Load the data

# load data csv

import pandas as pd

import matplotlib.pyplot as plt

data_folder = 'data/'

drive_log = pd.read_csv(data_folder + 'driving_log.csv')

drive_log.head()
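The snippets in this article access columns named `center`, `left`, `right`, and `steering`, so `driving_log.csv` is assumed to have a header row. If a recording has no header, the names can be supplied when reading. The sketch below assumes a seven-column order (center, left, right, steering, throttle, brake, speed); `read_log` is a hypothetical helper and the sample rows are made up, so check both against your own file.

```python
import io

import pandas as pd

# Assumed column order for a header-less driving_log.csv (verify against your file).
LOG_COLUMNS = ['center', 'left', 'right', 'steering', 'throttle', 'brake', 'speed']

# Made-up rows standing in for a real recording.
sample_csv = io.StringIO(
    "IMG/center_0.jpg, IMG/left_0.jpg, IMG/right_0.jpg, 0.0, 0.5, 0.0, 12.3\n"
    "IMG/center_1.jpg, IMG/left_1.jpg, IMG/right_1.jpg, -0.15, 0.6, 0.0, 14.1\n"
)


def read_log(source):
    """Read a header-less log and strip stray spaces from the image paths."""
    log = pd.read_csv(source, header=None, names=LOG_COLUMNS, skipinitialspace=True)
    for col in ['center', 'left', 'right']:
        log[col] = log[col].str.strip()
    return log


sample_log = read_log(sample_csv)
print(sample_log[['center', 'steering']])
```

With the names in place, the rest of the article's code can index `sample_log['steering']` in the same way as `drive_log['steering']`.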

Inspect the original steering-angle distribution

# plot the distribution

plt.hist(drive_log['steering'], bins=100)

plt.show()

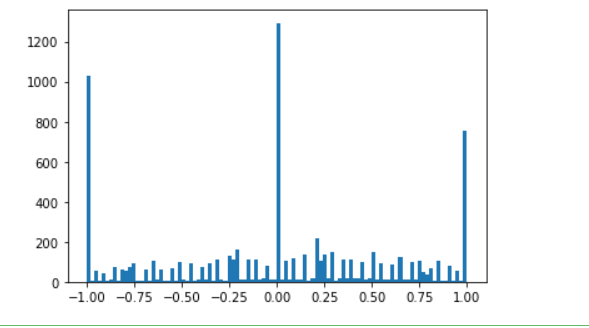

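Keep only 20% of the samples whose steering angle is 0

# DataFrame.append was removed in pandas 2.0; pd.concat does the same job

drive_log = pd.concat([drive_log[drive_log['steering'] != 0], drive_log[drive_log['steering'] == 0].sample(frac=0.2)])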

plt.hist(drive_log['steering'], bins=100)

plt.show()

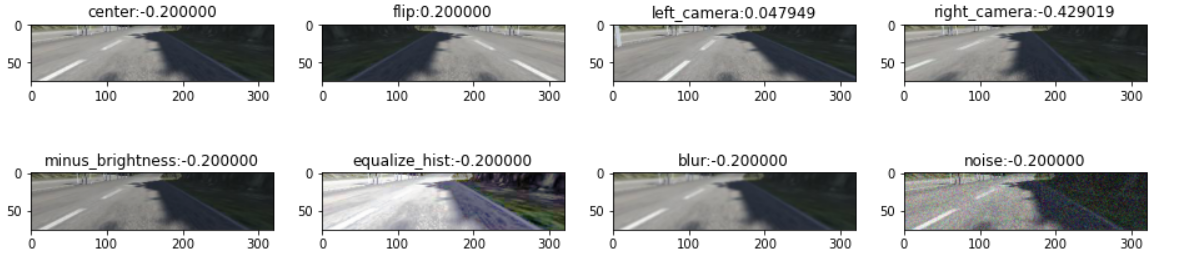
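The balancing step can be tried on a small synthetic log before running it on real data. The sketch below is illustrative only: `downsample_zero_steering` is a hypothetical helper, the toy values are made up, and the fixed `random_state` and the final `sort_index()` (which restores recording order) are optional choices that the article's own code does not make.

```python
import pandas as pd


def downsample_zero_steering(log, keep_frac=0.2, seed=0):
    """Keep every non-zero steering row and a random fraction of the zero rows."""
    nonzero = log[log['steering'] != 0]
    zero = log[log['steering'] == 0].sample(frac=keep_frac, random_state=seed)
    # Sorting by index restores the original recording order (optional).
    return pd.concat([nonzero, zero]).sort_index()


# Synthetic log: 100 straight-driving rows followed by 20 turning rows.
toy_log = pd.DataFrame({'steering': [0.0] * 100 + [0.1, -0.1] * 10})
balanced = downsample_zero_steering(toy_log)

n_zero = int((balanced['steering'] == 0).sum())
n_turn = int((balanced['steering'] != 0).sum())
print(n_zero, n_turn)
```

In this toy log the straight-driving rows drop from 100 to 20, while all 20 turning rows are kept.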

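After keeping 20% of the zero-steering samples, the left and right camera images are used, with a steering-angle correction, for data augmentation.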
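The label arithmetic behind this augmentation is small enough to isolate. The sketch below uses the same constants as the article's code (an offset of 0.2 plus up to 0.05 of random jitter, clipped to the range [-1, 1]) and negates the angle for a mirrored image. The function names are hypothetical and no images are involved.

```python
import random


def side_camera_angles(center_ang, offset=0.2, jitter=0.05, rng=random):
    """Return (left_ang, right_ang) labels for the side cameras, clipped to [-1, 1]."""
    left_ang = min(center_ang + offset + jitter * rng.random(), 1.0)
    right_ang = max(center_ang - offset - jitter * rng.random(), -1.0)
    return left_ang, right_ang


def flipped_angle(center_ang):
    """A horizontally mirrored image gets the opposite steering label."""
    return -center_ang


left_ang, right_ang = side_camera_angles(0.1)
print(left_ang, right_ang, flipped_angle(0.1))
```

The jitter stops the side-camera labels from clustering at exactly ±0.2 around the center label, and the clipping keeps them within the range of the steering values.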

import numpy as np

from skimage import io, color, exposure, filters, img_as_ubyte

from skimage.transform import resize

from skimage.util import random_noise

data_folder = ''

def generate_data(line):
    type2data = {}

    # center image
    center_img = io.imread(data_folder + line['center'].strip())
    center_ang = line['steering']
    type2data['center'] = (center_img, center_ang)

    # flip image if steering is not 0
    if line['steering']:
        flip_img = center_img[:, ::-1]
        flip_ang = center_ang * -1
        type2data['flip'] = (flip_img, flip_ang)

    # left image
    left_img = io.imread(data_folder + line['left'].strip())
    left_ang = center_ang + .2 + .05 * np.random.random()
    left_ang = min(left_ang, 1)
    type2data['left_camera'] = (left_img, left_ang)

    # right image
    right_img = io.imread(data_folder + line['right'].strip())
    right_ang = center_ang - .2 - .05 * np.random.random()
    right_ang = max(right_ang, -1)
    type2data['right_camera'] = (right_img, right_ang)

    # minus brightness
    aug_img = color.rgb2hsv(center_img)
    aug_img[:, :, 2] *= .5 + .4 * np.random.uniform()
    aug_img = img_as_ubyte(color.hsv2rgb(aug_img))
    aug_ang = center_ang
    type2data['minus_brightness'] = (aug_img, aug_ang)

    # equalize_hist
    aug_img = np.copy(center_img)
    for channel in range(aug_img.shape[2]):
        aug_img[:, :, channel] = exposure.equalize_hist(aug_img[:, :, channel]) * 255
    aug_ang = center_ang
    type2data['equalize_hist'] = (aug_img, aug_ang)

    # blur image
    # (newer scikit-image releases replace multichannel=True with channel_axis=-1)
    blur_img = img_as_ubyte(np.clip(filters.gaussian(center_img, multichannel=True), -1, 1))
    blur_ang = center_ang
    type2data['blur'] = (blur_img, blur_ang)

    # noise image
    noise_img = img_as_ubyte(random_noise(center_img, mode='gaussian'))
    noise_ang = center_ang
    type2data['noise'] = (noise_img, noise_ang)

    # crop all images
    for name, (img, ang) in type2data.items():
        img = img[60: -25, ...]
        type2data[name] = (img, ang)

    return type2data


def show_data(type2data):
    col = 4
    # number of rows needed to fit every image (rounded up)
    row = (len(type2data) + col - 1) // col
    f, axarr = plt.subplots(row, col, figsize=(16, 4), squeeze=False)
    for idx, (name, (img, ang)) in enumerate(type2data.items()):
        axarr[idx//col, idx%col].set_title('{}:{:f}'.format(name, ang))
        axarr[idx//col, idx%col].imshow(img)
    plt.show()


type2data = generate_data(drive_log.iloc[0])

show_data(type2data)
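The flip and crop steps can be checked on a synthetic frame without reading any files. The sketch below assumes a 160x320 RGB frame, the height at which removing the top 60 and bottom 25 rows leaves the 75 rows expected by the model's `input_shape=(75, 320, 3)`. `flip_and_crop` is a hypothetical helper that combines the two operations.

```python
import numpy as np


def flip_and_crop(img, ang, top=60, bottom=25):
    """Mirror the image left-to-right, negate the angle, then crop rows."""
    flipped = img[:, ::-1]
    cropped = flipped[top:-bottom, ...]
    return cropped, -ang


# Synthetic frame (assumed 160x320 RGB) with a bright stripe on the left edge.
frame = np.zeros((160, 320, 3), dtype=np.uint8)
frame[:, :10] = 255

out_img, out_ang = flip_and_crop(frame, 0.25)
print(out_img.shape, out_ang)
```

After the flip the stripe sits on the right edge, and the cropped height is 160 - 60 - 25 = 75 rows.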

Save the training data

import warnings

with warnings.catch_warnings():
    warnings.simplefilter("ignore")
    X_train, y_train = [], []
    for idx, row in drive_log.iterrows():
        type2data = generate_data(row)
        for img, ang in type2data.values():
            X_train.append(img)
            y_train.append(ang)

X_train = np.array(X_train)

y_train = np.array(y_train)

np.save('X_train', X_train)

np.save('y_train', y_train)

Distribution of the training data

plt.hist(y_train, bins=100)

plt.show()

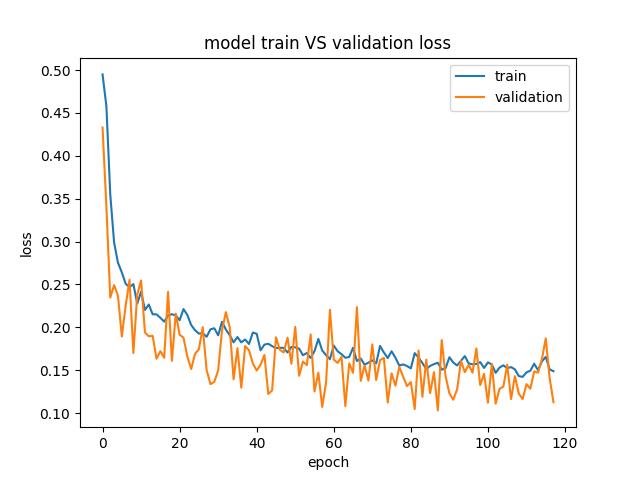
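One point to keep in mind before training: Keras takes the `validation_split` fraction from the end of the arrays, before any shuffling, and the arrays here are built in recording order. The validation set would therefore be the last stretch of driving. One option, not part of the original workflow, is to shuffle images and labels in unison before saving. The sketch below shows this on small made-up arrays; `shuffle_in_unison` is a hypothetical helper.

```python
import numpy as np


def shuffle_in_unison(X, y, seed=0):
    """Apply the same random permutation to the images and their labels."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(X))
    return X[order], y[order]


# Six tiny fake "images"; image i is filled with the value i and labeled i.
X_demo = np.stack([np.full((2, 2, 3), i, dtype=np.uint8) for i in range(6)])
y_demo = np.arange(6, dtype=np.float64)

X_shuf, y_shuf = shuffle_in_unison(X_demo, y_demo)
print(y_shuf)
```

Because both arrays are indexed with the same permutation, each image stays paired with its own steering label.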

Model training

import numpy as np

from keras.models import Sequential

from keras.layers import Flatten, Dense, Lambda, Dropout, Conv2D

# Define model

model = Sequential()

model.add(Lambda(lambda x: x / 255.0 - 0.5, input_shape=(75, 320, 3)))

model.add(Conv2D(24, (5, 5), strides=(2, 2), activation="elu"))

model.add(Conv2D(36, (5, 5), strides=(2, 2), activation="elu"))

model.add(Conv2D(48, (5, 5), strides=(2, 2), activation="elu"))

model.add(Conv2D(64, (3, 3), activation="elu"))

model.add(Conv2D(64, (3, 3), activation="elu"))

model.add(Dropout(0.8))

model.add(Flatten())

model.add(Dense(100))

model.add(Dense(50))

model.add(Dense(10))

model.add(Dense(1))

model.compile(optimizer='adam', loss='mse')

# Train model

X_train = np.load('X_train.npy')

y_train = np.load('y_train.npy')

model.fit(X_train, y_train, epochs=5, validation_split=0.1)

# Save model

model.save('model.h5')
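To see how the five convolutional layers shrink the 75x320 input, the feature-map sizes can be worked out by hand. The sketch below assumes Keras's default `padding='valid'`, under which each spatial size becomes `(size - kernel) // stride + 1`. It uses no deep-learning library, and `conv_out` is a hypothetical helper.

```python
def conv_out(size, kernel, stride=1):
    """Output length of a 'valid' (no padding) convolution along one axis."""
    return (size - kernel) // stride + 1


# (filters, kernel size, stride) for the five Conv2D layers defined above.
conv_layers = [(24, 5, 2), (36, 5, 2), (48, 5, 2), (64, 3, 1), (64, 3, 1)]

h, w = 75, 320  # cropped input height and width
shapes = []
for filters, kernel, stride in conv_layers:
    h, w = conv_out(h, kernel, stride), conv_out(w, kernel, stride)
    shapes.append((h, w, filters))

flat_size = h * w * conv_layers[-1][0]  # number of values entering Dense(100)
for shape in shapes:
    print(shape)
print(flat_size)
```

Under these assumptions the last convolutional layer outputs a 2x33x64 volume, so `Flatten` passes 4224 values to the first fully connected layer.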

Model testing

import argparse

import base64

from datetime import datetime

import os

import shutil

import numpy as np

import socketio

import eventlet

import eventlet.wsgi

from PIL import Image

from flask import Flask

from io import BytesIO

from keras.models import load_model

import h5py

from keras import __version__ as keras_version

sio = socketio.Server()
app = Flask(__name__)
model = None
prev_image_array = None


class SimplePIController:
    def __init__(self, Kp, Ki):
        self.Kp = Kp
        self.Ki = Ki
        self.set_point = 0.
        self.error = 0.
        self.integral = 0.

    def set_desired(self, desired):
        self.set_point = desired

    def update(self, measurement):
        # proportional error
        self.error = self.set_point - measurement

        # integral error
        self.integral += self.error

        return self.Kp * self.error + self.Ki * self.integral


controller = SimplePIController(0.1, 0.002)
set_speed = 30
controller.set_desired(set_speed)


@sio.on('telemetry')
def telemetry(sid, data):
    if data:
        # The current steering angle of the car
        steering_angle = data["steering_angle"]
        # The current throttle of the car
        throttle = data["throttle"]
        # The current speed of the car
        speed = data["speed"]
        # The current image from the center camera of the car
        imgString = data["image"]
        image = Image.open(BytesIO(base64.b64decode(imgString)))
        image_array = np.asarray(image)
        # same crop as in training
        image_array = image_array[60:-25, :, :]
        steering_angle = float(model.predict(image_array[None, ...], batch_size=1))

        throttle = controller.update(float(speed))

        print(steering_angle, throttle)
        send_control(steering_angle, throttle)

        # save frame
        if args.image_folder != '':
            timestamp = datetime.utcnow().strftime('%Y_%m_%d_%H_%M_%S_%f')[:-3]
            image_filename = os.path.join(args.image_folder, timestamp)
            image.save('{}.jpg'.format(image_filename))
    else:
        # NOTE: DON'T EDIT THIS.
        sio.emit('manual', data={}, skip_sid=True)


@sio.on('connect')
def connect(sid, environ):
    print("connect ", sid)
    send_control(0, 0)


def send_control(steering_angle, throttle):
    sio.emit(
        "steer",
        data={
            'steering_angle': steering_angle.__str__(),
            'throttle': throttle.__str__()
        },
        skip_sid=True)


if __name__ == '__main__':
    parser = argparse.ArgumentParser(description='Remote Driving')
    parser.add_argument(
        'model',
        type=str,
        help='Path to model h5 file. Model should be on the same path.'
    )
    parser.add_argument(
        'image_folder',
        type=str,
        nargs='?',
        default='',
        help='Path to image folder. This is where the images from the run will be saved.'
    )
    args = parser.parse_args()

    # check that model Keras version is same as local Keras version
    f = h5py.File(args.model, mode='r')
    model_version = f.attrs.get('keras_version')
    keras_version = str(keras_version).encode('utf8')

    if model_version != keras_version:
        print('You are using Keras version ', keras_version,
              ', but the model was built using ', model_version)

    model = load_model(args.model)

    if args.image_folder != '':
        print("Creating image folder at {}".format(args.image_folder))
        if not os.path.exists(args.image_folder):
            os.makedirs(args.image_folder)
        else:
            shutil.rmtree(args.image_folder)
            os.makedirs(args.image_folder)
        print("RECORDING THIS RUN ...")
    else:
        print("NOT RECORDING THIS RUN ...")

    # wrap Flask application with engineio's middleware
    # (recent python-socketio releases provide socketio.WSGIApp for this)
    app = socketio.Middleware(sio, app)

    # deploy as an eventlet WSGI server
    eventlet.wsgi.server(eventlet.listen(('', 4567)), app)
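The throttle in the test script comes from a small PI controller, and its behavior is easier to follow in isolation. The sketch below repeats the controller with the same gains (0.1 and 0.002) and target speed (30), then feeds it three made-up speed readings. No simulator is involved, and the readings are illustrative only.

```python
class SimplePIController:
    """Proportional-integral controller with the same logic as the test script."""

    def __init__(self, Kp, Ki):
        self.Kp = Kp
        self.Ki = Ki
        self.set_point = 0.0
        self.error = 0.0
        self.integral = 0.0

    def set_desired(self, desired):
        self.set_point = desired

    def update(self, measurement):
        self.error = self.set_point - measurement   # proportional term input
        self.integral += self.error                 # accumulated error
        return self.Kp * self.error + self.Ki * self.integral


controller = SimplePIController(0.1, 0.002)
controller.set_desired(30)

# Made-up speed readings: standstill, exactly on target, slightly too fast.
throttles = [controller.update(speed) for speed in (0.0, 30.0, 35.0)]
print(throttles)
```

From a standstill the throttle is large and positive. At exactly the target speed the proportional term vanishes, but the accumulated integral still yields a small positive throttle. Above the target the output turns negative.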
