This article covers [python3 crawler + PHP data cleaning]: scraping graduate major information from the Graduate Admissions Information Network (研究生招生信息网, yz.chsi.com.cn).

-

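Note: the pages involved are the kind that crawlers such as Baidu's can already reach, so this Python crawler is not regarded as a cracking activity.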

-

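The target information is awkward to scrape, and I did not go with the idea of a cookie-based simulated session. The final detail pages can only be reached through a four-level cascading category selector, but each detail page already contains the names of all four levels: category (类) -> catalog (目) -> discipline (学科) -> major (专业). So I spent about 20 minutes manually copying the source fragments of the major catalog pages into HTML files -> crawled all the major catalog entries with python3 -> obtained the URLs of the major detail pages -> did a first cleaning pass over the page nodes in python3 to get the basic information -> saved the basic information through PHP -> did a second cleaning pass in PHP, classifying the data and splitting it into separate tables.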
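The "catalog fragment -> detail-page URLs" step above can be sketched as follows. This is a minimal illustration using only the standard library (the article's own script uses BeautifulSoup instead). The `RowLinkParser` class, the `extract_detail_links` helper, and the sample HTML with its `/zyk/example/...` paths are assumptions made up for this example, not taken from the real site.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

DOMAIN = "https://yz.chsi.com.cn"  # site root, as in the article's script


class RowLinkParser(HTMLParser):
    """Collect (title, absolute_url) for the first <a> inside each <tr>."""

    def __init__(self):
        super().__init__()
        self.links = []          # collected (title, url) pairs
        self._in_row = False     # currently inside a <tr>
        self._row_done = False   # first link of this row already captured
        self._href = None        # href of the <a> being read, if any
        self._text = []          # text chunks of the <a> being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._in_row, self._row_done = True, False
        elif tag == "a" and self._in_row and not self._row_done:
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            title = "".join(self._text).strip()
            self.links.append((title, urljoin(DOMAIN, self._href.strip())))
            self._href, self._row_done = None, True
        elif tag == "tr":
            self._in_row = False


def extract_detail_links(html_fragment):
    """Return the (title, url) pairs found in a saved catalog fragment."""
    parser = RowLinkParser()
    parser.feed(html_fragment)
    return parser.links


# Made-up fragment standing in for one manually saved catalog page.
SAMPLE = """
<table>
  <tr><td><a href="/zyk/example/detail?id=1"> Example Major A </a></td></tr>
  <tr><td>no link in this row</td></tr>
  <tr><td><a href="/zyk/example/detail?id=2">Example Major B</a></td></tr>
</table>
"""

for title, url in extract_detail_links(SAMPLE):
    print(title, url)
```

Rows without a link are simply skipped, which plays the same role as the `try`/`except` around `find_all('a')[0]` in the full script.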

1) zy-研究生考研专业详情页链接目录.7z (archive of the catalog of links to the graduate major detail pages)

Download: https://download.csdn.net/download/weixin_41827162/12173925

2) Full single-threaded python3 code:

    import requests  # pip install requests
    from bs4 import BeautifulSoup
    import urllib.request
    import os
    import sys
    import re
    import time
    import _thread
    import chardet
    from urllib import parse
    import random


    # Append text to a txt file
    def write_txt(filename, info):
        with open(filename, 'a', encoding='utf-8') as txt:
            txt.write(info + '\n\n')


    # GET request
    def request_get(get_url=''):
        get_response = requests.get(get_url)
        res = get_response.text
        return res


    # POST request
    def request_post(post_url='', data_dict=None):
        if data_dict is None:
            data_dict = {'test': 'my test-post data', 'create_time': '2019'}  # example payload (dict)
        res = requests.post(url=post_url, data=data_dict,
                            headers={'Content-Type': 'application/x-www-form-urlencoded'})
        return res


    # GET request through a proxy
    def use_proxy_request_api(url, proxy_addr='122.241.72.191:808'):
        req = urllib.request.Request(url)
        req.add_header("User-Agent",
                       "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.221 Safari/537.36 SE 2.X MetaSr 1.0")
        proxy = urllib.request.ProxyHandler({'http': proxy_addr})
        opener = urllib.request.build_opener(proxy, urllib.request.HTTPHandler)
        urllib.request.install_opener(opener)
        res = urllib.request.urlopen(req).read().decode('utf-8', 'ignore')
        return res


    # ############################################################################


    # Get a URL query parameter (parse_qs returns a list of values per key)
    def get_url_param(url='', key=''):
        array = parse.parse_qs(parse.urlparse(url).query)
        return array[key]


    # Fetch a page and parse it; state=0 means url is relative to domain
    def get_url_html(url, state=0):
        if state == 0:
            url = domain + url
        # Pick one of several request headers at random
        header_list = [
            {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.16 Safari/537.36 Edg/80.0.361.9'},
            {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36'},
            {'User-Agent': 'Mozilla/5.0 (iPhone; CPU iPhone OS 10_3_1 like Mac OS X) AppleWebKit/603.1.3 (KHTML, like Gecko) Version/10.0 Mobile/14E304 Safari/602.1 wechatdevtools/1.02.1910120 MicroMessenger/7.0.4 Language/zh_CN webview/15780410115046065 webdebugger port/41084'},
            {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:71.0) Gecko/20100101 Firefox/71.0'},
            {'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64; rv:68.0) Gecko/20100101 Firefox/68.0'},
        ]
        index = random.randint(0, len(header_list) - 1)
        headers = header_list[index]
        req = urllib.request.Request(url=url, headers=headers)
        try:
            response = urllib.request.urlopen(req)
        except Exception:
            return None
        raw = response.read()  # read once: a second read() would return b''
        try:
            page = raw.decode('utf-8')  # possible encodings: gb2312, utf-8, GBK
        except UnicodeDecodeError:
            page = raw.decode('gb2312')
        html_string_page = str(page)  # plain string, can be stored in a database directly
        soup_page = BeautifulSoup(html_string_page, "html.parser")  # parse the page tags
        return soup_page


    # Parse one major detail page and post the result to the PHP API
    def get_html2(url):
        soup_page = get_url_html(url, 1)
        if soup_page is None:
            print('Page not found=' + url)
            sys.exit()
        that_div_info = soup_page.find('div', attrs={'class': 'zyk-base-info'})
        # print(that_div_info.get_text().strip())
        that_div_province = soup_page.find('div', attrs={'class': 'zyk-zyfb-tab'}).find_all('li')
        # print(that_div_province)
        info = ''
        university = ''
        course_item_name = ''  # major (unused placeholder)
        course_item_code = ''  # major code (unused placeholder)
        course_class_name = ''  # discipline (unused placeholder)
        course_subject_name = ''  # category (unused placeholder)
        for j in range(0, len(that_div_province) - 1):
            one_university = that_div_province[j].get('title')
            university = university + '@@' + one_university
        info = info + '##' + that_div_info.get_text().strip().replace(' ', '') + '#@' + university
        print(info)
        # Save
        post_url = 'app/save_zy_info'
        post_data = {
            'href': url,
            'info': info,
            'course_catalog_id': 2,
        }
        # print(post_data)
        res = request_post(api + post_url, post_data)
        print(res.text)


    # Walk one saved catalog page and process every detail-page link in it
    def get_html1(url):
        soup_page = get_url_html(url, 1)
        print(url)
        if soup_page is None:
            sys.exit()
        that_tr = soup_page.find_all('tr')
        # print(that_tr)
        num = 0
        for i in range(0, len(that_tr) - 1):
            try:
                a = that_tr[i].find_all('a')[0]
                title = a.get_text().strip()
                href = domain + a.get('href').strip()
                print(title + '=' + str(i))
                print(href)
                print(num)
                print('\n')
                num = num + 1
                get_html2(href)
                time.sleep(1.3)
            except:  # bare except also catches the SystemExit raised in get_html2, so one bad page does not stop the loop
                print('a=nil=' + str(i) + '\n')


    # Parameters
    api = 'http://192.168.131.129/pydata/public/index.php/api/'
    domain = 'https://yz.chsi.com.cn'  # main site

    if __name__ == '__main__':  # entry point
        print('---start---')
        # Start the job here
        get_html1('http://192.168.131.129/pydata/view-admin/zy/2.html')
        print('---done---')
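The `info` string that `get_html2` posts packs two things into one field: the base-info text after `##`, then a `#@` marker, then each university name prefixed with `@@`. The sketch below shows that layout and how it splits back apart, mirroring the `#@` -> `##` normalisation done later on the PHP side. The function names `pack_record` and `unpack_record` and the sample values are made up for illustration. The scheme also assumes the page text itself never contains `##`, `#@` or `@@`.

```python
def pack_record(base_info, universities):
    """Build the string the way get_html2 does: '##' + info + '#@' + '@@'-prefixed names."""
    packed_universities = "".join("@@" + name for name in universities)
    return "##" + base_info + "#@" + packed_universities


def unpack_record(record):
    """Split a packed record back into (base_info, [university, ...])."""
    # Turn the '#@' marker into '##' so a single split yields both parts.
    parts = record.replace("#@", "##").split("##")
    base_info = parts[1]
    # Every name starts with '@@', so the first split element is empty; drop empties.
    universities = [name for name in parts[2].split("@@") if name]
    return base_info, universities


# Made-up values, only to show the round trip.
record = pack_record("ExampleBaseInfo", ["Example University A", "Example University B"])
print(record)
print(unpack_record(record))
```

A structured payload such as JSON would avoid the delimiter assumption, at the cost of changing both the Python sender and the PHP receiver.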

-

3) PHP backend data cleaning:

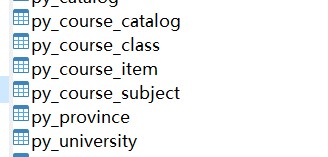

Database tables:

a.

    // Store the information scraped by python3
    public function save_zy_info(Request $request){
        $href = $request->input('href');
        $info = $request->input('info');
        $course_catalog_id = $request->input('course_catalog_id');

        $data = [
            'href'=> $href,
            'info'=> $info,
            'course_catalog_id'=> $course_catalog_id,
        ];

        $res = Db::table('zy_data')->insertGetId($data);

        return array_to_json($res);
    }

b.

    // Clean the data and save it into separate tables
    public function exp_zy(){
        $zy = Db::table('zy_data')->select('zy_data_id', 'course_catalog_id', 'info', 'href')->get();
        $zy = json_to_array($zy);

        $item_array = [];
        $start_time = date('Y-m-d H:i:s');
        $end_time = '';

        for ($i=0; $i<count($zy); $i++){
            // Filter
            $that_info = str_replace(' ', '_', $zy[$i]['info']);
            $that_info = str_replace('#@', '##', $that_info);
            $that_info = str_replace(PHP_EOL, '', $that_info);

            $course_catalog_id = $zy[$i]['course_catalog_id'];
            $href = $zy[$i]['href'];

            // Major information
            $zy_info = explode('##', $that_info)[1];
            $zy_info_array = explode('_', $zy_info);
            $course_item_name = explode(':', $zy_info_array[0])[1]; // major
            $course_item_code = explode(':', $zy_info_array[1])[1]; // major code
            $course_class_name = explode(':', $zy_info_array[3])[1]; // discipline
            $course_subject_name = explode(':', $zy_info_array[2])[1]; // category

            // Look up the category row, creating it if it does not exist yet
            $data1 = [
                'course_catalog_id'=> $course_catalog_id,
                'course_subject_name'=> $course_subject_name,
            ];
            $new_course_subject_id = Db::table('course_subject')
                ->where('course_subject_name', '=', $course_subject_name)
                ->where('course_catalog_id', '=', $course_catalog_id)
                ->value('course_subject_id');
            if (!$new_course_subject_id){
                $new_course_subject_id = Db::table('course_subject')->insertGetId($data1);
            }

            if ($new_course_subject_id){
                // Look up the discipline row under that category, creating it if needed
                $data2 = [
                    'course_class_name'=> $course_class_name,
                    'course_catalog_id'=> $course_catalog_id,
                    'course_subject_id'=> $new_course_subject_id,
                ];
                $new_course_class_id = Db::table('course_class')
                    ->where('course_class_name', '=', $course_class_name)
                    ->where('course_subject_id', '=', $new_course_subject_id)
                    ->value('course_class_id');
                if (!$new_course_class_id){
                    $new_course_class_id = Db::table('course_class')->insertGetId($data2);
                }

                // Universities offering this major
                $zy_university = explode('##', $that_info)[2];
                $zy_university_array = explode('@@', $zy_university);

                for ($j=0; $j<count($zy_university_array); $j++){
                    $the_university = $zy_university_array[$j];
                    if (!empty($the_university)){
                        // Normalize parentheses in the name (search and replacement appear identical
                        // in this copy; they were probably full-width vs. half-width originally)
                        $the_university = str_replace('(', '(', $the_university);
                        $the_university = str_replace(')', ')', $the_university);

                        $old_university_id = Db::table('university')
                            ->where('university_name', '=', $the_university)
                            ->value('university_id');
                        if (!$old_university_id){
                            $data4 = [
                                'university_name'=> $the_university,
                            ];
                            $old_university_id = Db::table('university')->insertGetId($data4);
                        }

                        // One course_item row per (major, university) pair
                        $data3 = [
                            'href'=> $href,
                            'course_item_code'=> $course_item_code,
                            'course_item_name'=> $course_item_name,
                            'course_class_id'=> $new_course_class_id,
                            'course_catalog_id'=> $course_catalog_id,
                            'course_subject_id'=> $new_course_subject_id,
                            'university_id'=> $old_university_id,
                        ];
                        $course_item_id = Db::table('course_item')->insertGetId($data3);

                        $end_time = date('Y-m-d H:i:s');
                        $item_array[] = [
                            'course_item_id'=> $course_item_id,
                            'the_university'=> $the_university,
                            'this_time'=> $end_time,
                            $data3,
                        ];
                    }
                }
            }
        }

        return array_to_json([
            'start_time'=> $start_time,
            'end_time'=> $end_time,
            'item_array'=> $item_array,
        ]);
    }
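The cleaning step repeats one pattern for categories, disciplines, and universities: look up a row's id by name and insert the row only if the lookup finds nothing. As a rough illustration of that pattern outside PHP, here is a self-contained Python sketch using the standard-library `sqlite3` module with an in-memory database. The helper name `get_or_create_id` and the sample university names are assumptions for this example. It is also simplified: it uses the same columns for lookup and insert, whereas the PHP code sometimes inserts extra columns.

```python
import sqlite3


def get_or_create_id(conn, table, id_column, values):
    """Return the id of the row matching `values`, inserting it first if absent."""
    # Table and column names are interpolated into the SQL, so they must come
    # from trusted code; the row values themselves go through bound parameters.
    where = " AND ".join(f"{col} = ?" for col in values)
    row = conn.execute(
        f"SELECT {id_column} FROM {table} WHERE {where}", tuple(values.values())
    ).fetchone()
    if row is not None:
        return row[0]
    columns = ", ".join(values)
    marks = ", ".join("?" for _ in values)
    cursor = conn.execute(
        f"INSERT INTO {table} ({columns}) VALUES ({marks})", tuple(values.values())
    )
    return cursor.lastrowid


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE university ("
    "university_id INTEGER PRIMARY KEY, university_name TEXT)"
)

first = get_or_create_id(conn, "university", "university_id",
                         {"university_name": "Example University"})
again = get_or_create_id(conn, "university", "university_id",
                         {"university_name": "Example University"})
other = get_or_create_id(conn, "university", "university_id",
                         {"university_name": "Another University"})
print(first, again, other)
```

The second call returns the id created by the first, so repeated names do not produce duplicate rows. For a single-process batch job like this one the select-then-insert order is enough. With concurrent writers, a unique index on the name column would be a safer guard.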

-
