This post introduces the activation function CReLU (Concatenated Rectified Linear Units).

ICML 2016

Understanding and Improving Convolutional Neural Networks via Concatenated Rectified Linear Units

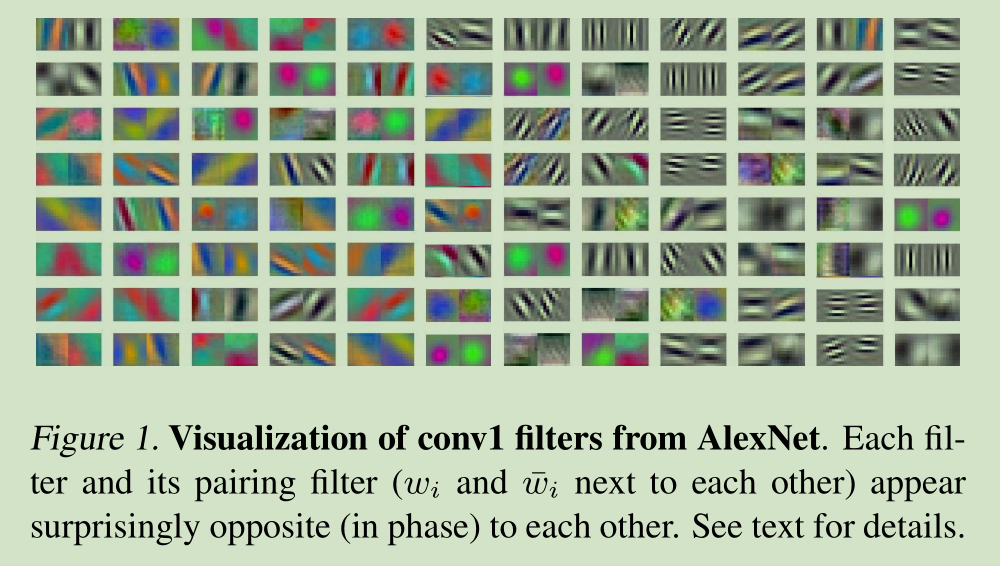

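After analyzing the internal structure of CNNs in depth, the paper finds that the filters learned in the first few layers of a CNN are negatively correlated with one another.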

In the paper's words, "they appear surprisingly opposite to each other, i.e., for each filter, there does exist another filter that is almost on the opposite phase."

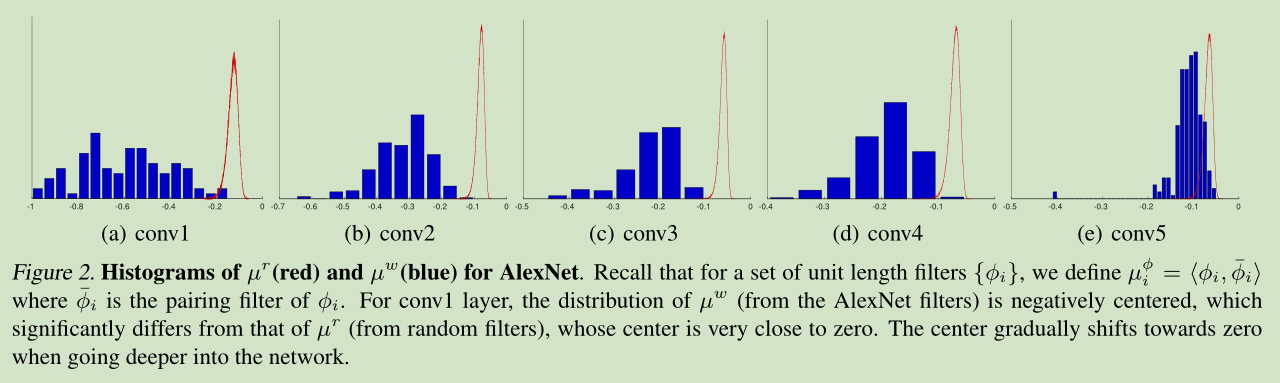

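The figure below shows that in the first convolutional layer, the blue histogram is centered at -0.5 and spread roughly symmetrically, which means many filters come in pairs. The deeper the layer, the more concentrated the blue histogram becomes, and the fewer paired filters there are.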

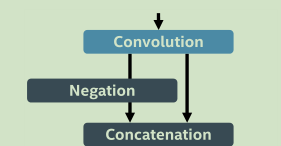
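To make the "paired filters" idea concrete, here is a minimal NumPy sketch. It assumes (this definition is not spelled out in the post) that the histogram plots, for each filter, the cosine similarity to its most negatively correlated partner among the other filters in the same layer; a value near -1 means the filter has an almost exact opposite-phase twin. The function name `pairing_similarity` and the toy filter banks are hypothetical and only for illustration.

```python
import numpy as np


def pairing_similarity(filters):
    """For each filter, return its cosine similarity with the most negatively
    correlated other filter (the minimum over all other filters).

    filters: array of shape (out_channels, in_channels, kh, kw).
    """
    # Flatten each filter and normalize it to unit length.
    flat = filters.reshape(filters.shape[0], -1)
    unit = flat / np.linalg.norm(flat, axis=1, keepdims=True)
    # Pairwise cosine similarities between all filters.
    cos = unit @ unit.T
    # Ignore each filter's similarity with itself.
    np.fill_diagonal(cos, np.inf)
    return cos.min(axis=1)


rng = np.random.default_rng(0)
# Hypothetical bank: 8 random 3x3x3 filters plus their negations,
# mimicking the opposite-phase pairs described above.
half = rng.standard_normal((8, 3, 3, 3))
paired = np.concatenate([half, -half])
# Purely random filters for comparison.
random_bank = rng.standard_normal((16, 3, 3, 3))

mu_paired = pairing_similarity(paired)
mu_random = pairing_similarity(random_bank)
print(mu_paired.round(3))  # every entry is -1.0: each filter has an exact negative partner
print(mu_random.round(3))  # noticeably above -1: random filters are not paired
```

Plotting a histogram of these values for a trained network's layers is one way to reproduce the kind of comparison shown in the figure.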

In other words, the learned filters are redundant. To address this, the authors design CReLU. It simply makes an identical copy of the linear responses after convolution, negates the copy, concatenates the two parts, and then applies ReLU to the whole.

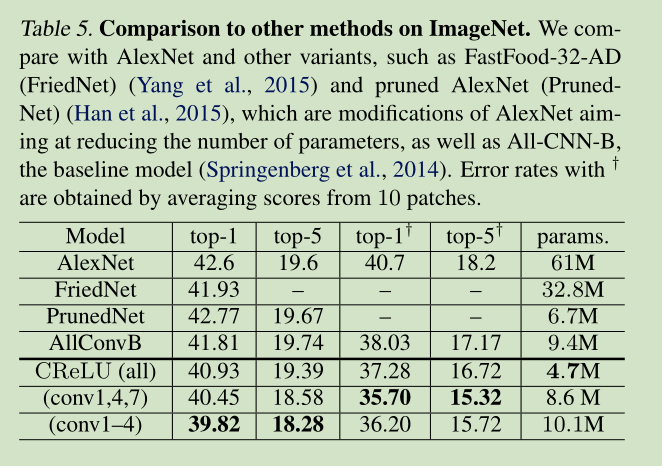
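The operation described above amounts to CReLU(x) = [ReLU(x), ReLU(-x)], concatenated along the channel axis. A minimal NumPy sketch, assuming NCHW layout (so channels are axis 1); the function name `crelu` and the toy tensor shape are illustrative choices, not taken from the paper:

```python
import numpy as np


def crelu(x, axis=1):
    """Concatenated ReLU: concatenate ReLU(x) and ReLU(-x) along `axis`.

    With NCHW input the channel axis is 1, so the output has twice as
    many channels as the input.
    """
    return np.concatenate([np.maximum(x, 0), np.maximum(-x, 0)], axis=axis)


# Toy convolution output: batch of 2, 4 channels, 5x5 spatial map.
rng = np.random.default_rng(0)
conv_out = rng.standard_normal((2, 4, 5, 5))
y = crelu(conv_out)
print(y.shape)  # (2, 8, 5, 5)
```

Because the channel count doubles, the layer that follows CReLU must accept twice as many input channels as it would after a plain ReLU.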

Results compared with other methods:

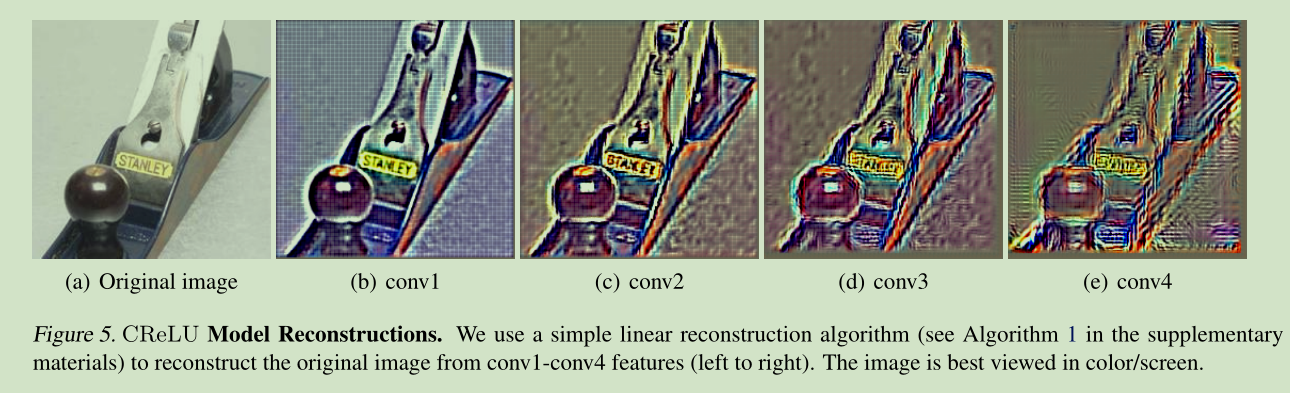

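CReLU also has a good reconstruction property: its output preserves the information in its input well enough for the input to be reconstructed.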
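One simple way to see why no information is lost at the activation itself: ReLU(x) - ReLU(-x) = x elementwise, so the pre-activation can be recovered exactly from the CReLU output, whereas plain ReLU discards the negative part. This sketch only demonstrates that elementwise identity; it is not the paper's reconstruction experiment, which reconstructs inputs from network features. The helper `invert_crelu` is hypothetical.

```python
import numpy as np


def crelu(x, axis=1):
    """Concatenate ReLU(x) and ReLU(-x) along the channel axis (NCHW)."""
    return np.concatenate([np.maximum(x, 0), np.maximum(-x, 0)], axis=axis)


def invert_crelu(y, axis=1):
    """Recover x from CReLU(x) using ReLU(x) - ReLU(-x) = x."""
    pos, neg = np.split(y, 2, axis=axis)
    return pos - neg


rng = np.random.default_rng(1)
x = rng.standard_normal((2, 4, 5, 5))
x_rec = invert_crelu(crelu(x))
print(np.allclose(x, x_rec))  # True

# Plain ReLU zeroes out negative values, so x cannot be recovered from it.
print(np.allclose(x, np.maximum(x, 0)))  # False
```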
