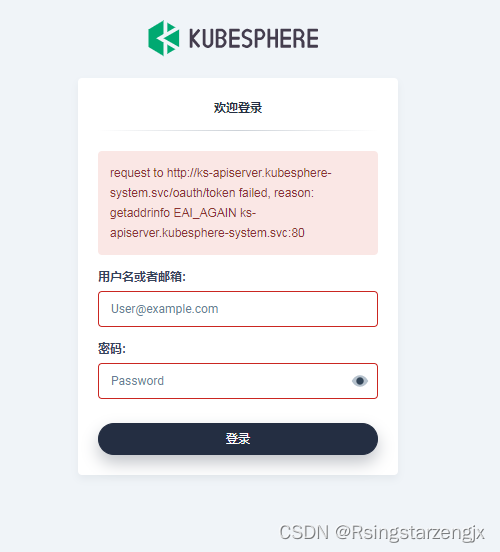

This article describes a KubeSphere login error, `token failed, reason: getaddrinfo EAI_AGAIN ks-apiserver`, and the steps used to resolve it.

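1. Symptom:

Logging in to the KubeSphere console fails with the error `token failed, reason: getaddrinfo EAI_AGAIN ks-apiserver`.

2. Solution: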

[root@k8s-node1 ~]# kubectl get pods --all-namespaces


NAMESPACE NAME READY STATUS RESTARTS AGE

default tomcat6-5f7ccf4cb9-4nx9r 1/1 Running 14 6d3h

default tomcat6-5f7ccf4cb9-dgc9s 1/1 Running 14 6d3h

default tomcat6-5f7ccf4cb9-svht8 1/1 Running 16 6d18h

gulimall gulimall-elasticsearch-v1-0 0/1 CrashLoopBackOff 8 21h

gulimall gulimall-mysql-master-v1-0 0/1 Pending 0 11h

gulimall gulimall-mysql-slaver-v1-0 1/1 Running 6 47h

gulimall gulimall-zipkin-v1-6f95cdbb4c-96862 1/1 Running 1 11h

gulimall kibana-v1-684496fddd-628m7 0/1 CrashLoopBackOff 106 21h

gulimall redis-v1-0 0/1 Pending 0 22h

gulimall sentinel-v1-658756445-m8zmr 1/1 Running 1 17h

ingress-nginx nginx-ingress-controller-9nxcr 1/1 Running 31 6d17h

ingress-nginx nginx-ingress-controller-c5znk 1/1 Running 32 6d7h

ingress-nginx nginx-ingress-controller-mgxhs 0/1 CrashLoopBackOff 601 6d17h

kube-flannel kube-flannel-ds-2lwj5 1/1 Running 25 7d22h

kube-flannel kube-flannel-ds-l8x48 1/1 Running 19 7d22h

kube-flannel kube-flannel-ds-pcz79 1/1 Running 20 7d22h

kube-system coredns-7f9c544f75-2vvgt 1/1 Running 16 7d23h

kube-system coredns-7f9c544f75-t77g6 1/1 Running 16 7d23h

kube-system etcd-k8s-node1 1/1 Running 27 7d23h

kube-system kube-apiserver-k8s-node1 1/1 Running 28 7d23h

kube-system kube-controller-manager-k8s-node1 1/1 Running 27 7d23h

kube-system kube-flannel-ds-amd64-b7cnx 0/1 CrashLoopBackOff 584 7d22h

kube-system kube-flannel-ds-amd64-g8rkd 0/1 CrashLoopBackOff 567 7d22h

kube-system kube-flannel-ds-amd64-r2nsv 0/1 CrashLoopBackOff 725 7d22h

kube-system kube-proxy-2hdnc 1/1 Running 20 7d22h

kube-system kube-proxy-m8z84 1/1 Running 19 7d22h

kube-system kube-proxy-wvf2p 1/1 Running 27 7d23h

kube-system kube-scheduler-k8s-node1 1/1 Running 27 7d23h

kube-system snapshot-controller-0 1/1 Running 1 11h

kube-system tiller-deploy-6d68b98c95-s4cn2 1/1 Running 16 6d17h

kubesphere-controls-system default-http-backend-5d464dd566-g5tft 1/1 Running 15 6d3h

kubesphere-controls-system kubectl-admin-6c9bd5b454-n5qzg 1/1 Running 1 11h

kubesphere-controls-system kubectl-ws-admin-5654bf4f9f-z2whw 1/1 Running 10 4d11h

kubesphere-devops-system ks-jenkins-55c7cc49b7-5fbh7 0/1 CrashLoopBackOff 45 11h

kubesphere-devops-system s2ioperator-0 0/1 CrashLoopBackOff 44 11h

kubesphere-logging-system elasticsearch-logging-data-0 1/1 Running 1 11h

kubesphere-logging-system elasticsearch-logging-data-1 0/1 Init:ImageInspectError 0 5d8h

kubesphere-logging-system elasticsearch-logging-discovery-0 1/1 Running 1 11h

kubesphere-logging-system fluent-bit-j7s85 1/1 Running 12 5d7h

kubesphere-logging-system fluent-bit-mv46r 1/1 Running 12 5d7h

kubesphere-logging-system fluent-bit-wrmbs 1/1 Running 17 5d7h

kubesphere-logging-system fluentbit-operator-9b69495b-hglx8 1/1 Running 13 5d8h

kubesphere-logging-system kube-auditing-operator-74f65b9c66-m52kf 1/1 Running 1 11h

kubesphere-logging-system kube-auditing-webhook-deploy-59b8479fdf-4vv4d 0/1 CrashLoopBackOff 421 5d8h

kubesphere-logging-system kube-auditing-webhook-deploy-59b8479fdf-8rb2m 1/1 Running 1 11h

kubesphere-monitoring-system alertmanager-main-0 1/2 Running 9 11h

kubesphere-monitoring-system alertmanager-main-1 2/2 Running 28 6d2h

kubesphere-monitoring-system alertmanager-main-2 1/2 Running 9 11h

kubesphere-monitoring-system kube-state-metrics-7bc59d8bdd-7xzsv 3/3 Running 3 11h

kubesphere-monitoring-system node-exporter-27f7g 2/2 Running 28 6d2h

kubesphere-monitoring-system node-exporter-4vfjx 2/2 Running 42 6d2h

kubesphere-monitoring-system node-exporter-7qd77 2/2 Running 28 6d2h

kubesphere-monitoring-system notification-manager-deployment-7f7f4656f9-8qz6k 1/1 Running 1 11h

kubesphere-monitoring-system notification-manager-deployment-7f7f4656f9-w8mmh 1/1 Running 14 6d2h

kubesphere-monitoring-system notification-manager-operator-75cff7dc87-nptvw 2/2 Running 12 11h

kubesphere-monitoring-system prometheus-k8s-0 3/3 Running 4 11h

kubesphere-monitoring-system prometheus-k8s-1 2/3 CrashLoopBackOff 333 6d2h

kubesphere-monitoring-system prometheus-operator-677cc4c58b-hvjsh 2/2 Running 2 11h

kubesphere-monitoring-system thanos-ruler-kubesphere-0 2/2 Running 35 5d7h

kubesphere-monitoring-system thanos-ruler-kubesphere-1 1/2 CrashLoopBackOff 12 11h

kubesphere-system ks-apiserver-69fd6b8fb7-gh8kn 0/1 CrashLoopBackOff 1 43s

kubesphere-system ks-console-5885c9df7f-cn5cx 1/1 Running 0 9m55s

kubesphere-system ks-controller-manager-859f757b98-6hncf 1/1 Running 0 42s

kubesphere-system ks-installer-5ccd968cd5-c6ds6 1/1 Running 0 9m59s

kubesphere-system minio-5f49b564fd-v75g5 1/1 Running 1 11h

kubesphere-system openldap-0 1/1 Running 15 6d3h

kubesphere-system redis-77f4bf4455-kmkkz 1/1 Running 14 6d2h

openebs maya-apiserver-77f486b6b9-6mkww 1/1 Running 14 6d3h

openebs openebs-admission-server-5d87f9f5d7-zq2hx 0/1 CrashLoopBackOff 520 6d7h

openebs openebs-localpv-provisioner-cfbd956fc-5tw78 1/1 Running 54 6d3h

openebs openebs-ndm-fxwxc 1/1 Running 20 6d7h

openebs openebs-ndm-operator-674d75bd7c-2rh74 1/1 Running 15 6d7h

openebs openebs-ndm-v7ktn 1/1 Running 40 6d7h

openebs openebs-ndm-z2b2p 0/1 CrashLoopBackOff 520 6d7h

openebs openebs-provisioner-b5dc795f-q7nc6 1/1 Running 59 6d7h

openebs openebs-snapshot-operator-db88f5744-dcl65 2/2 Running 66 6d3h
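A listing this long is hard to scan by eye. The following is a minimal sketch, not part of the original fix, that filters the same output down to pods that are not healthy. The function name `unhealthy_pods` is hypothetical, and the sketch assumes the column order shown above (NAMESPACE, NAME, READY, STATUS).

```shell
# Sketch: read `kubectl get pods --all-namespaces --no-headers` on stdin and
# print pods that are not Running, or that are Running with containers not ready.
# Assumes columns: NAMESPACE NAME READY STATUS ... (as in the listing above).
unhealthy_pods() {
  awk '{
    if ($4 == "Completed") next          # finished jobs are not a problem
    split($3, r, "/")                    # READY column, e.g. "1/2"
    if ($4 != "Running" || r[1] != r[2])
      print $1 "/" $2, $3, $4
  }'
}

# Usage (uncomment to run against the cluster):
# kubectl get pods --all-namespaces --no-headers | unhealthy_pods
```

In the listing above this would surface `ks-apiserver` in `CrashLoopBackOff`, along with partially ready pods such as `alertmanager-main-0` (1/2).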

kubectl get pods -n kube-system

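Find the CoreDNS pods in the output. `EAI_AGAIN` is a temporary name-resolution failure, meaning the DNS lookup for `ks-apiserver` did not succeed, so delete the CoreDNS pods and let them be recreated: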

kubectl delete pod coredns-7f9c544f75-2vvgt -n kube-system

kubectl delete pod coredns-7f9c544f75-t77g6 -n kube-system
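The pod names above are specific to this cluster and change every time the pods are recreated. As a hedged alternative, the pods can be selected by label instead. This sketch assumes the CoreDNS pods carry the label `k8s-app=kube-dns`, which is the default on kubeadm clusters but is not confirmed by the article; `restart_coredns` is a hypothetical helper name.

```shell
# Sketch: delete all CoreDNS pods by label so their Deployment recreates them.
# Assumption: the pods are labelled k8s-app=kube-dns (the kubeadm default).
restart_coredns() {
  kubectl delete pod -n "${1:-kube-system}" -l k8s-app=kube-dns
}

# Usage (uncomment to run against the cluster):
# restart_coredns
# kubectl get pods -n kube-system -l k8s-app=kube-dns
```

If the label differs on your cluster, `kubectl get pods -n kube-system --show-labels` shows the labels actually in use.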

Then reboot the node with `reboot`.

Turn off the network proxy, if one is enabled.
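After the steps above, it can help to confirm that the service name resolves inside the cluster again. The following is a sketch under stated assumptions: the pod name `dns-check` is hypothetical, the `busybox:1.28` image must be pullable from your nodes, and the temporary pod is created in the current namespace.

```shell
# Sketch: run a throwaway pod and resolve the ks-apiserver Service through
# cluster DNS. The pod is removed when the command finishes (--rm).
# Assumptions: pod name "dns-check" and image busybox:1.28 are illustrative.
check_ks_dns() {
  kubectl run dns-check --rm -i --restart=Never --image=busybox:1.28 \
    -- nslookup ks-apiserver.kubesphere-system
}

# Usage (uncomment to run against the cluster):
# check_ks_dns
```

If the lookup still fails, the problem is likely in CoreDNS or the cluster network rather than in KubeSphere itself.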

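3. References:

Official site:

KubeSphere login failure, Redis connection failure - KubeSphere Developer Community

Reference solution:

KubeSphere login error after installation: token failed, reason: getaddrinfo EAI_AGAIN ks-apiserver (request to http://ks-apiserver/oauth/token failed), 技术人小冯's blog on CSDN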

The image pull policy of these deployments is currently `Always`, so you can simply run the following commands:

kubectl rollout restart deployments ks-installer -n kubesphere-system

kubectl rollout restart deployments ks-apiserver -n kubesphere-system

kubectl rollout restart deployments ks-controller-manager -n kubesphere-system

kubectl rollout restart deployments ks-console -n kubesphere-system
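The four restarts above can also be scripted and followed by a wait, so that you only retry the login once the new pods are ready. This is a minimal sketch: the function name `restart_kubesphere` is hypothetical and the 300-second timeout is an illustrative assumption, not a value from the referenced posts.

```shell
# Sketch: restart the KubeSphere core deployments, then wait for each rollout.
# The 300s timeout is an assumption chosen for illustration.
restart_kubesphere() {
  ks_ns="kubesphere-system"
  ks_deploys="ks-installer ks-apiserver ks-controller-manager ks-console"

  for ks_d in $ks_deploys; do
    kubectl rollout restart "deployment/$ks_d" -n "$ks_ns" || return 1
  done

  # rollout status blocks until the deployment is ready or the timeout expires
  for ks_d in $ks_deploys; do
    kubectl rollout status "deployment/$ks_d" -n "$ks_ns" --timeout=300s || return 1
  done
}

# Usage (uncomment to run against the cluster):
# restart_kubesphere && echo "KubeSphere deployments are ready"
```

If `ks-apiserver` keeps crashing, the wait fails with a non-zero exit code instead of hanging indefinitely.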
