本文主要是介绍基于 BERT 对 IMDB 电影评论进行情感分类,希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

前言

系列专栏:【深度学习:算法项目实战】✨︎

涉及医疗健康、财经金融、商业零售、食品饮料、运动健身、交通运输、环境科学、社交媒体以及文本和图像处理等诸多领域,讨论了各种复杂的深度神经网络思想,如卷积神经网络、循环神经网络、生成对抗网络、门控循环单元、长短期记忆、自然语言处理、深度强化学习、大型语言模型和迁移学习。

BERT 是 Bidirectional Representation for Transformers 的缩写,由谷歌人工智能语言研究人员于 2018 年提出。虽然其主要目的是提高对谷歌搜索相关查询含义的理解,但 BERT 已成为各种自然语言任务中最重要、最完整的架构之一,在句子对分类任务、问答任务等方面取得了最先进的成果。

BERT 是一种强大的自然语言处理技术,可以提高计算机理解人类语言的能力。BERT 的基础是利用双向语境来获取复杂而深刻的单词和短语表述。通过同时检查单词上下文的两侧,BERT 可以从上下文中捕捉到单词的全部含义,而早期的模型只考虑单词的左右上下文。这使得 BERT 能够处理模糊而复杂的语言现象,包括多义词、共同参照和远距离关系。

为此,论文还提出了不同任务的架构。在本篇文章中,我们将使用 BERT 架构来处理情感分类任务,特别是用于 CoLA(语言可接受性语料库)二元分类任务的架构。

目录

- 1. 相关数据集

- 1.1 导入必要库

- 1.2 加载数据集

- 2. 文本预处理

- 2.1 文本统计

- 2.2 文本清理

- 3. 词云可视化

- 3.1 正面评价

- 3.2 负面评论

- 4. 数据集拆分

- 4.1 拆分输入文本和目标情感的训练集和测试集

- 4.2 将 TEST 数据分为测试和验证两部分

- 5. 标记与编码

- 6. 构建分类模型

- 6.1 加载模型

- 6.2 编译模型

- 6.3 训练模型

- 7. 评估分类模型

- 7.1 评估模型

- 7.2 利用用户输入进行预测

- 8. 总结

对于 TensorFlow 的实现,谷歌提供了 BERT BASE 和 BERT LARGE 的两个版本:无大小写版本和有大小写版本。在无大小写版本中,字母在 WordPiece 标记化之前是小写的。

1. 相关数据集

1.1 导入必要库

import os

import shutil

import tarfile

import tensorflow as tfimport re

import pandas as pd

import matplotlib.pyplot as plt

from bs4 import BeautifulSoupimport plotly.express as px

import plotly.offline as pyo

import plotly.graph_objects as gofrom wordcloud import WordCloud, STOPWORDS

from sklearn.model_selection import train_test_split

from sklearn.metrics import classification_report

from transformers import BertTokenizer, TFBertForSequenceClassification

1.2 加载数据集

①获取数据集所在目录

# Get the current working directory

current_folder = os.getcwd()dataset = tf.keras.utils.get_file(fname ="aclImdb.tar.gz", origin ="http://ai.stanford.edu/~amaas/data/sentiment/aclImdb_v1.tar.gz",cache_dir= current_folder,extract = True)

②查看数据集文件夹

# Check the dataset

dataset_path = os.path.dirname(dataset)

os.listdir(dataset_path)

['aclImdb', 'aclImdb.tar.gz']

③查看 "aclImdb "目录

# Dataset directory

dataset_dir = os.path.join(dataset_path, 'aclImdb')# Check the Dataset directory

os.listdir(dataset_dir)

['imdb.vocab', 'imdbEr.txt', 'README', 'test', 'train']

④查看 "Train "数据集文件夹

train_dir = os.path.join(dataset_dir,'train')

os.listdir(train_dir)

['labeledBow.feat','neg','pos','unsup','unsupBow.feat','urls_neg.txt','urls_pos.txt','urls_unsup.txt']

⑤读取 "Train "目录下的文件

for file in os.listdir(train_dir):file_path = os.path.join(train_dir, file)# Check if it's a file (not a directory)if os.path.isfile(file_path): with open(file_path, 'r', encoding='utf-8') as f:first_value = f.readline().strip()print(f"{file}: {first_value}")else:print(f"{file}: {file_path}")

labeledBow.feat: 9 0:9 1:1 2:4 3:4 4:6 5:4 6:2 7:2 8:4 10:4 12:2 26:1 27:1 28:1 29:2 32:1 41:1 45:1 47:1 50:1 54:2 57:1 59:1 63:2 64:1 66:1 68:2 70:1 72:1 78:1 100:1 106:1 116:1 122:1 125:1 136:1 140:1 142:1 150:1 167:1 183:1 201:1 207:1 208:1 213:1 217:1 230:1 255:1 321:5 343:1 357:1 370:1 390:2 468:1 514:1 571:1 619:1 671:1 766:1 877:1 1057:1 1179:1 1192:1 1402:2 1416:1 1477:2 1940:1 1941:1 2096:1 2243:1 2285:1 2379:1 2934:1 2938:1 3520:1 3647:1 4938:1 5138:4 5715:1 5726:1 5731:1 5812:1 8319:1 8567:1 10480:1 14239:1 20604:1 22409:4 24551:1 47304:1

neg: C:\Users\83168\datasets\aclImdb\train\neg

pos: C:\Users\83168\datasets\aclImdb\train\pos

unsup: C:\Users\83168\datasets\aclImdb\train\unsup

unsupBow.feat: 0 0:8 1:6 3:5 4:2 5:1 7:1 8:5 9:2 10:1 11:2 13:3 16:1 17:1 18:1 19:1 22:3 24:1 26:3 28:1 30:1 31:1 35:2 36:1 39:2 40:1 41:2 46:2 47:1 48:1 52:1 63:1 67:1 68:1 74:1 81:1 83:1 87:1 104:1 105:1 112:1 117:1 131:1 151:1 155:1 170:1 198:1 225:1 226:1 288:2 291:1 320:1 331:1 342:1 364:1 374:1 384:2 385:1 407:1 437:1 441:1 465:1 468:1 470:1 519:1 595:1 615:1 650:1 692:1 851:1 937:1 940:1 1100:1 1264:1 1297:1 1317:1 1514:1 1728:1 1793:1 1948:1 2088:1 2257:1 2358:1 2584:2 2645:1 2735:1 3050:1 4297:1 5385:1 5858:1 7382:1 7767:1 7773:1 9306:1 10413:1 11881:1 15907:1 18613:1 18877:1 25479:1

urls_neg.txt: http://www.imdb.com/title/tt0064354/usercomments

urls_pos.txt: http://www.imdb.com/title/tt0453418/usercomments

urls_unsup.txt: http://www.imdb.com/title/tt0018515/usercomments

⑥加载电影评论,并将其转换为带有各自情感的 pandas 数据帧

这里 0 表示负面,1 表示正面

def load_dataset(directory):data = {"sentence": [], "sentiment": []}for file_name in os.listdir(directory):print(file_name)if file_name == 'pos':positive_dir = os.path.join(directory, file_name)for text_file in os.listdir(positive_dir):text = os.path.join(positive_dir, text_file)with open(text, "r", encoding="utf-8") as f:data["sentence"].append(f.read())data["sentiment"].append(1)elif file_name == 'neg':negative_dir = os.path.join(directory, file_name)for text_file in os.listdir(negative_dir):text = os.path.join(negative_dir, text_file)with open(text, "r", encoding="utf-8") as f:data["sentence"].append(f.read())data["sentiment"].append(0)return pd.DataFrame.from_dict(data)

⑦加载训练数据集

# Load the dataset from the train_dir

train_df = load_dataset(train_dir)

print(train_df.head())

labeledBow.feat

neg

pos

unsup

unsupBow.feat

urls_neg.txt

urls_pos.txt

urls_unsup.txtsentence sentiment

0 Story of a man who has unnatural feelings for ... 0

1 Airport '77 starts as a brand new luxury 747 p... 0

2 This film lacked something I couldn't put my f... 0

3 Sorry everyone,,, I know this is supposed to b... 0

4 When I was little my parents took me along to ... 0

⑧加载测试数据集

test_dir = os.path.join(dataset_dir,'test')# Load the dataset from the train_dir

test_df = load_dataset(test_dir)

print(test_df.head())

labeledBow.feat

neg

pos

urls_neg.txt

urls_pos.txtsentence sentiment

0 Once again Mr. Costner has dragged out a movie... 0

1 This is an example of why the majority of acti... 0

2 First of all I hate those moronic rappers, who... 0

3 Not even the Beatles could write songs everyon... 0

4 Brass pictures (movies is not a fitting word f... 0

2. 文本预处理

2.1 文本统计

sentiment_counts = train_df['sentiment'].value_counts()fig =px.bar(x= {0:'Negative',1:'Positive'},y= sentiment_counts.values,color=sentiment_counts.index,color_discrete_sequence = px.colors.qualitative.Dark24,title='<b>Sentiments Counts')fig.update_layout(title='Sentiments Counts',xaxis_title='Sentiment',yaxis_title='Counts',template='plotly_white')# Show the bar chart

fig.show()

pyo.plot(fig, filename = 'Sentiments Counts.html', auto_open = True)

2.2 文本清理

定义文本清理函数

def text_cleaning(text):soup = BeautifulSoup(text, "html.parser")text = re.sub(r'\[[^]]*\]', '', soup.get_text())pattern = r"[^a-zA-Z0-9\s,']"text = re.sub(pattern, '', text)return text

应用文本清理函数

# Train dataset

train_df['Cleaned_sentence'] = train_df['sentence'].apply(text_cleaning).tolist()

# Test dataset

test_df['Cleaned_sentence'] = test_df['sentence'].apply(text_cleaning)

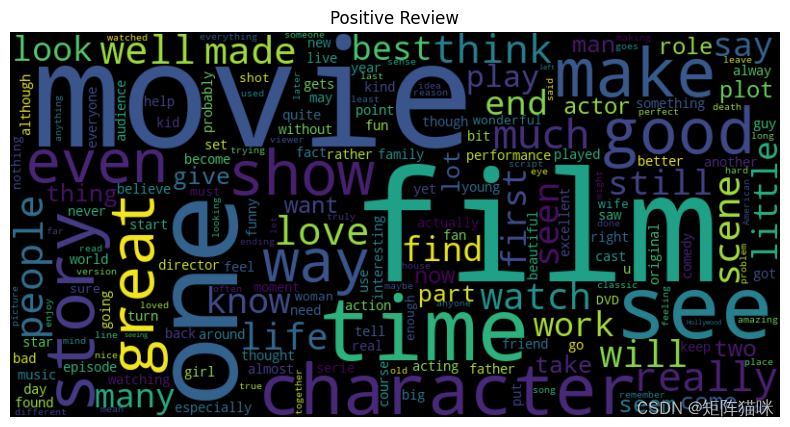

3. 词云可视化

# Function to generate word cloud

def generate_wordcloud(text,Title):all_text = " ".join(text)wordcloud = WordCloud(width=800, height=400,stopwords=set(STOPWORDS), background_color='black').generate(all_text)plt.figure(figsize=(10, 5))plt.imshow(wordcloud, interpolation='bilinear')plt.axis("off")plt.title(Title)plt.show()

3.1 正面评价

positive = train_df[train_df['sentiment']==1]['Cleaned_sentence'].tolist()

generate_wordcloud(positive,'Positive Review')

3.2 负面评论

negative = train_df[train_df['sentiment']==0]['Cleaned_sentence'].tolist()

generate_wordcloud(negative,'Negative Review')

4. 数据集拆分

4.1 拆分输入文本和目标情感的训练集和测试集

# Training data

#Reviews = "[CLS] " +train_df['Cleaned_sentence'] + "[SEP]"

Reviews = train_df['Cleaned_sentence']

Target = train_df['sentiment']# Test data

#test_reviews = "[CLS] " +test_df['Cleaned_sentence'] + "[SEP]"

test_reviews = test_df['Cleaned_sentence']

test_targets = test_df['sentiment']

4.2 将 TEST 数据分为测试和验证两部分

x_val, x_test, y_val, y_test = train_test_split(test_reviews,test_targets,test_size=0.5, stratify = test_targets)

5. 标记与编码

BERT标记化用于将原始文本转换为可输入 BERT 模型的数字输入。它对文本进行标记,并执行一些预处理,以便为模型的输入格式准备文本。让我们来了解一下 BERT 标记化模型的一些主要特点。

- BERT标记器会将单词分割成子单词。例如,单词 "geeksforgeeks “可以拆分成 “geeks””##for "和 “##geeks”。##"前缀表示子词是前一个词的延续。这减少了词汇量,有助于模型处理罕见词或未知词。

- BERT 标记符号生成器会在序列中添加 [CLS]、[SEP] 和 [MASK] 等特殊标记符号。这些标记具有特殊含义,如 :

- [CLS]用于分类,在情感分析中代表整个输入、

- [SEP]用作分隔符,即标记不同句子或片段之间的边界、

- [MASK]用于遮蔽,即在预训练过程中向模型隐藏一些标记。

- BERT 标记符号生成器将其组件作为输出:

- input_ids: 词汇标记的数字标识符

- token_type_ids: 它标识每个标记属于哪个片段或句子。

- attention_mask: 标记,告知模型哪些标记需要关注,哪些不需要关注。

加载预训练的 BERT 标记器

#Tokenize and encode the data using the BERT tokenizer

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased', do_lower_case=True)

在训练、测试和验证数据集中应用 BERT 标记化技术

max_len= 128

# Tokenize and encode the sentences

X_train_encoded = tokenizer.batch_encode_plus(Reviews.tolist(),padding=True, truncation=True,max_length = max_len,return_tensors='tf')X_val_encoded = tokenizer.batch_encode_plus(x_val.tolist(), padding=True, truncation=True,max_length = max_len,return_tensors='tf')X_test_encoded = tokenizer.batch_encode_plus(x_test.tolist(), padding=True, truncation=True,max_length = max_len,return_tensors='tf')

检查编码后的数据集

k = 0

print('Training Comments -->>',Reviews[k])

print('\nInput Ids -->>\n',X_train_encoded['input_ids'][k])

print('\nDecoded Ids -->>\n',tokenizer.decode(X_train_encoded['input_ids'][k]))

print('\nAttention Mask -->>\n',X_train_encoded['attention_mask'][k])

print('\nLabels -->>',Target[k])

Training Comments -->> Story of a man who has unnatural feelings for a pig Starts out with a opening scene that is a terrific example of absurd comedy A formal orchestra audience is turned into an insane, violent mob by the crazy chantings of it's singers Unfortunately it stays absurd the WHOLE time with no general narrative eventually making it just too off putting Even those from the era should be turned off The cryptic dialogue would make Shakespeare seem easy to a third grader On a technical level it's better than you might think with some good cinematography by future great Vilmos Zsigmond Future stars Sally Kirkland and Frederic Forrest can be seen brieflyInput Ids -->>tf.Tensor(

[ 101 2466 1997 1037 2158 2040 2038 21242 5346 2005 1037 103694627 2041 2007 1037 3098 3496 2008 2003 1037 27547 2742 199718691 4038 1037 5337 4032 4378 2003 2357 2046 2019 9577 10106355 11240 2011 1996 4689 22417 2015 1997 2009 1005 1055 84536854 2009 12237 18691 1996 2878 2051 2007 2053 2236 7984 27762437 2009 2074 2205 2125 5128 2130 2216 2013 1996 3690 23232022 2357 2125 1996 26483 7982 2052 2191 8101 4025 3733 20001037 2353 3694 2099 2006 1037 4087 2504 2009 1005 1055 24882084 2017 2453 2228 2007 2070 2204 16434 2011 2925 2307 681913728 2891 1062 5332 21693 15422 2925 3340 8836 11332 3122 199815296 16319 2064 2022 2464 4780 102 0], shape=(128,), dtype=int32)Decoded Ids -->>[CLS] story of a man who has unnatural feelings for a pig starts out with a opening scene that is a terrific example of absurd comedy a formal orchestra audience is turned into an insane, violent mob by the crazy chantings of it's singers unfortunately it stays absurd the whole time with no general narrative eventually making it just too off putting even those from the era should be turned off the cryptic dialogue would make shakespeare seem easy to a third grader on a technical level it's better than you might think with some good cinematography by future great vilmos zsigmond future stars sally kirkland and frederic forrest can be seen briefly [SEP] [PAD]Attention Mask -->>tf.Tensor(

[1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 11 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 11 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 11 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0], shape=(128,), dtype=int32)Labels -->> 0

6. 构建分类模型

6.1 加载模型

# Intialize the model

model = TFBertForSequenceClassification.from_pretrained('bert-base-uncased', num_labels=2)

Model: "tf_bert_for_sequence_classification"

_________________________________________________________________Layer (type) Output Shape Param #

=================================================================bert (TFBertMainLayer) multiple 109482240 dropout_37 (Dropout) multiple 0 classifier (Dense) multiple 1538 =================================================================

Total params: 109,483,778

Trainable params: 109,483,778

Non-trainable params: 0

_________________________________________________________________

如果当前任务与训练检查点模型的任务类似,我们就可以使用 TFBertForSequenceClassification 进行预测,而无需进一步训练。

6.2 编译模型

# Compile the model with an appropriate optimizer, loss function, and metrics

optimizer = tf.keras.optimizers.Adam(learning_rate=2e-5)

loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)

metric = tf.keras.metrics.SparseCategoricalAccuracy('accuracy')

model.compile(optimizer=optimizer, loss=loss, metrics=[metric])

6.3 训练模型

# Train the model

history = model.fit([X_train_encoded['input_ids'], X_train_encoded['token_type_ids'], X_train_encoded['attention_mask']],Target,validation_data=([X_val_encoded['input_ids'], X_val_encoded['token_type_ids'], X_val_encoded['attention_mask']],y_val),batch_size=32,epochs=3

)

Epoch 1/3

782/782 [==============================] - 513s 587ms/step - loss: 0.3445 - accuracy: 0.8446 - val_loss: 0.2710 - val_accuracy: 0.8880

Epoch 2/3

782/782 [==============================] - 432s 552ms/step - loss: 0.2062 - accuracy: 0.9186 - val_loss: 0.2686 - val_accuracy: 0.8886

Epoch 3/3

782/782 [==============================] - 431s 551ms/step - loss: 0.1105 - accuracy: 0.9615 - val_loss: 0.3235 - val_accuracy: 0.8908

7. 评估分类模型

7.1 评估模型

#Evaluate the model on the test data

test_loss, test_accuracy = model.evaluate([X_test_encoded['input_ids'], X_test_encoded['token_type_ids'], X_test_encoded['attention_mask']],y_test

)

print(f'Test loss: {test_loss}, Test accuracy: {test_accuracy}')

391/391 [==============================] - 67s 171ms/step - loss: 0.3417 - accuracy: 0.8873

Test loss: 0.3417432904243469, Test accuracy: 0.8872799873352051

②将模型和标记程序保存到本地文件夹中

path = 'path-to-save'

# Save tokenizer

tokenizer.save_pretrained(path +'/Tokenizer')# Save model

model.save_pretrained(path +'/Model')

③预测测试数据集的情感特征

pred = bert_model.predict([X_test_encoded['input_ids'], X_test_encoded['token_type_ids'], X_test_encoded['attention_mask']])# pred is of type TFSequenceClassifierOutput

logits = pred.logits# Use argmax along the appropriate axis to get the predicted labels

pred_labels = tf.argmax(logits, axis=1)# Convert the predicted labels to a NumPy array

pred_labels = pred_labels.numpy()label = {1: 'positive',0: 'Negative'

}# Map the predicted labels to their corresponding strings using the label dictionary

pred_labels = [label[i] for i in pred_labels]

Actual = [label[i] for i in y_test]print('Predicted Label :', pred_labels[:10])

print('Actual Label :', Actual[:10])

391/391 [==============================] - 68s 167ms/step

Predicted Label : ['positive', 'positive', 'positive', 'positive', 'positive', 'Negative', 'Negative', 'Negative', 'positive', 'positive']

Actual Label : ['positive', 'positive', 'positive', 'Negative', 'positive', 'Negative', 'Negative', 'Negative', 'positive', 'positive']

④分类报告

print("Classification Report: \n", classification_report(Actual, pred_labels))

Classification Report: precision recall f1-score supportNegative 0.91 0.86 0.88 6250positive 0.87 0.91 0.89 6250accuracy 0.89 12500macro avg 0.89 0.89 0.89 12500

weighted avg 0.89 0.89 0.89 12500

7.2 利用用户输入进行预测

def Get_sentiment(Review, Tokenizer=bert_tokenizer, Model=bert_model):# Convert Review to a list if it's not already a listif not isinstance(Review, list):Review = [Review]Input_ids, Token_type_ids, Attention_mask = Tokenizer.batch_encode_plus(Review,padding=True,truncation=True,max_length=128,return_tensors='tf').values()prediction = Model.predict([Input_ids, Token_type_ids, Attention_mask])# Use argmax along the appropriate axis to get the predicted labelspred_labels = tf.argmax(prediction.logits, axis=1)# Convert the TensorFlow tensor to a NumPy array and then to a list to get the predicted sentiment labelspred_labels = [label[i] for i in pred_labels.numpy().tolist()]return pred_labels

让我们用自己的评论来预测

Review ='''Bahubali is a blockbuster Indian movie that was released in 2015.

It is the first part of a two-part epic saga that tells the story of a legendary hero who fights for his kingdom and his love.

The movie has received rave reviews from critics and audiences alike for its stunning visuals,

spectacular action scenes, and captivating storyline.'''

Get_sentiment(Review)

1/1 [==============================] - 3s 3s/step

['positive']

8. 总结

在这篇文章中,我们展示了如何使用 BERT 对 IMDB 电影评论数据集进行情感分类。我们讨论了 BERT 的架构和功能,如双向上下文、WordPiece 标记化和微调。我们还介绍了加载 BERT 模型、在其基础上构建自定义分类器、训练和评估模型以及对新输入进行预测的代码和方法。BERT 在情感分类测试中取得了很高的准确率和性能,并且能够处理复杂多样的语言表达。

这篇关于基于 BERT 对 IMDB 电影评论进行情感分类的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!