Topic: "之自"

Dubbo SPI: The Adaptive Extension Mechanism (@Adaptive)

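The previous article covered the basic implementation of Dubbo SPI; this one covers Dubbo SPI's adaptive extension mechanism, which corresponds to the @Adaptive annotation. Introduction: @Adaptive is defined as follows: `public @interface Adaptive { /** parameter names in URL */ String[] value() default {}; }` `value` is a string array; through this attribute, from the URL …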
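As a rough illustration of the idea only, the sketch below mimics in Python how an adaptive extension could choose an implementation at call time from a URL parameter. It is not Dubbo's code: the registry, `select_extension`, `call_adaptive`, the parameter name `protocol`, and the example URLs are all hypothetical, and real Dubbo does this in Java.

```python
from urllib.parse import urlparse, parse_qs

# Hypothetical registry: extension name -> factory for an implementation.
EXTENSIONS = {
    "dubbo": lambda: "DubboProtocol",
    "rest": lambda: "RestProtocol",
}


def select_extension(url, keys, default):
    """Return the extension name given by the first key found in the URL query."""
    params = parse_qs(urlparse(url).query)
    for key in keys:  # plays the role of the @Adaptive value() array
        if key in params:
            return params[key][0]
    return default  # assumed fallback when no key is present


def call_adaptive(url, keys=("protocol",), default="dubbo"):
    """Resolve the extension for this URL and invoke it."""
    name = select_extension(url, keys, default)
    if name not in EXTENSIONS:
        raise KeyError(f"no extension named {name!r}")
    return EXTENSIONS[name]()
```

The point of the sketch is that the choice is deferred until a URL is available, so the same call site can reach different implementations.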

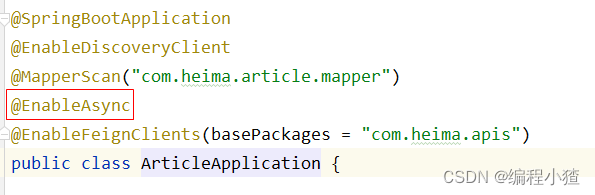

厂里资讯 (Factory News): Automatic Review of Self-Media Articles

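Self-media articles: automatic review

1) Automatic review flow for self-media articles

1. After an article is published from the self-media client, review of the article begins.
2. The review mainly covers the article's content (text and images).
3. Text is reviewed through an interface provided by a third party.
4. Images are reviewed through an interface provided by a third party; because the images are stored in MinIO, they must be downloaded before they can be reviewed.
5. If the review fails, the status of the self-media article must be updated: status 2 means review failed, status 3 means referred to manual review.
6. If the review succeeds, then in the article …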
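The flow above can be sketched roughly as follows. This is a minimal illustration, not the project's code: `review_article`, `scan_text`, `scan_image`, `download`, and the verdict strings `"pass"`, `"block"`, and `"review"` are assumptions. Only the status values 2 and 3 come from the text, and the success path is left open because the excerpt is cut off there.

```python
from enum import IntEnum


class ReviewStatus(IntEnum):
    FAILED = 2         # status 2: review failed (from the text)
    MANUAL_REVIEW = 3  # status 3: referred to manual review (from the text)


def review_article(text, image_keys, scan_text, scan_image, download):
    """Return a ReviewStatus when review does not pass, or None when it passes.

    scan_text and scan_image stand in for the third-party review interfaces
    and are assumed to return "pass", "block", or "review". download stands
    in for fetching image bytes from MinIO.
    """
    verdicts = [scan_text(text)]
    for key in image_keys:
        # Images live in object storage, so fetch the bytes before scanning.
        verdicts.append(scan_image(download(key)))
    if "block" in verdicts:
        return ReviewStatus.FAILED
    if "review" in verdicts:
        return ReviewStatus.MANUAL_REVIEW
    return None  # what happens on success is not shown in the excerpt
```

Passing the scanners and the downloader in as functions keeps the sketch independent of any particular vendor SDK or storage client.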

ABAQUS Software Training (6): Bottom-Up Meshing in the Mesh Module

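Bottom-up meshing means starting from a two-dimensional mesh and extruding or sweeping it into a three-dimensional mesh. I. Drawing the solid. First create a new solid: The resulting solid: II. Bottom-up meshing. Set the meshing technique to Bottom-up, as shown: (I quietly changed the rotation angle to 180 degrees, purely because it looks nicer.) Sweep Method. If the geometry is to be treated as a sweep, choose Sweep: Once the selection is made, click mesh: The mesh density can also be …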

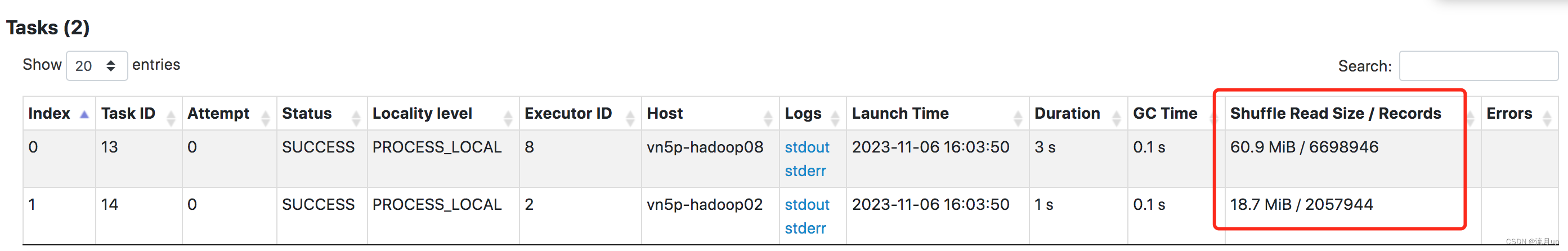

8. Spark Adaptive Query Execution (AQE): Adaptively Adjusting the Number of Shuffle Partitions

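Contents: Overview; Main features; Adaptively adjusting the number of shuffle partitions (principle, default environment configuration, modifying the configuration); Conclusion. Overview: Adaptive Query Execution (AQE) is an optimization technique in Spark SQL that uses runtime statistics to choose the most efficient query execution plan; it has been enabled by default since Apache Spark 3.2.0. As of Spark 3.0, AQE has three main features, as follows: Adaptive Query Execution (AQE) …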
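To make the idea of adjusting the shuffle partition count at runtime concrete, here is a simplified sketch that greedily merges adjacent small partitions toward a target size. It is an illustration under stated assumptions, not Spark's implementation: `coalesce_partitions` and its parameters are hypothetical names. In Spark itself the behaviour is governed by settings such as `spark.sql.adaptive.enabled`, `spark.sql.adaptive.coalescePartitions.enabled`, and `spark.sql.adaptive.advisoryPartitionSizeInBytes`.

```python
def coalesce_partitions(sizes, target_size):
    """Greedily merge adjacent shuffle partitions toward target_size.

    sizes: per-partition byte counts observed at runtime.
    Returns a list of groups, each a list of original partition indices.
    """
    groups, current, current_size = [], [], 0
    for index, size in enumerate(sizes):
        # Close the current group if adding this partition would exceed the target.
        if current and current_size + size > target_size:
            groups.append(current)
            current, current_size = [], 0
        current.append(index)
        current_size += size
    if current:
        groups.append(current)
    return groups
```

Only adjacent partitions are merged in this sketch, so each resulting group covers a contiguous range of the original partition indices.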

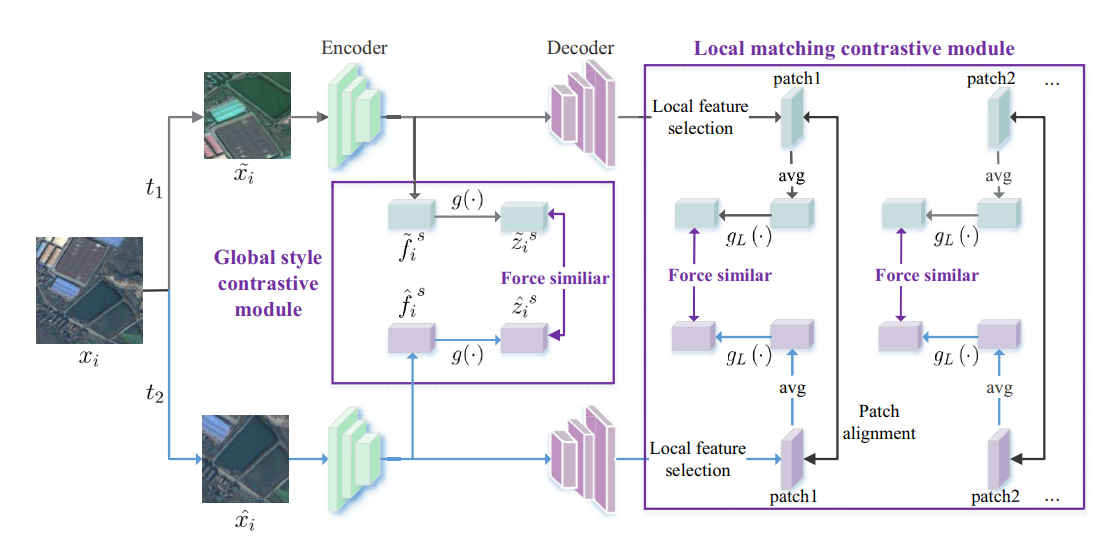

Machine Learning: Self-Supervised Learning (4): MoCo Series Translation and Summary (1)

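Momentum Contrast for Unsupervised Visual Representation Learning. Abstract: We present Momentum Contrast (MoCo) for unsupervised visual representation learning. Viewing contrastive learning as dictionary look-up, we build a dynamic dictionary with a queue and a moving-averaged encoder. This makes it possible to build a large and consistent dictionary on the fly, which facilitates contrastive …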
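The two ingredients named in the abstract, a queue and a moving-averaged encoder, can be sketched in a few lines. This is a toy version over plain lists of floats rather than real network weights; `momentum_update`, `KeyQueue`, and the default `m=0.999` are illustrative choices here, not code from the translated article.

```python
from collections import deque


def momentum_update(key_params, query_params, m=0.999):
    """Move each key-encoder parameter slowly toward the query encoder's value."""
    return [m * k + (1.0 - m) * q for k, q in zip(key_params, query_params)]


class KeyQueue:
    """Fixed-size FIFO dictionary of encoded keys."""

    def __init__(self, max_size):
        # deque with maxlen drops the oldest keys automatically when full.
        self.keys = deque(maxlen=max_size)

    def enqueue(self, batch_keys):
        """Add the newest batch of keys, evicting the oldest if needed."""
        self.keys.extend(batch_keys)
```

Because the key encoder changes slowly, keys produced at different steps stay comparable, while the queue lets the dictionary hold far more keys than a single batch provides.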

Deep Learning: A Roundup of Self-Supervised Models

1. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. paper: https://arxiv.org/pdf/1810.04805v2.pdf code: GitHub - google-research/bert: TensorFlow code and pre-trained mo

8.spark自适应查询-AQE之自适应调整Shuffle分区数量

概述 自适应查询执行(AQE)是 Spark SQL中的一种优化技术,它利用运行时统计信息来选择最高效的查询执行计划,自Apache Spark 3.2.0以来默认启用该计划。从Spark 3.0开始,AQE有三个主要功如下 自适应查询AQE(Adaptive Query Execution) 自适应调整Shuffle分区数量 原理默认环境配置修改配置 动态调整Join策略动态优化倾斜的 Jo

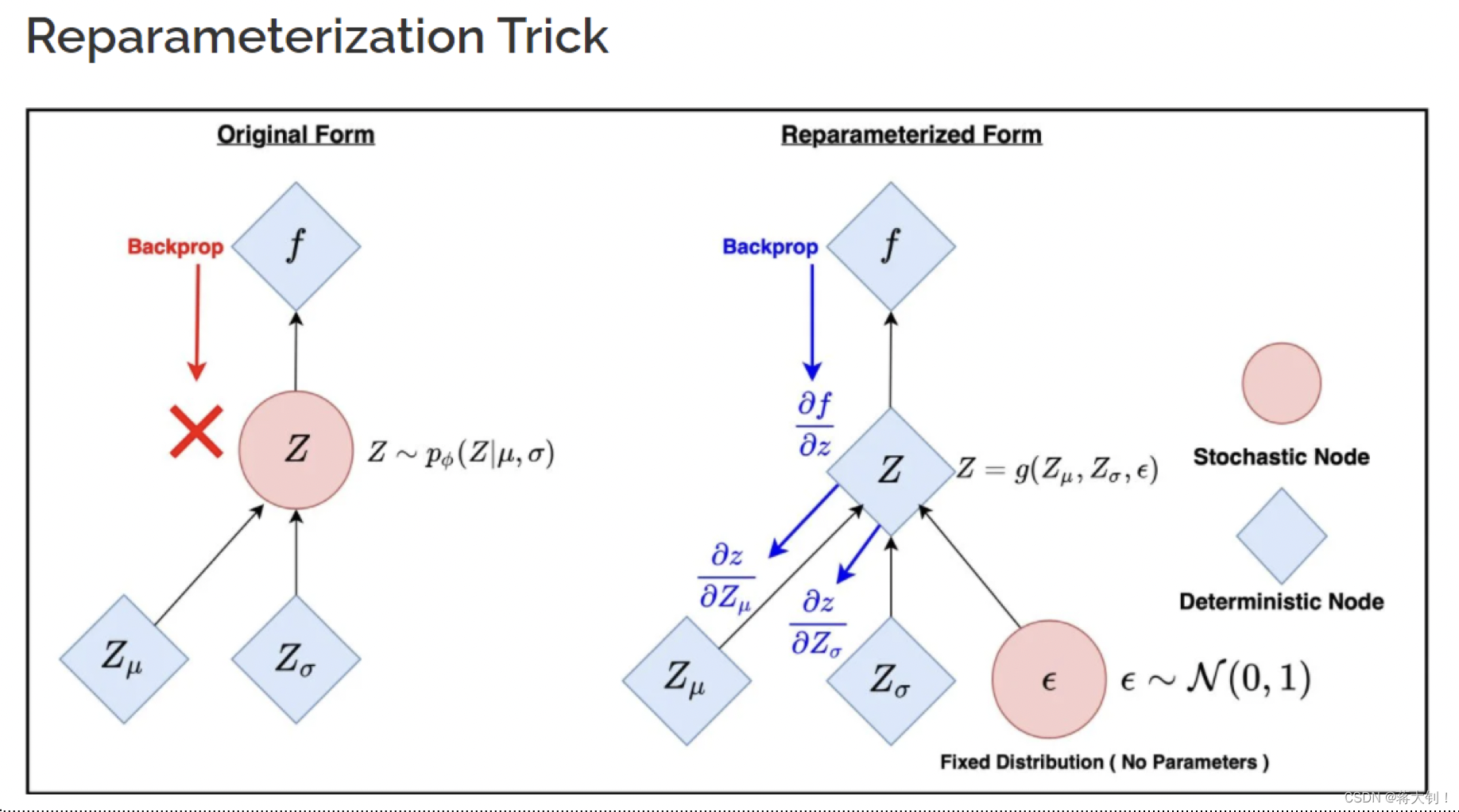

Advanced Artificial Intelligence: Auto-encoders

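Contents: Traditional (Vanilla/Classical) Autoencoder; Variational Autoencoder; Kullback–Leibler divergence; Reparameterization. This chapter spends most of its time on model frameworks and concepts and does not seem to involve much computation; the emphasis is on understanding the concepts. Traditional (Vanilla/Classical) …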
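The excerpt only names the Kullback–Leibler divergence and reparameterization, so the sketch below assumes the usual variational autoencoder setup: a diagonal Gaussian posterior compared against a standard normal prior. The function names and the list-of-floats representation are illustrative assumptions, not taken from the article.

```python
import math
import random


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) )."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


def reparameterize(mu, log_var, rng=random):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1).

    The randomness is isolated in eps, so z is a deterministic
    function of mu and log_var once eps is drawn.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]
```

Working with `log_var` instead of the variance itself keeps the variance positive without any explicit constraint.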