Topic: stop

How to fix the boot-time error STOP: c0000218

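"STOP: c0000218 {Registry File Failure}" is a very typical error message, caused by a hard-disk error or a damaged hard disk. The fix is as follows: 1. Boot the computer from the Windows XP CD. If prompted, select whatever options are required to boot from the CD. 2. When prompted to choose repair or recovery, press R. This starts the Microsoft Recovery Console. 3. When prompted, type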

Turning off SpyGlass lint rules (disable/stop/waiver)

1. Disabling checks in Verilog: SpyGlass lint can disable specific checks through comments in the Verilog code, as follows: // spyglass disable_block xxx // ... verilog code // spyglass enable_block xxx where xxx is the rule being disabled. Between disable_block and enable_b

Pitfalls of driving Google Chrome with Selenium from Java (part 3): ExecuteException: The stop timeout of 2000 ms was exceeded

Error message: INFORMATION: Unable to drain process streams. Ignoring but the exception being swallowed follows. org.apache.commons.exec.ExecuteException: The stop timeout of 2000 ms was exceeded (Exit value:

Android 13: disabling the Force Stop button in Settings

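Overview: Android 13 ROM development overview. Contents: 1. Introduction 2. Problem analysis 3. Code changes 4. Build 5. Easter egg. 1. Introduction: disable the Force Stop ("強制停止") button in Settings, i.e. the button shown below. 2. Problem analysis: locate the code from the button text by searching for "Force stop" or "強制停止"; result: ./packages/apps/Settings/res/values/strings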

RabbitMQ: a stopped virtual host cannot be restarted

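After going in through the management console, this virtual host simply would not restart, and restarting Docker and RabbitMQ did not help either: docker exec -it <CONTAINER_ID_OR_NAME> /bin/bash, then rabbitmqctl stop_app and rabbitmqctl start_app. In the end, deleting the virtual host and recreating it worked: rabbitmqctl delete_vhost / rabbitmqctl add_v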

Three ways to create threads in Java, and why stop() and suspend() are discouraged

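Contents: 1 Implementing threads (1.1 Extending the Thread class; 1.2 Implementing Runnable; 1.3 Extending vs. implementing; 1.4 Using a thread pool) 2 The stop and suspend methods. 1 Implementing threads: before Java 5 there were two approaches, using new Thread() and new Thread(runnable) respectively. The first calls the thread's own run method directly, so we usually use a Thread subclass, i.e. new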

OS boots up and then stops working when the / directory is full

The GNOME UI gives no response at the command line. Telnet in from another machine and clean up some space in the / directory; the system then works normally again.

Stop-the-world (STW)

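Reposted from: http://f.dataguru.cn/thread-363910-1-1.html. The "stop-the-world" mechanism, STW for short, means that while a garbage-collection algorithm runs, all threads of the Java application other than the GC helper threads are suspended. It is a global pause in Java: all Java code stops; native code can keep running but cannot interact with the JVM. It is mostly caused by GC. Thread dumps, dead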

STM32 cannot wake up after entering STOP mode

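Cause: the code that enters STOP mode must not be placed inside an interrupt service routine (ISR); doing so prevents the MCU from waking up. In testing so far, both Sleep mode and Standby mode can be entered from an ISR; only STOP mode cannot.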

Docker commands to stop and restart containers: start/stop/restart explained (container lifecycle management tutorial, part 2)

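Docker provides several commands for managing the container lifecycle, including start, stop and restart. These commands let users control whether a container is running. 1. docker start. Syntax: docker start [OPTIONS] CONTAINER [CONTAINER...] Purpose: starts one or more stopped Docker containers. Options: --attach, -a: attach STDOUT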

Common Docker commands, practical and clearly explained (rm, stop, start, kill, logs, diff, top, cp, restart ...)

1. Viewing Docker information (version, info): # show the Docker version $ docker version # show system-wide Docker information $ docker info 2. Image operations (search, pull, images, rmi, history)

Docker cannot stop or rm a specific container

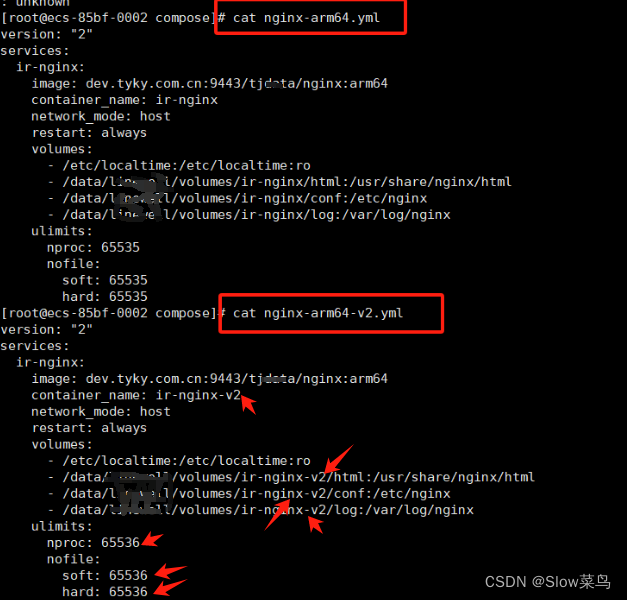

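Today I wanted to reload Nginx deployed in a Docker container, using docker exec -it ir-nginx nginx -s reload, but it failed with an error. I then tried stopping and starting the container, only to find that docker restart, docker stop, docker kill and docker exec all failed. So I used system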

The JVM's most frustrating pain point: stop the world

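1. How GC threads, garbage collectors and GC algorithms relate: GC threads work together with a garbage collector, which applies its own collection algorithm to a given memory region. For example, the young generation is typically collected with the ParNew collector, which uses a copying algorithm to clean up garbage in the young generation. 2. Can new objects still be created during GC? No: during garbage collection the Java system cannot create objects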

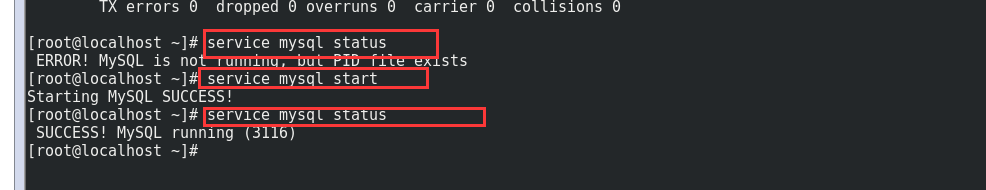

Starting (start), stopping (stop) and other operations for different MySQL versions

I. MySQL 5.7 and earlier: 1. Check MySQL status: service mysql status 2. Stop MySQL: service mysql stop 3. Start MySQL: service mysql start 4. Restart the service: service mysql restart II. MySQL 8.0 and later: 1. Check MySQL status: service mysq

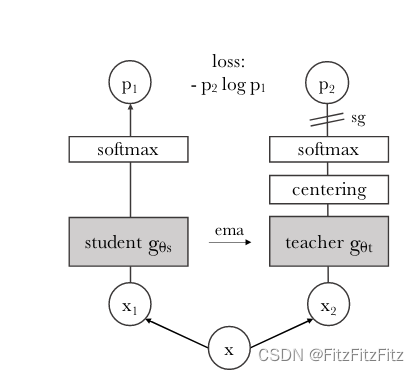

Exponential moving average (EMA) and stop-gradient (sg) in the DINO architecture

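Overview of DINO: in DINO, the teacher and student networks each predict a one-dimensional embedding. To train the student, we need a loss function that keeps pulling the student's output toward the teacher's. Softmax combined with a cross-entropy loss is a common way to make the student's output match the teacher's. Specifically, a softmax maps the teacher's and the student's embedding vectors to values between 0 and 1, and the cross-entropy between the two vectors is computed. In this way, during training, the student model can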
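The loss described above, together with the EMA teacher update and stop-gradient from the title, can be sketched in plain Python. This is a minimal illustration under stated assumptions, not DINO's actual implementation: the function names are hypothetical, the temperatures (0.1 for the student, 0.04 for the teacher) and the momentum 0.996 are illustrative defaults, and other parts of DINO such as centering the teacher output are omitted. Because plain Python has no autodiff, stop-gradient is represented only by treating the teacher probabilities as constants.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Map raw embedding scores to a probability vector (entries in (0, 1), summing to 1)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(p_teacher: List[float], p_student: List[float]) -> float:
    """H(P_t, P_s) = -sum_i P_t[i] * log P_s[i]."""
    return -sum(pt * math.log(ps) for pt, ps in zip(p_teacher, p_student))


def dino_style_loss(student_logits: List[float], teacher_logits: List[float],
                    tau_s: float = 0.1, tau_t: float = 0.04) -> float:
    # Stop-gradient: in an autodiff framework the teacher probabilities would be
    # detached so that no gradient flows into the teacher network. Here they are
    # simply constants. A lower teacher temperature sharpens its distribution.
    p_t = softmax(teacher_logits, tau_t)
    p_s = softmax(student_logits, tau_s)
    return cross_entropy(p_t, p_s)


def ema_update(teacher_params: List[float], student_params: List[float],
               momentum: float = 0.996) -> List[float]:
    """Teacher <- momentum * teacher + (1 - momentum) * student (no gradient step for the teacher)."""
    return [momentum * t + (1.0 - momentum) * s
            for t, s in zip(teacher_params, student_params)]
```

Only the student would be updated by gradient descent on `dino_style_loss`; the teacher follows the student slowly through `ema_update`, for example `ema_update([1.0, 0.0], [0.0, 1.0], momentum=0.5)` gives `[0.5, 0.5]`.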

Hadoop: "no namenode to stop" and other issues

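When I restarted Hadoop after several months, the namenode would not start (running bin/stop-all.sh reported "no namenode to stop"). Searching online for "no namenode to stop" turned up all sorts of fixes, such as formatting the namenode, but none of them worked. Running out of patience, I reinstalled Hadoop, Cygwin and the JDK from scratch. Below I note a few things to watch out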

MongoDB: net stop mongoDB fails with "System error 5 has occurred. Access is denied."

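When stopping or starting the MongoDB database from the command line with net stop / net start mongoDB, the terminal reports the following error: Cause: insufficient permissions; starting the MongoDB service requires running CMD as administrator. Solution: the Command Prompt is located at C: drive --> Windows --> System32; search that folder for cmd.exe --> right-click --> Run as administrator. Then enter the start/stop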

Installing nginx step by step on Ubuntu: make: *** No rule to make target `build', needed by `default'. Stop.

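Step 1: set up the Node.js environment. Note: on an Alibaba Cloud Ubuntu server, the default directory after login is /root; I suggest moving to the parent directory of /root before doing the following: type cd .. and press Enter. If your server is brand new, first update the package sources: type apt-get update and press Enter. Install curl to download resources: type apt-get install -y curl and press Enter. Download the Node installation script: type curl -sL https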

Spark cluster cannot be stopped: no org.apache.spark.deploy.master.Master to stop

Question: a while ago I ran into a Spark cluster that could not be stopped. Running ./stop-all.sh printed: no org.apache.spark.deploy.master.Master to stop no org.apache.spark.deploy.worker.Worker to stop Solution: after starting, Spark creates temporary files in the /tmp directory, /tmp/

SEVERE: Catalina.stop java.net.ConnectException: Connection refused: connect

Error when stopping Tomcat: [root@localhost bin]# ./shutdown.sh Using CATALINA_BASE: /usr/local/tomcat Using CATALINA_HOME: /usr/local/tomcat Using CATALINA_TMPDIR: /usr/local/tomcat/temp Using JRE_HOME: /usr/

Fixing the Makefile error "missing separator. Stop."

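When running make, you may see the following error: missing separator. Stop. Fix: start the offending line with a Tab character; very often the line was indented with spaces instead.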
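Because the cause is usually mechanical, it can be checked before running make. Below is a minimal Python sketch of such a check; `find_space_indented_recipes` and `tabify_recipes` are hypothetical helpers, not part of make or any existing tool. The rule detection is a simple heuristic (a top-level `target:` line starts a rule, blank and comment lines do not end it, backslash-continued lines are skipped), so it does not understand every construct GNU make accepts, such as conditionals indented with spaces.

```python
import re
from typing import List

# Heuristic rule header: a top-level "target: prerequisites" line.
# Assignments such as "CC := gcc" or "X = a:b" are rejected.
RULE_HEADER = re.compile(r"^[^\s#=][^=]*:(?!=)")


def find_space_indented_recipes(lines: List[str]) -> List[int]:
    """Return 1-based numbers of recipe lines indented with spaces instead of a Tab."""
    bad = []
    in_rule = False
    prev_continues = False
    for lineno, raw in enumerate(lines, start=1):
        line = raw.rstrip("\n")
        if prev_continues:
            pass  # continuation of the previous line; indentation is free-form
        elif line.strip() == "" or line.lstrip().startswith("#"):
            pass  # blank lines and comments do not end a rule
        elif line.startswith("\t"):
            pass  # correctly Tab-indented recipe line
        elif line.startswith(" "):
            if in_rule:
                bad.append(lineno)  # make would report "missing separator" here
        else:
            # Any other top-level line: a rule header starts a rule, anything else ends it.
            in_rule = bool(RULE_HEADER.match(line))
        prev_continues = line.endswith("\\")
    return bad


def tabify_recipes(lines: List[str]) -> List[str]:
    """Replace the leading spaces of the flagged recipe lines with a single Tab."""
    bad = set(find_space_indented_recipes(lines))
    return ["\t" + line.lstrip(" ") if i in bad else line
            for i, line in enumerate(lines, start=1)]
```

For example, reading a Makefile with `open("Makefile").readlines()` and passing the list to `find_space_indented_recipes` reports the lines to fix; `tabify_recipes` returns a corrected copy without touching the file.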

Optimizing overly long JVM stop-the-world pauses

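Symptom: Xiaomi runs a fairly large shared offline HBase cluster with many users. Every day a large number of offline MapReduce or Spark analysis jobs access it, while a lot of data is also written into it via Replication from other online clusters. Because the cluster is under heavy read/write pressure and the offline analysis jobs are not latency-sensitive, its G1GC MaxGCPauseMillis was set to 500 ms. Over time, however, we noticed a new phenomenon: thread STW time could exceed 3 seconds, yet the actual GC STW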

How Python handles Thread start, stop and reclamation

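For the general question of how to create and stop a Python Thread, search online. Answer: a Python threading.Thread object can only be started once. To start again after stopping, you must create a new object; simply create a new one each time you need it, and the system reclaims it after use.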
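The answer above can be illustrated with a short sketch. The `Worker` class and its methods are hypothetical; the only library pieces used are `threading.Thread` and `threading.Event` from the standard library. Python has no public API for forcibly killing a thread, so "stop" here means the thread cooperatively checks an Event and leaves its loop, and each `start()` builds a fresh Thread object because a finished one cannot be started again.

```python
import threading
import time


class Worker:
    """Runs a loop in a background thread that can be stopped and started again."""

    def __init__(self, interval: float = 0.01):
        self.interval = interval
        self.ticks = 0
        self._stop_event = threading.Event()
        self._thread = None

    def _run(self) -> None:
        # wait() returns True as soon as stop() sets the event, so it doubles
        # as an interruptible sleep between iterations.
        while not self._stop_event.wait(self.interval):
            self.ticks += 1

    def start(self) -> None:
        if self._thread is not None and self._thread.is_alive():
            raise RuntimeError("worker is already running")
        self._stop_event.clear()
        # A Thread object can only be started once, so create a new one each time.
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def stop(self) -> None:
        self._stop_event.set()
        if self._thread is not None:
            self._thread.join()
            self._thread = None  # drop the reference so the finished Thread can be reclaimed


if __name__ == "__main__":
    w = Worker()
    w.start(); time.sleep(0.1); w.stop()
    w.start(); time.sleep(0.1); w.stop()  # "restart" works because a new Thread is created

    t = threading.Thread(target=lambda: None)
    t.start(); t.join()
    try:
        t.start()  # reusing the same Thread object raises RuntimeError
    except RuntimeError as exc:
        print("second start() failed:", exc)
```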

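Vue food-delivery app, part 18: shop details / food details: using ref to call a method on the food-detail component to show or hide the child component, passing the current food object to the child via props for display, and using @click.stop to keep the click from propagating to the outer element so the current click handler always takes effect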

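1) Write a method that shows/hides the current component: <template><!-- v-if shows/hides the current component --><div class="food" v-if="isShow">methods: {toggleShow () {this.isShow = !this.isShow}}, 2) Use ref to call the child component's show/hide method in src/pages/shops/goods/goods.vue. Key points: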

SparkR runtime error: Re-using existing Spark Context. Please stop SparkR with sparkR.stop() or restart R to c

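Running in the SparkR shell produced the following error: Re-using existing Spark Context. Please stop SparkR with sparkR.stop() or restart R to create a new Cause: the previous session was not closed after use. Solution: close the session opened by the previous program with the following command: sparkR.stop()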

How to start/restart/stop the Apache server on Ubuntu

1. Start: sudo /etc/init.d/apache2 start
2. Restart: sudo /etc/init.d/apache2 restart
3. Stop: sudo /etc/init.d/apache2 stop