本文主要是介绍ImplicitDeepfake:通过使用NeRF和高斯溅射的隐式Deepfake生成的合理换脸,希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

ImplicitDeepfake: Plausible Face-Swapping through Implicit Deepfake Generation using NeRF and Gaussian Splatting

ImplicitDeepfake:通过使用NeRF和高斯溅射的隐式Deepfake生成的合理换脸

格奥尔基·斯坦尼舍夫斯基 1 ∗ 雅库布·斯特茨凯维奇 1 ∗Tomasz Szczepanik1∗

Sławomir Tadeja2 Sjanawomir Tadeja 2Jacek Tabor1 Jacek塔博尔 1Przemysław Spurek1 Przemysbilaw Spurek 1

∗Equal contribution ∗ 平等贡献

1Jagiellonian University, Faculty of Mathematics and Computer Science, Cracow, Poland

1 Jagiellonian大学,数学和计算机科学学院,克拉科夫,波兰

2Department of Engineering, University of Cambridge, Cambridge, UK

2 英国剑桥剑桥大学工程系

przemyslaw.spurek@uj.edu.pl

Abstract 摘要

Numerous emerging deep-learning techniques have had a substantial impact on computer graphics. Among the most promising breakthroughs are the recent rise of Neural Radiance Fields (NeRFs) and Gaussian Splatting (GS). NeRFs encode the object’s shape and color in neural network weights using a handful of images with known camera positions to generate novel views. In contrast, GS provides accelerated training and inference without a decrease in rendering quality by encoding the object’s characteristics in a collection of Gaussian distributions. These two techniques have found many use cases in spatial computing and other domains. On the other hand, the emergence of deepfake methods has sparked considerable controversy. Such techniques can have a form of artificial intelligence-generated videos that closely mimic authentic footage. Using generative models, they can modify facial features, enabling the creation of altered identities or facial expressions that exhibit a remarkably realistic appearance to a real person. Despite these controversies, deepfake can offer a next-generation solution for avatar creation and gaming when of desirable quality. To that end, we show how to combine all these emerging technologies to obtain a more plausible outcome. Our ImplicitDeepfake11The source code is available at: GitHub - quereste/implicit-deepfake: Official repository of paper "ImplicitDeepfake: Plausible Face-Swapping through Implicit Deepfake Generation using NeRF and Gaussian Splatting"

源代码可在https://github.com/quereste/implicit-deepfake上获得

许多新兴的深度学习技术对计算机图形学产生了重大影响。其中最有希望的突破是最近兴起的神经辐射场(NeRFs)和高斯溅射(GS)。NeRFs使用少量具有已知相机位置的图像将对象的形状和颜色编码到神经网络权重中,以生成新的视图。相比之下,GS通过将对象的特征编码在高斯分布的集合中,提供了加速的训练和推理,而不会降低渲染质量。这两种技术在空间计算和其他领域中有许多用例。另一方面,deepfake方法的出现引发了相当大的争议。这种技术可以有一种人工智能生成的视频形式,可以很好地模仿真实的镜头。 使用生成模型,他们可以修改面部特征,从而能够创建改变的身份或面部表情,这些面部表情对真人表现出非常逼真的外观。尽管存在这些争议,但deepfake可以提供下一代化身创建和游戏的解决方案。为此,我们将展示如何将所有这些新兴技术联合收割机结合起来,以获得更合理的结果。 我们的ImplicitDeepfake 1 uses the classical deepfake algorithm to modify all training images separately and then train NeRF and GS on modified faces. Such relatively simple strategies can produce plausible 3D deepfake-based avatars.

使用经典的deepfake算法分别修改所有训练图像,然后在修改后的人脸上训练NeRF和GS。这种相对简单的策略可以产生可信的基于3D deepfake的化身。

1Introduction 一、导言

Manipulating images and videos of human avatars has a long history and spikes many controversies (Zhang, 2022). Moreover, a number of dedicated to this task software tools and packages like Adobe Photoshop22Official Adobe Photoshop - Photo & Design Software

操纵人类化身的图像和视频有着悠久的历史,并引发了许多争议(Zhang,2022)。此外,一些专门用于此任务的软件工具和软件包,如Adobe Photoshop 2 or Adobe Lightroom33Download Adobe Photoshop Lightroom | Photo editing and organizing

或Adobe Lightning 3 have been developed and become commercially available (Maares et al., 2021). In this context, realistic facial feature modification that allows for a convincing avatar creation, i.e. resembling someone else appearance to a great extent, remains a non-trivial task that can be supported with emerging deepfake technology (Waseem et al., 2023).

已经被开发出来并可商购获得(Maares等人,2021年)。在这种情况下,允许令人信服的化身创建的真实面部特征修改,即在很大程度上类似于其他人的外观,仍然是可以用新兴的deepfake技术支持的重要任务(Waseem等人,2023年)。

Neural rendering on original imagesNeural rendering on modified imagesDeepfake 2D

Figure 1: Thanks to neural rendering, we are able to aggregate information from 2D images to produce novel views of 3D objects (see left-hand column). In ImplicitDeepfake, we use 2D deepfake on 2D images, and then we use neural rendering to obtain 3D deepfake (see right-hand column).

图1:由于神经渲染,我们能够从2D图像中聚合信息,以生成3D对象的新视图(见左栏)。在ImplicitDeepfake中,我们在2D图像上使用2D deepfake,然后使用神经渲染来获得3D deepfake(见右栏)。

The deepfake term is a combination of deep learning and fake referring to manipulated media content created using machine learning and artificial neural networks (Waseem et al., 2023). Various deepfake methods employ deep generative models like autoencoders (Tewari et al., 2018) or generative adversarial networks (GAN) (Kumar et al., 2020) for examining the facial characteristics and mimics of a given individual. Such analyses enable the creation of manipulated facial pictures that imitate comparable expressions and motions (Pan et al., 2020).

Deepfake术语是深度学习和假的组合,指的是使用机器学习和人工神经网络创建的操纵媒体内容(Waseem等人,2023年)。各种deepfake方法采用深度生成模型,如自动编码器(Tewari等人,2018)或生成对抗网络(GAN)(Kumar等人,2020)用于检查给定个体的面部特征和模仿。这样的分析使得能够创建模仿可比较的表情和运动的经操纵的面部图片(Pan等人,2020年)。

The availability of user-friendly tools, including DeepFaceLab (Liu et al., 2023), smartphone applications such as Zao44https://zaodownload.com

用户友好工具的可用性,包括DeepFaceLab(Liu等人,2023年),智能手机应用程序,如Zao 4 and FakeApp55FakeApp 2.2 - Download for PC Free 关于FakeApp 5 has simplified the use and fostered adoption of deepfake by nonprofessionals helping them to swap faces with any target person seamlessly for any desired purpose. Deepfake generation and detection are characterised by intense competition, with defenders (i.e., detectors) and adversaries (i.e. generators) continuously trying to outdo each other. A range of notable advances have been made in both areas in recent years (Turek, 2019).

它简化了非专业人士对deepfake的使用,并促进了他们对deepfake的采用,帮助他们为任何期望的目的与任何目标人物无缝交换面孔。Deepfake生成和检测的特点是激烈的竞争,与捍卫者(即,检测器)和对手(即生成器)不断试图超越对方。近年来,这两个领域都取得了一系列显著进展(Turek,2019)。

To extend this growing body of research, we present in this paper ImplicitDeepfake a first model that produces a 3D deepfake. To obtain real word 3D objects, we use novel, state-of-the-art machine learning-based methods such as Neural Radiance Fields (NeRFs) (Mildenhall et al., 2020) and Gaussian Splatting (GS) (Kerbl et al., 2023).

为了扩展这一不断增长的研究,我们在本文中提出了ImplicitDeepfake,它是第一个产生3D deepfake的模型。为了获得真实的单词3D对象,我们使用新颖的、最先进的基于机器学习的方法,例如神经辐射场(NeRFs)(Mildenhall等人,2020)和高斯溅射(GS)(Kerbl等人,2023年)。

| Gaussian Splatting 高斯溅射 |

|

|

|

|---|---|---|---|

| NeRF |

|

|

|

Figure 2:In our paper, we present ImplicitDeepfake train on GS and NeRF. As we can see, both models give similar results, with the former producing slightly better deepfakes.

图2:在我们的论文中,我们在GS和NeRF上展示了ImplicitDeepfake训练。正如我们所看到的,两个模型给予相似的结果,前者产生的deepfake略好。

The NeRF concept, first introduced by (Mildenhall et al., 2020), offers a unique approach for generating new views of intricate scenes from a limited set of 2D images with documented camera positional coordinates. NeRFs can create detailed and complex scenes from new perspectives by combining input images and established computer graphics techniques. The strength of NeRF lies in its capacity to extract information from 2D images and camera positions to reconstruct fully-formed 3D objects. It is worth mentioning that the network incorporates the 3D structure, color, shape, and texture of the face into its weights. After training, the network is capable of generating 2D views from any imaginable viewpoint.

NeRF概念,首先由(Mildenhall等人,2020),提供了一种独特的方法,从一组有限的二维图像与记录的相机位置坐标生成复杂场景的新视图。NeRFs可以通过结合输入图像和现有的计算机图形技术,从新的角度创建详细而复杂的场景。NeRF的优势在于它能够从2D图像和相机位置中提取信息,以重建完整的3D对象。值得一提的是,该网络将面部的3D结构、颜色、形状和纹理纳入其权重。经过训练后,该网络能够从任何可以想象的视点生成2D视图。

The GS (Kerbl et al., 2023) emerges as a compelling alternative for high-quality 3D scene rendering, exhibiting comparable visual fidelity to existing methods while achieving significantly faster inference and training times. This efficiency stems from the lightweight representation of 3D objects as a collection of Gaussian distributions acting like efficient proxies for traditional meshes.

GS(Kerbl等人,2023)作为高质量3D场景渲染的一个引人注目的替代方案出现,表现出与现有方法相当的视觉保真度,同时实现更快的推理和训练时间。这种效率源于3D对象的轻量级表示,作为高斯分布的集合,就像传统网格的有效代理一样。

These rendering methods, i.e. NeRFs and GS, can be considered converters from 2D to 3D objects. At the input, we provide 2D images with viewing positions, and as output, we can obtain 3D objects. In the context of 3D deepfake, we can use a classical model dedicated to 2D images to produce input to the NeRF-based model. ImplicitDeepfake apply 2D deepfake for all 2D images separately and then add to NeRF architecture (see Fig. 1). In practice, deepfake technology produces consistent image swapping, and we can directly use natural rendering to produce 3D faces. This solution is universal and can be used with many different approaches based on NeRF or (GS) (Kerbl et al., 2023).

这些渲染方法,即NeRF和GS,可以被认为是从2D到3D对象的转换器。在输入端,我们提供具有查看位置的2D图像,并且作为输出,我们可以获得3D对象。在3D deepfake的上下文中,我们可以使用专用于2D图像的经典模型来生成基于NeRF的模型的输入。ImplicitDeepfake将2D deepfake分别应用于所有2D图像,然后添加到NeRF架构(见图1)。在实践中,deepfake技术会产生一致的图像交换,我们可以直接使用自然渲染来生成3D人脸。该解决方案是通用的,并且可以与基于NeRF或(GS)的许多不同方法一起使用(Kerbl等人,2023年)。

The contributions of our paper can be summarized as follows:

我们的论文的贡献可以总结如下:

- •

We propose a new method dubbed ImplicitDeepfake which combines neural rendering procedure with 3D deepfake technology.

·我们提出了一种名为ImplicitDeepfake的新方法,它将神经渲染过程与3D deepfake技术相结合。 - •

We show that ImplicitDeepfake can produce consistent face swapping, which allows a direct application of neural rendering on the deepfake output.

我们证明了ImplicitDeepfake可以产生一致的人脸交换,这允许在deepfake输出上直接应用神经渲染。 - •

The 2D deepfake produces consistent images, which can be combined with NeRF and GS (see Fig. 2).

·2D deepfake产生一致的图像,可以与NeRF和GS结合使用(见图2)。

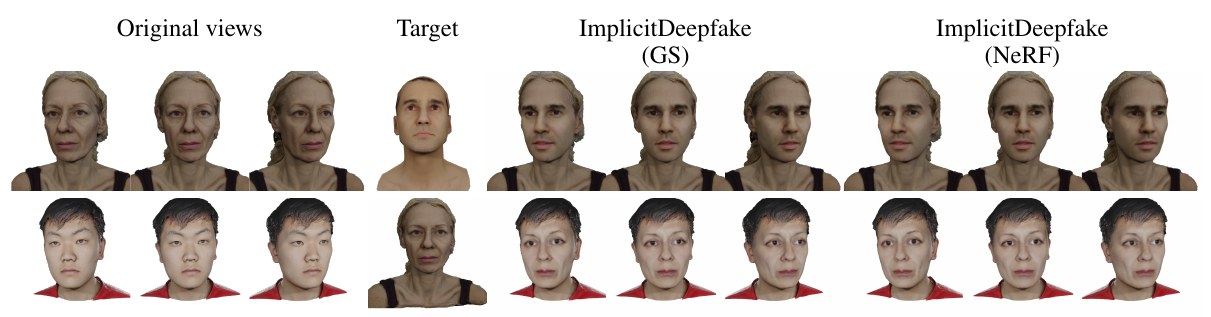

Figure 3:Comparison between ImplicitDeepfake train on GS and NeRF, respectively. In the first column, we see the original input 3D avatars. Then, we present an image of the target celebrity for deepfake. In the last two columns, we present the results obtained with the help of GS and NeRF. As we can observe, GS generally provides more visually plausible renders.

图3:分别在GS和NeRF上的ImplicitDeepfake火车之间的比较。在第一列中,我们看到原始输入的3D化身。然后,我们为deepfake呈现目标名人的图像。在最后两列中,我们展示了在GS和NeRF的帮助下获得的结果。正如我们可以观察到的,GS通常提供更直观的渲染。

2Related Works 2相关作品

Here, we will discuss various methods proposed for face swapping in images and videos. In most cases, authors use large generative models like autoencoders (Tewari et al., 2018) and generative adversarial networks (GAN) (Kumar et al., 2020). Typically, performing a face swap involves three primary stages (Waseem et al., 2023). Firstly, the algorithm identifies faces in both the source and target videos. Subsequently, the method substitutes the target face’s nose, mouth, and eyes with the corresponding features from the source face. The color and lighting of the candidate’s facial image are modified to ensure a smooth integration of the two faces. Finally, the overlapping region undergoes match distance computation to assess and rank the quality of the merged candidate replacement.

在这里,我们将讨论在图像和视频中进行人脸交换的各种方法。在大多数情况下,作者使用大型生成模型,如自动编码器(Tewari等人,2018)和生成对抗网络(GAN)(Kumar等人,2020年)。通常,执行换脸涉及三个主要阶段(Waseem等人,2023年)。该算法首先对源视频和目标视频中的人脸进行识别。随后,该方法用源面部的相应特征替换目标面部的鼻子、嘴和眼睛。候选人的面部图像的颜色和照明被修改,以确保两张脸的平滑融合。最后,重叠区域进行匹配距离计算,以评估和排序合并的候选替换的质量。

Initially, classical convolutional neural networks (CNNs) were employed for generating deep fakes (Korshunova et al., 2017). However, these methods are limited, as they can only transform individual images and are unsuitable for creating high-quality videos.

最初,经典卷积神经网络(CNN)被用于生成深度伪像(Korshunova等人,2017年)。然而,这些方法是有限的,因为它们只能转换单个图像,不适合创建高质量的视频。

In contrast, Reddit66https://www.reddit.com/r/deepfakes/ 相比之下,Reddit 6 introduced a deepfake video creation method that employs an autoencoder architecture. This approach involves a deepfake face-swapping autoencoder network consisting of one encoder and two decoders. Throughout the training phase, the encoder and the two decoders share parameters. Such autoencoder-based technique is utilized by different face-swapping applications, including DeepFaceLab (Liu et al., 2023) and DFaker77GitHub - dfaker/df: Larger resolution face masked, weirdly warped, deepfake,

介绍了一种使用自动编码器架构的deepfake视频创建方法。这种方法涉及由一个编码器和两个解码器组成的deepfake面部交换自动编码器网络。在整个训练阶段,编码器和两个解码器共享参数。这种基于自动编码器的技术由不同的面部交换应用利用,包括DeepFaceLab(Liu等人,2023)和DFaker 7.

The next generative models used in deepfake were generative adversarial networks (GANs). For example, the Face-Swap GAN (FS-GAN) utilizes an encoder-decoder architecture as its generator, along with antagonistic and perceptive losses, to enhance the automated coding system. Including counter losses has improved image reconstruction efficiency while using perceptual loss has helped align the generated face with the input image. On the other hand, the RSGAN (Natsume et al., 2018) utilizes two variational autoencoders (VAE) to produce distinct latent vector embeddings for hair and face areas. These embeddings are subsequently merged to generate a face that has been swapped.

Deepfake中使用的下一个生成模型是生成对抗网络(GAN)。例如,Face-Swap GAN(FS-GAN)利用编码器-解码器架构作为其生成器,沿着对抗性和感知性损失,以增强自动编码系统。包括计数器损失提高了图像重建效率,而使用感知损失有助于将生成的面部与输入图像对齐。另一方面,RSGAN(Natalie等人,2018)利用两个变分自编码器(VAE)为头发和面部区域生成不同的潜在向量嵌入。这些嵌入随后被合并以生成已交换的面。

FSNet (Natsume et al., 2019) simplifies the pre- and post-processing phases by removing their complexity. FSNet consists of two sub-networks: a VAE, which produces the latent vector for the face region in the source image, and a generator network, which combines the latent vector of the source face with the non-face components, such as hairstyles and backgrounds of the target image, thereby achieving face swapping.

FSNet(Natalie等人,2019)通过消除其复杂性简化了预处理和后处理阶段。FSNet由两个子网络组成:VAE,它为源图像中的人脸区域产生潜在向量,以及生成器网络,它将源人脸的潜在向量与目标图像的非人脸成分(如发型和背景)相结合,从而实现人脸交换。

Figure 4:Comparison between ImplicitDeepfake train on GS and NeRF, respectively. In the first column, we see the original input 3D avatars. Then, we present an image of the target avatar (in training, we use only a single image) for deepfake. In the last two columns, we show the results obtained with the help of GS and NeRF.

图4:分别在GS和NeRF上的ImplicitDeepfake火车之间的比较。在第一列中,我们看到原始输入的3D化身。然后,我们为deepfake呈现目标化身的图像(在训练中,我们只使用单个图像)。在最后两列中,我们展示了在GS和NeRF的帮助下获得的结果。

The FaceShifter approach (Li et al., 2019) employs a two-step approach. Initially, a GAN network is utilized to extract and flexibly merge the identities of the source and target images. Subsequently, the occluded regions are refined using the Heuristic Error Acknowledging Refinement Network (HEAR). In the study conducted by Xu et al. (2022), StyleGAN is employed to separate facial image’s texture and appearance characteristics. This technique allows for the preservation of desired target look and texture while maintaining the source identity.

FaceShifter方法(Li等人,2019年,采用了两步走的方法。首先,利用GAN网络来提取和灵活地合并源图像和目标图像的身份。随后,使用启发式错误确认细化网络(HEAR)细化遮挡区域。在Xu等人(2022)进行的研究中,StyleGAN用于分离面部图像的纹理和外观特征。该技术允许在保持源身份的同时保留期望的目标外观和纹理。

Aside from the discussed examples, there exist many different approaches in the literature and on many GitHub pages. Thus, it is not achievable to verify all existing methods in a practical manner. As such, we decided to use GHOST (Groshev et al., 2022) during our experiments. To our knowledge, ImplicitDeepfake is the first model that utilizes the NeRF and GS approach for generating 3D deepfakes.

除了讨论的例子之外,在文献和许多GitHub页面上还有许多不同的方法。因此,不可能以实际的方式验证所有现有的方法。因此,我们决定使用GHOST(Groshev等人,2022年,在我们的实验中。据我们所知,ImplicitDeepfake是第一个利用NeRF和GS方法生成3D deepfake的模型。

3Method 3方法

Here, we describe our ImplicitDeepfake model. First, we introduce both the NeRF and GS and establish a notation. Then, we describe a deepfake model used in our approach. Finally, we present our model.

在这里,我们描述了我们的ImplicitDeepfake模型。首先,我们介绍NeRF和GS,并建立一个符号。然后,我们描述了在我们的方法中使用的deepfake模型。最后,我们提出了我们的模型。

3.1Neural Radiance Field (NeRF)

3.1神经辐射场(NeRF)

The vanilla NeRF model proposed in Mildenhall et al. (2020) is a neural architecture used to represent 3D scenes. In NeRF, a 5-dimensional coordinate is taken as input, consisting of the spatial location 𝐱=(�,�,�) and the viewing direction 𝐝=(�,�). The model then outputs the emitted color 𝐜=(�,�,�) and the volume density �.

Mildenhall等人(2020)提出的香草NeRF模型是一种用于表示3D场景的神经架构。在NeRF中,将5维坐标作为输入,该5维坐标由空间位置 𝐱=(�,�,�) 和观看方向 𝐝=(�,�) 组成。然后,模型输出发射颜色 𝐜=(�,�,�) 和体积密度 � 。

A classical NeRF model utilizes a set of images during the training process. This approach generates a group of rays, which pass through the image. Neural networks predict color and depth information on such rays to represent a 3D object. NeRF encodes such 3D objects using a Multilayer Perceptron (MLP) network:

经典的NeRF模型在训练过程中使用一组图像。该方法生成一组穿过图像的光线。神经网络预测这些光线的颜色和深度信息,以表示3D对象。NeRF使用多层感知器(MLP)网络对这样的3D对象进行编码:

| ℱ����(𝐱,𝐝;Θ)=(𝐜,�). |

The training process involves optimizing the parameters (𝚯) of the multilayer perceptron (MLP) to minimize the difference between the rendered and reference images obtained from a specific dataset. This calibration allows the MLP network to take in a 3D coordinate and its corresponding viewing direction as input and produce a density value and color (radiance) along that direction.

训练过程涉及优化多层感知器(MLP)的参数( 𝚯 ),以最小化从特定数据集获得的渲染图像和参考图像之间的差异。这种校准允许MLP网络将3D坐标及其相应的观看方向作为输入,并沿着该方向产生密度值和颜色(辐射)。

The loss function of NeRF is inspired by the classical volume rendering Kajiya and Von Herzen (1984). It involves tracing the paths of individual light rays through the scene geometry and calculating their final color. The loss function, used to quantify the discrepancy between the rendered and ground truth pixel colors, is formulated as the sum of squared errors between the corresponding color values in the following manner:

NeRF的损失函数受到经典体绘制Kajiya和Von Herzen(1984)的启发。它涉及跟踪单个光线通过场景几何体的路径并计算其最终颜色。损失函数用于量化渲染像素颜色与地面实况像素颜色之间的差异,其以以下方式被公式化为对应颜色值之间的平方误差之和:

| ℒ=∑𝐫∈�‖�^(𝐫)−�(𝐫)‖22, |

where � is the set of rays in each batch, �(𝐫) is the ground truth red-green-blue (RGB) color, and �^(𝐫) is predicted RGB colors for a given ray r . The predicted RGB colors �^(𝐫) can be calculated thanks to this formula:

其中 � 是每一批中的射线集合, �(𝐫) 是地面实况红-绿-蓝(RGB)颜色,并且 �^(𝐫) 是针对给定射线r的预测RGB颜色。预测的RGB颜色 �^(𝐫) 可以通过以下公式计算:

| �^(𝐫)=∑�=1���(1−exp(−����))𝐜�, |

where ��=exp(−∑�=1�−1����) and � denotes the number of samples, �� is adjacent samples distance, and �� is the opacity of a given sample �. This function for calculating �^(𝐫) from the set of (��,��) values is trivially differentiable.

其中 ��=exp(−∑�=1�−1����) 和 � 表示样本的数量, �� 是相邻样本距离,而 �� 是给定样本 � 的不透明度。用于从 (��,��) 值的集合计算 �^(𝐫) 的该函数是可微的。

| PSNR ↑ PSNR ↑ 的问题 | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| FACE 1 面1 | FACE 2 面2 | FACE 3 面3 | FACE 4 面4 | FACE 5 面5 | FACE 6 面6 | FACE 7 面7 | FACE 8 面8 | Avg. | |

| NeRF | 37.89 | 38.58 | 37.95 | 41.01 | 36.86 | 37.77 | 35.91 | 35.14 | 37.64 |

| Gaussian Splatting 高斯溅射 | 41.58 | 41.71 | 42.45 | 44.00 | 41.25 | 41.73 | 39.65 | 41.43 | 41.73 |

| SSIM ↑ 阿信 ↑ | |||||||||

| NeRF | 0.97 | 0.97 | 0.98 | 0.99 | 0.98 | 0.98 | 0.98 | 0.97 | 0.98 |

| Gaussian Splatting 高斯溅射 | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 | 1.00 | 0.99 | 0.99 | 0.99 |

| LPIPS ↓ LPIPPS ↓ 的问题 | |||||||||

| NeRF | 0.08 | 0.08 | 0.07 | 0.06 | 0.04 | 0.04 | 0.05 | 0.06 | 0.06 |

| Gaussian Splatting 高斯溅射 | 0.04 | 0.04 | 0.03 | 0.03 | 0.02 | 0.01 | 0.02 | 0.02 | 0.03 |

Table 1:Comparison between deepfake on test positions (camera position that is not used in training of ImplicitDeepfake) obtained by ImplicitDeepfake and direct application of deepfake. Results are calculated using faces from Fig. 3.

表1:由ImplicitDeepfake获得的测试位置(在ImplicitDeepfake的训练中未使用的相机位置)上的deepfake与直接应用deepfake之间的比较。使用图3中的面计算结果。

The NeRF’s performance limitations can be attributed to two primary factors, i.e., the inherent capacity constraints of the underlying neural network and the computational difficulty of accurately computing the intersection points between camera rays and the scene geometry. These limitations can lead to prolonged rendering times for high-resolution images, particularly for complex scenes, impeding the potential of real-time applications.

NeRF的性能限制可归因于两个主要因素,即,底层神经网络的固有容量约束以及精确计算相机射线和场景几何形状之间的交点的计算难度。这些限制可能导致高分辨率图像的渲染时间延长,特别是对于复杂场景,阻碍了实时应用的潜力。

3.2Gaussian Splatting (GS)

3.2高斯溅射

The GS model employs a collection of 3D Gaussian functions to represent a 3D scene. Each Gaussian is characterized by a set of parameters, i.e., its position (mean) specifying its center, its covariance matrix defining the shape and orientation of the Gaussian distribution, its opacity controlling the level of transparency, and its color represented by spherical harmonics (SH) (Fridovich-Keil et al., 2022; Müller et al., 2022).

GS模型采用3D高斯函数的集合来表示3D场景。每个高斯分布由一组参数表征,即,其位置(均值)指定其中心,其协方差矩阵定义高斯分布的形状和方向,其不透明度控制透明度水平,以及其颜色由球谐函数(SH)表示(Fridovich-Keil等人,2022; Müller等人,2022年)。

The GS represents a radiance field by optimizing all the 3D Gaussian parameters. Moreover, the computational efficiency of the GS algorithm stems from its rendering process, which leverages the projection properties of Gaussian components. This approach relies on representing the scene with a dense set of 3D Gaussians, mathematically denoted as:

GS通过优化所有3D高斯参数来表示辐射场。此外,GS算法的计算效率源于其渲染过程,该过程利用了高斯分量的投影特性。这种方法依赖于用一组密集的3D高斯来表示场景,数学上表示为:

| 𝒢={(𝒩(m�,Σ�),��,��)}�=1�, |

where m� is the position, Σ� denotes the covariance, �� is the opacity, and �� are the �-th component’s SH for color representation.

其中 m� 是位置, Σ� 表示协方差, �� 是不透明度, �� 是用于颜色表示的第 � 分量的SH。

The GS optimization algorithm operates through an iterative image synthesis process and comparison with training views. The challenges of this procedure arise due to potential inaccuracies in Gaussian component placement stemming from the inherent dimensionality reduction of 3D to 2D projection. To mitigate these issues, the algorithm incorporates mechanisms for creating, removing, and relocating Gaussian components. Notably, the precision of 3D Gaussian covariance parameters proves paramount in ensuring a compact data representation. This is attributed to their ability to effectively model large homogeneous regions with a limited number of large anisotropic Gaussians.

GS优化算法通过迭代图像合成过程和与训练视图的比较来操作。这个过程的挑战是由于高斯组件放置的潜在不准确性而产生的,这是由于3D到2D投影的固有降维。为了缓解这些问题,该算法结合了创建,删除和重新定位高斯分量的机制。值得注意的是,3D高斯协方差参数的精度在确保紧凑的数据表示方面是至关重要的。这是由于他们能够有效地模拟大型均匀区域与有限数量的大型各向异性高斯。

GS begins with a finite set of points and proceeds by implementing a strategy of generating fresh elements and removing redundant ones. After every one hundred iterations, the algorithm eliminates any Gaussians that possess an opacity below a predetermined threshold. Concurrently, novel Gaussian components are introduced within unoccupied regions of the 3D space to progressively fill vacant areas and refine the scene representation. Despite the GS optimization procedure’s inherent complexity, implementing the algorithm using CUDA kernels facilitates highly efficient training. This enables GS to achieve comparable quality to NeRF while potentially exhibiting faster training and inference times.

GS从一组有限的点开始,并通过执行生成新元素和删除冗余元素的策略来进行。在每一百次迭代之后,该算法消除具有低于预定阈值的不透明度的任何高斯。同时,在3D空间的未占用区域内引入新颖的高斯分量,以逐步填充空置区域并细化场景表示。尽管GS优化过程具有固有的复杂性,但使用CUDA内核实现算法有助于高效训练。这使GS能够实现与NeRF相当的质量,同时可能表现出更快的训练和推理时间。

3.3Deepfake with GHOST 3.3 Deepfake与GHOST

Numerous deepfake technologies have been developed in the literature, each with its own set of limitations. These may include errors in face edge detection, inconsistencies in eye gaze, and overall low quality, particularly when replacing a face from a single image with a video (Waseem et al., 2023). An example approach to deepfake is represented by Generative High-fidelity One Shot Transfer (GHOST) (Groshev et al., 2022), in which the authors build on the FaceShifter (Li et al., 2019) model as a starting point and introduce several enhancements in both the quality and stability of deepfake. Consequently, we decided to utilize GHOST (Groshev et al., 2022) as a deepfake component of our model.

文献中已经开发了许多deepfake技术,每种技术都有自己的局限性。这些可能包括面部边缘检测中的错误、眼睛注视中的不一致以及整体低质量,特别是当用视频替换来自单个图像的面部时(Waseem等人,2023年)。deepfake的示例方法由生成高保真单次转移(GHOST)(Groshev等人,2022),其中作者建立在FaceShifter(Li等人,2019)模型作为起点,并介绍了deepfake的质量和稳定性的几个增强功能。因此,我们决定利用GHOST(Groshev等人,2022)作为我们模型的deepfake组件。

Let �� and �� be the cropped faces from the source and target images, respectively, and let �^�,� be the newly generated face. The architecture of GHOST (Groshev et al., 2022) consists of a few main parts. The identity encoder extracts information from the source image �� and keeps information about the identity of the source person. The attribute encoder is a model with a U-Net (Ronneberger et al., 2015).

令 �� 和 �� 分别是来自源图像和目标图像的裁剪面部,并且令 �^�,� 是新生成的面部。GHOST的架构(Groshev等人,2022年,由几个主要部分组成。身份编码器从源图像 �� 提取信息,并保持关于源人物的身份的信息。属性编码器是具有U-Net的模型(Ronneberger等人,2015年)。

| PSNR ↑ PSNR ↑ 的问题 | ||||||||

|---|---|---|---|---|---|---|---|---|

| FACE 1 面1 | FACE 2 面2 | FACE 3 面3 | FACE 4 面4 | FACE 5 面5 | FACE 6 面6 | FACE 7 面7 | Avg. | |

| deepfake 2D 深度伪造2D | 12.75 | 11.97 | 13.94 | 11.35 | 11.47 | 12.31 | 11.84 | 12.23 |

| NeRF | 13.27 | 12.92 | 12.18 | 11.76 | 12.08 | 12.91 | 12.61 | 12.54 |

| Gaussian Splatting 高斯溅射 | 12.81 | 12.04 | 13.86 | 11.42 | 11.51 | 12.33 | 11.89 | 12.27 |

| SSIM ↑ 阿信 ↑ | ||||||||

| deepfake 2D 深度伪造2D | 0.75 | 0.76 | 0.74 | 0.70 | 0.72 | 0.75 | 0.75 | 0.74 |

| NeRF | 0.83 | 0.83 | 0.81 | 0.79 | 0.82 | 0.80 | 0.81 | 0.81 |

| GS | 0.75 | 0.76 | 0.75 | 0.71 | 0.72 | 0.75 | 0.75 | 0.74 |

| LPIPS ↓ LPIPPS ↓ 的问题 | ||||||||

| deepfake 2D 深度伪造2D | 0.28 | 0.27 | 0.26 | 0.27 | 0.25 | 0.25 | 0.24 | 0.26 |

| NeRF | 0.26 | 0.26 | 0.25 | 0.26 | 0.24 | 0.24 | 0.24 | 0.25 |

| GS | 0.27 | 0.27 | 0.26 | 0.27 | 0.25 | 0.25 | 0.24 | 0.26 |

Table 2: We conduct a comparison using data from two 3D avatars. We select an image from the target avatar and employ it like in the initial experiment. At the same time, we use the remaining views of the target avatar as testing data. In this experiment, we evaluate the outcomes of ImplicitDeepfake compared to the original training views of the target avatar. As a reference point, we utilize a model that directly applies 2D deepfake on the training views of the target avatar.

表2:我们使用来自两个3D化身的数据进行比较。我们从目标化身中选择一个图像,并像最初的实验一样使用它。同时,我们使用目标化身的剩余视图作为测试数据。在这个实验中,我们将ImplicitDeepfake的结果与目标化身的原始训练视图进行了比较。作为参考点,我们利用一个模型,直接将2D deepfake应用于目标化身的训练视图。

The AAD generator is a model that combines the attribute vector obtained from �� and the identity vector obtained successively from �� using AAD ResBlocks. This process generates a new face �^�,� that possesses the attributes of both the source identity and the target. Moreover, using a multiscale discriminator (Park et al., 2019) improves the quality of the output synthesis by comparing genuine and fake images.

AAD生成器是使用AAD ResBlocks组合从 �� 获得的属性向量和从 �� 连续获得的身份向量的模型。该过程生成具有源身份和目标两者的属性的新面部 �^�,� 。此外,使用多尺度插值(Park等人,2019)通过比较真实和虚假图像来提高输出合成的质量。

The GHOST model uses the cost function consisting of a few elements representing reconstruction, attributes, identity, and the GAN loss based on a multi-scale discriminator. Once a model has been trained, it can replace a person’s face in one image with the face from another image. However, since the model is trained explicitly on cropped faces, it cannot be directly applied to any images. To utilize the model, it is necessary to first crop the faces from both the source and target images. Once this step is completed, the model can swap the faces in the cropped images. Finally, the result of the face swap is inserted back into the original target image.

GHOST模型使用由表示重建、属性、身份的几个元素组成的成本函数,以及基于多尺度递归的GAN损失。一旦模型经过训练,它就可以用一张图像中的人脸替换另一张图像中的人脸。然而,由于该模型是在裁剪的面部上显式训练的,因此不能直接应用于任何图像。为了利用该模型,必须首先从源图像和目标图像中裁剪面部。完成此步骤后,模型可以交换裁剪图像中的面部。最后,人脸交换的结果被插入到原始目标图像中。

The primary issue with this procedure is that the attributes of �� on �^�,� are not identical. Therefore, if we were to insert �^�,� directly back into the target image, we could see the edges of such an operation. To solve such problems, GHOST first blends the model’s output �^�,� with ��, then inserts the result into the target image.

此过程的主要问题是 �^�,� 上 �� 的属性不相同。因此,如果我们将 �^�,� 直接插入到目标图像中,我们可以看到这种操作的边缘。为了解决这些问题,GHOST首先将模型的输出 �^�,� 与 �� 混合,然后将结果插入目标图像。

Such a procedure is complicated since we need to crop images. But in the context of neural rendering, applying in the second stage is crucial. The main problem with applying NeRF to modified images is the consistency of the scene. In the case of NeRF inpainting, when we apply the 2D algorithm separately, we obtain inconsistent images, and the NeRF component cannot be trained (Mirzaei et al., 2023). To solve this problem, we must train the inpainting component with the NeRF model simultaneously (Shen et al., 2024).

这样的过程是复杂的,因为我们需要裁剪图像。但在神经渲染的背景下,在第二阶段的应用是至关重要的。将NeRF应用于修改后的图像的主要问题是场景的一致性。在NeRF修复的情况下,当我们单独应用2D算法时,我们获得不一致的图像,并且无法训练NeRF组件(Mirzaei等人,2023年)。为了解决这个问题,我们必须同时用NeRF模型训练修复组件(Shen et al.,2024年)。

Our approach takes the main face shape from the source image and changes only the cropped part. Therefore, we can use deepfake and neural rendering components separately.

我们的方法从源图像中获取主要的人脸形状,只改变裁剪的部分。因此,我们可以分别使用deepfake和神经渲染组件。

3.4 ImplicitDeepfake

Our model is a hybrid of the classical deepfake model and neural rendering that can use either NeRF or GS models. In general, we can rely on any other approach to produce novel views from 2D images.

我们的模型是经典deepfake模型和神经渲染的混合,可以使用NeRF或GS模型。一般来说,我们可以依靠任何其他方法从2D图像中生成新视图。

To unify the notation, we will treat the neural rendering model as a black box that takes a set of images with camera positions:

为了统一符号,我们将神经渲染模型视为一个黑盒,它采用一组具有相机位置的图像:

| ℐ={(��,𝐝�)}�=1�, |

where �� is a 3D image from training data and 𝐝� is the camera position of the �� image.

其中 �� 是来自训练数据的3D图像, 𝐝� 是 �� 图像的相机位置。

We define a neural rendering model by the following function:

我们通过以下函数定义神经渲染模型:

| �(𝐝;ℐ,𝚯)=�𝐝, |

that depends on training images ℐ and parameters 𝚯 that are adjusted during training. In the case of NeRF, this function describes neural network parameters. In contrast, in the case of GS, it describes the parameters of Gaussian components. Our neural rendering process returns image �𝐝 from given position 𝐝.

这取决于训练图像 ℐ 和在训练期间调整的参数 𝚯 。在NeRF的情况下,该函数描述神经网络参数。相反,在GS的情况下,它描述了高斯分量的参数。我们的神经渲染过程从给定的位置 𝐝 返回图像 �𝐝 。

The deepfake model, we denote by

Deepfake模型,我们表示为

| �^�,�=𝒢(��,��;Φ), |

where �� and �� are the faces of the source and target images, respectively, Φ denote all parameters of the deepfake network, in our case GHOST (Groshev et al., 2022). Our deepfake model returns the newly generated face �^�,�.

其中 �� 和 �� 分别是源图像和目标图像的面部, Φ 表示deepfake网络的所有参数,在我们的情况下是GHOST(Groshev等人,2022年)。我们的deepfake模型返回新生成的脸 �^�,� 。

The general framework for our ImplicitDeepfake consists of two parts. First, we take training images of the 3D face:

我们的ImplicitDeepfake的一般框架由两部分组成。首先,我们获取3D人脸的训练图像:

| ℐ={(��,𝐝�)}�=1�, |

and target image �� for the deepfake procedure. Then, we apply the deepfake model separately for all modified images:

以及用于深度伪造过程的目标图像 �� 。然后,我们将deepfake模型分别应用于所有修改后的图像:

| ℐ^={�^�,�}�=1�={𝒢(��,��;Φ)}�=1�. |

Next, we train neural rendering on modified images to obtain a model dedicated to producing novel views �𝐝:

接下来,我们在修改后的图像上训练神经渲染,以获得一个专门用于生成新视图的模型 �𝐝 :

| �(𝐝;ℐ^,𝚯)=�𝐝. |

The above procedure is relatively simple and can generate plausible 3D deepfake avatars, as shown in Fig. 3.

上述过程相对简单,可以生成看似合理的3D deepfake头像,如图3所示。

4Experiments 4实验

Here, we present the resulting deepfakes produced by our model. We start from a classical approach when we have one target image of a celebrity and the 3D object of a 3D human avatar. We aim to change the latter into an avatar of a celebrity using a target image, as shown in Fig. 3.

在这里,我们展示了由我们的模型产生的deepfake。当我们有一个名人的目标图像和一个3D人类化身的3D对象时,我们从经典的方法开始。我们的目标是使用目标图像将后者变成名人的化身,如图3所示。

In our experiment, we apply classical 2D deepfake on source images. Then, we train our NeRF or GS model on modified images to obtain a 3D avatar of a given celebrity. To evaluate our model, we chose novel views of the source 3D avatar and applied deepfake. Such images were compared with results generated with our ImplicitDeepfake. In Tab. 1, we present a numerical comparison between NeRF and GS is presented. As we can see, GS obtained slightly better results.

在我们的实验中,我们在源图像上应用经典的2D deepfake。然后,我们在修改后的图像上训练NeRF或GS模型,以获得给定名人的3D头像。为了评估我们的模型,我们选择了源3D化身的新颖视图并应用了deepfake。这些图像与我们的ImplicitDeepfake生成的结果进行了比较。在Tab。1,我们给出了NeRF和GS之间的数值比较。正如我们所看到的,GS获得了稍微好一点的结果。

In the second experiment, we wanted to contrast our results with the actual images of the target celebrity. Therefore, we used two 3D avatars instead of one. We took a single image from the target avatar and used it like in the first experiment. The other views of the target avatar served as testing data. Due to this, we could compare the results given by our ImplicitDeepfake with the original training views of the target avatar, as shown in Fig. 4. As a baseline, we used a model that directly applies 3D deepfake to training views of the target avatar. A numerical comparison between NeRF and GS can be seen in Tab. 2. As we can observe, ImplicitDeepfake gives better results than our baseline.

在第二个实验中,我们希望将我们的结果与目标名人的实际图像进行对比。因此,我们使用了两个3D头像而不是一个。我们从目标化身中提取了一张图像,并像第一个实验中一样使用它。目标化身的其他视图用作测试数据。因此,我们可以将ImplicitDeepfake给出的结果与目标化身的原始训练视图进行比较,如图4所示。作为基线,我们使用了一个模型,该模型直接将3D deepfake应用于目标化身的训练视图。NeRF和GS之间的数值比较见表1。2.正如我们所观察到的,ImplicitDeepfake给出了比我们的基线更好的结果。

In summary, we can see that applying 2D deepfake constructs well-aligned data. Moreover, independent modification produces consistent views to train both NeRF and GS.

总之,我们可以看到,应用2D deepfake构建了对齐良好的数据。此外,独立修改产生一致的视图来训练NeRF和GS。

We also can observe that GS gives sharper renders, as seen in Tab. 1. We believe that this difference is caused by NeRF, which occasionally produces blurred renders when the 2D Deepfake model produces inconsistent renders. We can observe that, compared to NeRF, GS is more robust and works with issues related to the classical deepfake algorithm.

我们还可以观察到GS提供了更清晰的渲染,如Tab中所示。1.我们认为这种差异是由NeRF造成的,当2D Deepfake模型产生不一致的渲染时,NeRF偶尔会产生模糊的渲染。我们可以观察到,与NeRF相比,GS更强大,并且可以处理与经典deepfake算法相关的问题。

5Conclusions 5结论

This paper introduces a novel, ImplicitDeepfake, a method that uses the conventional deepfake algorithm to alter the training images for NeRF and GS, respectively. Subsequently, NeRF and GS are trained separately on the modified facial images, generating realistic and plausible deepfakes that can serve as 3D avatars. As shown in the results, GS produces sharper renders, with the former occasionally generating blurred renders. We attribute these effects to minor inconsistencies introduced by the 2D deepfake model. Overall, GS demonstrates greater resilience to view inconsistencies than NeRF.

本文介绍了一种新的方法,ImplicitDeepfake,这种方法使用传统的deepfake算法来分别改变NeRF和GS的训练图像。随后,NeRF和GS分别在修改后的面部图像上进行训练,生成逼真且合理的deepfake,可以作为3D化身。如结果所示,GS生成更清晰的渲染,前者偶尔生成模糊的渲染。我们将这些影响归因于2D deepfake模型引入的微小不一致。总体而言,GS表现出更大的弹性,以查看不一致比NeRF。

这篇关于ImplicitDeepfake:通过使用NeRF和高斯溅射的隐式Deepfake生成的合理换脸的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!