A calico-node pod stays Pending on one node when deploying the Calico network component in k8s

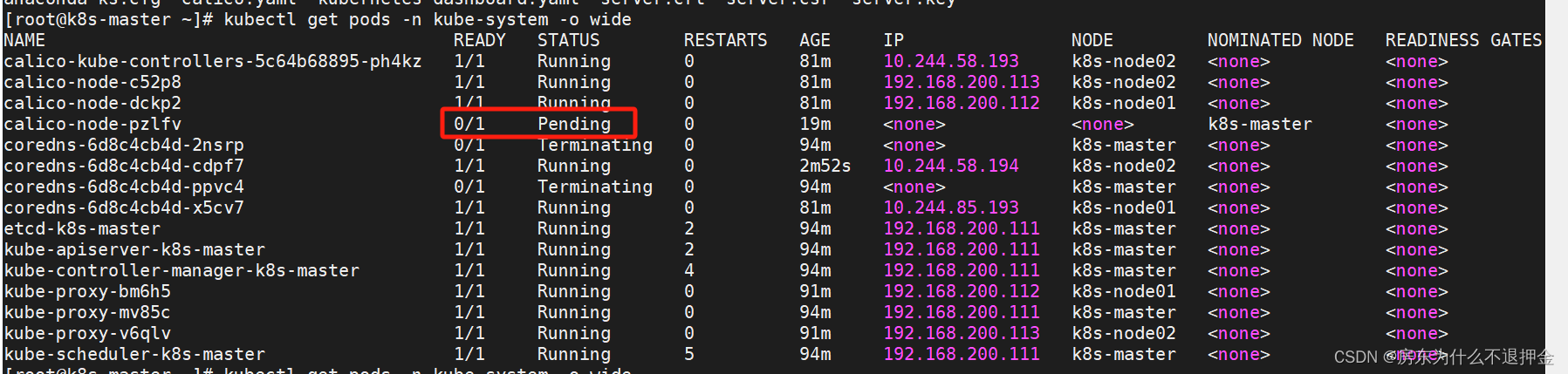

Screenshot of the failure

- Troubleshooting approach: use describe to find the specific cause.

~]# kubectl describe pod calico-node-pzlfv -n kube-system

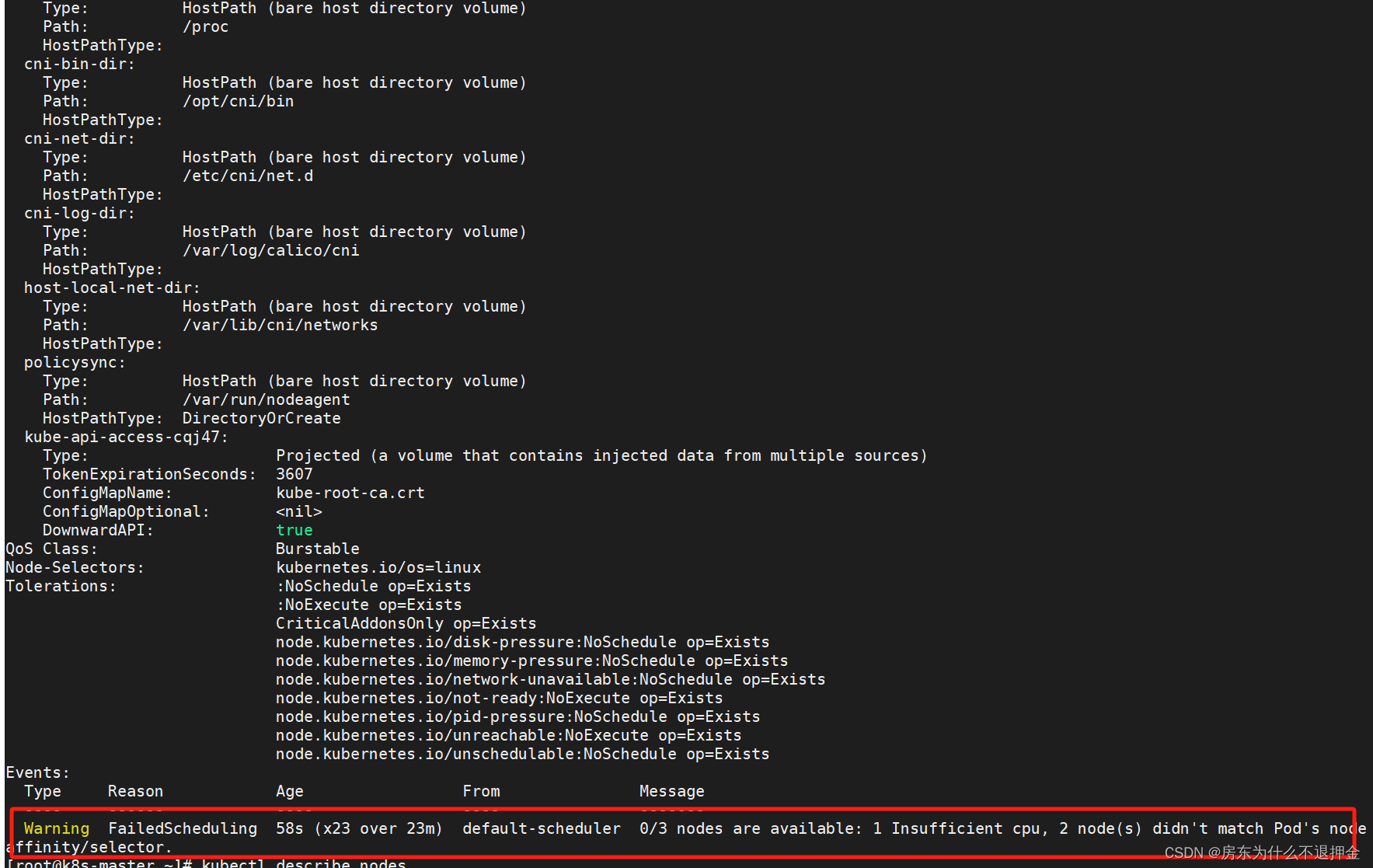

The describe output reports the following error:

Warning FailedScheduling 58s (x23 over 23m) default-scheduler 0/3 nodes are available: 1 Insufficient cpu, 2 node(s) didn't match Pod's node affinity/selector.
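When the describe output is long, the same information can be pulled more directly. The sketch below wraps two standard kubectl queries in helper functions. The function names are hypothetical, and the kube-system default only mirrors the namespace used in this post.

```shell
#!/usr/bin/env bash
# Helper names are illustrative; only the kubectl invocations are standard.

# pending_pods [NAMESPACE]
# Lists pods whose phase is Pending in the given namespace.
pending_pods() {
  local ns=${1:-kube-system}
  kubectl get pods -n "$ns" --field-selector=status.phase=Pending -o wide
}

# failed_scheduling_events [NAMESPACE]
# Lists events whose reason is FailedScheduling in the given namespace.
failed_scheduling_events() {
  local ns=${1:-kube-system}
  kubectl get events -n "$ns" --field-selector=reason=FailedScheduling
}
```

Calling pending_pods followed by failed_scheduling_events should surface the stuck calico-node pod and the scheduler message shown above without scrolling through the full describe output.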

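- Use the error to pinpoint the fault. calico-node is a DaemonSet, so each of its pods targets one specific node; the two nodes that "didn't match" are simply the other nodes, and the real blocker is "Insufficient cpu" on the target node. Material found online points the same way: the node probably does not have enough CPU left. The following command shows the resource allocation of every node in the cluster.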

~]# kubectl describe node |grep -E '((Name|Roles):\s{6,})|(\s+(memory|cpu)\s+[0-9]+\w{0,2}.+%\))'

Name: k8s-master

Roles: control-plane,master

cpu 850m (85%) 0 (0%)

memory 240Mi (13%) 340Mi (19%)

Name: k8s-node01

Roles: <none>

cpu 350m (35%) 0 (0%)

memory 70Mi (4%) 170Mi (9%)

Name: k8s-node02

Roles: <none>

cpu 350m (35%) 0 (0%)

memory 70Mi (4%) 170Mi (9%)

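In each row the first figure is the sum of requests and the second the sum of limits. CPU requests on the master node already total 850m (85% of what the node can allocate), so very little is left for new pods.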
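To make the remaining headroom concrete, the percentages above can be turned back into millicores. This is a rough sketch in plain bash. The function names are made up for illustration, and because the printed percentage is a whole number, the allocatable figure is only an estimate.

```shell
#!/usr/bin/env bash
# Estimate allocatable CPU and remaining headroom (in millicores) from the
# "requests (percent)" pair printed by kubectl describe node.
# All function names here are hypothetical helpers, not kubectl features.

# allocatable_millicores REQUESTED_M PERCENT
# Example: 850 requested at 85% implies roughly 1000m allocatable.
allocatable_millicores() {
  local requested=$1 percent=$2
  # Integer division, rounded to the nearest millicore.
  echo $(( (requested * 100 + percent / 2) / percent ))
}

# cpu_headroom_millicores REQUESTED_M PERCENT
# Prints the estimated CPU still available for new requests.
cpu_headroom_millicores() {
  local requested=$1 percent=$2 allocatable
  allocatable=$(allocatable_millicores "$requested" "$percent")
  echo $(( allocatable - requested ))
}

# pod_fits REQUESTED_M PERCENT POD_REQUEST_M
# Succeeds (exit 0) if a pod requesting POD_REQUEST_M would fit.
pod_fits() {
  local headroom
  headroom=$(cpu_headroom_millicores "$1" "$2")
  [ "$3" -le "$headroom" ]
}
```

With the master's figures, cpu_headroom_millicores 850 85 prints 150, so a pod requesting more than about 150m of CPU cannot be scheduled there. The same calculation for either worker node (350 35) gives 650.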

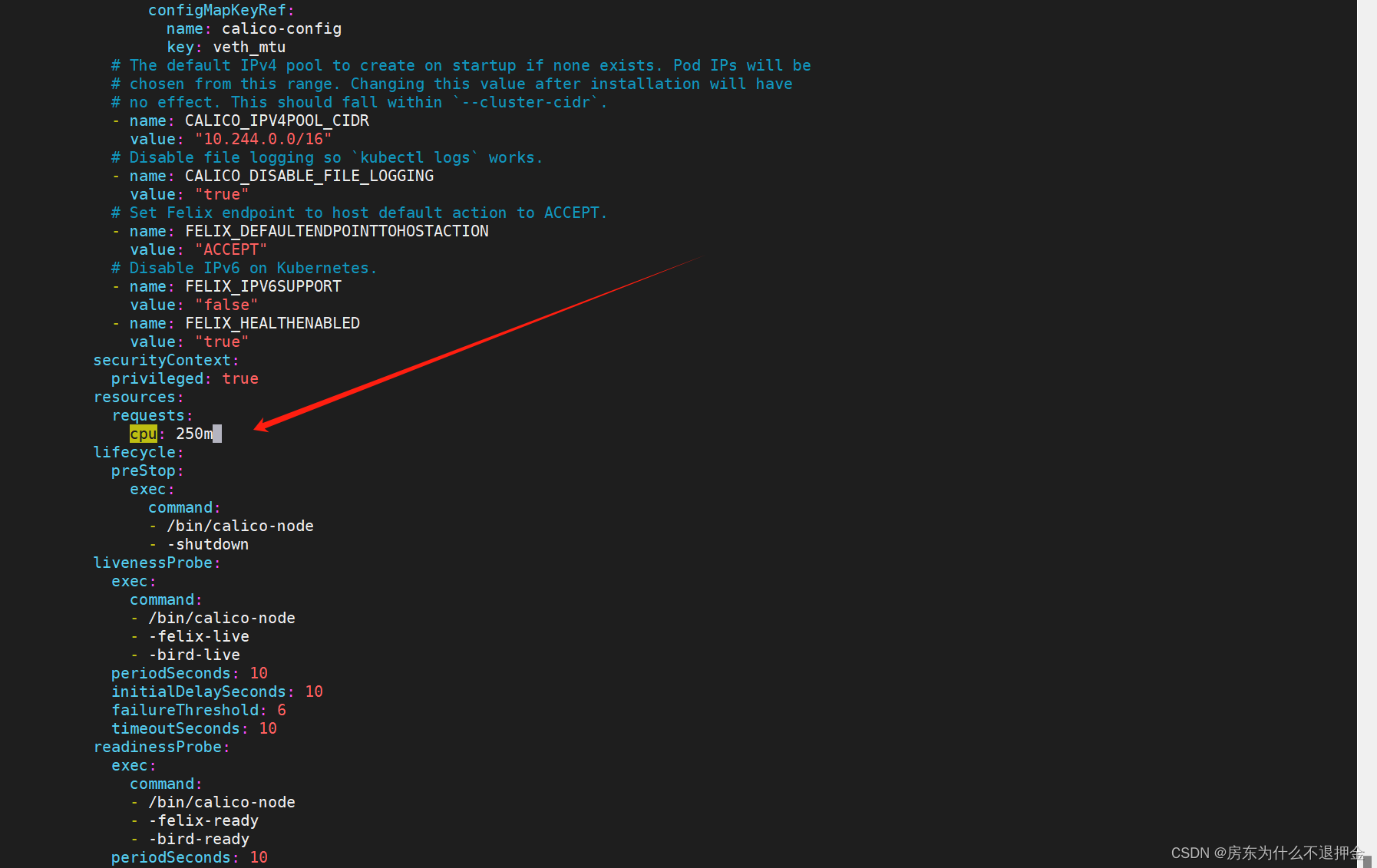

Check the cpu value under requests in the Calico manifest (calico.yaml).

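The requested value is larger than what is left on the node. Lower the requests value, as far as Calico can still run normally, and then apply the manifest again.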
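The edit can be made by hand in an editor. As a sketch of a scripted alternative, the helper below rewrites the cpu line with sed and keeps a backup. The function name is hypothetical, and the 250m and 100m values in the example are placeholders only: the post does not state the actual numbers, so check your own manifest first. It assumes the value is written unquoted on its own line, and it rewrites every matching cpu line in the file, so review the diff before applying.

```shell
#!/usr/bin/env bash
# set_cpu_request MANIFEST OLD NEW
# Rewrites lines of the form "cpu: OLD" to "cpu: NEW" in place and keeps the
# original as MANIFEST.bak. Hypothetical helper, not part of kubectl or Calico.
set_cpu_request() {
  local manifest=$1 old=$2 new=$3
  sed -i.bak -E \
    "s/^([[:space:]]*cpu:[[:space:]]*)${old}[[:space:]]*\$/\1${new}/" \
    "$manifest"
}

# Example (placeholder values, adjust to what your manifest contains):
#   set_cpu_request calico.yaml 250m 100m
#   diff calico.yaml.bak calico.yaml
```

Keeping the .bak copy makes it easy to compare the two versions or roll back if the lower request turns out to be too small for calico-node.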

~]# kubectl apply -f calico.yaml

configmap/calico-config unchanged

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org configured

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers unchanged

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers unchanged

clusterrole.rbac.authorization.k8s.io/calico-node unchanged

clusterrolebinding.rbac.authorization.k8s.io/calico-node unchanged

daemonset.apps/calico-node configured

serviceaccount/calico-node unchanged

deployment.apps/calico-kube-controllers unchanged

serviceaccount/calico-kube-controllers unchanged

poddisruptionbudget.policy/calico-kube-controllers configured

Check the pod status: the calico-node pods are no longer Pending, and after waiting about a minute everything, including coredns, is healthy.

~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-5c64b68895-ph4kz 1/1 Running 0 99m 10.244.58.193 k8s-node02 <none> <none>

calico-node-ctsmx 0/1 Running 0 11s 192.168.200.111 k8s-master <none> <none>

calico-node-dckp2 1/1 Running 0 99m 192.168.200.112 k8s-node01 <none> <none>

calico-node-t59rb 0/1 Running 0 11s 192.168.200.113 k8s-node02 <none> <none>

coredns-6d8c4cb4d-2nsrp 0/1 Terminating 0 112m <none> k8s-master <none> <none>

coredns-6d8c4cb4d-cdpf7 1/1 Running 0 21m 10.244.58.194 k8s-node02 <none> <none>

coredns-6d8c4cb4d-ppvc4 0/1 Terminating 0 112m <none> k8s-master <none> <none>

coredns-6d8c4cb4d-x5cv7 1/1 Running 0 99m 10.244.85.193 k8s-node01 <none> <none>

etcd-k8s-master 1/1 Running 2 113m 192.168.200.111 k8s-master <none> <none>

kube-apiserver-k8s-master 1/1 Running 2 113m 192.168.200.111 k8s-master <none> <none>

kube-controller-manager-k8s-master 1/1 Running 4 113m 192.168.200.111 k8s-master <none> <none>

kube-proxy-bm6h5 1/1 Running 0 110m 192.168.200.112 k8s-node01 <none> <none>

kube-proxy-mv85c 1/1 Running 0 112m 192.168.200.111 k8s-master <none> <none>

kube-proxy-v6qlv 1/1 Running 0 109m 192.168.200.113 k8s-node02 <none> <none>

kube-scheduler-k8s-master 1/1 Running 5 113m 192.168.200.111 k8s-master <none> <none>

~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-5c64b68895-ph4kz 1/1 Running 0 100m 10.244.58.193 k8s-node02 <none> <none>

calico-node-ctsmx 1/1 Running 0 37s 192.168.200.111 k8s-master <none> <none>

calico-node-lf94h 1/1 Running 0 17s 192.168.200.112 k8s-node01 <none> <none>

calico-node-t59rb 1/1 Running 0 37s 192.168.200.113 k8s-node02 <none> <none>

coredns-6d8c4cb4d-cdpf7 1/1 Running 0 21m 10.244.58.194 k8s-node02 <none> <none>

coredns-6d8c4cb4d-x5cv7 1/1 Running 0 100m 10.244.85.193 k8s-node01 <none> <none>

etcd-k8s-master 1/1 Running 2 113m 192.168.200.111 k8s-master <none> <none>

kube-apiserver-k8s-master 1/1 Running 2 113m 192.168.200.111 k8s-master <none> <none>

kube-controller-manager-k8s-master 1/1 Running 4 113m 192.168.200.111 k8s-master <none> <none>

kube-proxy-bm6h5 1/1 Running 0 110m 192.168.200.112 k8s-node01 <none> <none>

kube-proxy-mv85c 1/1 Running 0 113m 192.168.200.111 k8s-master <none> <none>

kube-proxy-v6qlv 1/1 Running 0 110m 192.168.200.113 k8s-node02 <none> <none>

kube-scheduler-k8s-master 1/1 Running 5 113m 192.168.200.111 k8s-master <none> <none>
