本文主要是介绍PID神经网络原理与MATLAB实现(SISO),希望对大家解决编程问题提供一定的参考价值,需要的开发者们随着小编来一起学习吧!

序

最近想实现一下PID神经网络,但是书籍和博客都令人头疼,主要是卡在误差反向传播的计算过程中。找一篇通俗易懂的文章实在不易,最终,只能自己静下心,仔细琢磨。只要每一步在逻辑上都是合理的,我们有理由相信能够得到正确的结果。抱着这样的心态,由浅入深,来实现一下。

一、网络结构定义

简单起见,假设一个受控系统单输入 u u u 单输出 x x x ,使用一个PID神经网络来作为控制器,使得系统输出达到目标值 z z z。

其中, W 3 × 2 W_{3\times 2} W3×2和 V 1 × 3 V_{1\times 3} V1×3分别为输入层—隐含层权重矩阵和隐含层—输出层权重矩阵。 y i y_i yi和 y o y_o yo分别是PID计算前后向量。 z , x , u z, x, u z,x,u分别为期望值、实际值和网络输出,都是数。

1.1 前向传播

根据以上结构,容易得出前向传播如下。注意简介起见,第 ( k ) (k) (k)次的标注省略。

y i 1 = w 11 z + w 12 x y i 2 = w 21 z + w 22 x y i 3 = w 31 z + w 32 x (1) \begin{aligned} & y_{i1} = w_{11} z + w_{12} x \\ & y_{i2} = w_{21} z + w_{22} x \\ & y_{i3} = w_{31} z + w_{32} x \tag{1} \end{aligned} yi1=w11z+w12xyi2=w21z+w22xyi3=w31z+w32x(1)

y o 1 = y i 1 y o 2 = y o 2 ( k − 1 ) + y i 2 y o 3 = y i 3 ( k ) − y i 3 ( k − 1 ) (2) \begin{aligned} & y_{o1} = y_{i1} \\ & y_{o2} = y_{o2}(k-1) + y_{i2} \\ & y_{o3} = y_{i3}(k) - y_{i3}(k-1) \tag{2} \end{aligned} yo1=yi1yo2=yo2(k−1)+yi2yo3=yi3(k)−yi3(k−1)(2)

u = v 11 y o 1 + v 12 y o 2 + v 13 y o 3 (3) u = v_{11} y_{o1} + v_{12} y_{o2} + v_{13} y_{o3} \tag{3} u=v11yo1+v12yo2+v13yo3(3)

不妨设受控对象为一个类似二次函数的时变系统(用于仿真),则输出及损失函数为

x = u 2 ( k ) + 0.1 x ( k − 1 ) (4) x = u^2(k) + 0.1x(k-1) \tag{4} x=u2(k)+0.1x(k−1)(4)

J = 1 2 ( z − x ) 2 (5) J = \frac{1}{2} (z-x)^2 \tag{5} J=21(z−x)2(5)

1.2 反向传播

前向传播计算完成,以下开始反向传播。首先考虑权重 V V V 对误差 J J J 的影响,也即计算 ∂ J ∂ V \frac{\partial J}{\partial V} ∂V∂J,根据前向传递函数关系,易得

∂ J ∂ v 11 = ∂ J ∂ x ∂ x ∂ u ∂ u ∂ v 11 = − ( z − x ) ⋅ ∂ x ∂ u ⋅ y o 1 (6) \begin{aligned} \frac{\partial J}{\partial v_{11}} &= \frac{\partial J}{\partial x} \frac{\partial x}{\partial u} \frac{\partial u}{\partial v_{11}} \\ &= -(z-x) \cdot \frac{\partial x}{\partial u}\cdot y_{o1} \end{aligned} \tag{6} ∂v11∂J=∂x∂J∂u∂x∂v11∂u=−(z−x)⋅∂u∂x⋅yo1(6)

由于受控对象的数学模型往往不知道或者偏导难以计算,中间的 ∂ x ∂ u \frac{\partial x}{\partial u} ∂u∂x 常用下式子代替

∂ x ∂ u = s i g n x ( k ) − x ( k − 1 ) u ( k ) − u ( k − 1 ) (7) \frac{\partial x}{\partial u} ={\rm sign} \frac{x(k) - x(k-1)}{u(k) - u(k-1)} \tag{7} ∂u∂x=signu(k)−u(k−1)x(k)−x(k−1)(7)

以下 ∂ x ∂ u \frac{\partial x}{\partial u} ∂u∂x皆由式(7)得出,不在赘述。

其中 s i g n ( ⋅ ) {\rm sign}(\cdot) sign(⋅)是符号函数,输入正数输出 1 1 1,输入负数输出 − 1 -1 −1,输入 0 0 0输出 0 0 0。使用符号函数的好处是归一化到 [ − 1 , 1 ] [-1,1] [−1,1],避免分母很接近0导致输出很大的问题。

同理易得

∂ J ∂ v 12 = − ( z − x ) ⋅ ∂ x ∂ u ⋅ y o 2 (8) \frac{\partial J}{\partial v_{12}} = -(z-x) \cdot \frac{\partial x}{\partial u} \cdot y_{o2} \tag{8} ∂v12∂J=−(z−x)⋅∂u∂x⋅yo2(8)

∂ J ∂ v 13 = − ( z − x ) ⋅ ∂ x ∂ u ⋅ y o 3 (9) \frac{\partial J}{\partial v_{13}} = -(z-x) \cdot \frac{\partial x}{\partial u} \cdot y_{o3} \tag{9} ∂v13∂J=−(z−x)⋅∂u∂x⋅yo3(9)

接下来,计算真正的难点 ∂ J ∂ W \frac{\partial J}{\partial W} ∂W∂J。

∂ J ∂ w 11 = ∂ J ∂ x ∂ x ∂ u ∂ u ∂ y o 1 ∂ y o 1 ∂ y i 1 ∂ y i 1 ∂ w 11 = − ( z − x ) ⋅ ∂ x ∂ u ⋅ v 11 ⋅ 1 ⋅ z (10) \begin{aligned} \frac{\partial J}{\partial w_{11}} &= \frac{\partial J}{\partial x} \frac{\partial x}{\partial u} \frac{\partial u}{\partial y_{o1}} \frac{\partial y_{o1}}{\partial y_{i1}} \frac{\partial y_{i1}}{\partial w_{11}} \\ &=-(z-x) \cdot\frac{\partial x}{\partial u} \cdot v_{11} \cdot 1 \cdot z \end{aligned} \tag{10} ∂w11∂J=∂x∂J∂u∂x∂yo1∂u∂yi1∂yo1∂w11∂yi1=−(z−x)⋅∂u∂x⋅v11⋅1⋅z(10)

同理易得

∂ J ∂ w 12 = ∂ J ∂ x ∂ x ∂ u ∂ u ∂ y o 1 ∂ y o 1 ∂ y i 1 ∂ y i 1 ∂ w 12 = − ( z − x ) ⋅ ∂ x ∂ u ⋅ v 11 ⋅ 1 ⋅ x (11) \begin{aligned} \frac{\partial J}{\partial w_{12}} &= \frac{\partial J}{\partial x} \frac{\partial x}{\partial u} \frac{\partial u}{\partial y_{o1}} \frac{\partial y_{o1}}{\partial y_{i1}} \frac{\partial y_{i1}}{\partial w_{12}} \\ &=-(z-x) \cdot\frac{\partial x}{\partial u} \cdot v_{11} \cdot 1 \cdot x \end{aligned} \tag{11} ∂w12∂J=∂x∂J∂u∂x∂yo1∂u∂yi1∂yo1∂w12∂yi1=−(z−x)⋅∂u∂x⋅v11⋅1⋅x(11)

∂ J ∂ w 21 = ∂ J ∂ x ∂ x ∂ u ∂ u ∂ y o 2 ∂ y o 2 ∂ y i 2 ∂ y i 2 ∂ w 21 = − ( z − x ) ⋅ ∂ x ∂ u ⋅ v 12 ⋅ ∂ y o 2 ∂ y i 2 ⋅ z (12) \begin{aligned} \frac{\partial J}{\partial w_{21}} &= \frac{\partial J}{\partial x} \frac{\partial x}{\partial u} \frac{\partial u}{\partial y_{o2}} \frac{\partial y_{o2}}{\partial y_{i2}} \frac{\partial y_{i2}}{\partial w_{21}} \\ &=-(z-x) \cdot \frac{\partial x}{\partial u} \cdot v_{12} \cdot \frac{\partial y_{o2}}{\partial y_{i2}} \cdot z \end{aligned} \tag{12} ∂w21∂J=∂x∂J∂u∂x∂yo2∂u∂yi2∂yo2∂w21∂yi2=−(z−x)⋅∂u∂x⋅v12⋅∂yi2∂yo2⋅z(12)

其中

∂ y o 2 ∂ y i 2 = Δ y o 2 Δ y i 2 = y o 2 ( k ) − y o 2 ( k − 1 ) y i 2 ( k ) − y i 2 ( k − 1 ) = y i 2 ( k ) y i 2 ( k ) − y i 2 ( k − 1 ) \frac{\partial y_{o2}}{\partial y_{i2}} = \frac{\Delta y_{o2}}{\Delta y_{i2}} = \frac{y_{o2}(k) - y_{o2}(k-1)}{y_{i2}(k) - y_{i2}(k-1)} = \frac{y_{i2}(k) }{y_{i2}(k) - y_{i2}(k-1)} ∂yi2∂yo2=Δyi2Δyo2=yi2(k)−yi2(k−1)yo2(k)−yo2(k−1)=yi2(k)−yi2(k−1)yi2(k)

和之前一样,使用符号函数计算可得

∂ y o 2 ∂ y i 2 = s i g n y i 2 ( k ) y i 2 ( k ) − y i 2 ( k − 1 ) (13) \frac{\partial y_{o2}}{\partial y_{i2}} = {\rm sign}\frac{y_{i2}(k) }{y_{i2}(k) - y_{i2}(k-1)} \tag{13} ∂yi2∂yo2=signyi2(k)−yi2(k−1)yi2(k)(13)

同理

∂ J ∂ w 22 = ∂ J ∂ x ∂ x ∂ u ∂ u ∂ y o 2 ∂ y o 2 ∂ y i 2 ∂ y i 2 ∂ w 22 = − ( z − x ) ⋅ ∂ x ∂ u ⋅ v 12 ⋅ ∂ y o 2 ∂ y i 2 ⋅ x (14) \begin{aligned} \frac{\partial J}{\partial w_{22}} &= \frac{\partial J}{\partial x} \frac{\partial x}{\partial u} \frac{\partial u}{\partial y_{o2}} \frac{\partial y_{o2}}{\partial y_{i2}} \frac{\partial y_{i2}}{\partial w_{22}} \\ &=-(z-x) \cdot \frac{\partial x}{\partial u} \cdot v_{12} \cdot \frac{\partial y_{o2}}{\partial y_{i2}} \cdot x \end{aligned} \tag{14} ∂w22∂J=∂x∂J∂u∂x∂yo2∂u∂yi2∂yo2∂w22∂yi2=−(z−x)⋅∂u∂x⋅v12⋅∂yi2∂yo2⋅x(14)

∂ J ∂ w 31 = ∂ J ∂ x ∂ x ∂ u ∂ u ∂ y o 3 ∂ y o 3 ∂ y i 3 ∂ y i 3 ∂ w 31 = − ( z − x ) ⋅ ∂ x ∂ u ⋅ v 13 ⋅ ∂ y o 3 ∂ y i 3 ⋅ z (15) \begin{aligned} \frac{\partial J}{\partial w_{31}} &= \frac{\partial J}{\partial x} \frac{\partial x}{\partial u} \frac{\partial u}{\partial y_{o3}} \frac{\partial y_{o3}}{\partial y_{i3}} \frac{\partial y_{i3}}{\partial w_{31}} \\ &=-(z-x) \cdot \frac{\partial x}{\partial u} \cdot v_{13} \cdot \frac{\partial y_{o3}}{\partial y_{i3}} \cdot z \end{aligned} \tag{15} ∂w31∂J=∂x∂J∂u∂x∂yo3∂u∂yi3∂yo3∂w31∂yi3=−(z−x)⋅∂u∂x⋅v13⋅∂yi3∂yo3⋅z(15)

其中

∂ y o 3 ∂ y i 3 = Δ y o 3 Δ y i 3 = y o 3 ( k ) − y o 3 ( k − 1 ) y i 3 ( k ) − y i 3 ( k − 1 ) = y o 3 ( k ) − y o 3 ( k − 1 ) y o 3 ( k ) \frac{\partial y_{o3}}{\partial y_{i3}} = \frac{\Delta y_{o3}}{\Delta y_{i3}} = \frac{y_{o3}(k) - y_{o3}(k-1)}{y_{i3}(k) - y_{i3}(k-1)} = \frac{y_{o3}(k) - y_{o3}(k-1)}{y_{o3}(k)} ∂yi3∂yo3=Δyi3Δyo3=yi3(k)−yi3(k−1)yo3(k)−yo3(k−1)=yo3(k)yo3(k)−yo3(k−1)

依旧使用符号函数归一化

∂ y o 3 ∂ y i 3 = s i g n y o 3 ( k ) − y o 3 ( k − 1 ) y o 3 ( k ) \frac{\partial y_{o3}}{\partial y_{i3}} ={\rm sign} \frac{y_{o3}(k) - y_{o3}(k-1)}{y_{o3}(k)} ∂yi3∂yo3=signyo3(k)yo3(k)−yo3(k−1)

同理

∂ J ∂ w 32 = ∂ J ∂ x ∂ x ∂ u ∂ u ∂ y o 3 ∂ y o 3 ∂ y i 3 ∂ y i 3 ∂ w 32 = − ( z − x ) ⋅ ∂ x ∂ u ⋅ v 13 ⋅ ∂ y o 3 ∂ y i 3 ⋅ x (16) \begin{aligned} \frac{\partial J}{\partial w_{32}} &= \frac{\partial J}{\partial x} \frac{\partial x}{\partial u} \frac{\partial u}{\partial y_{o3}} \frac{\partial y_{o3}}{\partial y_{i3}} \frac{\partial y_{i3}}{\partial w_{32}} \\ &=-(z-x) \cdot \frac{\partial x}{\partial u} \cdot v_{13} \cdot \frac{\partial y_{o3}}{\partial y_{i3}} \cdot x \end{aligned} \tag{16} ∂w32∂J=∂x∂J∂u∂x∂yo3∂u∂yi3∂yo3∂w32∂yi3=−(z−x)⋅∂u∂x⋅v13⋅∂yi3∂yo3⋅x(16)

1.3 权值更新

如果使用传统的梯度下降法,则更新公式为

W = W − α 1 ∂ J ∂ W (17) W = W - \alpha_1 \frac{\partial J}{\partial W} \tag{17} W=W−α1∂W∂J(17)

V = V − α 2 ∂ J ∂ V (18) V = V - \alpha_2 \frac{\partial J}{\partial V} \tag{18} V=V−α2∂V∂J(18)

其中, α 1 , α 2 \alpha_1, \alpha_2 α1,α2为学习率。

有时,也增加动量项,则更新公式为

W d ( k ) = β 1 W d ( k − 1 ) + ( 1 − β 1 ) ∂ J ∂ W W = W − α 1 W d (19) \begin{aligned} & W_d(k) = \beta_1 W_d(k-1) + (1-\beta_1)\frac{\partial J}{\partial W}\\ & W = W - \alpha_1 W_d \tag{19} \end{aligned} Wd(k)=β1Wd(k−1)+(1−β1)∂W∂JW=W−α1Wd(19)

V d ( k ) = β 2 V d ( k − 1 ) + ( 1 − β 2 ) ∂ J ∂ V V = V − α 2 V d (20) \begin{aligned} & V_d(k) = \beta_2 V_d(k-1) + (1-\beta_2)\frac{\partial J}{\partial V}\\ & V = V - \alpha_2 V_d \tag{20} \end{aligned} Vd(k)=β2Vd(k−1)+(1−β2)∂V∂JV=V−α2Vd(20)

其中, β 1 , β 2 \beta_1, \beta_2 β1,β2为动量因子,一般取 0.9; W d , V d W_d, V_d Wd,Vd 可取初始值为0进行迭代。

1.4 实际问题

以上算法纯属理论,在仿真中可能都会遇到问题。一个典型的问题是除数为0的情况,在这里很容易发生,例如 u ( k ) = u ( k − 1 ) u(k) = u(k-1) u(k)=u(k−1) 就是如此。另一个问题是数值过大,比如除数很小就很容易导致这种现象。因此有必要规避除数为0,也要做好限幅工作。写程序时需要注意这些细节。

二、仿真

现假设受控系统是一个式(4) 描述的系统,设期望值为一个阶梯信号,整个程序如下

%% 参数初始化

% 初始化

rng(1); % 设置随机数种子

z = 1; % 目标值

x = 0; % 实际值初始化

W = 0.3*(rand(3,2) - 0.5); % 隐含层—输入层权重矩阵

V = 0.3*(rand(3,1) - 0.5); % 输出层—隐含层权重矩阵%学习率

alpha1 = 0.06; % W权重相关学习率

alpha2 = 0.02; % V权重相关学习率% 动量因子

beta1 = 0.9;

beta2 = 0.9;% 限幅值

xmax = 1;

ymax = 1;

umax = 1;% 变量初始化,_1 结尾为上一次的值,_2 结尾表示上两个时刻值

yi3_1 = 0;

u_1 = 0;

u_2 = 0;

x_1 = 0;

yi2_1 = 0;

yo3_1 = 0;

dW = 0; % W变化量,初始化为0

dV = 0;

yo = zeros(3,1); xdata = []; % 记录实际值

zdata = []; % 记录期望值N = 2000; % 迭代次数

for k=1:N%% 设置目标值if k <500z = 0.5;elseif k < 1000z = 0.2;elseif k < 1500 z = 0.8;elsez = 0;end%% 正向传播% 隐含层输入yi = W * [z; x]; % 隐含层输出yo(1) = yi(1); % P yo(2) = yo(2) + yi(2); % Iyo(3) = yi(3) - yi3_1; % Dyo(find(yo>ymax)) = ymax; % 限幅yo(find(yo<-ymax)) = -ymax;% 输出层输出u = V' * yo; u(find(u>umax)) = umax;u(find(u<-umax)) = -umax;% 受控对象输出x = u^2 + 0.1*x_1; x(find(x>xmax)) = xmax; x(find(x<-xmax)) = -xmax;% 损失函数J = 1/2 * (z-x)^2; %% 反向传播% 计算J对V的偏导数 pJ_pVpJ_px = -(z-x);temp = u - u_1; % u(k)-u(k-1)if temp >= 0 && temp < 1e-20 % 除数限幅在(-∞,-1e-20]∪[1e-20,+∞)temp = 1e-20; % 防止除数为0elseif temp < 0 && temp > -1e-20temp = -1e-20;endpx_pu = sign((x - x_1) / temp); % 使用sign,限幅在{-1,0,1}pJ_pV = pJ_px * px_pu * yo; % 误差对V的偏导,yo为3x1向量% 计算J对W的偏导数 pJ_pWpJ_pw11 = pJ_px * px_pu * V(1) * 1 * z;pJ_pw12 = pJ_px * px_pu * V(1) * 1 * x;temp = yi(2) - yi2_1; % yi(k) - yi(k-1)if temp >= 0 && temp < 1e-20 % 除数限幅在(-∞,-1e-20]∪[1e-20,+∞)temp = 1e-20; % 防止除数为0elseif temp < 0 && temp > -1e-20temp = -1e-20;endpJ_pw21 = pJ_px * px_pu * V(2) * (sign(yi(2)/temp)) * z;pJ_pw22 = pJ_px * px_pu * V(2) * (sign(yi(2)/temp)) * x;temp = yo(3) ; % yo(k)if temp >= 0 && temp < 1e-20 % 除数限幅在(-∞,-1e-20]∪[1e-20,+∞)temp = 1e-20; % 防止除数为0elseif temp < 0 && temp > -1e-20temp = -1e-20;endpJ_pw31 = pJ_px * px_pu * V(3) * (sign((yo(3) - yo3_1)/temp)) * z;pJ_pw32 = pJ_px * px_pu * V(3) * (sign((yo(3) - yo3_1)/temp)) * x;pJ_pW = [pJ_pw11, pJ_pw12; pJ_pw21, pJ_pw22; pJ_pw31, pJ_pw32]; % 误差对W的偏导%% 动量法权重更新dW = beta1 * dW + (1-beta1) * pJ_pW; % 动量法计算增量dV = beta2 * dV + (1-beta2) * pJ_pV;W = W - alpha1 * dW; % 权重更新V = V - alpha2 * dV;%% 其他数据更新u_2 = u_1;u_1 = u; % u(k-1)x_1 = x; % x(k-1)yi2_1 = yi(2); % yi2_1 为上一次 yi(2)yo3_1 = yo(3); % yo3_1 为上一次 yo(3)xdata = [xdata; x]; % 记录数据方便绘图zdata = [zdata; z];

end%% 可视化

plot(1:N, zdata, 'LineWidth',1.5);hold on; % 目标值

plot(1:N, xdata, '.'); hold off % 实际值

legend('目标值','实际值') % 标注

title('PID神经网络'); % 标题

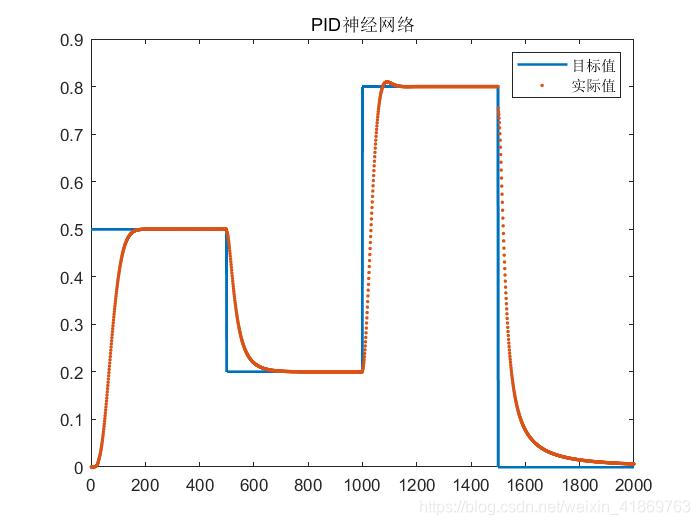

运行结果如下

可见,跟踪效果很不错。不过,和所有神经网络一样,参数要小心谨慎,如果设置不合理,就达不到期望的效果。

四、总结与展望

和PID和神经网络相比,PID神经网络有什么美妙之处呢?其实,它结合了两者的优势,PID使用了反馈控制,好的PID参数使得实际值快速稳定地接近目标值,但是有时候很难调节出一组好的PID参数。神经网络的优势是,通过误差反馈传播调整权值,这个过程是自动完成的。PID参数已经通过权值的方式隐藏在网络结构中,使用反向传播时该参数自动得到优化。

其实,PID神经网络大显身手的地方是多输入多输出的耦合系统的控制,下一次,就用来做一个无人机姿态控制器。

— 完 —

这篇关于PID神经网络原理与MATLAB实现(SISO)的文章就介绍到这儿,希望我们推荐的文章对编程师们有所帮助!